This report is written by Tiger Research and analyzes Subsquid's decentralized data infrastructure, which aims to bridge the gap between blockchain data transparency and accessibility.

Key Points Summary

- Subsquid (hereinafter referred to as SQD) simplifies blockchain data access through decentralized infrastructure. It supports over 200 blockchains and distributes data across multiple nodes.

- The SQD network adopts a modular structure, allowing developers to freely configure data processing and storage methods. This enables users to efficiently utilize data in a multi-chain environment through a unified structure.

- Subsquid aims to become the data backbone of Web3, similar to the standard set by Snowflake with "one platform, multiple workloads." Through its recent acquisition by Rezolve AI, it is expanding its business into AI and payment sectors. SQD is expected to become the core infrastructure connecting Web3 and the agent economy.

1. Is Blockchain Data Really Open to Everyone?

One of the defining characteristics of blockchain technology is that all its data is open to everyone. Traditional industries store data in closed databases that are inaccessible externally. Blockchain operates differently. All records are transparently published on the chain.

However, data transparency does not guarantee accessibility or ease of use. Blockchains are optimized to securely execute transactions and achieve network consensus. They are not designed as infrastructure for data analysis. The functions for verifying and storing data have advanced, but the infrastructure for efficiently querying and utilizing that data remains insufficient. The methods for querying on-chain data have changed little over the past ten years.

Source: Tiger Research

Consider an analogy. A town called "Tiger Town" has a huge river named "Ethereum." This river is a public good. Anyone can draw water from it. However, drawing water is difficult and inefficient. Everyone must bring a bucket to the riverbank to draw water directly. To use it as drinking water, they must go through a purification process of boiling or filtering.

The current blockchain development environment operates similarly. Abundant data is readily available, but the infrastructure to utilize it is lacking. For example, suppose a developer wants to build a dApp using trading data from the decentralized exchange Uniswap. The developer must request data through Ethereum's RPC nodes, process it, and store it. However, RPC nodes have limitations for large-scale data analysis or executing complex queries. Because the ecosystem now spans many blockchains, the same work must be repeated for each chain, complicating matters further.
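To make the RPC route concrete, the sketch below builds the requests a developer might send to page through a contract's event logs with the standard `eth_getLogs` JSON-RPC method. The contract address, block window, and page size are placeholders chosen for illustration, and nothing is sent over the network. It only shows why even a small block window turns into several requests whose results still have to be decoded and stored.

```python
import json

def block_ranges(start, end, step):
    """Yield inclusive (from_block, to_block) pairs covering start..end."""
    current = start
    while current <= end:
        yield current, min(current + step - 1, end)
        current += step

def build_get_logs_request(request_id, address, from_block, to_block):
    """Build one JSON-RPC 2.0 payload for the standard eth_getLogs method."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "eth_getLogs",
        "params": [{
            "address": address,
            "fromBlock": hex(from_block),  # block numbers are hex-encoded
            "toBlock": hex(to_block),
        }],
    }

# Placeholder contract address and block window, for illustration only.
POOL_ADDRESS = "0x" + "00" * 20
requests_to_send = [
    build_get_logs_request(i, POOL_ADDRESS, lo, hi)
    for i, (lo, hi) in enumerate(block_ranges(18_000_000, 18_004_999, 2_000))
]
print(len(requests_to_send), "requests needed for 5,000 blocks")
print(json.dumps(requests_to_send[0], indent=2))
```

Each response would still need decoding, deduplication, and storage, and the whole loop would be repeated per contract and per chain.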

Developers can use centralized services like Alchemy or Infura to address these limitations. However, this approach undermines the core value of decentralization in blockchain technology. Even if smart contracts are decentralized, centralized data access introduces risks of censorship and single points of failure. The blockchain ecosystem needs fundamental innovation in data access methods to achieve true accessibility.

2. Subsquid: A New Paradigm for Blockchain Data Infrastructure

Source: SQD

Subsquid (hereinafter referred to as SQD) is a decentralized data infrastructure project aimed at addressing the complexity and inefficiency of blockchain data access. SQD's goal is to make it easy for anyone to utilize blockchain data.

Source: Tiger Research

Return to the earlier analogy. In the past, everyone had to bring a bucket to the riverbank to draw water directly. Now, distributed water purification plants draw water from the river and purify it. The townspeople no longer need to go to the riverbank. They can access clean water whenever they need it. The SQD team provides this infrastructure through the "SQD Network".

The SQD network operates as a distributed query engine and data lake. It currently supports processing data from over 200 blockchain networks. Since the mainnet launch in June 2024, its scale has grown to process hundreds of millions of queries monthly. This growth stems from three core features. These features elevate SQD beyond a simple data indexing platform and showcase the evolution of blockchain data infrastructure.

2.1. Decentralized Architecture for High Availability

A significant portion of existing blockchain data infrastructure relies on centralized providers like Alchemy. This approach has advantages in initial accessibility and management efficiency. However, it limits users to only the chains supported by the provider and incurs high costs as usage increases. It is also susceptible to single points of failure. This centralized structure conflicts with the core value of decentralization in blockchain.

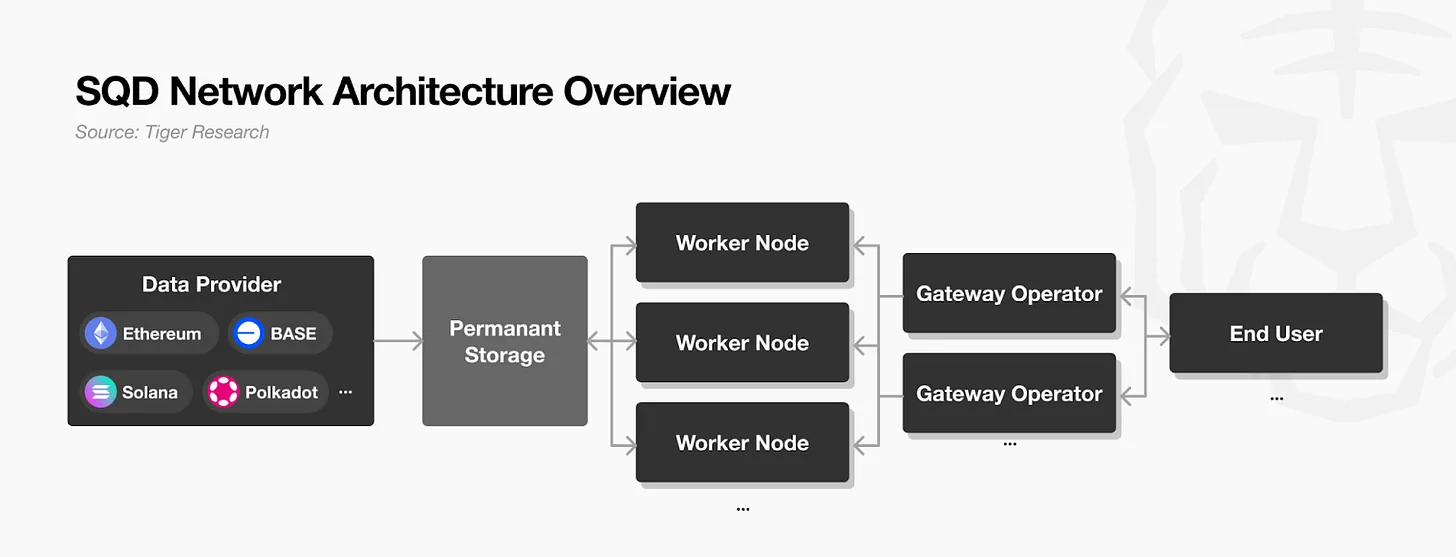

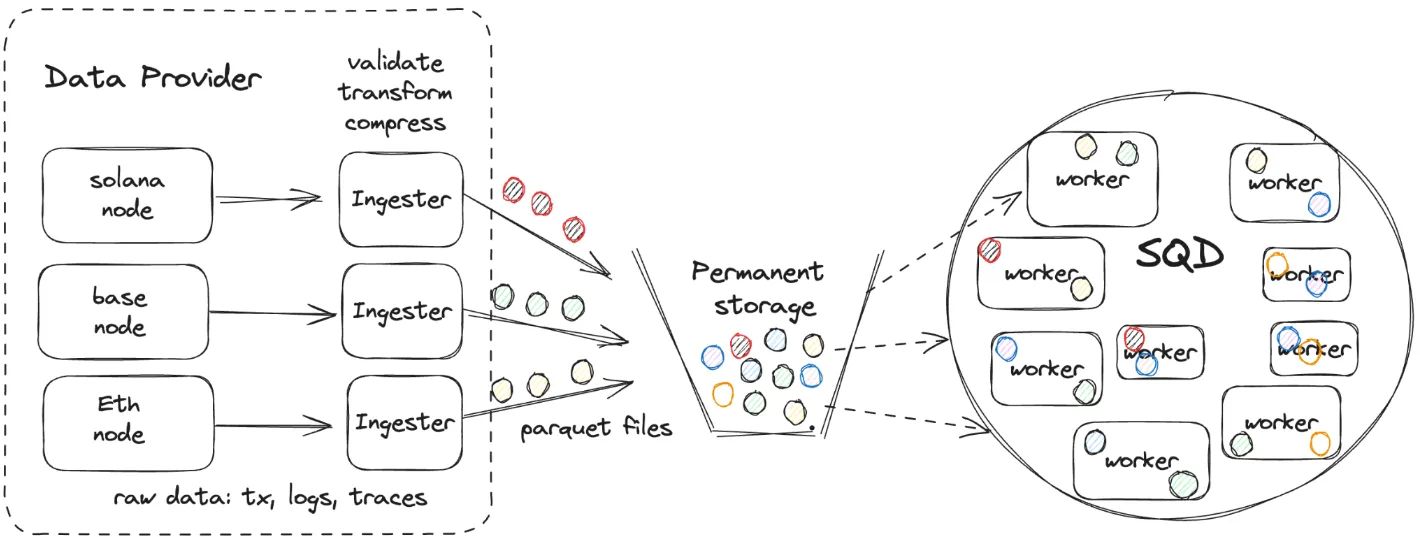

The SQD network addresses these limitations through a decentralized architecture. Data providers collect raw data from multiple blockchains, such as Ethereum and Solana. They chunk the data, compress it, and upload it with metadata to the network. Worker nodes store these chunks in a distributed manner and respond quickly to the query requests they receive. Each worker node acts like a mini API, providing its stored data. The entire network operates like thousands of distributed API servers. Gateway operators serve as the interface between end users and the network. They receive user queries and forward them to the appropriate worker nodes for processing.

Source: SQD

Anyone can participate as a worker node or gateway operator. This allows the network's capacity and processing performance to scale horizontally. Data is redundantly stored across multiple worker nodes. Even if some nodes fail, overall data access remains unaffected. This ensures high availability and resilience.
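The division of roles described above can be sketched in a few lines. This is a toy model, not SQD's implementation: the chunk size, the round-robin placement rule, the replica count, and all function names are assumptions made for illustration. It shows a provider chunking and compressing blocks with metadata, workers holding redundant copies, and a gateway routing a query to any live worker that holds the requested block.

```python
import json
import zlib

def make_chunks(blocks, chunk_size):
    """Data provider step: group blocks, compress them, attach metadata."""
    chunks = []
    for i in range(0, len(blocks), chunk_size):
        group = blocks[i:i + chunk_size]
        payload = zlib.compress(json.dumps(group).encode("utf-8"))
        meta = {"first_block": group[0]["number"], "last_block": group[-1]["number"]}
        chunks.append({"meta": meta, "payload": payload})
    return chunks

def assign_to_workers(chunks, worker_ids, replicas):
    """Place each chunk on `replicas` workers (round-robin, an assumed rule)."""
    placement = {w: [] for w in worker_ids}
    for index, chunk in enumerate(chunks):
        for r in range(replicas):
            worker = worker_ids[(index + r) % len(worker_ids)]
            placement[worker].append(chunk)
    return placement

def gateway_query(placement, block_number, offline=()):
    """Gateway step: find a live worker holding the block and read it."""
    for worker, chunks in placement.items():
        if worker in offline:
            continue
        for chunk in chunks:
            meta = chunk["meta"]
            if meta["first_block"] <= block_number <= meta["last_block"]:
                blocks = json.loads(zlib.decompress(chunk["payload"]))
                return worker, next(b for b in blocks if b["number"] == block_number)
    raise LookupError(f"block {block_number} is not available")

# Invented sample blocks standing in for raw chain data.
blocks = [{"number": n, "tx_count": n % 7} for n in range(100, 130)]
chunks = make_chunks(blocks, chunk_size=10)
placement = assign_to_workers(chunks, ["w1", "w2", "w3"], replicas=2)
print(gateway_query(placement, 115))                  # served by one replica
print(gateway_query(placement, 115, offline={"w2"}))  # another replica takes over
```

With two replicas per chunk, taking one worker offline leaves every block reachable, which is the availability property the text describes.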

In the initial onboarding phase, data providers are currently managed by the SQD team. This strategy ensures the initial data quality and stability. As the network matures, external providers will be able to participate through token governance. This will fully decentralize the data procurement phase.

2.2. Token Economics Ensuring Network Sustainability

For a distributed network to operate normally, participants need incentives to act voluntarily. SQD addresses this issue through an economic incentive structure centered around the native token $SQD. Each participant stakes or delegates tokens based on their roles and responsibilities. This collectively builds the network's stability and reliability.

Worker nodes are the core operators managing blockchain data. To participate, they must stake 100,000 $SQD as collateral against malicious behavior or the provision of incorrect data. If such issues arise, the network confiscates the deposit. Nodes that continuously provide stable and accurate data earn $SQD token rewards. This naturally incentivizes responsible operation.

Gateway operators must lock $SQD tokens to process user requests. The amount of locked tokens determines their bandwidth, i.e., the number of requests they can handle. Longer lock-up periods allow them to process more requests.

Token holders can indirectly participate in the network without running nodes themselves. They can delegate their stake to trusted worker nodes. Nodes that receive more delegations gain the ability to process more queries and earn more rewards. Delegators share a portion of these rewards. Currently, there are no minimum delegation requirements or lock-up period restrictions. This creates a permissionless curation system where the community can choose nodes in real-time. The entire community participates in network quality management through this structure.
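The incentive structure can be illustrated with a small accounting model. Only the 100,000 $SQD worker bond comes from the text above. The reward amount, the share passed on to delegators, and the pro-rata split are hypothetical values and rules chosen for the example, not protocol parameters.

```python
from dataclasses import dataclass, field

WORKER_BOND = 100_000  # $SQD bond per worker node, the figure quoted above

@dataclass
class Worker:
    name: str
    bond: int = 0
    delegations: dict = field(default_factory=dict)

    def register(self, amount):
        """Accept a worker only if it posts at least the required bond."""
        if amount < WORKER_BOND:
            raise ValueError("bond below the required stake")
        self.bond = amount

    def delegate(self, holder, amount):
        """Record a token holder's delegation to this worker."""
        self.delegations[holder] = self.delegations.get(holder, 0) + amount

    def slash(self):
        """Confiscate the bond after misbehavior (heavily simplified)."""
        lost, self.bond = self.bond, 0
        return lost

def split_reward(worker, reward, delegator_share):
    """Split a reward between the operator and its delegators, pro rata.

    `delegator_share` is a made-up parameter, not a protocol constant.
    """
    total = sum(worker.delegations.values())
    if total == 0:
        return {worker.name: reward}
    pool = reward * delegator_share
    payouts = {worker.name: reward - pool}
    for holder, amount in worker.delegations.items():
        payouts[holder] = pool * amount / total
    return payouts

w = Worker("worker-1")
w.register(100_000)
w.delegate("alice", 30_000)
w.delegate("bob", 10_000)
print(split_reward(w, reward=1_000, delegator_share=0.5))
```

In this toy model a delegator's payout scales with the size of the delegation, which is what lets token holders steer rewards toward the workers they trust.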

2.3. Modular Structure for Flexibility

Another notable feature of the SQD network is its modular structure. Existing indexing solutions adopt a monolithic structure, handling all aspects such as data collection, processing, storage, and querying within a single system. This simplifies initial setup but limits developers' freedom to choose data processing methods or storage locations.

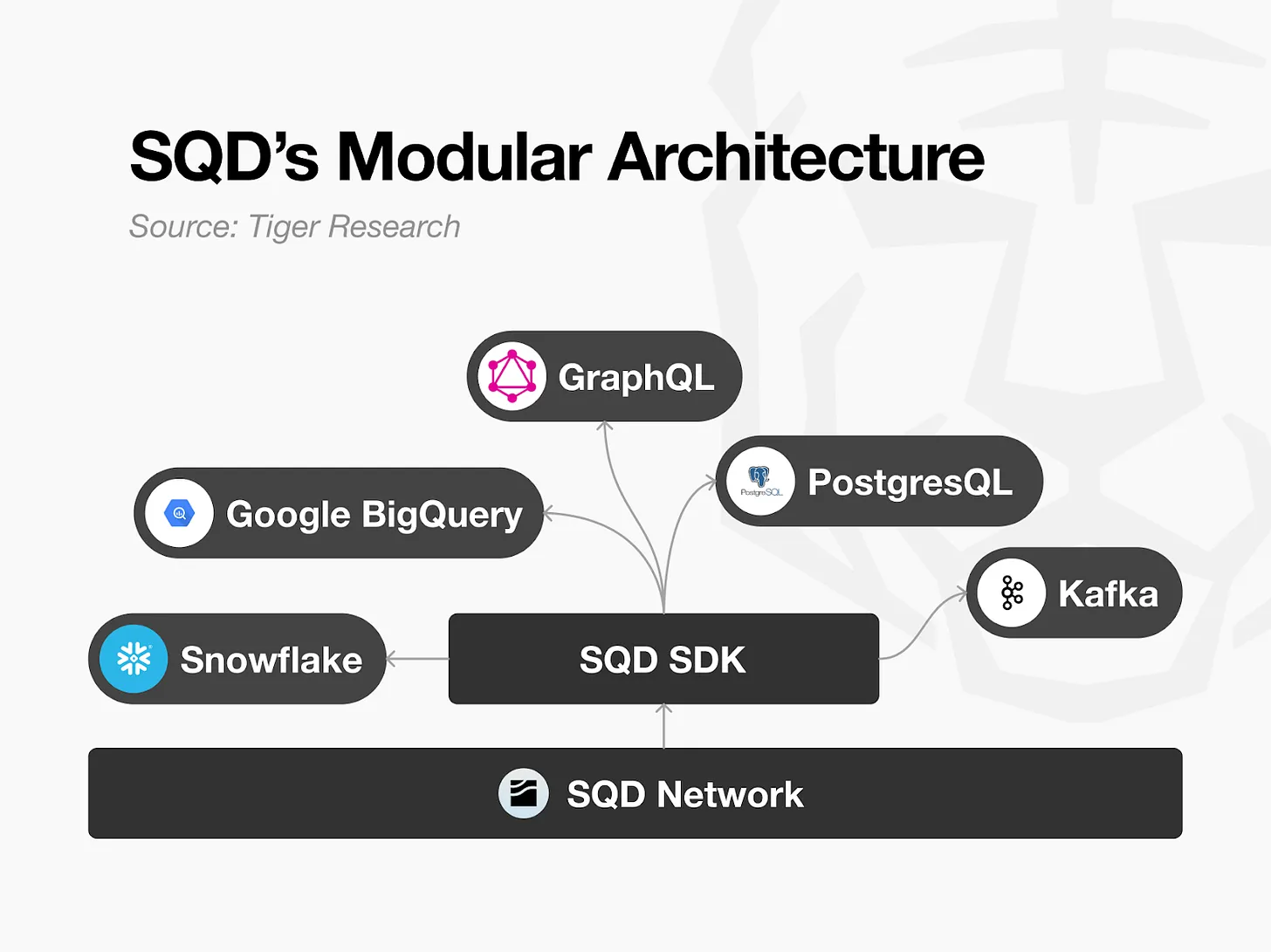

SQD completely separates the data access layer from the processing layer. The SQD network only handles the E (Extract) part of the ETL (Extract-Transform-Load) process. It acts solely as a "data feed," quickly and reliably extracting raw data from the blockchain. Developers can freely choose how to transform and store the data using the SQD SDK.

This structure provides practical flexibility. Developers can store data in PostgreSQL and serve it via GraphQL API. They can export it as CSV or Parquet files. They can load it directly into cloud data warehouses like Google BigQuery. Future plans include supporting large-scale data analysis environments through Snowflake and enabling real-time analysis and monitoring platforms by streaming data directly via Kafka integration without separate storage.
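As a rough illustration of this split, the sketch below treats the "Extract" output as a ready-made list of raw records and shows the transform and load steps that remain in the developer's hands. It does not use the SQD SDK. The record fields and sample values are invented, and CSV is used as the target only because it is one of the formats mentioned above.

```python
import csv
import io

# Stand-in for the "Extract" output: raw transfer records as a data feed
# might deliver them. Field names and values are invented for illustration.
RAW_TRANSFERS = [
    {"block": 100, "from": "0xaaa", "to": "0xbbb", "value": "0xde0b6b3a7640000"},
    {"block": 101, "from": "0xbbb", "to": "0xccc", "value": "0x6f05b59d3b20000"},
    {"block": 101, "from": "0xaaa", "to": "0xccc", "value": "0x0"},
]

def transform(records, decimals=18):
    """Transform: decode hex amounts, scale by decimals, drop zero transfers."""
    for rec in records:
        raw = int(rec["value"], 16)
        if raw == 0:
            continue
        yield {"block": rec["block"], "sender": rec["from"],
               "recipient": rec["to"], "amount": raw / 10 ** decimals}

def load_csv(rows, stream):
    """Load: write the transformed rows to any file-like target as CSV."""
    writer = csv.DictWriter(stream, fieldnames=["block", "sender", "recipient", "amount"])
    writer.writeheader()
    count = 0
    for row in rows:
        writer.writerow(row)
        count += 1
    return count

buffer = io.StringIO()
written = load_csv(transform(RAW_TRANSFERS), buffer)
print(written, "rows written")
print(buffer.getvalue())
```

Swapping `load_csv` for a function that writes to PostgreSQL or a warehouse would leave the extract and transform steps unchanged, which is the point of the modular design.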

SQD co-founder Dmitry Zhelezov likens this to "providing Lego blocks." SQD does not provide finished products but delivers the highest-performing, most reliable raw materials to developers. Developers can combine these materials according to their needs to complete their data infrastructure. Both traditional enterprises and crypto projects can use familiar tools and languages to process blockchain data. They can flexibly build data pipelines optimized for their specific industries and use cases.

3. Subsquid's Next Steps: Towards Better Data Infrastructure

The SQD team has reduced the complexity and inefficiency of blockchain data access through the SQD network, laying the foundation for decentralized data infrastructure. However, as the scale and scope of blockchain data usage rapidly expand, simple accessibility is no longer sufficient. The ecosystem now requires faster processing speeds and more flexible utilization environments.

The SQD team is advancing the network structure to meet these demands. The team focuses on increasing data processing speeds and creating structures capable of handling data without relying on servers. To achieve this, SQD is developing in phases: 1) SQD Portal and 2) Light Squid.

3.1. SQD Portal: Decentralized Parallel Processing and Real-Time Data

In the existing SQD network, gateways act as intermediaries connecting end users and worker nodes. When a user requests a query, the gateway forwards it to the appropriate worker node and returns the response to the end user. This process is stable, but it handles queries sequentially, one at a time. As a result, large-scale queries take a considerable amount of time. Even with thousands of worker nodes available, the system fails to fully utilize their processing capabilities.

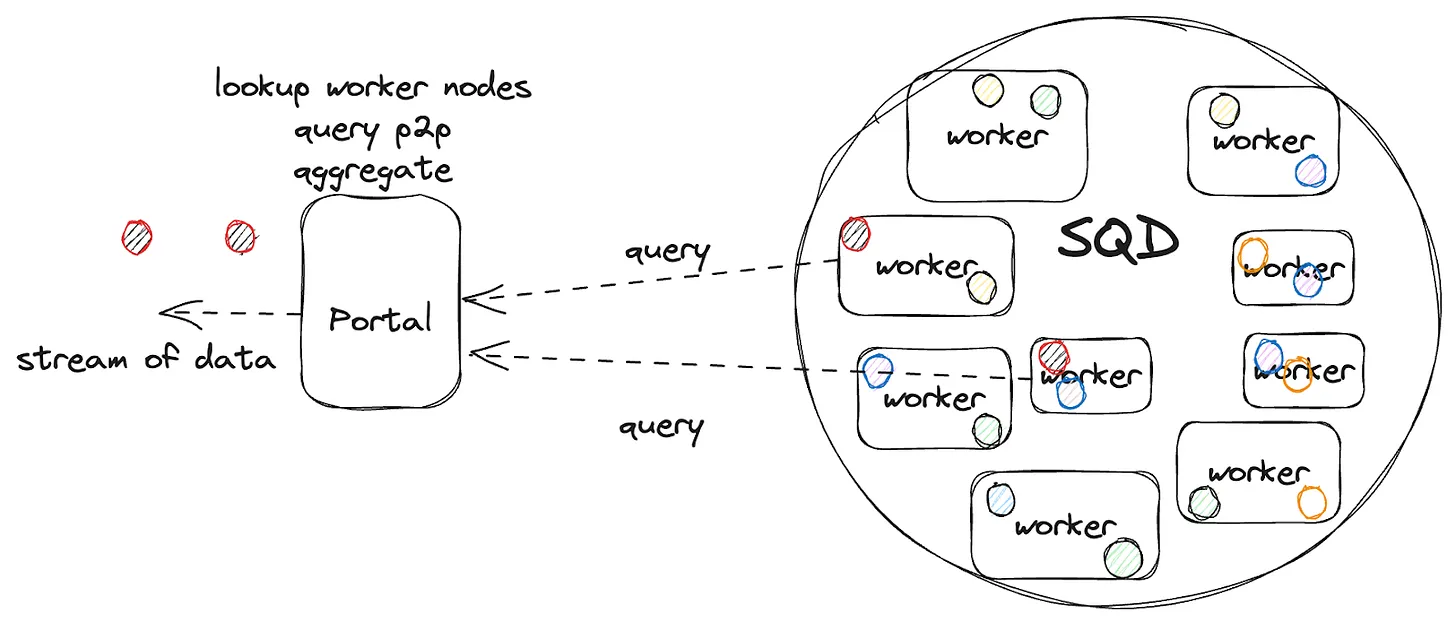

Source: SQD

The SQD team aims to address this issue through the SQD Portal. The core of the Portal is decentralized parallel processing. It splits a single query into multiple parts and simultaneously sends requests to upwards of 3,000 worker nodes. Each worker node processes the assigned part in parallel. The Portal then collects these responses in real-time and delivers them via streaming.
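The fan-out pattern can be sketched with a thread pool. This is a local simulation under stated assumptions: `worker_query` is a stand-in for a real worker node, the block range and parallelism are arbitrary, and the Portal's actual scheduling is not described in enough detail here to reproduce.

```python
from concurrent.futures import ThreadPoolExecutor

def split_range(first, last, parts):
    """Split an inclusive block range into at most `parts` contiguous sub-ranges."""
    total = last - first + 1
    size = -(-total // parts)  # ceiling division
    return [(lo, min(lo + size - 1, last)) for lo in range(first, last + 1, size)]

def worker_query(sub_range):
    """Stand-in for one worker node answering its slice of the query."""
    lo, hi = sub_range
    return [{"block": n, "tx_count": n % 5} for n in range(lo, hi + 1)]

def portal_stream(first, last, parallelism):
    """Fan sub-queries out in parallel and yield results in block order."""
    sub_ranges = split_range(first, last, parallelism)
    with ThreadPoolExecutor(max_workers=parallelism) as pool:
        # map() runs the calls concurrently but yields results in input order.
        for rows in pool.map(worker_query, sub_ranges):
            yield from rows

rows = list(portal_stream(1_000, 1_099, parallelism=8))
print(len(rows), rows[0]["block"], rows[-1]["block"])
```

Because results are yielded as each slice completes in order, a consumer can start processing early blocks while later slices are still being fetched.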

The Portal pre-fetches data into a buffer. This ensures uninterrupted delivery even in the event of network delays or temporary failures. Just as YouTube buffers videos for seamless playback, users receive data without waiting. The team has also rewritten the previously Python-based query engine in Rust, significantly improving parallel processing performance. Overall processing speed is now several times higher than before.
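The buffering idea is a standard producer-consumer pattern, sketched below with a bounded queue. The buffer size and the simulated delays are arbitrary, and the code is a generic illustration of pre-fetching rather than the Portal's own mechanism.

```python
import queue
import threading
import time

_DONE = object()  # sentinel marking the end of the stream

def prefetching(source, buffer_size):
    """Wrap an iterable so a background thread keeps a bounded buffer filled."""
    buf = queue.Queue(maxsize=buffer_size)

    def fill():
        try:
            for item in source:
                buf.put(item)   # blocks while the buffer is full
        finally:
            buf.put(_DONE)      # always signal the end, even on errors

    threading.Thread(target=fill, daemon=True).start()
    while True:
        item = buf.get()        # blocks only if the buffer has run dry
        if item is _DONE:
            return
        yield item

def slow_upstream(n):
    """Stand-in for a network source with uneven delivery times."""
    for i in range(n):
        time.sleep(0.01 if i % 3 else 0.03)
        yield i

received = list(prefetching(slow_upstream(9), buffer_size=4))
print(received)
```

While the consumer is busy with one item, the background thread keeps fetching ahead, so short upstream stalls are absorbed by whatever is already buffered.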

The Portal further addresses the real-time data issue. No matter how fast data processing becomes, worker nodes only retain confirmed historical blocks. They cannot retrieve the latest transaction or block information that has just been generated. Users previously had to rely on external RPC nodes to obtain this information. The Portal solves this problem with a real-time distributed stream called "Hotblocks." Hotblocks collect newly generated unconfirmed blocks in real-time from blockchain RPC nodes or dedicated streaming services and store them internally within the Portal. The Portal merges confirmed historical data from worker nodes with the latest block data from Hotblocks. Users can receive data from the past to the present in a single request without needing a separate RPC connection.
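The merge of historical and fresh data can be sketched as follows. The block records and hashes are invented, and the rule that a confirmed block takes precedence over an unconfirmed one at the same height is an assumption for this example. Handling of chain reorganizations is left out entirely.

```python
def merged_stream(finalized, hot, from_block):
    """Yield blocks from `from_block` onward: confirmed history, then hot blocks.

    Both inputs are lists of dicts sorted by "number". If a height appears in
    both, the confirmed copy wins (an assumed rule for this sketch).
    """
    last_seen = from_block - 1
    for block in finalized:
        if block["number"] > last_seen:
            yield {**block, "finalized": True}
            last_seen = block["number"]
    for block in hot:
        if block["number"] > last_seen:
            yield {**block, "finalized": False}
            last_seen = block["number"]

# Invented sample data: worker nodes hold blocks 100-105 as confirmed history,
# while the hot-block store holds 104-108 as unconfirmed blocks.
history = [{"number": n, "hash": f"0xf{n}"} for n in range(100, 106)]
hotblocks = [{"number": n, "hash": f"0xh{n}"} for n in range(104, 109)]

for block in merged_stream(history, hotblocks, from_block=103):
    status = "finalized" if block["finalized"] else "unconfirmed"
    print(block["number"], block["hash"], status)
```

The caller issues one request starting at block 103 and receives a gap-free sequence up to the newest block, with a flag that tells confirmed data apart from data that may still change.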

The SQD team plans to fully transition existing gateways to the Portal. The Portal is currently in a closed testing phase. In the future, anyone will be able to run Portal nodes directly and perform the gateway role within the network. Existing gateway operators will naturally transition to become Portal operators. (The SQD network architecture can be found here.)

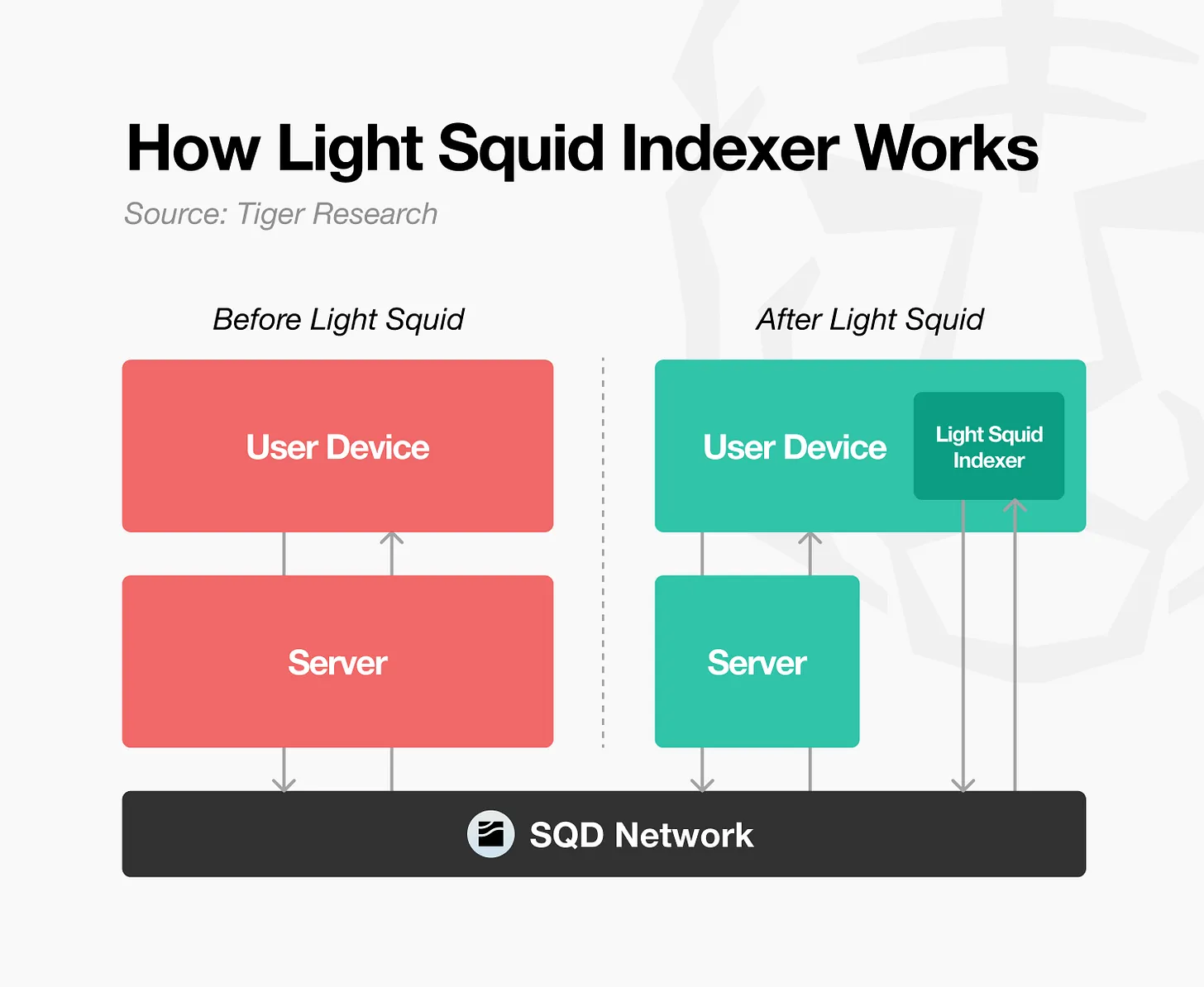

3.2. Light Squid: Indexing in Local Environments

The SQD network reliably provides data, but developers still face the limitations of operating independent servers. Even when retrieving data from worker nodes through the Portal, large database servers like PostgreSQL are needed to process and deliver it to users. This process incurs significant infrastructure building and maintenance costs. Data still relies on a single provider (the developer's server), which is far from a truly distributed structure.

Light Squid simplifies this intermediate step. The original structure operated like a wholesaler (the developer) running a large warehouse (server) to distribute data to consumers. Light Squid transforms this into a D2C (direct-to-consumer) approach, delivering data directly from the source (SQD network) to end users. Users receive the necessary data through the Portal and store it in their local environment. They can query directly in their browser or personal devices. Developers no longer need to maintain separate servers. Even if the user's network connection is interrupted, they can still view the locally stored data.

For example, an application displaying NFT trading history can now run directly in the user's browser without a central server. This is similar to how Instagram displays information feeds offline in Web2. It aims to provide a smooth user experience for dApps in local environments. However, Light Squid is designed as an option to achieve the same indexing environment locally. It does not completely replace server-centric structures. Data is still supplied through a distributed network. As utilization expands to the user level, the SQD ecosystem is expected to evolve into a more accessible form.
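The local-first pattern behind this can be sketched with an embedded database. SQLite stands in here for whatever storage a browser or device would provide, and the table layout and sample trades are invented. The sketch only shows the idea of syncing once and then answering queries from the local copy.

```python
import sqlite3

def open_local_store(path=":memory:"):
    """Create the on-device store; a browser app would use its own storage."""
    db = sqlite3.connect(path)
    db.execute("""CREATE TABLE IF NOT EXISTS nft_trades (
        tx_hash TEXT PRIMARY KEY, token_id INTEGER, price_eth REAL, block INTEGER)""")
    return db

def sync(db, fetched_rows):
    """Store rows fetched from the network; re-syncing the same rows is harmless."""
    db.executemany(
        "INSERT OR IGNORE INTO nft_trades VALUES (:tx_hash, :token_id, :price_eth, :block)",
        fetched_rows)
    db.commit()

def trade_history(db, token_id):
    """Answer a query from local data only, so it also works offline."""
    cur = db.execute(
        "SELECT block, price_eth FROM nft_trades WHERE token_id = ? ORDER BY block",
        (token_id,))
    return cur.fetchall()

# Invented sample rows standing in for data received through the Portal.
rows = [
    {"tx_hash": "0x01", "token_id": 7, "price_eth": 1.5, "block": 200},
    {"tx_hash": "0x02", "token_id": 9, "price_eth": 0.8, "block": 201},
    {"tx_hash": "0x03", "token_id": 7, "price_eth": 2.0, "block": 205},
]
db = open_local_store()
sync(db, rows)
sync(db, rows)  # a repeated sync does not duplicate trades
print(trade_history(db, 7))
```

Once the rows are stored, `trade_history` never touches the network, which mirrors how a locally indexed dApp could keep showing data during a connection drop.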

4. How Subsquid Works in Practice

The SQD network is merely an infrastructure for providing data, but its applications are limitless. Just as all IT-based industries begin with data, improvements in data infrastructure expand the possibilities for all services built upon it. SQD is already changing the way blockchain data is utilized across various fields and delivering concrete results.

4.1. DApp Developers: Unified Multi-Chain Data Management

The decentralized exchange PancakeSwap is a representative case study. In a multi-chain environment, exchanges must aggregate transaction volumes, liquidity pool data, and token pair information from each chain in real-time. In the past, developers had to connect RPC nodes for each chain, parse event logs, and align different data structures individually. This process would repeat every time a new chain was added. Each protocol upgrade increased the maintenance burden.

After adopting SQD, PancakeSwap can now manage data from multiple chains through a unified pipeline. SQD provides data from each chain in a standardized format. Now a single indexer can handle all chains simultaneously. Adding a new chain now only requires changing the configuration. Data processing logic is consistently managed from a central location. The development team has reduced the time spent on data infrastructure management. They can now focus more on improving core services.
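A configuration-driven indexer of this kind might look like the sketch below. It is not PancakeSwap's pipeline: the chain IDs are real, but the record fields, sample swaps, and function names are hypothetical. The point is that chain-specific details live in a table while the processing logic is written once.

```python
# Per-chain settings: the only part that changes when a chain is added.
# Chain IDs are real; field names and sample records are invented.
CHAINS = {
    "bsc":      {"chain_id": 56, "amount_field": "amountUSD"},
    "ethereum": {"chain_id": 1,  "amount_field": "amountUSD"},
}

def normalize(chain, raw_swap):
    """Map one chain-specific swap record onto a shared schema."""
    cfg = CHAINS[chain]
    return {
        "chain_id": cfg["chain_id"],
        "pair": raw_swap["pair"],
        "volume_usd": float(raw_swap[cfg["amount_field"]]),
    }

def total_volume_by_pair(feeds):
    """Shared processing logic: identical for every configured chain."""
    totals = {}
    for chain, swaps in feeds.items():
        for swap in swaps:
            row = normalize(chain, swap)
            totals[row["pair"]] = totals.get(row["pair"], 0.0) + row["volume_usd"]
    return totals

feeds = {
    "bsc": [{"pair": "CAKE/BNB", "amountUSD": "120.5"}],
    "ethereum": [{"pair": "CAKE/BNB", "amountUSD": "79.5"},
                 {"pair": "ETH/USDC", "amountUSD": "300"}],
}
print(total_volume_by_pair(feeds))

# Adding a chain is a configuration change; the functions above stay untouched.
CHAINS["arbitrum"] = {"chain_id": 42161, "amount_field": "usd"}
feeds["arbitrum"] = [{"pair": "ETH/USDC", "usd": "50"}]
print(total_volume_by_pair(feeds))
```

Here the third chain uses a different field name for the amount, and only its configuration entry has to account for that.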

4.2. Data Analysts: Flexible Data Processing and Integrated Analysis

On-chain analysis platforms like Dune and Artemis provide high accessibility and convenience by allowing quick and easy data queries using SQL. However, work on these platforms is limited to the chains and data structures they support. Additional processes are required when combining external data or executing complex transformations.

SQD complements this environment, enabling data analysts to handle data more freely. Users can directly extract the necessary blockchain data, transform it into the desired format, and load it into their own databases or warehouses. For example, analysts can retrieve trading data from a specific decentralized exchange, aggregate it by time period, combine it with existing financial data, and apply it to their own analysis models. SQD does not replace the convenience of existing platforms. It increases the freedom and scalability of data processing. Analysts can expand the depth and application range of on-chain data analysis through a broader data scope and customized processing methods.

4.3. AI Agents: Core Infrastructure for the Agent Economy

For AI agents to make autonomous decisions and execute trades, they need a reliable and transparent infrastructure. Blockchain provides a suitable foundation for autonomous agents. All transaction records are transparently public and difficult to tamper with. Cryptocurrency payments enable automatic execution.

However, AI agents currently struggle to access blockchain infrastructure directly. Each developer must build and integrate data sources individually. The network structures vary, hindering standardized access. Even centralized API services require multiple steps, including account registration, key issuance, and payment setup. These processes presuppose human intervention, which is unsuitable for autonomous environments.

The SQD network bridges this gap. Based on a permissionless architecture, agents automate data requests and payments using the $SQD token. They receive necessary information in real-time and process it independently. This establishes an operational foundation for autonomous AI that connects directly to the data network without human intervention.

Source: Rezolve.Ai

On October 9, 2025, Rezolve AI announced the acquisition of SQD, further clarifying this direction. Rezolve is a Nasdaq-listed AI-based business solutions provider. Through this acquisition, Rezolve is building the core infrastructure for the AI agent economy. Rezolve plans to combine the digital asset payment infrastructure of the previously acquired Smartpay with SQD's distributed data layer. This will create an integrated infrastructure that allows AI to process data, intelligence, and payments in a single workflow. Once Rezolve completes this integration, AI agents will analyze blockchain data in real-time and execute transactions independently. This marks a significant turning point for SQD as a data infrastructure for the AI agent economy.

4.4. Institutional Investors: Real-Time Data Infrastructure for the Institutional Market

With the expansion of real-world asset tokenization (RWA), institutional investors are actively participating on-chain. Institutions require data infrastructure that ensures accuracy and transparency to leverage on-chain data for trading, settlement, and risk management.

Source: OceanStream

SQD has launched OceanStream to meet this demand. OceanStream is a decentralized data lakehouse platform that streams data from over 200 blockchains in real-time. The platform is designed to provide institutional-grade data quality and stability. It combines sub-second latency streaming with over 3PB of indexed historical data to enhance the backtesting, market analysis, and risk assessment environments for financial institutions. This enables institutions to monitor more chains and asset classes in real-time at a lower cost. They can execute regulatory reporting and market monitoring within a unified integrated system.

OceanStream participated in a roundtable hosted by the U.S. Securities and Exchange Commission's crypto task force, discussing how the transparency and verifiability of on-chain data affect market stability and investor protection. This indicates that SQD is establishing itself as a data-driven structure that connects tokenized financial markets with institutional capital, rather than merely developing infrastructure.

5. SQD's Vision: Building the Data Pillar of Web3

The competitiveness of the Web3 industry depends on its ability to leverage data. However, due to different blockchain structures, data remains fragmented. The infrastructure to effectively address this issue is still in its early stages. SQD aims to bridge this gap by building a standardized data layer that processes all blockchain data within a single structure. In addition to on-chain data, SQD also plans to integrate off-chain data, including financial transactions, social media, and business operations, to create an analytical environment that spans both worlds.

This vision is similar to how Snowflake sets the standard for data integration in traditional industries with "one platform, multiple workloads." SQD aims to establish itself as the data pillar of Web3 by integrating blockchain data and connecting off-chain data sources.

However, SQD will take time to develop into a fully decentralized infrastructure. The project is currently in a bootstrapping phase, and the SQD team still plays a crucial role. There are limitations in terms of the scale of the developer community and the diversity of the ecosystem. Nevertheless, the growth demonstrated in just over a year since the mainnet launch, along with the strategic expansion through the acquisition by Rezolve AI, shows a clear direction. SQD is paving the way for blockchain data infrastructure and evolving into the foundational data layer supporting the entire Web3 ecosystem, from dApp development to institutional investment to the AI agent economy. Its potential is expected to grow significantly.
