If you want to understand #AI Agent, this book (really a paper) is a must-read. "Agent AI", co-authored by Fei-Fei Li, is the most enjoyable and forward-looking book I've read this year. The full text is not hard to follow, because it avoids heavy jargon and detailed algorithms, so it's worth reading for anyone. A link to the full text is provided at the end of the article.

I can say with confidence: AI Agents are the most worthwhile investment area in the next stage of artificial intelligence (whether in US stocks or in Web3), and they are the direction closest to what consumers actually experience. For the general public, this is the most directly accessible and scalable field.

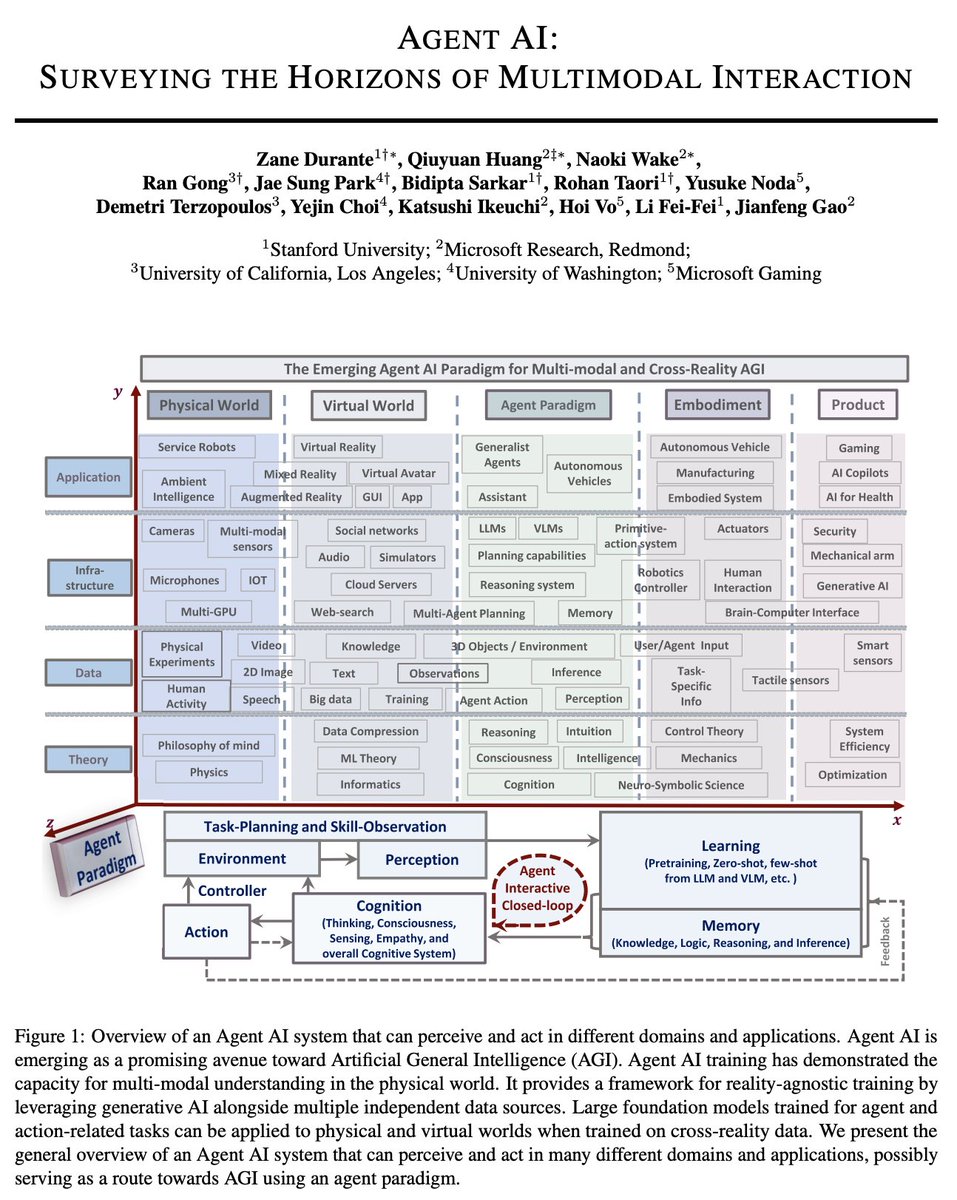

As the paper opens, it gives an overview of an Agent AI system that can perceive and act across different domains and applications. Agent AI is a promising path toward Artificial General Intelligence (AGI). Agent AI training has demonstrated the capacity for multimodal understanding of the physical world, and it provides a framework for reality-agnostic training by combining generative AI with multiple independent data sources. The authors propose an overall agent-based AI system capable of perceiving and acting in many different domains and applications, as a paradigm for AGI.

The article covers the current state of the technology, the application prospects, and the future directions of AI Agents in multimodal human-computer interaction (HCI). Several of the core technologies and new directions it highlights deserve careful thought and exploration. We should not let AI Agents stay limited to voice and visual interaction; their scope is much broader:

- Core Concepts and Significance of Multimodal HCI

Multimodal HCI enables natural, flexible, and efficient interaction between humans and computers by integrating multiple information modalities such as voice, text, images, and touch. The core goals of this technology are:

• To enhance the naturalness and immersion of interactions.

• To broaden the range of scenarios where human-computer interaction can be applied.

• To improve computers' ability to understand diverse forms of human input.

- Future Development Directions

The article systematically organizes five research areas:

1️⃣ Big Data Visualization Interaction

📢 Concept: Transforming complex data into easily understandable graphical representations, enhancing user experience through multiple sensory channels (visual, tactile, auditory, etc.).

🔎 Progress:

• Exploration of data visualization based on Virtual Reality (VR) and Augmented Reality (AR);

• Tactile feedback (such as force and vibration feedback) that helps users in medical and research settings better understand how data is distributed.

📝 Applications:

• Smart city monitoring: Real-time display of urban traffic data through dynamic heat maps.

• Medical data analysis: Exploring multidimensional data combined with tactile feedback.

2️⃣ Sound Field Perception-Based Interaction

📢 Concept: Utilizing microphone arrays and machine learning algorithms to analyze sound field changes in the environment, facilitating non-visual human-computer interaction.

🔎 Progress:

• Improvement in the accuracy of sound source localization technology;

• Robust voice interaction technology in noisy environments.

📝 Applications:

• Smart home: Voice-controlled devices that complete tasks without physical contact.

• Assistive technology: Providing sound-based interaction methods for visually impaired users.
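The article does not name a specific localization algorithm. One classic building block for microphone-array localization is time-difference-of-arrival (TDOA) estimation: find the lag that maximizes the cross-correlation between two microphones' signals, then convert that delay into an arrival angle with the far-field relation sin(theta) = c * tau / d. The sketch below is a minimal pure-Python illustration under assumed values (a two-microphone array, a 343 m/s speed of sound, a synthetic pulse); the function names are hypothetical, and practical systems usually rely on more robust variants such as GCC-PHAT or learned models.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at about 20 degrees C

def estimate_delay(ref, sig, max_lag):
    """Return the lag (in samples) that maximizes the cross-correlation
    sum(ref[i] * sig[i + lag]). A positive result means `sig` lags `ref`,
    i.e. the sound reached the reference microphone first."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, r in enumerate(ref):
            j = i + lag
            if 0 <= j < len(sig):
                score += r * sig[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

def direction_of_arrival(delay_samples, sample_rate, mic_spacing,
                         speed_of_sound=SPEED_OF_SOUND_M_S):
    """Far-field arrival angle in degrees from broadside (perpendicular
    to the line joining the two microphones): sin(theta) = c * tau / d."""
    tau = delay_samples / sample_rate       # delay in seconds
    s = speed_of_sound * tau / mic_spacing  # sin(theta)
    s = max(-1.0, min(1.0, s))              # clamp noise-induced overshoot
    return math.degrees(math.asin(s))

# Synthetic example: a short pulse that reaches microphone B
# 5 samples after microphone A.
mic_a = [0.0] * 64
mic_a[10:13] = [1.0, -0.5, 0.25]
mic_b = [0.0] * 5 + mic_a[:-5]

lag = estimate_delay(mic_a, mic_b, max_lag=10)
angle = direction_of_arrival(lag, sample_rate=48_000, mic_spacing=0.10)
print(lag, round(angle, 1))
```

With these assumed numbers, a 5-sample lag at 48 kHz between microphones 10 cm apart corresponds to roughly 21 degrees off broadside; the brute-force correlation loop is kept for readability, whereas real implementations compute it with FFTs.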

3️⃣ Mixed Reality Physical Interaction

📢 Concept: Merging virtual information with the physical world through mixed reality (MR) technology, allowing users to manipulate virtual environments using real-world objects.

🔎 Progress:

• Optimization of virtual object interaction based on physical touch;

• High-precision physical-virtual object mapping technology.

📝 Applications:

• Education and training: Immersive teaching through simulated real environments.

• Industrial design: Using virtual prototypes for product validation.

4️⃣ Wearable Interaction

📢 Concept: Achieving interaction through wearable devices such as smartwatches and health monitors, using gestures, touch, or skin-mounted electronics.

🔎 Progress:

• Increased sensitivity and durability of skin sensors;

• Multi-channel fusion algorithms enhancing interaction accuracy.

📝 Applications:

• Health monitoring: Real-time tracking of heart rate, sleep, and exercise status;

• Gaming and entertainment: Controlling virtual characters through wearable devices.
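The article mentions multi-channel fusion algorithms without specifying one. A common, simple baseline is late (decision-level) fusion: each sensing channel runs its own classifier, and the system combines their class probabilities with a weighted average. The sketch below assumes two hypothetical wearable channels ("imu" for motion, "emg" for muscle signals) and hand-picked weights; it illustrates the general idea and is not the method of any particular product or paper.

```python
def late_fusion(predictions, weights):
    """Weighted late (decision-level) fusion of per-channel class probabilities.

    predictions: {channel: {label: probability}} from independent classifiers
    weights:     {channel: non-negative weight}; channels with weight 0 or
                 no entry are ignored
    Returns (best_label, fused) where fused maps label -> weighted mean.
    A label missing from a channel's output counts as probability 0 there.
    """
    fused = {}
    total_weight = 0.0
    for channel, probs in predictions.items():
        w = weights.get(channel, 0.0)
        if w <= 0:
            continue
        total_weight += w
        for label, p in probs.items():
            fused[label] = fused.get(label, 0.0) + w * p
    if total_weight == 0:
        raise ValueError("at least one channel needs a positive weight")
    fused = {label: score / total_weight for label, score in fused.items()}
    best_label = max(fused, key=fused.get)
    return best_label, fused

# Hypothetical wearable channels: "imu" (motion) and "emg" (muscle signals).
# In this made-up setup the emg classifier is trusted twice as much.
predictions = {
    "imu": {"swipe": 0.6, "tap": 0.4},
    "emg": {"swipe": 0.3, "tap": 0.7},
}
weights = {"imu": 1.0, "emg": 2.0}
label, fused = late_fusion(predictions, weights)
print(label, fused)
```

Here the fused scores come out to about 0.4 for "swipe" and 0.6 for "tap", so "tap" wins even though the motion channel alone preferred "swipe". In practice the weights are usually tuned on validation data or replaced by a learned fusion model.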

5️⃣ Human-Computer Dialogue Interaction

📢 Concept: Researching technologies such as speech recognition, emotion recognition, and speech synthesis to enable computers to better understand and respond to user language input.

🔎 Progress:

• The rise of large language models (such as GPT) has greatly improved the naturalness of dialogue systems;

• Increased accuracy of speech emotion recognition technology.

📝 Applications:

• Customer service robots: Supporting multilingual voice interaction.

• Smart assistants: Personalized voice command responses.

So far, most AI Agent projects we see, especially in Web3, are still intelligent assistants built on human-computer dialogue: 24-hour auto-tweeting bots, personalized AI voice chat, and virtual-partner chat. Recently, however, we have seen projects that combine smart wearables with #Depin and #AI to innovate in health data, using rings (I won't name specific companies; you can look them up, and they are part of the #SOL ecosystem), smartwatches, pendants, and so on. These opportunities are more valuable and interesting than traditional standalone #AI public chains or applications, and investors will favor them. After all, we have invested in two companies that combine hardware, software, and AI, and we believe this is a promising direction!

- Areas Where Technology Companies Are Investing Heavily

• Expanding Interaction Methods: Exploring new interaction channels, such as smell and temperature perception, to add further dimensions to multimodal integration.

• Optimizing Multimodal Combinations: Designing efficient and flexible combinations of modalities so that different modes work together more naturally.

• Device Miniaturization: Developing lighter, lower-power devices for everyday use.

• Cross-Device Distributed Interaction: Improving interoperability between devices to achieve seamless multi-device interaction.

• Improving Algorithm Robustness: Enhancing the stability and real-time performance of multimodal perception and fusion algorithms, especially in open environments.

- Investment-Worthy Application Scenarios

• Medical Rehabilitation: Helping patients with rehabilitation training and psychological counseling through voice, image, and tactile feedback.

• Office and Education: Providing intelligent office assistants and personalized education platforms to improve efficiency and user experience.

• Military Simulation: Using mixed reality technology for combat simulation and tactical training.

• Entertainment and Gaming: Creating immersive gaming and entertainment experiences to enhance user interaction with virtual environments.

In summary: the paper Dr. Li co-authored uses the future application scenarios of AI Agents to lay out the core technologies of multimodal HCI, linking practical applications with future research directions, and it gives #AIAgent investors a clear sense of direction and investment logic. It is arguably the must-read AI book of 2024. It helped me better understand the key role multimodal human-computer interaction will play in future intelligent living, and revealed its immense potential in open environments and complex scenarios. Investing in the future is how you capture wealth! As always: position yourself in #AI, learn #AI, invest in #AI. Time is of the essence! 🧐

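Disclaimer: This article represents only the author's personal views and does not represent the position or views of this platform. It is provided for information sharing only and does not constitute investment advice to anyone. Any dispute between users and the author is unrelated to this platform. If any article or image published on this page involves infringement, please send proof of rights and proof of identity by email to support@aicoin.com, and the platform's staff will verify it.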