Author: Shayon Sengupta, Multicoin Capital

Translation: JIN, Techub News

On June 6, 2024, Binance announced that Binance Launchpool would feature io.net's IO token. Starting at 8:00 am Hong Kong time on June 7, users can deposit BNB and FDUSD into the IO pools on the Launchpool website to earn IO rewards. IO farming will last four days, and the website is expected to be updated about five hours before the farming period opens.

Binance will also list io.net's IO token at 8:00 pm Hong Kong time on June 11, opening IO/BTC, IO/USDT, IO/BNB, IO/FDUSD, and IO/TRY trading pairs.

IO Token Unlock and Rewards

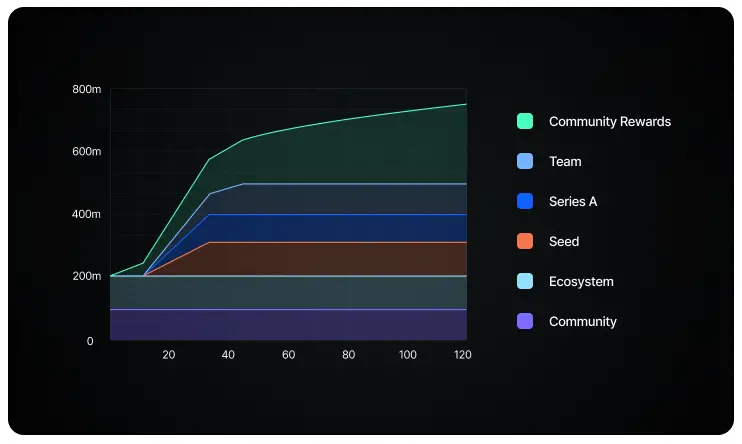

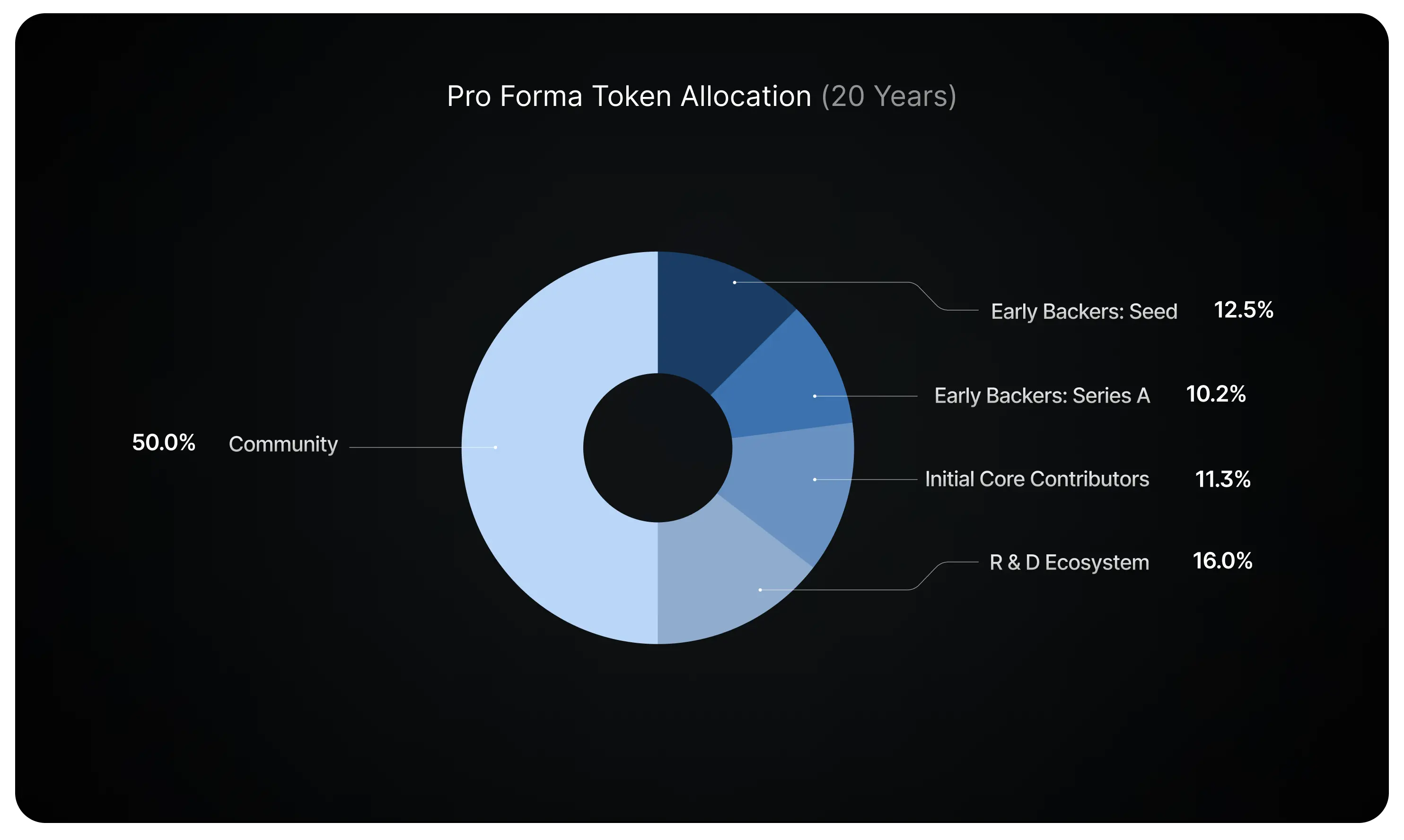

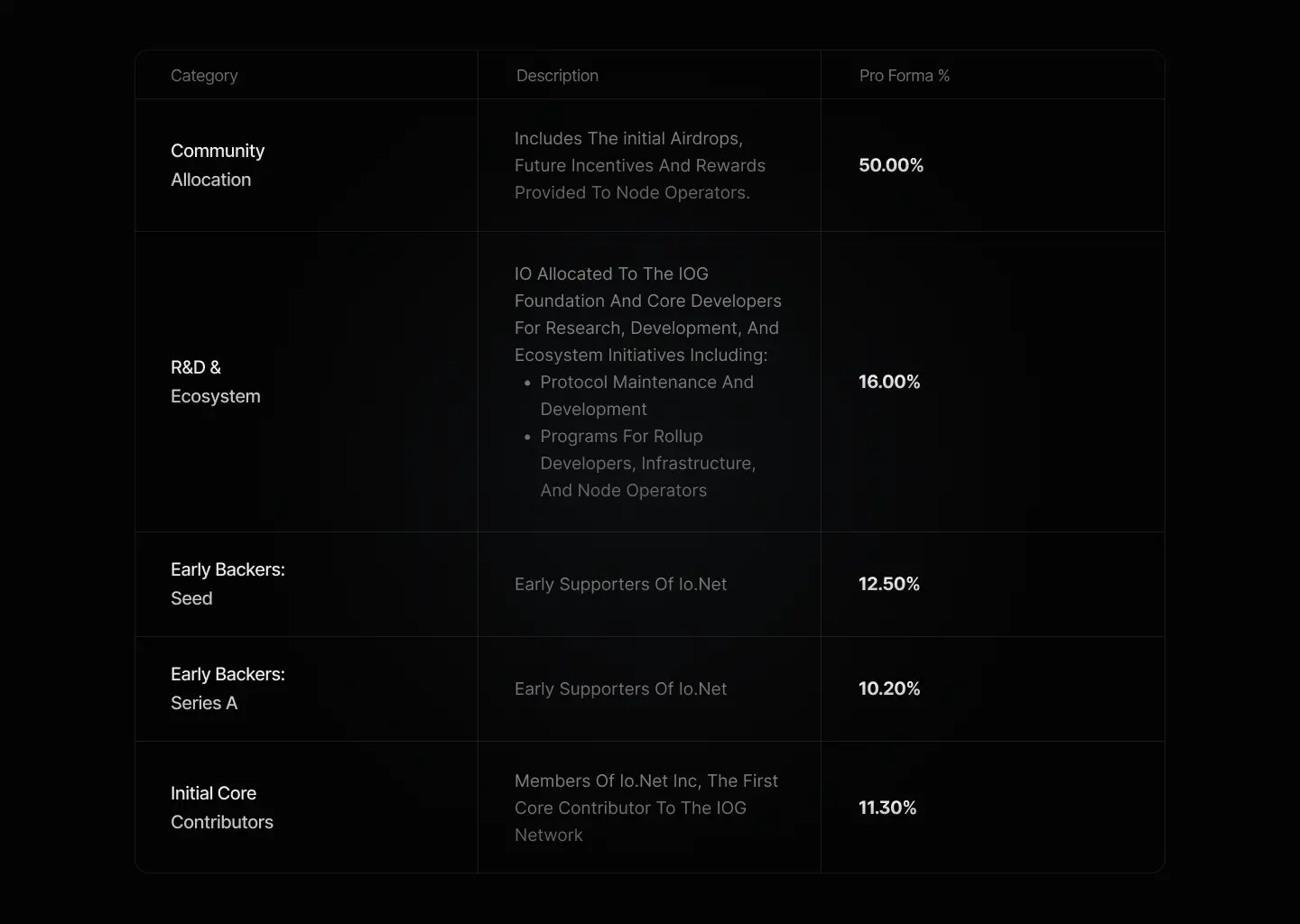

According to io.net's official documentation, the total supply of IO is capped at 800 million tokens. 500 million IO are released at launch, and the remaining 300 million are issued gradually over the next 20 years until the 800 million cap is reached. The unlock schedule and rewards for the initial 500 million tokens are shown in the following figure and are divided into five categories: seed investors, Series A investors, core contributors, research and ecosystem, and community.


Estimated Allocation of IO Tokens

Seed Investors: 12.5%

Series A Investors: 10.2%

Core Contributors: 11.3%

Research and Ecosystem: 16%

Community: 50%

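The five shares above should add up to exactly 100%, and turning them into token counts is simple arithmetic. The sketch below is a minimal illustration, not something taken from io.net's documentation. It checks the total and splits whatever supply the caller passes in. It works in basis points (1 bp = 0.01%) so the arithmetic stays exact in integers. The `ALLOCATION_BPS` table and the `allocate` helper are hypothetical names chosen for this example. The supply in the usage line is a placeholder, because the list does not say which supply figure the percentages apply to.

```python
from __future__ import annotations

# Shares from the allocation list, in basis points (1 bp = 0.01%).
# Labels are illustrative and follow the five categories named in the article.
ALLOCATION_BPS: dict[str, int] = {
    "Seed investors": 1250,          # 12.5%
    "Series A investors": 1020,      # 10.2%
    "Core contributors": 1130,       # 11.3%
    "Research and ecosystem": 1600,  # 16%
    "Community": 5000,               # 50%
}


def allocate(supply: int, shares_bps: dict[str, int]) -> dict[str, int]:
    """Split an integer token supply by basis-point shares (hypothetical helper).

    Raises ValueError unless the shares add up to exactly 10,000 bp (100%).
    Floor division means a supply not divisible by 10,000 can leave a few
    tokens unassigned; round supplies split exactly.
    """
    total_bps = sum(shares_bps.values())
    if total_bps != 10_000:
        raise ValueError(f"shares sum to {total_bps / 100}%, not 100%")
    return {name: supply * bps // 10_000 for name, bps in shares_bps.items()}


if __name__ == "__main__":
    # Placeholder supply of 1,000,000 tokens, chosen only for readable output.
    for name, amount in allocate(1_000_000, ALLOCATION_BPS).items():
        print(f"{name:<24}{amount:>10,}")
```

With the placeholder supply, the community share comes to 500,000 tokens, and the five amounts sum back to exactly 1,000,000.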

The following introduction to io.net was written by Multicoin Capital, a participant in io.net's earlier $30 million Series A round:

We are pleased to announce our investment in io.net, a distributed network that rents out computing power for AI. We led the seed round and also participated in the Series A. io.net has raised a total of $30 million from investors including Multicoin, Hack VC, 6th Man Ventures, Modular Capital, and a consortium of angel investors. Its aim is to build an on-demand, always-available marketplace for AI compute.

I first met Ahmad Shadid, io.net's founder, at the Solana Hacker House in Austin in April 2023. I was immediately drawn to his distinctive insights into decentralized infrastructure for machine learning (ML) compute.

Since then, the io.net team has shown strong execution. Today the network aggregates tens of thousands of distributed GPUs and has provided more than 57,000 hours of compute to AI companies. We are excited to work with the team and to help drive the AI renaissance of the coming decade.

I. Global Computing Power Shortage

Demand for AI compute is growing at an astonishing rate, and much of it currently goes unmet. In 2023, data centers serving AI workloads generated more than $100 billion in revenue. Yet even under the most conservative scenarios, demand for AI compute still exceeds chip supply.

In an environment of high interest rates and tight cash flow, new data centers capable of housing this hardware require substantial upfront investment. The core bottleneck is the limited production of advanced chips such as NVIDIA's A100 and H100. GPU performance keeps improving and costs keep falling, but manufacturing cannot be accelerated because of shortages of raw materials, components, and production capacity.

AI's prospects are bright, but the physical footprint needed to run it grows every day, driving up demand for space, power, and cutting-edge equipment. io.net offers a way around these constraints on compute.

io.net is a textbook real-world application of DePIN (decentralized physical infrastructure networks). It uses token incentives to structurally lower the cost of acquiring supply-side resources, which in turn lowers costs for the end buyers of GPU compute. It aggregates idle GPUs from around the world into a shared pool that AI developers and companies can draw on. Today the network is supported by thousands of GPUs from data centers, mining farms, and consumer devices.

Pooling these valuable resources, however, does not automatically produce a distributed network that scales. There have been several earlier attempts in crypto to build distributed GPU compute networks, and all of them fell short of what buyers needed.

Coordinating and scheduling compute jobs across heterogeneous hardware, with differing memory, bandwidth, and storage configurations, is the key step toward a working distributed GPU network. We believe the io.net team has the most practical solution on the market today. It can aggregate this hardware in a way that is both useful and cost-effective for end customers.
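To make the scheduling problem concrete, here is a toy matcher. It is not io.net's scheduler, whose internals the article does not describe. It is a minimal sketch of one common heuristic, best-fit decreasing, applied to heterogeneous GPUs. The `GpuNode` and `Job` types, their fields, and the bandwidth figures in the example are hypothetical. The memory sizes are plausible VRAM figures for the named cards.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class GpuNode:
    """A contributed device (hypothetical schema, not io.net's)."""
    node_id: str
    vram_gb: int
    bandwidth_gbps: float


@dataclass(frozen=True)
class Job:
    """A workload with minimum hardware requirements (hypothetical schema)."""
    job_id: str
    min_vram_gb: int
    min_bandwidth_gbps: float


def schedule(jobs: list[Job], nodes: list[GpuNode]) -> dict[str, Optional[str]]:
    """Assign each job to at most one node using best-fit decreasing.

    Jobs with the largest memory needs are placed first, each on the
    smallest free node that meets both its memory and bandwidth floor.
    Jobs that fit on no remaining node map to None.
    """
    # Free nodes ordered smallest first (by VRAM, then bandwidth),
    # so the first node that satisfies a job is the tightest fit.
    free = sorted(nodes, key=lambda n: (n.vram_gb, n.bandwidth_gbps))
    assignment: dict[str, Optional[str]] = {}
    for job in sorted(jobs, key=lambda j: j.min_vram_gb, reverse=True):
        match = next(
            (n for n in free
             if n.vram_gb >= job.min_vram_gb
             and n.bandwidth_gbps >= job.min_bandwidth_gbps),
            None,
        )
        if match is not None:
            free.remove(match)  # one job per node in this simplified model
        assignment[job.job_id] = match.node_id if match is not None else None
    return assignment


if __name__ == "__main__":
    nodes = [
        GpuNode("rtx4090-gamer", 24, 10.0),
        GpuNode("a100-datacenter", 80, 100.0),
        GpuNode("rtx3060-home", 12, 1.0),
    ]
    jobs = [
        Job("llm-finetune", 70, 50.0),
        Job("sd-inference", 10, 0.5),
        Job("sd-finetune", 20, 5.0),
    ]
    print(schedule(jobs, nodes))
    # {'llm-finetune': 'a100-datacenter', 'sd-finetune': 'rtx4090-gamer', 'sd-inference': 'rtx3060-home'}
```

The heuristic places the largest jobs first and fills each one from the smallest adequate node, which keeps scarce high-memory cards available. A matcher that took jobs in arrival order and used the first free node it found could hand the A100 to a small inference job. That would leave the 70 GB fine-tune with nowhere to run.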

II. Paving the Way for Clusters

Throughout the history of computing, software frameworks and design patterns have adapted to the hardware configurations available on the market. Most frameworks and libraries used for AI development rely heavily on centralized hardware resources. Over the past decade, however, distributed computing infrastructure has made significant progress in practical use.

io.net links existing idle hardware through a custom networking and orchestration layer, creating a highly scalable GPU internet. The network uses Ray, Ludwig, Kubernetes, and other open-source distributed computing frameworks, so ML engineering and operations teams can scale their workloads across the GPUs already on the network.

ML teams can parallelize workloads across io.net GPUs by launching clusters of devices and using these libraries for orchestration, scheduling, fault tolerance, and scaling. For example, if a group of freelance graphic designers contributes their GPUs to the network, io.net can assemble them into a cluster. Image-model developers anywhere in the world can then rent that cluster as pooled compute.
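The fan-out pattern behind such a cluster can be sketched without a real cluster. The example below uses Python's standard `concurrent.futures` thread pool as a local stand-in for Ray-style remote tasks. It splits a batch of prompts into one shard per worker and runs the shards in parallel. It also retries a shard whose worker fails, a simplified form of the fault tolerance mentioned above. On an actual Ray cluster, the worker function would typically be declared with `@ray.remote(num_gpus=1)` and its results gathered with `ray.get`. The helper names here (`shard`, `render_shard`, `with_retries`, `run_on_cluster`) are hypothetical, and `render_shard` only returns labeled strings in place of real GPU work.

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def shard(items: list[str], n_workers: int) -> list[list[str]]:
    """Split items round-robin into n_workers shards of near-equal size."""
    return [items[i::n_workers] for i in range(n_workers)]


def render_shard(worker_id: int, prompts: list[str]) -> list[str]:
    """Placeholder for per-GPU work such as image generation."""
    return [f"worker{worker_id}:{p}" for p in prompts]


def with_retries(fn: Callable, *args, retries: int = 2):
    """Call fn(*args), re-running it up to `retries` more times if it raises."""
    for attempt in range(retries + 1):
        try:
            return fn(*args)
        except Exception:
            if attempt == retries:
                raise  # out of retries: surface the last error


def run_on_cluster(prompts: list[str], n_workers: int,
                   task: Callable = render_shard, retries: int = 2) -> list[str]:
    """Fan shards out to a worker pool and gather results in shard order."""
    shards = shard(prompts, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        futures = [pool.submit(with_retries, task, i, s, retries=retries)
                   for i, s in enumerate(shards)]
        # f.result() blocks until that shard finishes (or re-raises its error).
        return [out for f in futures for out in f.result()]


if __name__ == "__main__":
    print(run_on_cluster(["cat", "dog", "fox", "owl", "elk"], n_workers=2))
    # ['worker0:cat', 'worker0:fox', 'worker0:elk', 'worker1:dog', 'worker1:owl']
```

Results come back grouped by shard rather than in input order. A real pipeline would carry an index with each prompt if ordering mattered.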

BC8.ai, a fine-tuned Stable Diffusion variant, was trained entirely on the io.net network. The io.net explorer displays live inferences and the incentives paid to network contributors.

Supercomputer for Artificial Intelligence

Information about every generated image is recorded on-chain. All fees go to a cluster of six RTX 4090s, consumer-grade GPUs built for gaming.

Today the network comprises tens of thousands of devices spread across mining farms, underutilized data centers, and Render Network's consumer nodes. Besides adding new GPU supply, io.net can compete on cost with traditional cloud providers and often offers cheaper resources.

io.net cuts costs by handing GPU coordination and operations to a decentralized protocol. Cloud providers, by contrast, mark up their products to cover staff, hardware maintenance, and data-center operating costs. Consumer GPU clusters and mining farms cost far less to run than the prices hyperscalers are willing to accept for their large-scale compute centers. This creates a structural arbitrage: resources on io.net are priced dynamically and stay below ever-rising cloud rates.
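The arbitrage can be written as a simple price band. This is a hedged illustration, and the symbols are this sketch's own rather than io.net's pricing model. Let c_s be a supplier's hourly cost of running an otherwise idle GPU, t the hourly token incentive it earns, p the hourly price a buyer pays on io.net, and p_cloud the comparable cloud rate. Taking prices to be non-negative, a supplier participates when price plus incentive covers its cost, and a buyer switches when io.net is cheaper than the cloud:

```latex
\underbrace{p + t \ge c_s}_{\text{supplier joins}}
\quad\text{and}\quad
\underbrace{p < p_{\text{cloud}}}_{\text{buyer switches}}
\quad\Longrightarrow\quad
\max(0,\; c_s - t) \;\le\; p \;<\; p_{\text{cloud}}
```

The band is non-empty whenever max(0, c_s - t) < p_cloud. Idle consumer cards and mining rigs keep c_s small, and token incentives push the lower bound down further. Meanwhile, the cloud provider's staff, maintenance, and facility overhead keep p_cloud high.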

III. Building the GPU Internet

io.net is uniquely positioned to stay asset-light and to drive the marginal cost of serving any given customer close to zero, while building direct relationships with both the demand and supply sides of the market. This lets it serve the thousands of teams who need GPU access to build competitive AI products, the products everyone will interact with in the future.

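Disclaimer: This article represents only the author's personal views and does not represent the position or views of this platform. It is provided for information sharing only and does not constitute investment advice to anyone. Any dispute between users and the author has nothing to do with this platform. If any article or image published on this page infringes your rights, please email proof of rights and proof of identity to support@aicoin.com, and the platform's staff will review it.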