Efficiency is not enough, but is being very efficient always good?

Author: DAN SHIPPER

Translator: Ines

Midjourney prompt: "From the perspective of someone about to cross a bridge, imagine a rope suspension bridge spanning a huge and dangerous chasm, watercolor painting."

How much should I optimize? This is a question I often ask myself, and I'm sure you do too. If you're optimizing for a goal, such as building a multi-generational company, finding the perfect life partner, or designing a perfect exercise plan, then your inclination is to strive for perfection.

Optimization is the pursuit of perfection—we optimize towards a goal because we don't want to settle. But is relentlessly pursuing perfection always better? In other words, how much optimization is too much?

People have long wrestled with the question of how much to optimize. You can place their answers on a spectrum.

On one end is John Mayer, who believes less is more. In his hit "Gravity," he sings:

"Oh, twice as much ain't twice as good / And can't sustain like one half could / It's wanting more that's gonna send me to my knees."

Dolly Parton holds the opposite view. Her famous quote is: "Less is not more. More is more."

Aristotle disagrees with both views. He proposed the golden mean over 2000 years ago: when optimizing for a goal, you need a middle ground between too much and too little.

Which one should we choose? It's now 2023. We hope to be more quantitative and less vague on this issue. Ideally, we can measure how effective optimization for a goal is in some way.

Today, we often turn to machines for help. Goal optimization is a key focus of machine learning and artificial intelligence researchers. To make a neural network do anything useful, you have to give it a goal and work to make it better at achieving that goal. The answers computer scientists have found in neural networks can teach us a lot about optimization in general.

A recent article by machine learning researcher Jascha Sohl-Dickstein has made me particularly excited. He put forward the following view:

Machine learning tells us that over-optimizing for a goal can lead to things going off the rails—this can be quantified. When machine learning algorithms over-optimize for a goal, they often ignore the big picture, leading to what researchers call "overfitting." In practice, when we overly focus on perfecting a process or task, we become too adapted to the task at hand and cannot effectively respond to changes or new challenges.

So when it comes to optimization, "more" is not, in fact, "more." Take that, Dolly Parton.

This article is my attempt to summarize Jascha's article and explain his views in plain language. To understand this, let's take a look at how machine learning models are trained.

Being too efficient can make everything worse

Imagine you want to create a machine learning model that performs exceptionally well at classifying dog pictures. You want to be able to input a picture of a dog and get the corresponding breed. But you don't just want a regular dog picture classifier. You want the best classifier that money, code, and countless cups of coffee can buy. (After all, we're optimizing.)

So, how do you achieve this vision? While there are multiple strategies, you're likely to choose supervised learning. Supervised learning is like having a tutor for your machine learning model: it continuously asks the model questions and corrects it when it's wrong, gradually familiarizing the model with the answers to the problem. During this training process, the model will gradually improve its accuracy in answering questions.

First, you need to prepare a dataset of images to train your model. You predefine labels for these images, such as "Poodle," "Cocker Spaniel," "Dandie Dinmont Terrier," and so on. Then, you input these images and their corresponding labels into the model to start its learning journey.

The model's learning process is like a "trial and error" process. You show it an image, and it tries to guess the label of the image. If the answer is wrong, you make adjustments to the model in the hope that it will give a more accurate answer next time. By persisting with this method, over time, you'll find that the model performs better and better at predicting the labels of images in the training set.
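The guess, check, and adjust loop described above can be sketched in a few lines. This is only an illustration under stated assumptions: it uses a perceptron (a very simple classifier, not the kind of network a real image model would use), and the two-number "features" and 0/1 labels are invented stand-ins for images and breeds.

```python
# Toy "guess, check, adjust" loop: a perceptron on made-up data.
# Each example is (features, label); the numbers are invented for illustration.
training_set = [
    ((0.9, 0.2), 1),
    ((0.8, 0.3), 1),
    ((0.2, 0.9), 0),
    ((0.3, 0.7), 0),
]

def predict(weights, bias, features):
    """Guess a label (1 or 0) from the current weights."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0

def train(examples, epochs=20, learning_rate=0.1):
    """Show the model each example repeatedly and nudge it after wrong guesses."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = label - predict(weights, bias, features)  # 0 when the guess is right
            # When error is 0 these updates change nothing.
            weights = [w + learning_rate * error * x for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias

def accuracy(weights, bias, examples):
    """Fraction of examples the model labels correctly."""
    correct = sum(predict(weights, bias, f) == y for f, y in examples)
    return correct / len(examples)

weights, bias = train(training_set)
train_accuracy = accuracy(weights, bias, training_set)
print("accuracy on the training set:", train_accuracy)
```

Note that the accuracy here is measured on the same examples the model trained on, which is exactly the measurement the next step calls into question.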

Once the model is predicting the labels of training-set images well, you set it a new task: labeling images of dogs it has never seen during training.

This is an important test: if you only ask the model about images it has seen before, it's a bit like letting it cheat in the test. So, you bring in some images of dogs that you're sure the model hasn't seen before.
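One common way to guarantee the model hasn't seen the test images is to set some aside before training starts. The sketch below assumes a simple random split; the function name, the 20% fraction, and the file names are hypothetical.

```python
import random

def split_train_test(examples, test_fraction=0.2, seed=0):
    """Shuffle the examples and set aside a fraction the model never trains on."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

# Hypothetical file names standing in for labeled dog photos.
photos = [f"dog_{i:03d}.jpg" for i in range(10)]
train_photos, test_photos = split_train_test(photos)
print(len(train_photos), "for training,", len(test_photos), "held out")
```

The held-out photos are only ever used to measure performance, never to adjust the model.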

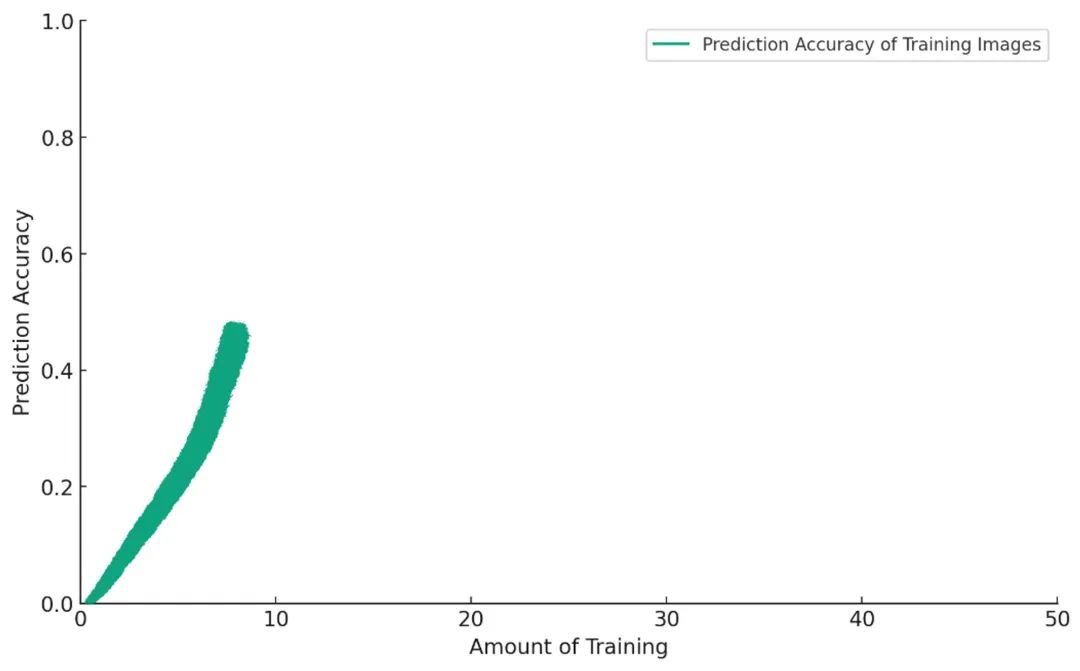

At first, everything goes very smoothly. The more you train the model, the better it performs:

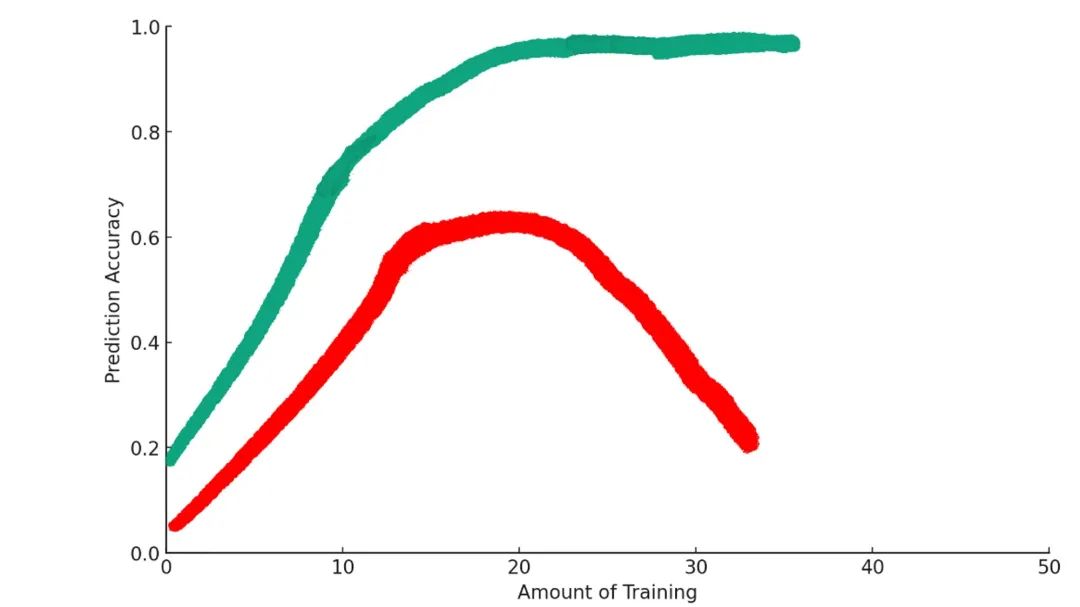

But if you continue training, the model eventually does the AI equivalent of "pooping on the carpet": its performance on the new images starts to get worse.

What's happening here?

Some training will make the model more optimized for achieving the goal. But beyond a certain point, excessive training actually makes things worse. This is a phenomenon in machine learning called "overfitting."

Why does overfitting make things worse

In model training, we're actually doing something subtle.

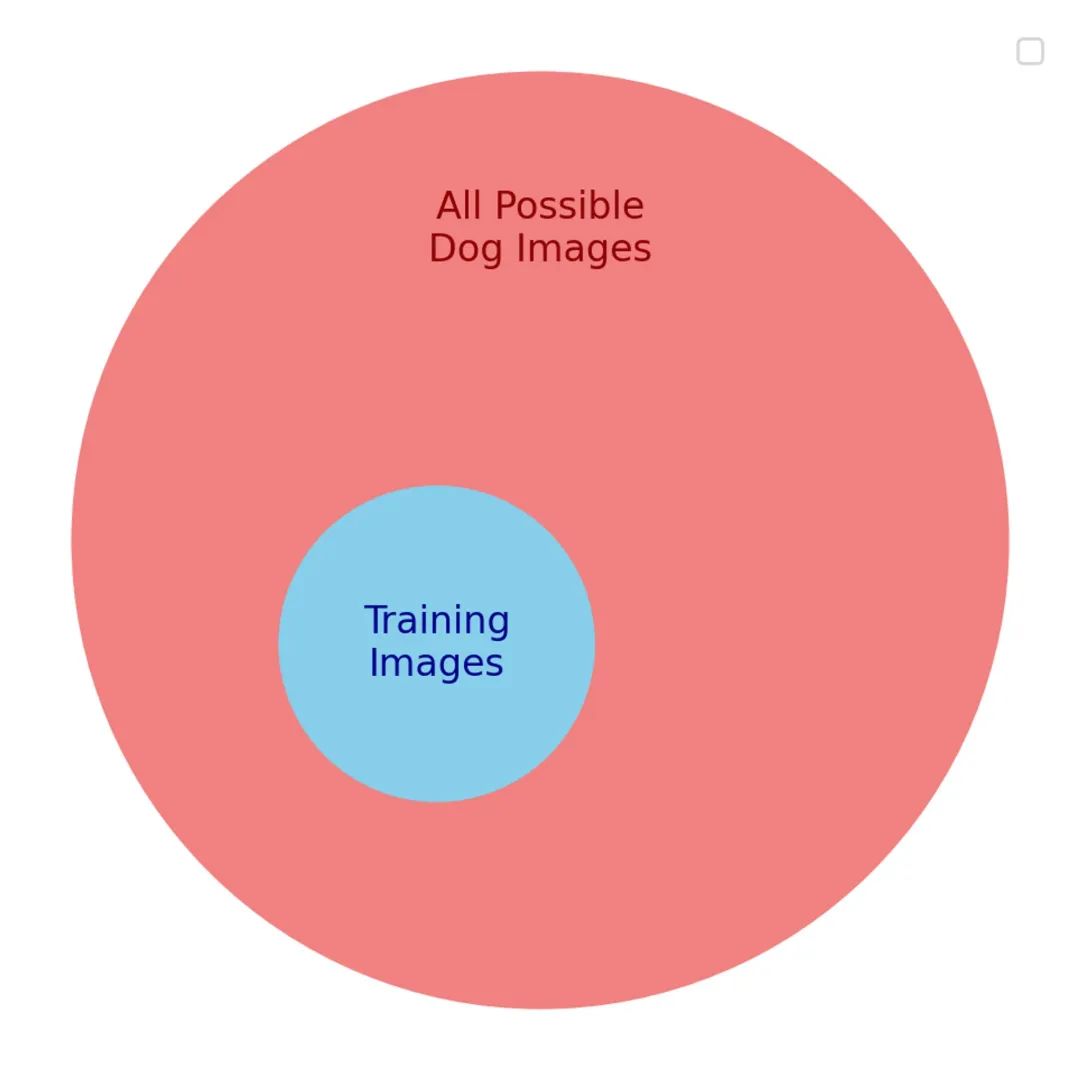

We want the model to be good at labeling any dog picture—this is our real goal. But we can't optimize directly for this because we can't possibly get all possible dog pictures. Instead, we optimize for a proxy goal: a subset of dog pictures that we hope will represent the real goal.

There are many similarities between the proxy goal and the real goal. So, at the beginning, the model makes progress on both of these goals. But as the model trains more and more, the useful similarities between these two goals gradually decrease. Soon, the model is only good at recognizing the content in the training set and performs poorly on other content.

As the model continues training, it starts to rely too much on the details of the dataset you used to train it. For example, the training dataset might contain a lot of pictures of yellow Labradoodles. When the model is overtrained, it might mistakenly learn that all yellow dogs are Labradoodles.

When the overfitted model encounters new pictures that differ from the characteristics of the training dataset, it performs poorly.
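A toy experiment can make this gap visible. The sketch below is an assumption-laden illustration, not the dog classifier itself: the data is a single made-up number per example, 20% of the training labels are deliberately wrong, and the "overfit" model is a memorizer that copies the label of the nearest training example. A much simpler one-cutoff rule is included for comparison.

```python
import random

rng = random.Random(0)

def make_examples(n, label_noise):
    """x is a single made-up feature; the true label is 1 when x > 0.5."""
    examples = []
    for _ in range(n):
        x = rng.random()
        label = 1 if x > 0.5 else 0
        if rng.random() < label_noise:
            label = 1 - label  # a mislabeled example
        examples.append((x, label))
    return examples

train_set = make_examples(200, label_noise=0.2)  # the noisy proxy we optimize on
test_set = make_examples(1000, label_noise=0.0)  # stands in for the real goal

def memorizer(x):
    """Overfit model: copy the label of the closest training example."""
    return min(train_set, key=lambda example: abs(example[0] - x))[1]

def fit_threshold(examples):
    """Simple model: pick the single cutoff that gets the most training labels right."""
    candidates = [i / 100 for i in range(101)]
    return max(candidates, key=lambda t: sum((x > t) == bool(y) for x, y in examples))

cutoff = fit_threshold(train_set)

def simple_rule(x):
    return 1 if x > cutoff else 0

def accuracy(model, examples):
    return sum(model(x) == y for x, y in examples) / len(examples)

print("memorizer   train:", accuracy(memorizer, train_set), "test:", accuracy(memorizer, test_set))
print("simple rule train:", accuracy(simple_rule, train_set), "test:", accuracy(simple_rule, test_set))
```

The memorizer scores perfectly on the training set because it has absorbed every quirk of it, including the wrong labels, and that is what hurts it on unseen examples. The exact numbers depend on the random seed.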

Overfitting reveals two important insights about goal optimization.

First, when you try to optimize anything, you are rarely optimizing the thing itself—you're optimizing a proxy. In the dog classification problem, we can't train the model on all possible dog pictures. Instead, we try to optimize for a subset of dog pictures and hope that it generalizes well. It does—until we over-optimize.

This also brings up the second insight: when you over-optimize a proxy function, you actually move further away from your original goal.

Once you understand this mechanism in machine learning, you start to see it everywhere.

Applying Overfitting to the Real World

Take schools, for example:

In school, we want to optimize for learning the subject matter of our courses. But measuring how deeply someone understands a subject is difficult, so we use standardized tests. Test scores are a proxy: to some extent, they represent how well you understand a subject.

But when students and schools put too much pressure on standardized test scores, the pressure to optimize test scores starts to harm real learning. Students become too adapted to the process of improving test scores. They learn how to take tests (or cheat) to optimize their scores, rather than truly learning the subject knowledge.

Overfitting also exists in the business world. In the book "Fooled by Randomness," Nassim Taleb writes about a banker named Carlos, a well-dressed trader in emerging market bonds. His trading style was to buy on dips: when the Mexican currency devalued in 1995, Carlos bought at the low and profited when bond prices rose after the crisis.

This buying on dips strategy brought in $80 million in net profits for his firm. But Carlos became "overfitted" to the markets he was exposed to, and his pursuit of optimizing returns ultimately led to his downfall.

In the summer of 1998, he bought Russian bonds on a dip. As the summer progressed, the downturn intensified, and Carlos kept buying. He kept doubling down until bond prices were very low, and he eventually lost $300 million, more than three times what he had earned in his entire career up to that point.

As Taleb points out in his book, "In the markets, the most successful traders may be those most adapted to the latest cycle."

In other words, over-optimizing returns may mean over-adapting to the current market cycle. Your performance will improve significantly in the short term. But the current market cycle is only a proxy for overall market behavior: when the cycle changes, your previously successful strategy may suddenly bankrupt you.

The same insight applies to my business. Every is a subscription media business, and I want to increase MRR (monthly recurring revenue). To achieve this, I could increase traffic to our articles by rewarding authors for more page views.

This is likely to work! Increasing traffic does increase our paying subscribers—up to a point. Beyond that point, I bet authors would start to increase page views through clickbait or salacious articles that don't attract the engaged, paying readers. Ultimately, if I turn Every into a clickbait factory, our paying subscribers might decrease instead of growing.

If you continue to observe your life or your business, you'll surely see the same pattern. The question is: what should we do?

So, What Should We Do?

Machine learning researchers use many techniques to prevent overfitting. Jascha's article describes three of the main ones: early stopping, introducing random noise, and regularization.

Early Stopping

Early stopping means continually checking the model's performance on its real goal and stopping training when that performance starts to decline.
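As a rough sketch of the idea, the function below scans a list of held-out scores, one per round of training, and reports which round to keep. The `patience` parameter (how many non-improving rounds to tolerate before giving up) is a common convention that I'm assuming here, and the scores are made up.

```python
def early_stopping_step(validation_scores, patience=2):
    """Return the index of the training step to keep, given held-out scores per step.

    Stops scanning once the score has failed to improve `patience` steps in a row.
    """
    best_step, best_score, steps_without_gain = 0, float("-inf"), 0
    for step, score in enumerate(validation_scores):
        if score > best_score:
            best_step, best_score, steps_without_gain = step, score, 0
        else:
            steps_without_gain += 1
            if steps_without_gain >= patience:
                break
    return best_step

# Made-up held-out accuracy after each round of training: it rises, then falls.
held_out_accuracy = [0.52, 0.61, 0.70, 0.74, 0.73, 0.71, 0.66]
stop_at = early_stopping_step(held_out_accuracy)
print("keep the model from round", stop_at)
```

In this example the held-out accuracy peaks at round 3, so later rounds are discarded even though accuracy on the training set would likely keep climbing.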

For Carlos, the trader who lost everything buying bonds on dips, early stopping might mean a strict stop-loss rule that forces him to exit his trades after a certain cumulative loss.

Introducing Random Noise

Introducing noise in the input or parameters of a machine learning model makes it harder to overfit. The same principle applies to other systems.
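One simple form of this is to jitter each training input slightly every time the model sees it. The sketch below assumes Gaussian noise added to numeric features; the function name, the noise scale, and the feature values are hypothetical.

```python
import random

def add_input_noise(features, noise_scale=0.1, rng=None):
    """Return a copy of the features with small Gaussian noise added to each value."""
    if rng is None:
        rng = random.Random()
    return [x + rng.gauss(0.0, noise_scale) for x in features]

rng = random.Random(0)
original = [0.9, 0.2, 0.4]  # made-up feature values for one training example
# Each training pass sees a slightly different version of the same example.
noisy_versions = [add_input_noise(original, rng=rng) for _ in range(3)]
for version in noisy_versions:
    print([round(x, 3) for x in version])
```

Because the model never sees exactly the same input twice, it is harder for it to latch onto incidental details of any single example.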

For students and schools, introducing noise might mean giving standardized tests at random times, which makes cramming and rote memorization harder.

Regularization

In machine learning, regularization is used to penalize the model to prevent it from becoming too complex. The more complex the model, the easier it is to overfit the data. The technical details aren't too important, but the same concept can be applied to increase friction within other systems.
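For readers who do want a glimpse of the technical details, here is a minimal sketch of one common form, an L2 penalty. The choice of L2 is my assumption (the article doesn't name a specific penalty), and the weights, predictions, and penalty strength are invented.

```python
def mean_squared_error(predictions, targets):
    """Average squared gap between what the model predicted and the truth."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)

def regularized_loss(predictions, targets, weights, penalty_strength=0.01):
    """Data-fit error plus an L2 penalty that grows with the size of the weights."""
    l2_penalty = penalty_strength * sum(w ** 2 for w in weights)
    return mean_squared_error(predictions, targets) + l2_penalty

# Two hypothetical models that fit the training data equally well.
targets = [1.0, 0.0, 1.0]
predictions = [0.9, 0.1, 0.8]
small_weights = [0.5, -0.3]
large_weights = [12.0, -9.0]

loss_small = regularized_loss(predictions, targets, small_weights)
loss_large = regularized_loss(predictions, targets, large_weights)
print("small weights:", loss_small, "large weights:", loss_large)
```

Both models make the same predictions, but the one with large weights pays a higher total loss, so training is steered toward the simpler model.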

If I want to incentivize all Every authors to increase our MRR by increasing page views, I could modify the way page views are rewarded so that any page views beyond a certain threshold gradually count less.
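A tapering rule like that could look something like the sketch below. The 10,000-view threshold and the logarithmic taper are assumptions chosen for illustration, not a description of how Every actually pays authors.

```python
import math

def credited_page_views(page_views, threshold=10_000):
    """Count views one-for-one up to the threshold, then with diminishing weight."""
    if page_views <= threshold:
        return float(page_views)
    extra = page_views - threshold
    # Logarithmic taper: each additional view past the threshold counts a bit less.
    return threshold + threshold * math.log1p(extra / threshold)

for views in (5_000, 10_000, 20_000, 50_000):
    print(views, "views are credited as", round(credited_page_views(views)))
```

More views always earn more credit, so authors are still rewarded for reach, but the payoff for chasing extreme traffic shrinks, which weakens the incentive to write clickbait.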

These are potential solutions to address the overfitting problem, which brings us back to our initial question: what is the optimal level of optimization?

The Optimal Level of Optimization

The main lesson we've learned is that you almost never directly optimize for a goal—instead, you're usually optimizing for something that looks like your goal but is slightly different. It's a proxy.

Because you have to optimize for a proxy, when you over-optimize, you become too good at maximizing your proxy goal—often at the expense of your real goal.

So, the key point to remember is: know what you're optimizing for. Know that the proxy for the goal is not the goal itself. Stay flexible in the optimization process, and be prepared to stop or switch strategies when the useful similarity between your proxy goal and the real goal is exhausted.

As for the views of John Mayer, Dolly Parton, and Aristotle on the wisdom of optimization, I think we have to give the award to Aristotle and his golden mean.

When optimizing for a goal, the optimal level of optimization lies between too much and too little. It's "just right."
