The key issue is not the amount of computing power, but how to empower AI products.

Written by: FMGResearch

TL;DR

In the competition among AI-era products, resources (computing power, data, etc.) are indispensable, especially a stable supply of them.

Model training and iteration also require a large number of users (IP addresses) to help feed data, which leads to a qualitative change in model efficiency.

Combining with Web3 can help small and medium-sized AI startups overtake the traditional AI giants.

For the DePIN ecosystem, resources such as computing power and bandwidth determine a project's lower limit (pure computing power aggregation has no moat); the application and deep optimization of AI models (as with BitTensor), specialization (Render, Hivemapper), and effective use of data determine its upper limit.

In the context of AI+DePIN, model inference & fine-tuning, as well as the mobile AI model market, will receive attention.

AI Market Analysis & Three Issues

According to data, from September 2022, on the eve of ChatGPT's birth, to August 2023, the top 50 AI products globally generated over 24 billion visits, with an average monthly growth of 236.3 million visits.

The prosperity of AI products is accompanied by an increasing dependence on computing power.

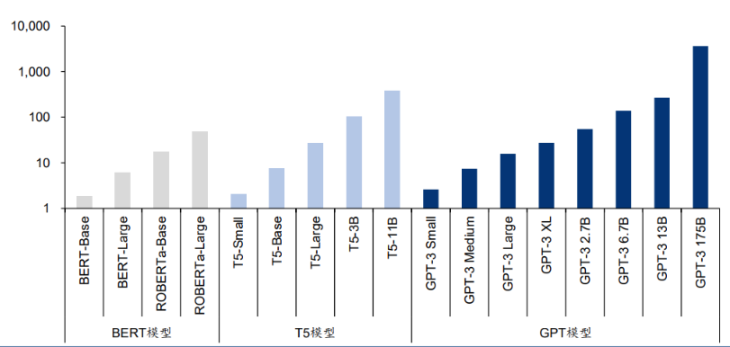

Source: Language Models are Few-Shot Learners

A paper from the University of Massachusetts Amherst pointed out, "Training an artificial intelligence model emits carbon equivalent to the emissions of five cars over its lifetime." However, this analysis covers only a single training run. When a model is retrained repeatedly to improve it, energy usage increases significantly.

The latest language models contain tens of billions or even trillions of weights. A popular model, GPT-3, has 175 billion machine learning parameters. Training this model on A100s requires 1,024 GPUs, 34 days, and $4.6 million.
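To put the GPT-3 figures in perspective, the short sketch below converts them into GPU-hours and an implied average cost per GPU-hour. It uses only the three numbers quoted above and assumes every GPU runs for the full 34 days; the function names are illustrative.

```python
# Figures quoted in the text for training GPT-3 on A100 GPUs.
NUM_GPUS = 1024
TRAINING_DAYS = 34
TOTAL_COST_USD = 4_600_000


def gpu_hours(num_gpus: int, days: float) -> float:
    """Total GPU-hours, assuming every GPU runs for the whole period."""
    return num_gpus * days * 24


def cost_per_gpu_hour(total_cost: float, num_gpus: int, days: float) -> float:
    """Average cost of one GPU-hour implied by the total bill."""
    return total_cost / gpu_hours(num_gpus, days)


if __name__ == "__main__":
    hours = gpu_hours(NUM_GPUS, TRAINING_DAYS)
    rate = cost_per_gpu_hour(TOTAL_COST_USD, NUM_GPUS, TRAINING_DAYS)
    print(f"GPU-hours: {hours:,.0f}")
    print(f"Implied cost per GPU-hour: ${rate:.2f}")
```

On these assumptions a single run consumes roughly 836,000 GPU-hours at about $5.50 per GPU-hour, which is the benchmark that decentralized computing power markets are trying to undercut.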

The post-AI era's product competition has gradually evolved into a resource-side war centered on computing power.

Source: AI is harming our planet: addressing AI’s staggering energy cost

This leads to three issues. The first is whether an AI product has sufficient resources (computing power, bandwidth, etc.), and especially whether that supply is stable. Such reliability requires computing power that is sufficiently decentralized. In the traditional market, the chip supply gap, combined with the barriers erected by policy and ideology, gives chip manufacturers a natural advantage and lets them raise prices significantly. For example, the price of the NVIDIA H100 rose from $36,000 in April 2023 to $50,000, further increasing costs for AI model training teams.

The second issue is that meeting resource-side conditions satisfies an AI project's hardware needs, but model training and iteration also require a large number of users (IP addresses) to help feed data. Once model scale exceeds a certain threshold, performance shows breakthrough growth across different tasks.

The third issue is that small and medium-sized AI startups find it difficult to overtake incumbents. The monopolistic nature of computing power in the traditional financial market also leads to monopoly in AI model solutions. Large AI model makers, represented by OpenAI and Google DeepMind, are further building their moats. Small and medium-sized AI teams need to seek more differentiated competition.

All three issues can be addressed through Web3. In fact, the integration of AI and Web3 has a long history and a relatively prosperous ecosystem.

The following image shows some of the tracks and projects of the AI+Web3 ecosystem produced by Future Money Group.

AI+DePIN

1. DePIN's Solution

DePIN stands for Decentralized Physical Infrastructure Network, which is also a collection of production relations between people and devices. It combines token economics with hardware devices (such as computers, in-vehicle cameras, etc.) to organically integrate users with devices and realize the orderly operation of the economic model.

Compared to the broader definition of Web3, DePIN has a natural advantage in attracting off-chain AI teams and related funding due to its deeper connection with hardware devices and traditional enterprises.

The DePIN ecosystem's pursuit of distributed computing power and incentives for contributors happens to address the needs of AI products for computing power and IP.

- DePIN uses token economics to drive the entry of global computing power (computing centers & idle personal computing power), reducing the centralization risk of computing power and lowering the cost for AI teams to access computing power.

- The large and diverse IP composition of the DePIN ecosystem helps AI models obtain diverse and objective data acquisition channels, and a sufficient number of data providers also ensures the performance improvement of AI models.

- The overlap between the user profiles of DePIN ecosystem users and Web3 users can help AI projects develop more AI models with Web3 characteristics, creating differentiated competition that is not available in the traditional AI market.

In the Web2 field, AI model data collection usually comes from public datasets or is collected by the model maker, which is subject to cultural and geographical limitations, leading to subjective "distortion" of the content produced by AI models. Traditional data collection methods are also limited by collection efficiency and cost, making it difficult to achieve a larger model scale (parameter quantity, training duration, and data quality) for AI models. For AI models, the larger the model scale, the easier it is for the model's performance to undergo a qualitative change.

Source: Large Language Models’ emergent abilities: how they solve problems they were not trained to address?

DePIN happens to have a natural advantage in this area. Taking Hivemapper as an example, it is distributed in 1920 regions worldwide, with nearly 40,000 contributors providing data for MAP AI (map AI model).

The combination of AI and DePIN also takes the integration of AI and Web3 to a new level. Current AI projects in Web3 are mostly concentrated at the application layer and have barely escaped direct dependence on Web2 infrastructure; they tend to rely on existing AI models hosted on traditional computing platforms and rarely create AI models themselves.

Web3 elements have always sat at the bottom of the food chain, unable to capture real excess returns. The same is true for distributed computing platforms: combining AI with computing power alone cannot tap the full potential of either. In this relationship, the computing power provider cannot earn excess profits, and the ecosystem architecture is too simple for token economics to set a flywheel in motion.

However, the concept of AI+DePIN is breaking this inherent relationship and shifting the focus of Web3 to a broader aspect of AI models.

2. Summary of AI+DePIN Projects

DePIN naturally possesses the equipment (computing power, bandwidth, algorithms, data), users (model training data providers), and incentive mechanisms (token economics) that AI urgently needs.

We can boldly define AI+DePIN as any project that provides comprehensive objective conditions for AI (computing power, bandwidth, data, IP), offers AI model scenarios (training, inference, fine-tuning), and is endowed with token economics.

Below, Future Money Group lists the classic paradigms of AI+DePIN.

We have divided the projects into four categories based on the type of resource provision, namely computing power, bandwidth, data, and others, and attempted to sort out different projects in each category.

2.1 Computing Power

The computing power side is the main component of the AI+DePIN sector and currently accounts for the largest number of projects. Computing power comes mainly from GPUs (graphics processing units), CPUs (central processing units), and TPUs (specialized machine learning chips). TPUs are difficult to manufacture and are developed mainly by Google, which offers them only through cloud computing services, so their market is relatively small. GPUs, on the other hand, handle parallel mathematical operations more efficiently than CPUs and are the main source of computing power in the current market.

Because of these GPU characteristics, many AI+DePIN computing power projects specialize in graphics and video rendering or related gaming workloads.

From a global perspective, the main providers of computing power in AI+DePIN products consist of three parts: traditional cloud computing service providers, idle personal computing power, and self-owned computing power. Among them, cloud computing service providers account for a large proportion, followed by idle personal computing power. This means that such products often play the role of computing power intermediaries. The demand side consists of various AI model development teams.

Currently, computing power in this category is almost never fully utilized in practice and is often idle. For example, Akash Network is currently using about 35% of its computing power, with the rest being idle. The same is true for io.net.

This may be due to the relatively low demand for AI model training and is also the reason why AI+DePIN can provide low-cost computing power. As the AI market expands, this situation is expected to improve.

Akash Network: Decentralized Peer-to-Peer Cloud Service Market

Akash Network is a decentralized peer-to-peer cloud service market, often referred to as the Airbnb of cloud services. Akash Network allows users and companies of different scales to use their services quickly, stably, and economically.

Similar to Render, Akash also provides GPU deployment, leasing, and AI model training services to users.

In August 2023, Akash launched Supercloud, allowing developers to set the price they are willing to pay to deploy their AI models, while providers with additional computing power host users' models. This feature is very similar to Airbnb, allowing providers to rent out unused capacity.

By openly bidding, Akash Network incentivizes resource providers to open up their idle computing resources, making resource utilization more efficient and providing more competitive prices to resource demanders.
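The bidding flow described above can be illustrated with a minimal reverse-auction sketch: the developer states a maximum price, providers bid, and the cheapest eligible bid wins. This is only an illustration of the idea, not Akash's actual matching logic; `Bid`, `select_winning_bid`, and the prices are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Bid:
    provider: str          # hypothetical provider identifier
    price_per_hour: float  # asking price in USD per GPU-hour (illustrative)


def select_winning_bid(bids: list[Bid], max_price: float) -> Optional[Bid]:
    """Return the cheapest bid at or below the developer's maximum price."""
    eligible = [bid for bid in bids if bid.price_per_hour <= max_price]
    if not eligible:
        return None  # no provider is willing to serve at this price
    return min(eligible, key=lambda bid: bid.price_per_hour)


if __name__ == "__main__":
    bids = [Bid("provider-a", 1.8), Bid("provider-b", 1.2), Bid("provider-c", 1.5)]
    print(select_winning_bid(bids, max_price=1.6))
```

Because providers compete downward on price, idle capacity tends to be offered at whatever rate still beats leaving the hardware unused.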

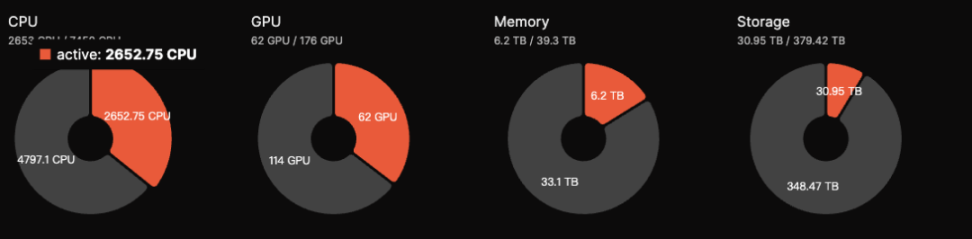

Currently, Akash's ecosystem has a total of 176 GPUs, but the active number is 62, with an activity rate of 35%, lower than the 50% level in September 2023. The estimated daily income is around $5,000. The AKT token has staking functionality, allowing users to participate in network security maintenance by staking the token and earning an annualized return of around 13.15%.

Akash performs relatively well in the AI+DePIN sector, and its FDV of $700 million, which is low compared with Render and BitTensor, indicates significant upside potential.

Akash has also integrated with BitTensor's Subnet to expand its development space. Overall, Akash's project is one of the high-quality projects in the AI+DePIN track, with excellent fundamentals.

io.net: AI+DePIN with the Most GPU Access

io.net is a decentralized computing network that supports the development, execution, and scaling of ML (machine learning) applications on the Solana blockchain, using the world's largest GPU cluster to allow machine learning engineers to rent and access distributed cloud service computing power at a fraction of the cost of centralized services.

According to official data, io.net has over 1 million GPUs in standby. In addition, io.net's collaboration with Render has also expanded the available GPU resources for deployment.

The io.net ecosystem has a large number of GPUs, but almost all of them come from partnerships with cloud computing vendors and from individual nodes, and the idle rate is high. For example, of 8,426 RTX A6000 GPUs, only 11% (927) are in use, and other GPU models are hardly used at all. However, io.net's major advantage at present is its low pricing, with costs ranging from 0.1 to 1 USD, compared to Akash's GPU call cost of 1.5 USD per hour.
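The utilization figures quoted for Akash and io.net can be checked with a few lines of arithmetic. The sketch below uses only the GPU counts given in this article; the helper name is illustrative.

```python
def utilization(active: int, total: int) -> float:
    """Share of GPUs that are actually in use."""
    if total <= 0:
        raise ValueError("total must be positive")
    return active / total


if __name__ == "__main__":
    # GPU counts as quoted in the article.
    akash = utilization(active=62, total=176)
    io_net_a6000 = utilization(active=927, total=8426)
    print(f"Akash: {akash:.1%} in use")
    print(f"io.net RTX A6000: {io_net_a6000:.1%} in use")
```

Both ratios match the percentages in the text (about 35% and 11%), and they show that io.net's larger absolute number of GPUs in use comes with a much higher idle share.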

In the future, io.net is considering allowing GPU providers in the IO ecosystem to increase their chances of being used by staking native assets. The more assets they invest, the greater their chances of being selected. At the same time, AI engineers staking native assets can also use high-performance GPUs.
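One simple way to read "the more assets they invest, the greater their chances of being selected" is stake-weighted random selection. The sketch below assumes selection probability is directly proportional to stake; io.net has not published this exact rule, and all names and amounts are hypothetical.

```python
import random


def selection_probabilities(stakes: dict[str, float]) -> dict[str, float]:
    """Probability of each provider being chosen, proportional to stake."""
    total = sum(stakes.values())
    if total <= 0:
        raise ValueError("at least one provider must have a positive stake")
    return {provider: stake / total for provider, stake in stakes.items()}


def pick_provider(stakes: dict[str, float], rng: random.Random) -> str:
    """Draw one provider at random, weighted by staked amount."""
    if sum(stakes.values()) <= 0:
        raise ValueError("at least one provider must have a positive stake")
    providers = list(stakes)
    weights = [stakes[provider] for provider in providers]
    return rng.choices(providers, weights=weights, k=1)[0]


if __name__ == "__main__":
    stakes = {"gpu-farm": 600.0, "studio": 300.0, "hobbyist": 100.0}
    print(selection_probabilities(stakes))
    print(pick_provider(stakes, random.Random(42)))
```

A proportional rule rewards commitment but also favors large holders, which is one reason real networks often combine stake with uptime or performance scores.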

In terms of GPU access scale, io.net is the largest among the 10 projects listed in this article. Even setting aside the idle rate, its number of GPUs in use is also the highest. In terms of token economics, the native token and protocol token IO will be launched in the first quarter of 2024, with a maximum supply of 22,300,000 tokens. Users will be charged a 5% fee when using the network, which will be used to burn IO tokens or to provide incentives for new users on both the supply and demand sides. The token model has obvious upward characteristics, so although io.net has not yet launched its token, it has attracted strong market interest.

Golem: Computing Power Market Dominated by CPUs

Golem is a decentralized computing power market that supports anyone to share and aggregate computing resources by creating a network of shared resources. Golem provides users with scenarios for renting computing power.

Golem's market consists of three parties: computing power providers, computing power demanders, and software developers. Computing power demanders submit computing tasks, and the Golem network allocates these tasks to suitable computing power providers (providing RAM, disk space, and CPU cores, etc.). After the computing tasks are completed, settlement is done through token payments.
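The allocation step can be sketched as a matching problem: find providers whose offered RAM, disk space, and CPU cores cover the task, then pick the cheapest. This is a simplified illustration rather than Golem's actual protocol; the classes, fields, and prices are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Resources:
    cpu_cores: int
    ram_gb: float
    disk_gb: float


@dataclass(frozen=True)
class Provider:
    name: str
    offer: Resources
    price_tokens: float  # hypothetical price per task, paid in tokens


def fits(need: Resources, offer: Resources) -> bool:
    """True when the offer covers every resource the task needs."""
    return (
        offer.cpu_cores >= need.cpu_cores
        and offer.ram_gb >= need.ram_gb
        and offer.disk_gb >= need.disk_gb
    )


def allocate(need: Resources, providers: list[Provider]) -> Optional[Provider]:
    """Pick the cheapest provider that can run the task, if any."""
    candidates = [p for p in providers if fits(need, p.offer)]
    return min(candidates, key=lambda p: p.price_tokens) if candidates else None


if __name__ == "__main__":
    providers = [
        Provider("small", Resources(4, 8, 100), 1.0),
        Provider("medium", Resources(8, 32, 500), 2.5),
        Provider("large", Resources(32, 128, 2000), 6.0),
    ]
    print(allocate(Resources(8, 16, 200), providers))
```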

Golem mainly aggregates CPU computing power. CPUs cost less than GPUs (the Intel i9-14900K costs around $700, while the A100 GPU costs between $12,000 and $25,000), but they cannot handle high-concurrency operations and consume more power. Therefore, leasing CPU computing power may be a slightly weaker narrative than that of GPU projects.

Magnet AI: Assetization of AI Models

Magnet AI integrates GPU computing power providers to offer model training services to different AI model developers. Unlike other AI+DePIN products, Magnet AI allows different AI teams to release ERC-20 tokens based on their own models, and users can receive airdrops and additional rewards by participating in interactions with different models.

In Q2 2024, Magnet AI will launch on Polygon zkEVM and Arbitrum.

Similar to io.net, Magnet AI primarily focuses on integrating GPU resources and providing model training services to AI teams.

The difference is that io.net focuses more on integrating GPU resources, encouraging different GPU clusters, companies, and individuals to contribute GPUs and receive rewards, driving computing power.

Magnet AI seems to focus more on AI models, and the existence of AI model tokens may attract and retain users through token airdrops, and drive the entry of AI developers by tokenizing AI models.

In summary, Magnet acts as a marketplace built with GPUs, where any AI developer or model deployer can issue ERC-20 tokens, and users can obtain different tokens or actively hold different tokens.

Render: Professional Player in Graphics Rendering AI Models

Render Network is a provider of decentralized rendering solutions based on GPU, aiming to connect creators and idle GPU resources through blockchain technology to eliminate hardware limitations, reduce time and costs, and provide digital rights management, further promoting the development of the metaverse.

According to the Render whitepaper, artists, engineers, and developers can create a range of AI applications using Render, such as AI-assisted 3D content generation, AI-accelerated full-range rendering, and using Render's 3D scene graph data for related AI model training.

Render provides the Render Network SDK to AI developers, enabling them to use Render's distributed GPUs to execute AI computing tasks, from NeRF (Neural Radiance Fields) and LightField rendering processes to generative AI tasks.

According to a report by Global Market Insights, the global 3D rendering market is expected to reach $6 billion. With a FDV of $2.2 billion, Render still has development potential.

Specific data on Render's GPUs cannot currently be found. However, OTOY is associated with Apple and has a wide-ranging business, including its star renderer OctaneRender, which supports leading 3D toolsets for VFX, gaming, motion design, architectural visualization, and simulation in the Unity3D and Unreal engines.

Google and Microsoft have also joined the RNDR network. Render processed nearly 250,000 rendering requests in 2021, and artists in the ecosystem generated sales of around $5 billion through NFTs.

Therefore, the reference valuation of Render should be compared to the potential of the general rendering market (approximately $30 billion). With the BME (Burn and Mint Equilibrium) economic model, Render still has significant upside potential in terms of token price and FDV.

Clore.ai: Video Rendering

Clore.ai is a platform that provides GPU computing power rental services based on PoW. Users can rent out their GPUs for tasks such as AI training, video rendering, and cryptocurrency mining at a low cost.

Its business scope includes AI training, movie rendering, VPN, and cryptocurrency mining. When there is a specific computing power service demand, the network assigns tasks; if there is no computing power service demand, the network finds the cryptocurrency with the highest mining profitability at that time and participates in mining.
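The fallback rule described above, serve rental demand first and otherwise mine whichever coin is most profitable, can be sketched as a small decision function. This is an illustrative reading of the text, not Clore.ai's implementation; the job names, coin names, and profit figures are hypothetical.

```python
from typing import Optional


def choose_workload(
    pending_jobs: list[str],
    mining_profit_per_hour: dict[str, float],
) -> tuple[str, Optional[str]]:
    """Decide what an idle GPU should do next.

    Returns ("compute", job) when a rental job is waiting,
    ("mine", coin) for the most profitable coin otherwise,
    or ("idle", None) when there is nothing to do.
    """
    if pending_jobs:
        return ("compute", pending_jobs[0])  # rental demand takes priority
    if not mining_profit_per_hour:
        return ("idle", None)
    best_coin = max(mining_profit_per_hour, key=mining_profit_per_hour.get)
    return ("mine", best_coin)


if __name__ == "__main__":
    profits = {"coin-a": 0.04, "coin-b": 0.07}  # hypothetical USD per hour
    print(choose_workload(["ai-training-job"], profits))
    print(choose_workload([], profits))
```

The design keeps hardware earning something at all times, which helps explain how such a network can attract GPUs even while rental demand is still thin.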

In the past six months, the number of GPUs at Clore.ai has increased from around 2,000 to 9,000, surpassing Akash in terms of GPU integration. However, its secondary market FDV is only about 20% of Akash's.

In terms of token economics, CLORE adopts a PoW mining model with no pre-mining or ICO; 50% of each block is allocated to miners, 40% to lessors, and 10% to the team.

With a total supply of 1.3 billion tokens, mining started in June 2022, and it is expected to enter full circulation by 2042, with a current circulation of approximately 220 million tokens. By the end of 2023, the circulation will be about 250 million tokens, accounting for 20% of the total supply. Therefore, the actual FDV is around $31 million. In theory, Clore.ai is severely undervalued, but due to its token economics, with a 50% mining allocation and a high mining-to-selling ratio, there is significant resistance to price increases.
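The block allocation and circulation figures can be made concrete with a little arithmetic. The sketch below uses only the percentages and supply numbers quoted above; the block reward of 100 tokens is a placeholder, since the article does not state the actual reward.

```python
# Allocation of each block as stated in the text (percent).
BLOCK_SPLIT_PERCENT = {"miners": 50, "lessors": 40, "team": 10}

TOTAL_SUPPLY = 1_300_000_000
CIRCULATING_END_2023 = 250_000_000


def split_block_reward(reward: float) -> dict[str, float]:
    """Divide one block reward among miners, lessors, and the team."""
    return {role: reward * pct / 100 for role, pct in BLOCK_SPLIT_PERCENT.items()}


def circulating_share(circulating: float, total_supply: float) -> float:
    """Fraction of the total supply that is already in circulation."""
    return circulating / total_supply


if __name__ == "__main__":
    # 100 tokens per block is a placeholder, not the real CLORE reward.
    print(split_block_reward(100.0))
    share = circulating_share(CIRCULATING_END_2023, TOTAL_SUPPLY)
    print(f"Circulating share at end of 2023: {share:.1%}")
```

The exact share works out to a little over 19%, consistent with the "about 20%" in the text, and half of every new block goes straight to miners, who are the most likely sellers.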

Livepeer: Video Rendering, Inference

Livepeer is a decentralized video protocol based on Ethereum that rewards various parties for securely processing video content at a reasonable price.

According to the official statement, Livepeer has thousands of GPU resources processing millions of minutes of video transcoding every week.

Livepeer may adopt a "mainnet" + "subnet" approach, allowing different node operators to create subnets and execute tasks there while cashing out payments on the Livepeer mainnet. For example, it could introduce an AI video subnet dedicated to AI model training in the video rendering field.

Livepeer will subsequently expand its AI-related aspects from simple model training to inference & fine-tuning.

Aethir: Focus on Cloud Gaming and AI

Aethir is a cloud gaming platform that provides decentralized cloud infrastructure (DCI) built specifically for gaming and AI companies. It offloads players' heavy GPU computing loads, ensuring that players can experience ultra-low latency on any device, anywhere.

Additionally, Aethir provides deployment services including GPU, CPU, and disk elements. On September 27, 2023, Aethir officially launched commercial cloud gaming and AI computing power services for global customers, providing computing power support for its platform's games and AI models through the integration of decentralized computing power.

Cloud gaming eliminates the hardware and operating system limitations of terminal devices by transferring the computing rendering power demand to the cloud, significantly expanding the potential player base.

2.2 Bandwidth

Bandwidth is one of the resources provided by DePIN to AI. The global bandwidth market exceeded $50 billion in 2021, and it is predicted to surpass $100 billion by 2027.

As AI models become more numerous and complex, model training typically involves various parallel computing strategies, such as data parallelism, pipeline parallelism, and tensor parallelism. In these parallel computing modes, the importance of collective communication operations between multiple computing devices is increasingly prominent. Therefore, the role of network bandwidth becomes evident when building large-scale training clusters for AI models.
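To see why bandwidth matters for collective communication, consider the standard ring all-reduce, in which each of N devices sends and receives about 2(N-1)/N times the payload size. The sketch below estimates the bandwidth-bound time for one gradient synchronization. It assumes 2 bytes per parameter (fp16), ignores latency and overlap with computation, and uses hypothetical link speeds; only the 175 billion parameter count and the 1,024 devices come from this article.

```python
def ring_allreduce_seconds(
    payload_bytes: float,
    num_devices: int,
    bandwidth_bytes_per_s: float,
) -> float:
    """Bandwidth-bound time for one ring all-reduce (latency ignored)."""
    if bandwidth_bytes_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    if num_devices < 2:
        return 0.0  # nothing to synchronize with a single device
    # Each device transfers 2 * (N - 1) / N times the payload.
    traffic_per_device = 2 * (num_devices - 1) / num_devices * payload_bytes
    return traffic_per_device / bandwidth_bytes_per_s


if __name__ == "__main__":
    params = 175e9                  # GPT-3 parameter count from the text
    payload = params * 2            # assumption: 2 bytes per parameter (fp16)
    for gbytes_per_s in (2.5, 25.0):  # hypothetical per-device link speeds
        seconds = ring_allreduce_seconds(payload, 1024, gbytes_per_s * 1e9)
        print(f"{gbytes_per_s:>5} GB/s -> {seconds:,.1f} s per synchronization")
```

Because the time scales inversely with bandwidth, a tenfold slower link makes every synchronization step roughly ten times longer, which is why bandwidth is treated here as a first-class resource alongside computing power.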

More importantly, stable and reliable bandwidth resources can ensure simultaneous responses between different nodes, technically avoiding the occurrence of single-point control (e.g., Falcon adopts a low-latency + high-bandwidth relay network mode to seek a balance between latency and bandwidth demands), ultimately ensuring the trustworthiness and resistance to censorship of the entire network.

Grass: Bandwidth Mining Product Compatible with Mobile Devices

Grass is the flagship product of Wynd Network, which focuses on open network data and raised $1 million in funding in 2023. Grass allows users to earn passive income by selling unused network resources through internet connections.

Users can sell internet bandwidth on Grass to provide bandwidth services to AI development teams in need, helping with AI model training and earning token rewards.

Currently, Grass is about to launch a mobile version. Since mobile devices and PCs have different IP addresses, this means that Grass users will provide more IP addresses to the platform, and Grass will collect more IP addresses to provide better data efficiency for AI model training.

As of November 29, 2023, the Grass platform has had 103,000 downloads and 1,450,000 unique IP addresses.

The demand for AI model training differs between mobile devices and PCs, so the applicable categories for AI model training are different.

For example, mobile devices have a large amount of data for image optimization, facial recognition, real-time translation, voice assistants, and device performance optimization, which are difficult to provide on PCs.

Currently, Grass is in a relatively advanced position in mobile AI model training. Considering the huge potential of the global mobile device market, Grass's prospects are worth paying attention to.

However, Grass has not yet provided more effective information on AI models, and it is speculated that the initial operation may be primarily based on mining tokens.

Meson Network: Layer 2 Compatible with Mobile Devices

Meson Network is a next-generation storage acceleration network based on blockchain Layer 2, which aggregates idle servers through mining, schedules bandwidth resources, and serves the file and streaming acceleration market, including traditional websites, videos, live broadcasts, and blockchain storage solutions.

Meson Network can be understood as a bandwidth resource pool, with both supply and demand sides. The former contributes bandwidth, and the latter uses bandwidth.

In Meson's specific product structure, there are 2 products (GatewayX, GaGaNode) responsible for receiving bandwidth contributions from nodes worldwide, and 1 product (IPCola) responsible for monetizing these aggregated bandwidth resources.

GatewayX primarily targets integrated commercial idle bandwidth, mainly focusing on IDC centers.

According to Meson's data dashboard, there are currently over 20,000 nodes connected to IDCs worldwide, forming a data transmission capacity of 12.5Tib/s.

GaGaNode mainly integrates residential and personal device idle bandwidth, providing edge computing assistance.

IPCola is Meson's monetization channel, handling tasks such as IP and bandwidth allocation.

Meson has revealed that its revenue exceeded one million dollars over six months. According to the official website, Meson has 27,116 IDC nodes and an IDC capacity of 17.7TB/s.

Meson is expected to issue tokens in March-April 2024, and its token economics have already been disclosed.

Token name: MSN, initial supply of 100 million tokens, first-year mining inflation rate of 5%, decreasing by 0.5% annually.
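The supply schedule implied by these parameters can be projected with a short loop. The sketch below makes two assumptions that the text does not confirm: that "decreasing by 0.5% annually" means the rate falls by 0.5 percentage points each year (5%, 4.5%, 4%, ...), and that inflation compounds on the current supply. The function name is illustrative.

```python
def msn_supply_schedule(
    initial_supply: float = 100_000_000,
    first_year_rate: float = 0.05,
    yearly_decrease: float = 0.005,
    years: int = 5,
) -> list[tuple[int, float, float]]:
    """Return (year, inflation rate, supply at year end) for each year."""
    schedule = []
    supply = initial_supply
    rate = first_year_rate
    for year in range(1, years + 1):
        rate = max(rate, 0.0)       # assumption: inflation never goes negative
        supply *= 1 + rate          # assumption: compounds on current supply
        schedule.append((year, rate, supply))
        rate -= yearly_decrease     # assumption: drop of 0.5 percentage points
    return schedule


if __name__ == "__main__":
    for year, rate, supply in msn_supply_schedule():
        print(f"Year {year}: rate {rate:.1%}, supply {supply:,.0f}")
```

Under this reading, inflation would reach zero after ten years; a different interpretation of the decrease would change the long-run supply.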

Network3: Integrated with Sei Network

Network3 is an AI company that has built a dedicated AI Layer 2 and integrated with Sei. By optimizing and compressing AI model algorithms, edge computing, and privacy computing, it provides services to AI developers globally, helping developers to train and verify models on a large scale quickly, conveniently, and efficiently.

Currently, Network3 has over 58,000 active nodes, providing 2PB of bandwidth services. It has partnered with 10 blockchain ecosystems, including Alchemy Pay, ETHSign, and IoTeX.

2.3 Data

Unlike computing power and bandwidth, data supply on the current market is relatively niche and has a distinct professionalism. The demand group is usually the project itself or AI model development teams in related categories, such as Hivemapper.

Feeding a project's own data into its own map model is a logically straightforward paradigm. We can therefore broaden the scope to include DePIN projects similar to Hivemapper, such as DIMO, Natix, and FrodoBots.

Hivemapper: Empowering Map AI Products

Hivemapper is one of the top DePIN projects on Solana, dedicated to creating a decentralized "Google Maps." Users can earn HONEY tokens by purchasing Hivemapper's dashcams and sharing real-time images with Hivemapper.

Future Money Group has provided a detailed description of Hivemapper in the article "FMG Research Report: 19x Increase in 30 Days, Understanding Car DePIN Business Models Represented by Hivemapper," so it will not be further elaborated here. Hivemapper is included in the AI+DePIN sector because it has introduced MAP AI, an AI map-making engine that can generate high-quality map data based on data collected from dashcams.

Map AI introduces a new role, the AI trainer, which encompasses the previous dashcam data contributors and Map AI model trainers.

Hivemapper does not deliberately require specialized AI model trainers. Instead, it keeps the participation threshold low with game-like remote tasks, such as guessing geographical locations, so that more IP addresses can take part. The richer a DePIN project's IP resources, the more efficiently its AI can obtain data. Users who participate in AI training also receive rewards in HONEY tokens.

The application scenarios of AI in Hivemapper are relatively niche, and Hivemapper does not support third-party model training. The purpose of Map AI is to optimize its own map products. Therefore, the investment logic for Hivemapper will not change.

Potential

DIMO: Collecting Data from Inside Cars

DIMO is a car IoT platform built on Polygon, allowing drivers to collect and share their vehicle data, including mileage, driving speed, location tracking, tire pressure, battery/engine health, etc.

By analyzing vehicle data, the DIMO platform can predict when maintenance is needed and promptly alert users. Drivers can not only gain in-depth knowledge about their vehicles but also contribute data to DIMO's ecosystem to receive DIMO tokens as rewards. Data consumers can extract data from the protocol to understand the performance of components such as batteries, autonomous driving systems, and controls.

Natix: Privacy-Empowered Map Data Collection

Natix is a decentralized network built using AI privacy patents. It aims to combine global camera devices (smartphones, drones, cars) based on AI privacy patents to create a secure camera network while collecting data under privacy compliance and populating decentralized dynamic maps (DDMap).

Users participating in data provision can receive tokens and NFTs as incentives.

FrodoBots: Decentralized Network Application Using Robots as Carriers

FrodoBots is a DePIN-like game with mobile robots as carriers that collect impact data through cameras and have a certain social attribute.

Users can participate in the game by purchasing robots and interacting with players worldwide. The cameras on the robots also collect and aggregate road and map data.

These three projects all involve data collection and IP provision. Although they have not yet conducted AI model training, they provide the necessary conditions for introducing AI models. Like Hivemapper, they collect data through cameras to build comprehensive maps, so the AI models suited to them are mainly limited to the field of map construction. Adding AI models will help these projects establish stronger competitive advantages.

It is important to note that data collection through cameras often runs into regulatory issues related to privacy, such as how pedestrians' right to privacy is defined when external cameras capture images, as well as users' concerns about their own privacy. Natix, for example, uses AI to protect privacy.

2.4 Algorithms

While computing power, bandwidth, and data focus on resource allocation, algorithms focus on AI models. Taking BitTensor as an example, BitTensor does not directly contribute data or computing power but uses blockchain networks and incentive mechanisms to schedule and select different algorithms, creating a free competition and knowledge-sharing model market in the AI field.

BitTensor aims to achieve inference performance matching that of traditional model giants such as OpenAI while keeping its models decentralized.

The algorithm track has a certain forward-looking nature, and similar projects are not common. As AI models, especially those born on Web3, emerge, competition between models will become normalized.

At the same time, competition between models will increase the importance of downstream AI model industries, such as inference and fine-tuning. AI model training is just the upstream of the AI industry. A model needs to be trained first to have initial intelligence and then undergo more detailed model inference and adjustments (understood as optimization) based on this foundation before it can be deployed at the edge as a finished product. These processes require a more complex ecosystem architecture and computing power support, indicating significant potential for development.

BitTensor: AI Model Oracle

BitTensor is a decentralized machine learning ecosystem with a structure similar to the Polkadot main network + subnets.

Working logic: Subnets transmit activity information to the Bittensor API (acting as an oracle), which then forwards useful information to the main network, which then distributes rewards.

BitTensor ecosystem roles:

Miners: Providers of various AI algorithms and models worldwide, hosting AI models and providing them to the Bittensor network; different types of models form different subnets.

Validators: Evaluators within the Bittensor network. They assess the quality and effectiveness of AI models, rank AI models based on performance for specific tasks, and help consumers find the best solutions.

Users: Final users of AI models provided by Bittensor. They can be individuals or developers seeking to use AI models for applications.

Nominators: Delegate tokens to specific validators to show support, and can switch their delegation to different validators.

The open AI supply chain: Some provide different models, some evaluate different models, and some use the results provided by the best models.

Unlike Akash and Render, which act as "computing power intermediaries," BitTensor is more like a "labor market," using existing models to absorb more data to make the models more reasonable. Miners and validators play the roles of "contractors" and "supervisors." Users pose questions, miners provide answers, and validators evaluate the quality of the answers before they are returned to the users.
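The question, answer, and evaluation loop can be sketched as follows: validators score each miner's answer, the scores are averaged, and the best-ranked answer is returned to the user. A plain average is used here purely for illustration; it is not BitTensor's actual consensus mechanism, and all names and scores are hypothetical.

```python
from collections import defaultdict
from typing import Optional


def rank_miners(scores_by_validator: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Average each miner's score across validators and sort best-first."""
    totals: dict[str, float] = defaultdict(float)
    counts: dict[str, int] = defaultdict(int)
    for scores in scores_by_validator.values():
        for miner, score in scores.items():
            totals[miner] += score
            counts[miner] += 1
    averages = {miner: totals[miner] / counts[miner] for miner in totals}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


def answer_for_user(answers: dict[str, str], ranking: list[tuple[str, float]]) -> Optional[str]:
    """Return the answer from the highest-ranked miner that responded."""
    for miner, _score in ranking:
        if miner in answers:
            return answers[miner]
    return None


if __name__ == "__main__":
    answers = {"miner-1": "answer A", "miner-2": "answer B"}
    scores = {
        "validator-1": {"miner-1": 0.9, "miner-2": 0.4},
        "validator-2": {"miner-1": 0.7, "miner-2": 0.6},
    }
    ranking = rank_miners(scores)
    print(ranking)
    print(answer_for_user(answers, ranking))
```

In a live network the same ranking would also drive reward distribution, so miners compete on answer quality rather than on raw hardware.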

The BitTensor token is TAO. TAO's market value is currently second only to RNDR, but due to the existence of a long-term halving release mechanism over 4 years, the ratio of market value to fully diluted value is among the lowest of several projects, indicating that the overall circulation of TAO is relatively low at present, but the unit price is high. This means that the actual value of TAO is undervalued.

It is currently difficult to find suitable valuation targets. If we consider the similarity in architecture, Polkadot (about $12 billion) can be used as a reference, indicating nearly an 8-fold increase in potential for TAO.

If we consider the "oracle" attribute, Chainlink (about $14 billion) can be used as a reference, indicating nearly a 9-fold increase in potential for TAO.

If we consider business similarity, OpenAI (valued at about $30 billion, with Microsoft as a major investor) can be used as a reference, indicating a potential increase of around 20 times for TAO.
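The three comparisons can be cross-checked by dividing each reference valuation by its stated upside multiple, which gives the TAO valuation each one implicitly assumes. The sketch uses only the figures quoted above; the labels and function name are illustrative.

```python
# (reference valuation in USD, stated upside multiple for TAO)
REFERENCE_CASES = {
    "Polkadot (architecture)": (12e9, 8),
    "Chainlink (oracle)": (14e9, 9),
    "OpenAI (business)": (30e9, 20),
}


def implied_current_value(reference_value_usd: float, upside_multiple: float) -> float:
    """Valuation implied today if the reference is `upside_multiple` times larger."""
    if upside_multiple <= 0:
        raise ValueError("upside multiple must be positive")
    return reference_value_usd / upside_multiple


if __name__ == "__main__":
    for label, (value, multiple) in REFERENCE_CASES.items():
        implied = implied_current_value(value, multiple)
        print(f"{label}: implied TAO valuation ${implied / 1e9:.2f}B")
```

All three cases imply a starting valuation of roughly $1.5 to $1.6 billion, so the multiples are consistent with one another even though the reference points differ widely.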

Conclusion

Overall, AI+DePIN is driving a paradigm shift in the AI track under the Web3 context, moving the market beyond the inherent thinking of "What can AI do in Web3?" to consider the larger question of "What can AI and Web3 bring to the world?"

If, as NVIDIA CEO Jensen Huang put it, the release of generative large models is the "iPhone" moment of AI, then the combination of AI and DePIN signifies the true "iPhone" moment for Web3.

As the most easily accepted and mature use case of Web3 in the real world, DePIN is making Web3 more acceptable.

Due to the partial overlap between IP nodes in AI+DePIN projects and Web3 players, their combination is also helping the industry to give birth to its own models and AI products for Web3. This will benefit the overall development of the Web3 industry and pave the way for new tracks in the industry, such as inference and fine-tuning of AI models, as well as the development of mobile AI models.

An interesting point is that the AI+DePIN products listed in the article seem to fit into the development path of public chains. In previous cycles, various new public chains emerged, attracting developers to settle in with their own TPS and governance methods.

The current AI+DePIN products are similar in this regard, attracting various AI model developers based on their own computing power, bandwidth, data, and IP advantages. Therefore, we currently see a trend of homogenized competition in AI+DePIN products.

The key issue is not the amount of computing power (although this is a very important prerequisite), but how to use this computing power. The current AI+DePIN track is still in the early stages of "wild growth," so we can have high expectations for the future pattern and form of AI+DePIN products.
