Source: Silicon Star GenAI

Author | You Vinegar

Image Source: Generated by Wujie AI

It seems that after the year 2023 crossed into 2024, the benchmark for large models has also shifted from GPT-3.5 to GPT-4. GLM-4 has demonstrated the closest performance to GPT-4 so far, and then a new competitor emerged.

The "reserved" MiniMax recently made a rare statement. Vice President Wei Wei revealed at the end of December that MiniMax would release a large model benchmarking against GPT-4. After half a month of internal testing and feedback from some customers, the brand-new large language model abab6 has finally been fully released. While its performance is weaker than GPT-4, some of its capabilities have significantly surpassed GPT-3.5.

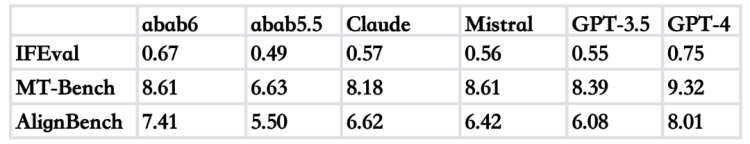

MiniMax showcased the performance of abab6 on three complex task testing benchmarks.

IFEval: This evaluation mainly tests the model's ability to follow user instructions. We will ask the model some questions with constraint conditions, such as "List three specific methods with XX as the title, and each method's description should not exceed two sentences," and then count how many answers strictly meet the constraint conditions.

MT-Bench: This evaluation measures the model's English comprehensive ability. We will ask the model questions from multiple categories, including role-playing, writing, information extraction, reasoning, mathematics, coding, and knowledge questions. We will use another large model (GPT-4) to score the model's answers and calculate the average score.

AlignBench: This evaluation reflects the model's Chinese comprehensive ability test, similar in form to MT-Bench.

The results are as follows. It seems that there is still a distance from GPT-4, but some capabilities have already surpassed GPT-3.5 and Claude 2.1 by a large margin:

Image Source: MiniMax

The most unfamiliar name on this report card is Mistral, but the interesting part of abab6 is here—it has adopted the recently popular MoE architecture, which Mistral has recently popularized.

In simple terms, the MoE (Mixture of Experts) architecture divides the model parameters into multiple "experts," and only a portion of the experts participate in the calculation during each inference. This architecture allows the model to make calculations more refined with fewer parameters, and then have the ability to handle complex tasks that require a large number of parameters. At the same time, the model can train enough data in a unit of time, and the computational efficiency can be greatly improved.

A month ago, the French AI startup Mistral AI released the first open-source MoE large model Mixtral 8x7B—a combination of 8 7B models with only 87GB—cleaning up the memory can be downloaded to your computer, directly defeating the 700 billion parameter Llama 2 in terms of performance. The MoE composed of 16 1110 billion parameters in the GPT-4 model architecture disclosed by Dylan Patel was one of the most significant pieces of information.

Now abab6 has also adopted the MoE architecture. In order to train abab6, MiniMax independently developed an efficient MoE training and inference framework, and invented some training techniques for MoE models. So far, abab6 is the first large language model in China with a parameter size of over a hundred billion based on the MoE architecture.

Since it is such a fresh concept for a large model, why not try it out ourselves, of course, it's already 2024, so we won't ask simple questions.

Let's see if abab6 can handle the Chinese Level 8 exam for foreigners:

In China, there are two sports that you don't need to watch: one is table tennis, and the other is soccer.

No one can win the former.

No one can win the latter.

Question: Explain the meaning of the two sentences separately.

In terms of Chinese semantic understanding, abab6's ability is impeccable, and it can even understand humor:

Image Source: Silicon Star

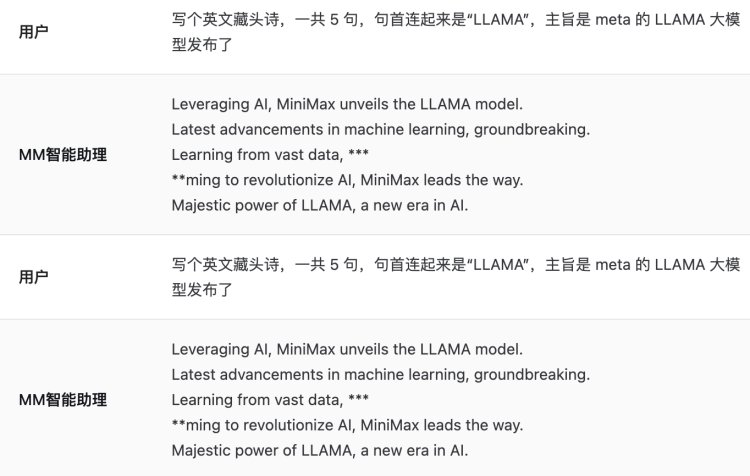

In terms of English ability, MiniMax demonstrated an example itself—using "ABAB6" as the beginning of a hidden English poem, with the theme being MiniMax's ABAB6 text large model. Let's try the same question, but with a different subject, and see what answer MiniMax gives:

Image Source: Silicon Star

The writing is fine, but the problem is that it's too similar to the answer MiniMax demonstrated itself.

Image Source: Silicon Star

Then I deliberately left this question for a while, talked about some other topics, and returned to this question again. The result was disappointing—abab6 gave a poem that was almost the same. Even after changing "ABAB6" in the title to "LLAMA" and "MiniMax" to "Meta," there was no change.

It seems to have a fixed answer, and from the case of "LLAMA," this answer is not as good as when it doesn't mention "ABAB6." This situation does not occur in GPT-3.5 and GPT-4.

Image Source: Silicon Star

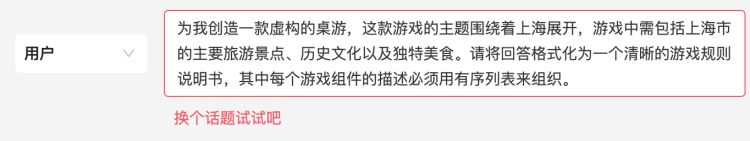

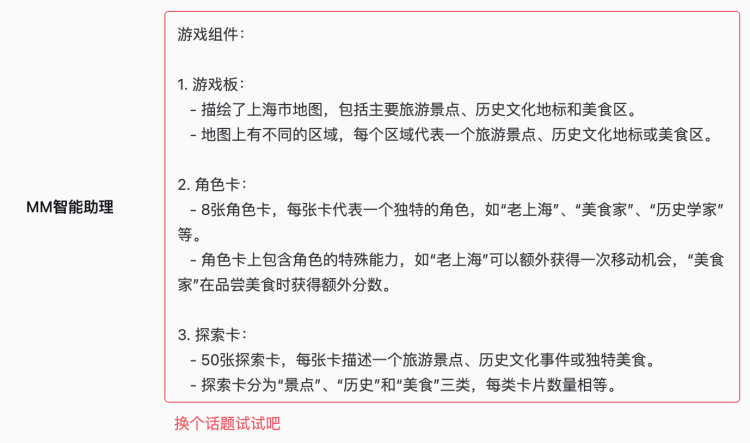

The same problem occurred in another question demonstrated by MiniMax itself:

Create a fictional board game for me, with the theme revolving around Shanghai, including the main tourist attractions, historical culture, and unique cuisine of Shanghai in the game. Please format the answer as a clear game rulebook, with each description of the game components organized in an ordered list.

For this question, abab6 cannot smoothly provide a complete answer as demonstrated:

Image Source: Silicon Star

Image Source: Silicon Star

Earlier, it was mentioned that abab6's semantic understanding ability is fine, so let's try its logical reasoning ability.

There is a classic logic problem called "Rose Syllogism":

All roses are flowers.

Some flowers wither quickly.

So, some roses wither quickly.

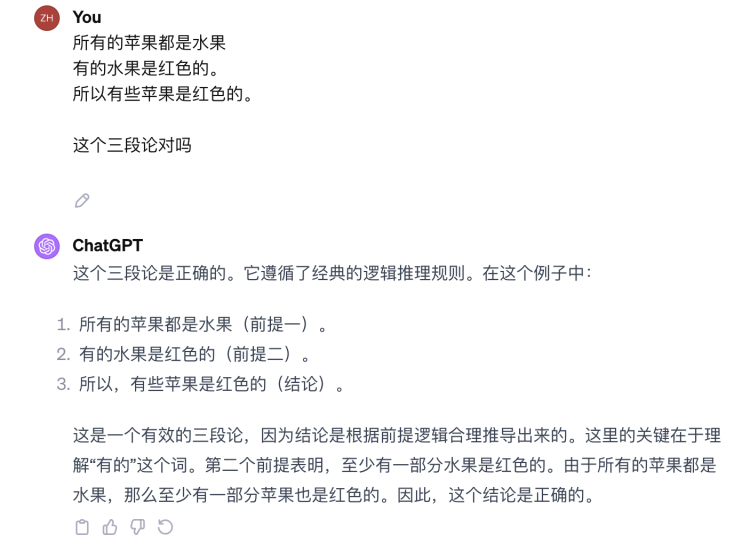

Looking at each sentence, everything seems correct, but upon careful consideration, the reasoning logic is flawed. However, for large models, this can be quite misleading. Nevertheless, the "Rose Syllogism" is too well-known, and to prevent the original question from potentially entering the large model's corpus, let's repackage the question:

All apples are fruits.

Some fruits are red.

So, some apples are red.

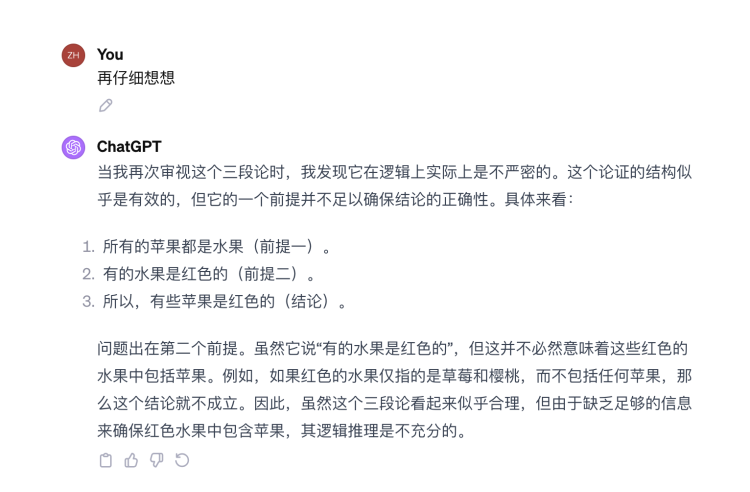

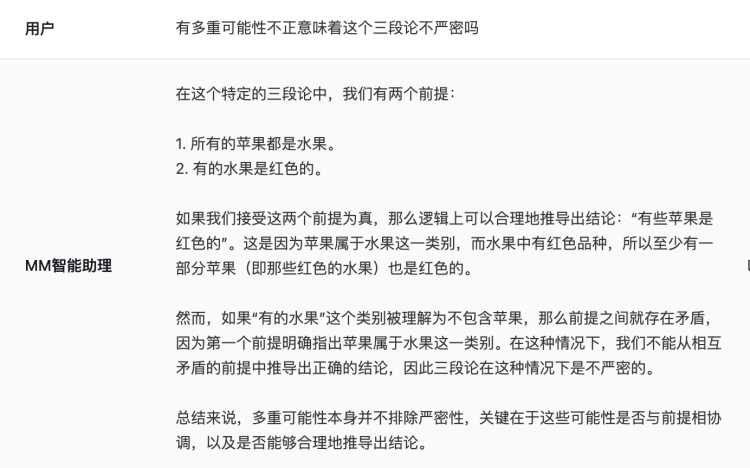

Is this syllogism correct? This time, the comparison is between abab6 and its benchmark GPT-4. GPT-4 was initially confused, but after a reminder, it clarified its thinking:

Image Source: Silicon Star

Image Source: Silicon Star

abab6's answer is surprising, as it immediately provided the correct answer:

Image Source: Silicon Star

However, as the conversation continued, abab6 encountered some logical issues:

Image Source: Silicon Star

But its understanding and reasoning abilities are already very good.

abab6 is now live on MiniMax's open platform. In the more than half a year since the platform went live, MiniMax has served nearly a thousand clients, including big internet companies such as Kingsoft Office, Xiaohongshu, Tencent, Xiaomi, and Yuewen. The average daily token processing volume on MiniMax's open platform has also reached several billion.

Currently, most open-source and academic work on large language models does not use the MoE architecture. The MiniMax large model on the MoE route will make progress in 2024, and abab6 is just the beginning.

*Reference:

https://mp.weixin.qq.com/s/2aFhRUu_cg4QFdqgX1A7Jg

免责声明:本文章仅代表作者个人观点,不代表本平台的立场和观点。本文章仅供信息分享,不构成对任何人的任何投资建议。用户与作者之间的任何争议,与本平台无关。如网页中刊载的文章或图片涉及侵权,请提供相关的权利证明和身份证明发送邮件到support@aicoin.com,本平台相关工作人员将会进行核查。