Article Source: Quantum Bit

Image Source: Generated by Wujie AI

A special International Mathematical Olympiad (IMO) just for AI has arrived, with a whopping prize pool of $10 million!

The organizers bill the competition as "a new Turing test." How does it measure up?

AI will go head-to-head with the world's brightest young math prodigies and be held to the same gold-medal standard.

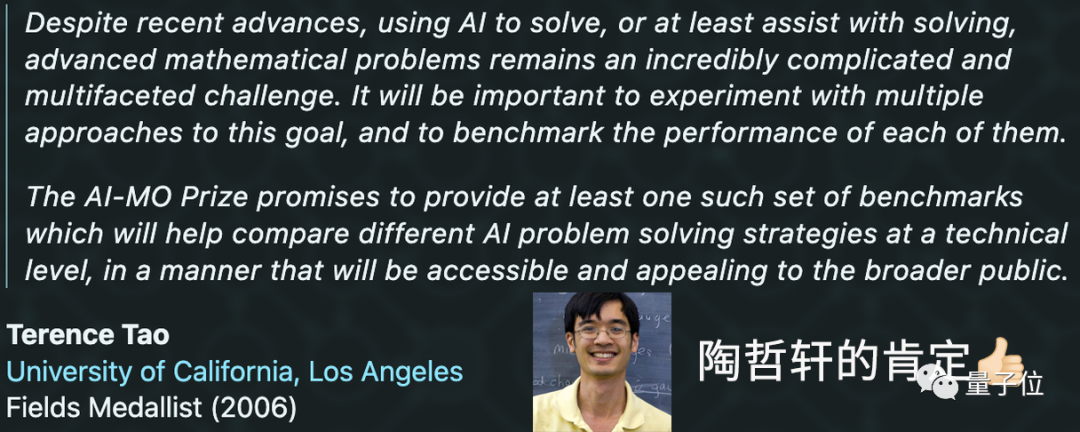

Don't underestimate this competition. Even math genius Terence Tao is on board, offering a strong endorsement on the official website:

This competition provides a set of benchmarks for distinguishing between AI problem-solving strategies, which is exactly what we need right now.

With the news out, netizens are quite excited.

As the IMO chairman put it: which large model can match the world's smartest young people?

As the saying goes, "a heavy reward brings out the brave," and it will be interesting to see what approaches AI comes up with.

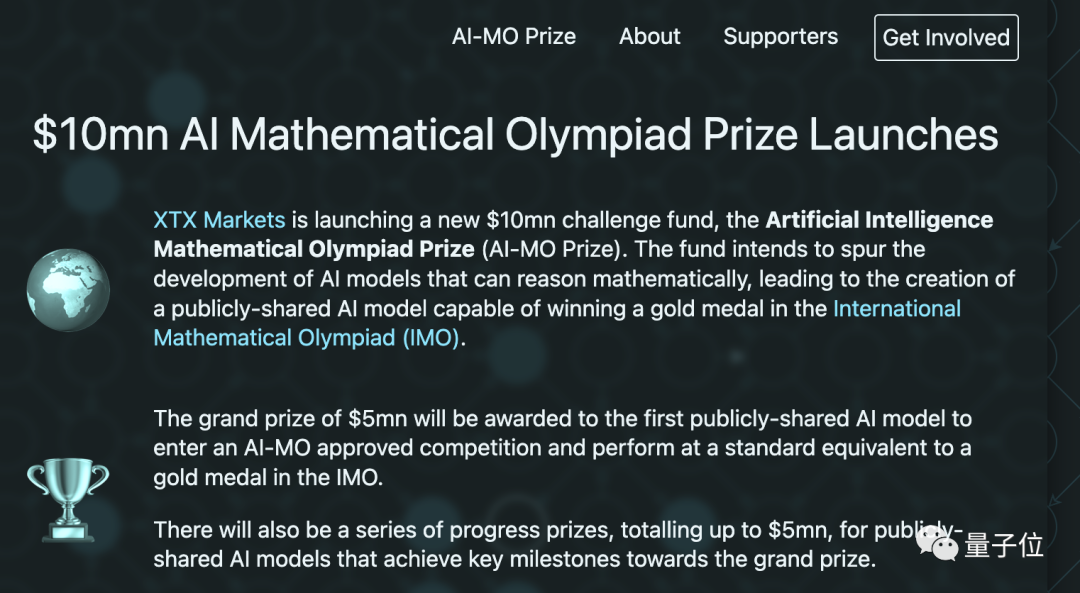

AI Participates in IMO, with a Top Prize of $5 Million

This competition is abbreviated as AI-MO.

Its goal is to advance the mathematical reasoning ability of large language models and to encourage the development of new AI models that can match the highest level of human mathematical performance, as measured by the IMO.

Why choose IMO as the benchmark?

IMO problems generally fall into four major categories: algebra, geometry, number theory, and combinatorics. They do not require advanced mathematical knowledge, but they do demand sound reasoning and genuine mathematical insight.

Statistics show that an IMO gold medalist is 50 times more likely to win a Fields Medal than a typical Cambridge PhD graduate.

In addition, half of all Fields Medal winners have competed in the IMO.

Building on the IMO, the AI-MO competition, designed specifically for AI, will open in early 2024.

The organizing committee requires participating AI models to receive problems in the same format as human contestants and to produce final answers that humans can read. These answers will then be graded by an expert panel using IMO standards.

The results will be announced at the 65th IMO, to be held in Bath, England, in July 2024.

In the end, an AI model that reaches gold-medal level will receive a grand prize of $5 million.

Other AI models that "achieve key milestones" will share progress prizes totaling $5 million.

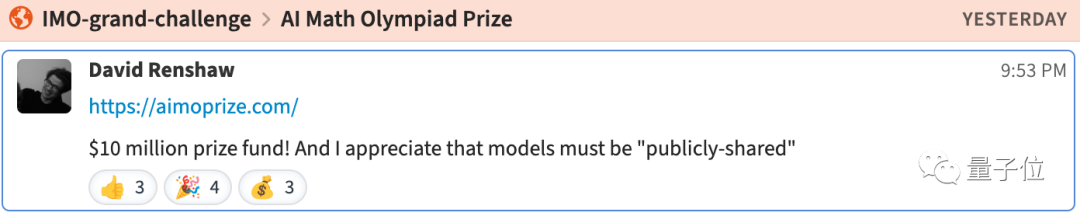

It is worth mentioning that in order to qualify for the prize, participants must adhere to the AI-MO public sharing agreement, which means that the award-winning model must be open source.

The organizing committee is still working out the specific rules. The organizers are currently recruiting members for an advisory committee, with mathematicians and AI/machine learning experts especially needed, as well as a director to lead the competition. Both positions are paid and can be fully remote. It remains to be seen which big names will join.

However, it is important to note that AI-MO is not an official competition initiated by IMO.

The true initiator of this competition is XTX Markets, a non-bank financial institution based in London, UK, engaged in machine learning quantitative trading.

XTX Markets has shown considerable ambition in this area.

Last year, it also partnered with the University of Oxford to establish a scholarship that encourages female students to study mathematics.

As for the competition itself, some netizens have started to speculate: which AI model has the most potential?

GPT-4 with the Wolfram plugin was the first to be mentioned, but it was also the first to have cold water poured on it.

Still, OpenAI, the company behind it, remains a favorite, even though large tech companies are not the target audience of this competition.

Some pessimistic netizens directly assert:

The competition is cool, but nobody will pull it off within five years.

At the same time, some people also believe:

Training such a model is not the hard part. The difficulty lies in obtaining and processing the data, since these problems involve not only text but also images and symbols with complex meanings.

All will be revealed in 2024.

It is worth mentioning that AI-MO is not the first AI challenge to IMO.

In 2019, researchers from OpenAI, Microsoft, Stanford University, Google, and other institutions launched a competition called the IMO Grand Challenge.

No One Has Successfully Met the Previous Challenge

The IMO Grand Challenge was also established to find AI capable of winning an IMO gold medal.

Let's take a look at the 5 rules set for AI in this mathematical competition:

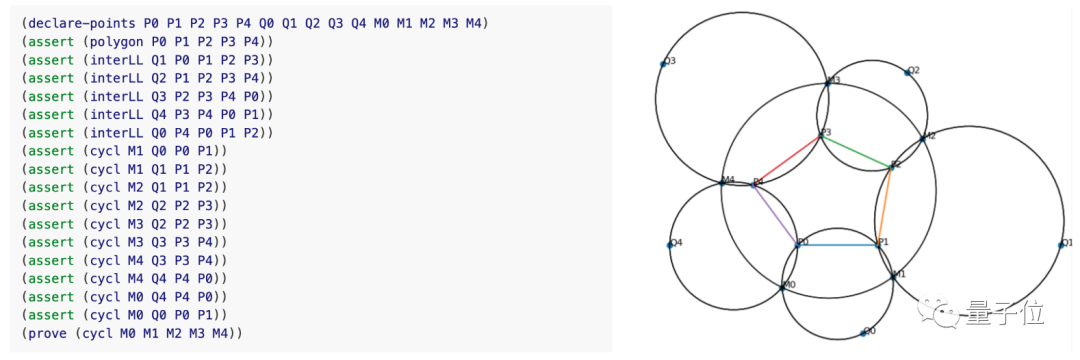

Regarding format. To ensure that proofs are rigorous and verifiable, both problems and proofs must be formalized (machine-verifiable).

In other words, IMO problems will be translated into formal statements in Lean, the language of the Lean theorem prover, and the AI must likewise write its proofs in Lean.
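For a sense of what "formalized" means, here is how a classic problem, IMO 1959 Problem 1 (the fraction (21n + 4)/(14n + 3) is irreducible for every natural number n), might be stated in Lean 4 using the Mathlib library. This is an illustrative sketch only. The exact statement format used by the IMO Grand Challenge is not described in this article, and the theorem name is arbitrary.

```lean
import Mathlib

/-- Illustrative formal statement of IMO 1959 Problem 1:
numerator and denominator of (21n + 4) / (14n + 3) are coprime. -/
theorem imo1959_q1 (n : ℕ) : Nat.gcd (21 * n + 4) (14 * n + 3) = 1 := by
  -- Human proof idea: 3 * (14n + 3) - 2 * (21n + 4) = 1,
  -- so any common divisor of the two numbers must divide 1.
  -- A competing AI would have to replace `sorry` with a proof that
  -- Lean checks; a proof that still contains `sorry` does not count.
  sorry
```

Lean accepts a statement like this and flags `sorry` as an unfinished proof, which is how a formal setting separates "stated" from "proved."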

Regarding scoring. Each proof the AI produces must be checkable for correctness within 10 minutes, roughly the time an IMO judge needs to grade a human solution. Unlike human contestants, the AI gets no partial credit.

Regarding resources. The AI has the same time limit as a human contestant: 4.5 hours per day for 3 problems, over two days of competition. There are no restrictions on computing resources.

Regarding reproducibility. The AI must be open source, released publicly before the first day of the IMO, and easily reproducible. It is not allowed to access the internet.

Regarding the challenge itself. The grand challenge is for an AI to earn a gold medal 🏅, just as top human contestants do.

This competition was initiated by 7 AI research scholars and mathematicians:

Daniel Selsam from OpenAI, Leonardo de Moura from Microsoft, Kevin Buzzard from Imperial College London, Reid Barton from the University of Pittsburgh, Percy Liang from Stanford University, Sarah Loos from Google AI, and Freek Wiedijk from Radboud University.

Four years on, the challenge has drawn a number of participants.

However, although many AI and mathematics researchers have taken on the challenge or a smaller goal within it, they remain far from the ultimate goal of winning an IMO gold medal.

There have even been suggestions to consider setting up a "simple mode" for this competition:

For example, researcher Xi Wang attempted to use several existing SMT solvers to solve real IMO problems, but with limited success.

At that time, existing AI could prove some less difficult IMO problems, such as proving Napoleon's theorem (if equilateral triangles are constructed on the sides of any triangle, the centers of those triangles form an equilateral triangle).
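To make the theorem's claim concrete, here is a small numerical spot check in Python. It is not a proof, and it is not the SMT-solver approach Xi Wang tried. The sketch places a triangle's vertices in the complex plane, erects an equilateral triangle on each side, and checks that the three centers are equidistant. The function names are illustrative.

```python
import cmath
import random


def napoleon_centers(a: complex, b: complex, c: complex) -> list[complex]:
    """Centers of the equilateral triangles erected on each side of triangle abc.

    Rotating a side vector by -60 degrees puts the new apex to the right of the
    directed side, i.e. outside the triangle when a, b, c are counterclockwise.
    """
    rot = cmath.exp(-1j * cmath.pi / 3)
    centers = []
    for p, q in ((a, b), (b, c), (c, a)):
        apex = p + (q - p) * rot
        # The center of an equilateral triangle is its centroid.
        centers.append((p + q + apex) / 3)
    return centers


def is_equilateral(z1: complex, z2: complex, z3: complex, tol: float = 1e-9) -> bool:
    """True if the three side lengths agree up to a relative tolerance."""
    sides = [abs(z1 - z2), abs(z2 - z3), abs(z3 - z1)]
    return max(sides) - min(sides) <= tol * max(sides)


if __name__ == "__main__":
    random.seed(0)
    for _ in range(1000):
        a, b, c = (complex(random.uniform(-10, 10), random.uniform(-10, 10))
                   for _ in range(3))
        assert is_equilateral(*napoleon_centers(a, b, c))
    print("Napoleon's theorem held on 1000 random triangles")
```

Complex numbers turn the 60° rotation into a single multiplication, which keeps the construction to a few lines. A check like this gives evidence, while a prover must produce an argument that covers every triangle.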

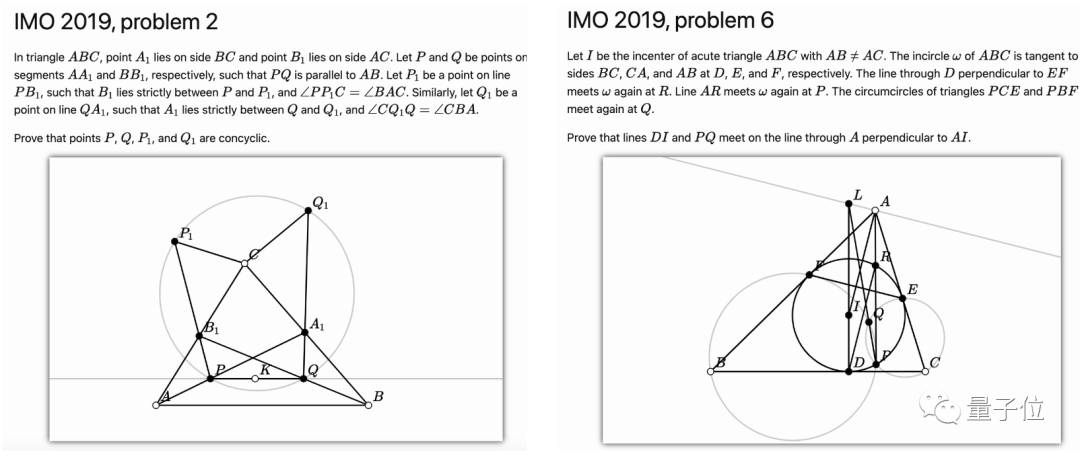

However, when it came to proving other problems, such as the geometry problem in IMO 2019, the existing solvers either could not solve them or took more than half an hour.

Similarly, OpenAI researchers Dan Selsam and Jesse Michael Han (then still at Microsoft) spent some time studying how AI could solve IMO geometry problems and summarized their findings in a blog post.

This blog post describes how they tinkered with a geometry solver and the steps involved in designing a geometry solver, including:

Geometry representation, constraint solving, algorithm selection, solver architecture, challenges, and solutions.

For example, geometry representation means encoding geometry problems in a format that computers can understand and process, and vice versa. This includes automatically rendering the formal representation as diagrams that humans can read.
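As a rough illustration of the representation idea, the Python sketch below uses a hypothetical construction language, not the format from Selsam and Han's blog post. Each step names a point and says how it is built from earlier points. A small interpreter then turns the symbolic description into coordinates, which is what a diagram renderer would need and which also allows a quick numerical check of a claim.

```python
from typing import Callable

Point = tuple[float, float]


def midpoint(p: Point, q: Point) -> Point:
    """Midpoint of segment pq."""
    return ((p[0] + q[0]) / 2, (p[1] + q[1]) / 2)


def intersect(p1: Point, p2: Point, p3: Point, p4: Point) -> Point:
    """Intersection of line p1p2 with line p3p4 (determinant formula)."""
    (x1, y1), (x2, y2), (x3, y3), (x4, y4) = p1, p2, p3, p4
    d = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
    if abs(d) < 1e-12:
        raise ValueError("lines are parallel")
    a = x1 * y2 - y1 * x2
    b = x3 * y4 - y3 * x4
    return ((a * (x3 - x4) - (x1 - x2) * b) / d,
            (a * (y3 - y4) - (y1 - y2) * b) / d)


# Hypothetical construction vocabulary: operation name -> function on points.
CONSTRUCTIONS: dict[str, Callable[..., Point]] = {
    "midpoint": midpoint,
    "intersect": intersect,
}


def realize(free: dict[str, Point],
            steps: list[tuple[str, str, list[str]]]) -> dict[str, Point]:
    """Turn a symbolic construction into concrete coordinates, step by step."""
    coords = dict(free)
    for name, op, args in steps:
        coords[name] = CONSTRUCTIONS[op](*(coords[arg] for arg in args))
    return coords


# Example: two medians of triangle ABC meet at G.
problem = [
    ("M", "midpoint", ["B", "C"]),
    ("N", "midpoint", ["C", "A"]),
    ("G", "intersect", ["A", "M", "B", "N"]),
]
coords = realize({"A": (0.0, 0.0), "B": (6.0, 0.0), "C": (1.0, 4.0)}, problem)
# G should coincide with the centroid (A + B + C) / 3 = (7/3, 4/3).
```

Keeping the problem as a list of named construction steps separates "what the problem says" from "where the points happen to be." A real solver would need a far richer vocabulary, such as circles, angles, and tangency.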

In addition, it also describes how to choose the appropriate solving algorithm for different types of IMO geometry problems, and so on.

However, even so, this blog post did not provide a specific solution, only concluding that "the solver may be able to achieve the goal of winning an IMO gold medal".

Furthermore, these challengers targeted only geometry, which is just one of the four IMO problem categories. The others are algebra, combinatorics, and number theory.

Four years have passed without a true AI "all-around IMO champion." Even so, the IMO Grand Challenge, which first proposed the idea, has made quite a stir in the industry.

Alex Gerko, founder of XTX Markets, acknowledged that the IMO Grand Challenge was also what spurred him to organize AI-MO:

It's time to give the "AI takes on the IMO" effort a little extra incentive!

Of course, the AI-MO prize money has also caught the attention of the IMO Grand Challenge organizers and many challengers.

Driven by money 💰, will the industry really produce an AI that can solve hard math problems and outperform a multitude of human contestants to win an IMO gold medal?

Based on the current capabilities, which AI do you think is most likely to take the lead?

