Source: NextQuestion

Author: Cloud Book

Image source: Generated by Unbounded AI

When playing games, players often notice that NPCs seem naive and easy to fool. Steal a merchant's money, and he may still thank you. NPCs also tend to repeat the same lines tirelessly, such as "I used to be an adventurer like you, then I took an arrow in the knee."

In research, such NPCs are known as believable agents. Believable agents are designed to provide the illusion of life, conveying a sense of realism through their decisions and autonomous actions [1].

As the gaming industry has developed, believable agents have gone through many iterations. Early titles such as "Mass Effect" and "The Sims" used rule-based methods, modeling NPC behavior with finite state machines and behavior trees. Later, learning-based methods, especially reinforcement learning, were used to build AI players for "StarCraft" and "Dota 2." NPC behavior has become increasingly intelligent and varied, and is no longer limited to pre-written scripts.

However, the space of human behavior is vast and complex.

Although these believable agents are adequate for current interaction needs and game content, they still seem rigid compared with the flexible, ever-changing behavior of real people.

In April 2023, a research team from Stanford and Google [4] proposed a bold idea: "Can we use large models to create an interactive artificial society that reflects believable human behavior?" The idea rests on their observation that large language models have learned a wide and diverse range of human behavior from their training data.

▷ Figure 1: Reference [4]. Image source: arXiv official website

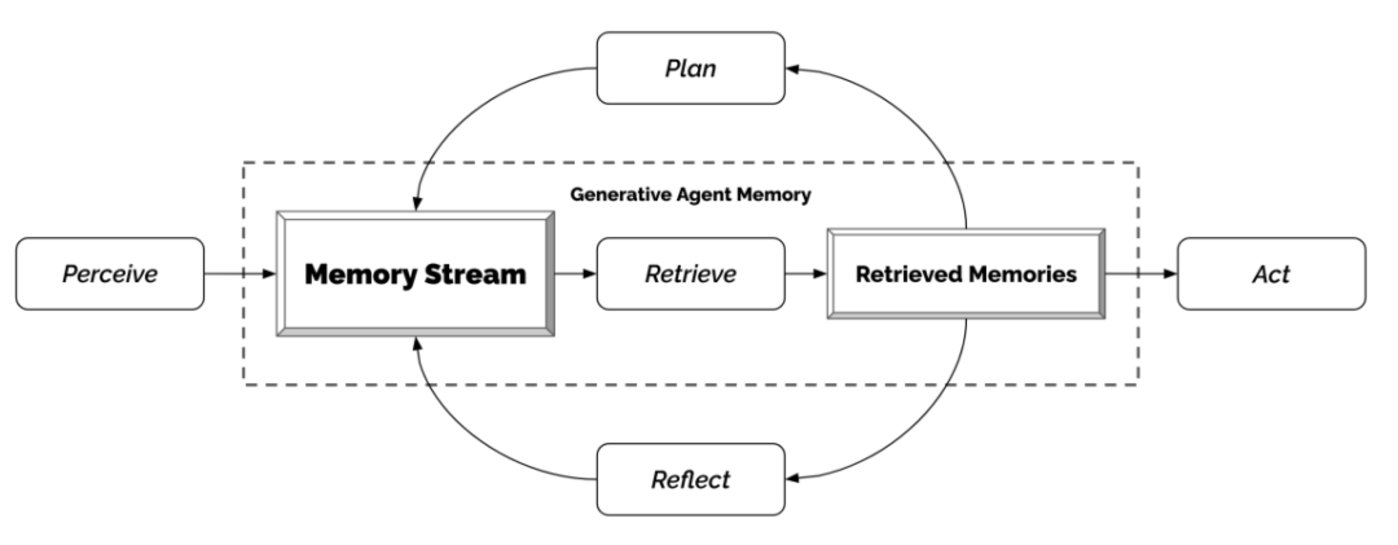

The researchers proposed an architecture for believable agents built on large models (the paper calls them generative agents). The architecture drives each agent by storing and retrieving "memories" and uses a "memory-reflection-planning" framework to mimic believable human behavior more closely.

Smallville—The Town Life of Artificial Agents

To demonstrate these large-model-based agents, the researchers built a small-town sandbox game, Smallville, and instantiated the agents as its residents.

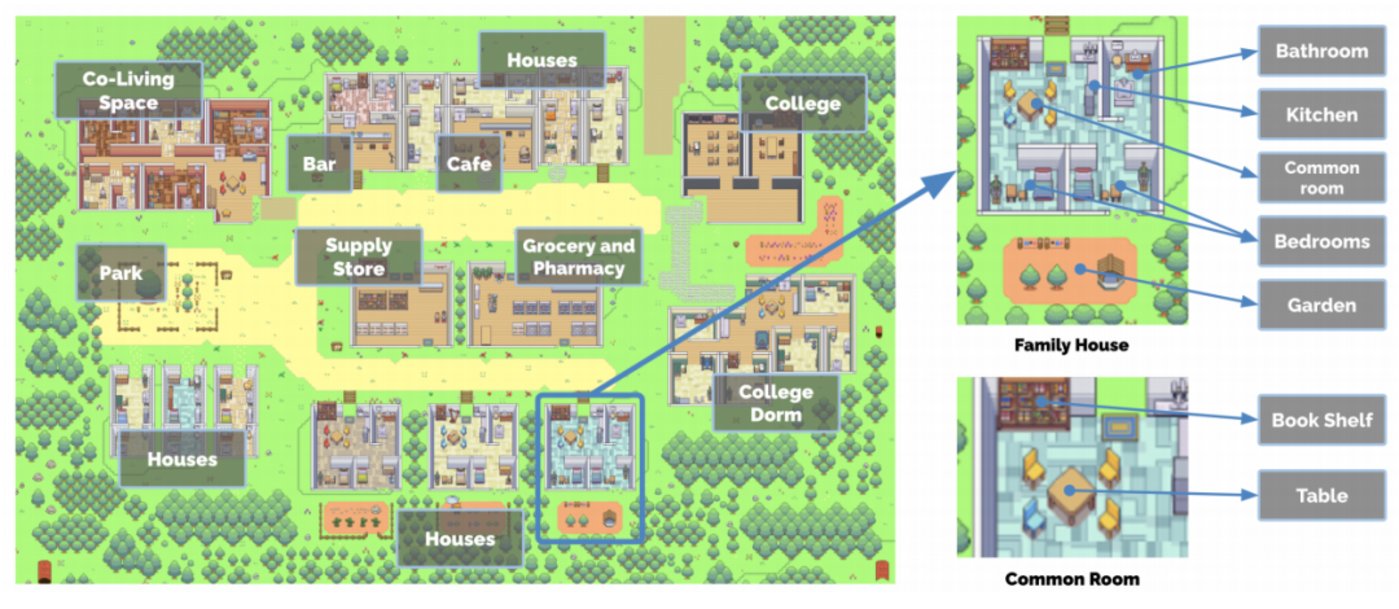

▷ Figure 2: Multi-level map of Smallville. Image source: Reference [4]

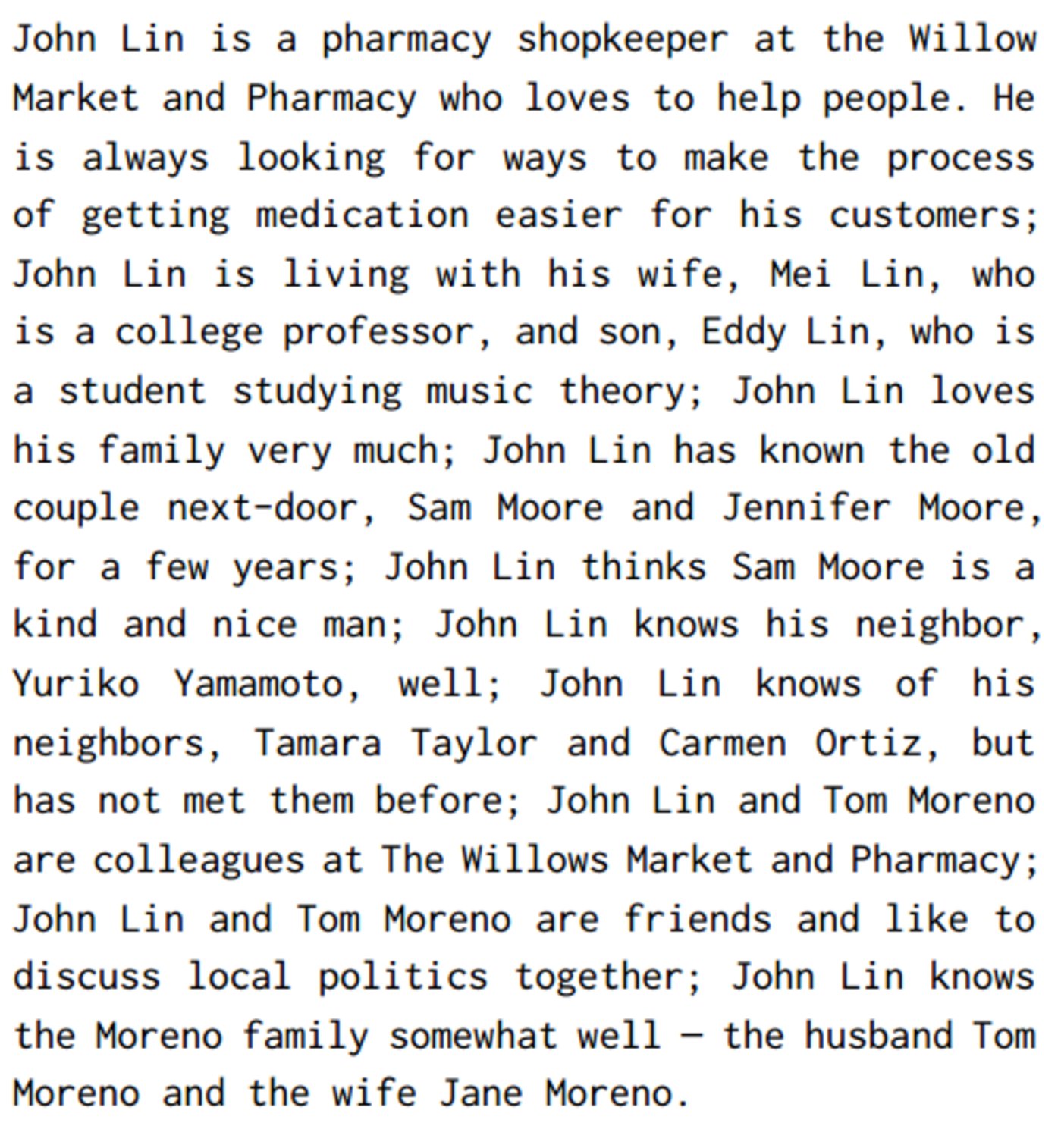

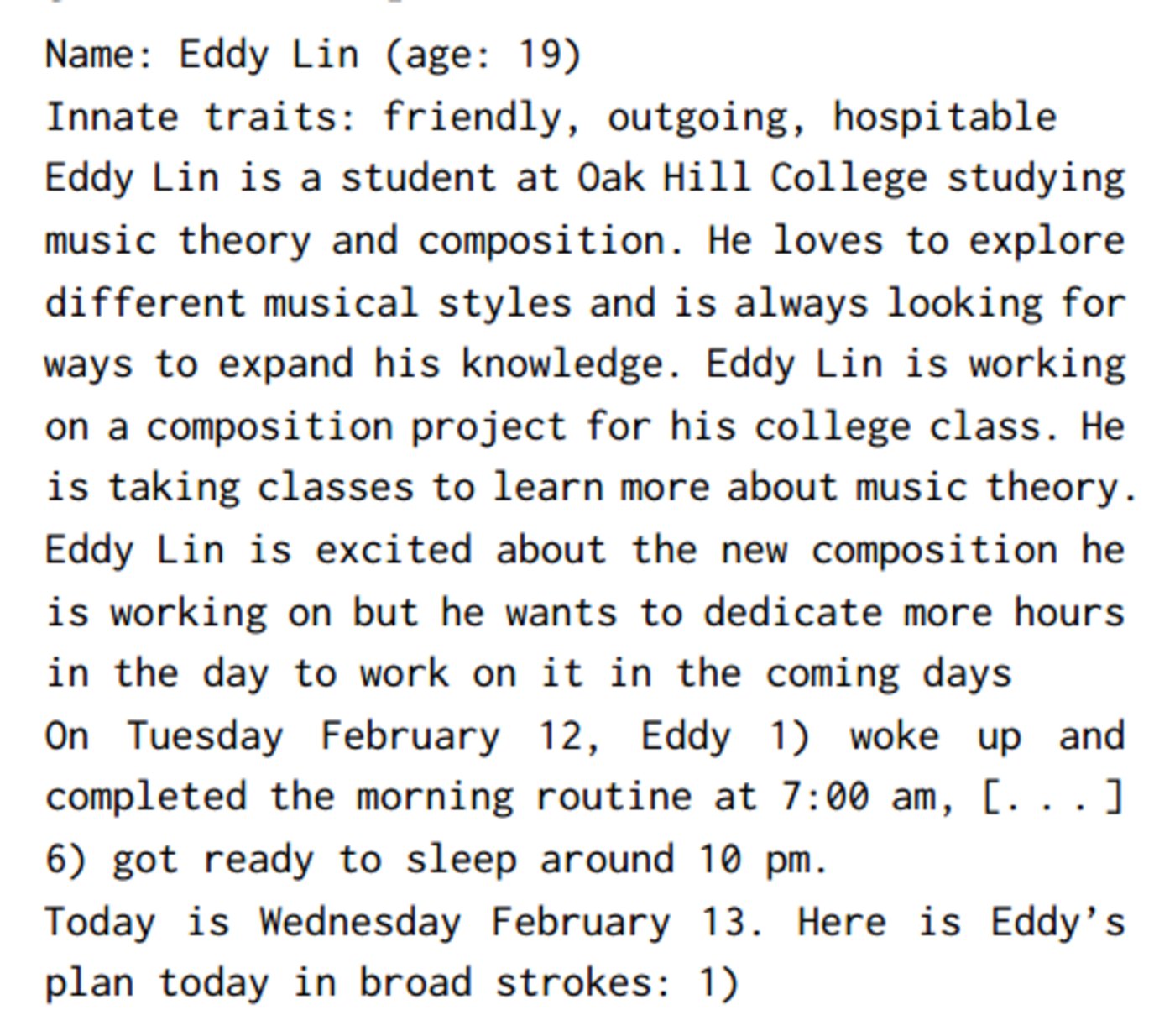

Smallville is a community of 25 agents, each with its own identity description covering its occupation and its relationships with other agents. These descriptions are given to the large model as each agent's initial memories.

▷ Figure 3: Lin's identity description (initial memory). Image source: Reference [4]

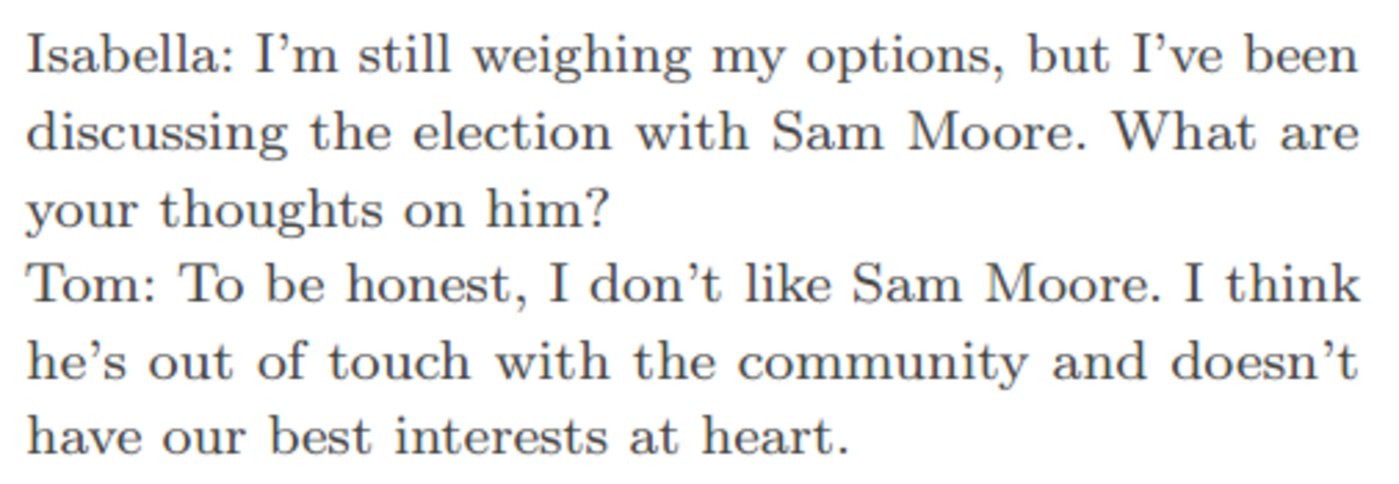

These agents interact with the environment and communicate with one another in natural language. At each time step of the sandbox engine, every agent outputs a natural-language description of its current action, such as "Isabella is writing in her journal," or engages in conversation, as shown in Figure 4.

Editor's note:

A time step is the interval between two consecutive time points. A simulation discretizes the whole process into many small steps, and the span of time each step covers is the time step. When simulating how a system evolves over time, the step size is usually fixed, and its choice depends on the properties of the system and the purpose of the model: a larger time step requires less computation, while a smaller one requires more computation but produces a finer, more detailed simulation.
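The trade-off in the editor's note can be made concrete with a fixed-step loop. This is a minimal sketch, not the Smallville engine: the `Agent` class, its `step` method (a hypothetical stand-in for the large-model call that describes the agent's current action), and the step sizes are all assumptions for illustration. Every tick costs one model call per agent, so shrinking the step multiplies the work.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Agent:
    name: str
    action: str = "idle"

    def step(self, now: datetime) -> str:
        # Hypothetical stand-in for a large-model call that returns a
        # natural-language description of what the agent is doing now.
        return f"{self.name} is {self.action} ({now:%H:%M:%S})"


def run(agents: list[Agent], start: datetime, duration: timedelta,
        dt: timedelta) -> list[str]:
    """Advance the sandbox clock in fixed increments of dt, logging every agent each tick."""
    log = []
    now, end = start, start + duration
    while now < end:
        for agent in agents:
            log.append(agent.step(now))
        now += dt
    return log


def ticks_needed(duration: timedelta, dt: timedelta) -> int:
    # Number of engine ticks; each tick triggers one model call per agent.
    return int(duration / dt)
```

For example, two game days need `ticks_needed(timedelta(days=2), timedelta(seconds=10))`, or 17,280 ticks, at a 10-second step, but only 2,880 at a one-minute step.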

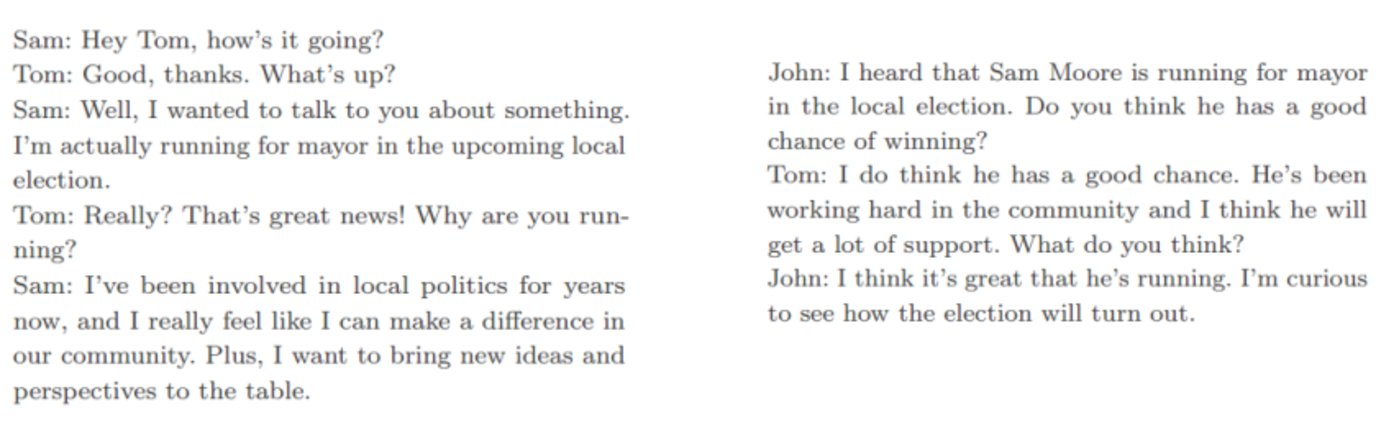

▷ Figure 4: Conversation between agents. Image source: Reference [4]

Smallville has many common facilities, including cafes, bars, parks, schools, dormitories, houses, and shops. The town also defines many functional rooms and objects, such as kitchens in houses and stoves in kitchens. Agents change the environment through their actions: for example, while an agent is sleeping, its bed is occupied. Agents also react to changes in the environment: if Isabella's shower is set to "leaking," she goes to the living room to get tools and tries to fix the leak.
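The two-way link between agents and objects can be sketched with a small state registry. This is a minimal illustration under stated assumptions: the `Environment` class, its `"area:object"` key scheme, and the `react` function are hypothetical, and `react` is a hard-coded stand-in for the large model, which in the real system decides how to respond to what the agent perceives.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Environment:
    # Hypothetical flat registry mapping "area:object" to that object's state.
    states: dict[str, str] = field(default_factory=dict)

    def set_state(self, key: str, state: str) -> None:
        # Agent actions (or a user) update object states.
        self.states[key] = state

    def perceive(self, area: str) -> list[str]:
        # What an agent standing in `area` observes, phrased in natural language.
        prefix = area + ":"
        return [f"{key[len(prefix):]} is {state}"
                for key, state in self.states.items() if key.startswith(prefix)]


def react(observations: list[str]) -> Optional[str]:
    # Hypothetical stand-in for the large model choosing a response to a change.
    if "shower is leaking" in observations:
        return "go to the living room to get tools and fix the leak"
    return None
```

Usage: after `env.set_state("bedroom:bed", "occupied")`, `env.perceive("bedroom")` returns `["bed is occupied"]`; setting `"bathroom:shower"` to `"leaking"` makes `react(env.perceive("bathroom"))` return the repair action.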

A resident's "day" begins with its identity description. As time passes in the sandbox world, each agent's behavior evolves through interactions with other agents and the environment: it forms memories and relationships, which in turn shape what it does next.

Interestingly, the researchers found that even without pre-programming, the agents in Smallville engage in spontaneous social behaviors, such as exchanging information, establishing new relationships, and collaborating in activities.

First, information spreads through conversations between agents over time. For example, Sam tells Tom that he is running for mayor (Figure 5, left), and one day Tom discusses Sam's chances of winning with John (Figure 5, right). Gradually, Sam's candidacy becomes a hot topic across the town, with some residents supporting him and others still undecided.

▷ Figure 5: Information spreading. Image source: Reference [4]

Second, agents form new relationships and remember their interactions with one another. For example, Sam does not know Latoya at first, but introduces himself when they meet by chance on a walk; the next time they meet, Sam asks about the photography project Latoya mentioned before.

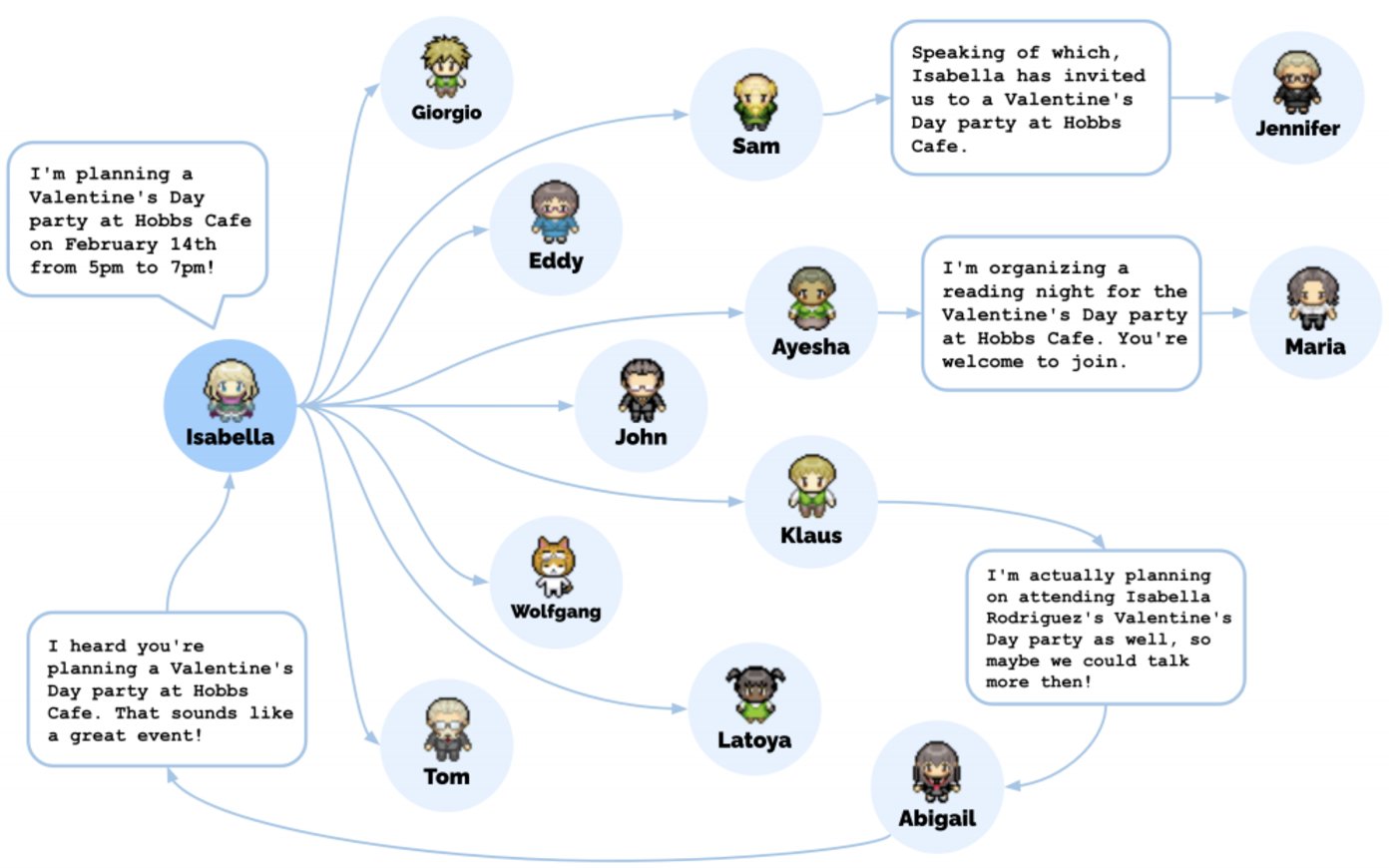

Finally, agents engage in complex collaboration. For example, Isabella wants to host a Valentine's Day party and asks her friend Maria to help. Maria invites Klaus, her crush, to the party, and on the day five agents, including Maria and Klaus, show up. The researchers seeded only Isabella's intention to host the party and Maria's crush on Klaus. Spreading the news, decorating, sending invitations, arriving at the party, and socializing there were all initiated by the agents themselves.

Architecture Design of Artificial Agents

The researchers' goal is a framework for agent behavior in an open world, one in which agents can interact with other agents and react to changes in the environment. An agent takes its current environment and past experiences as input, and the large model generates its behavior as output.

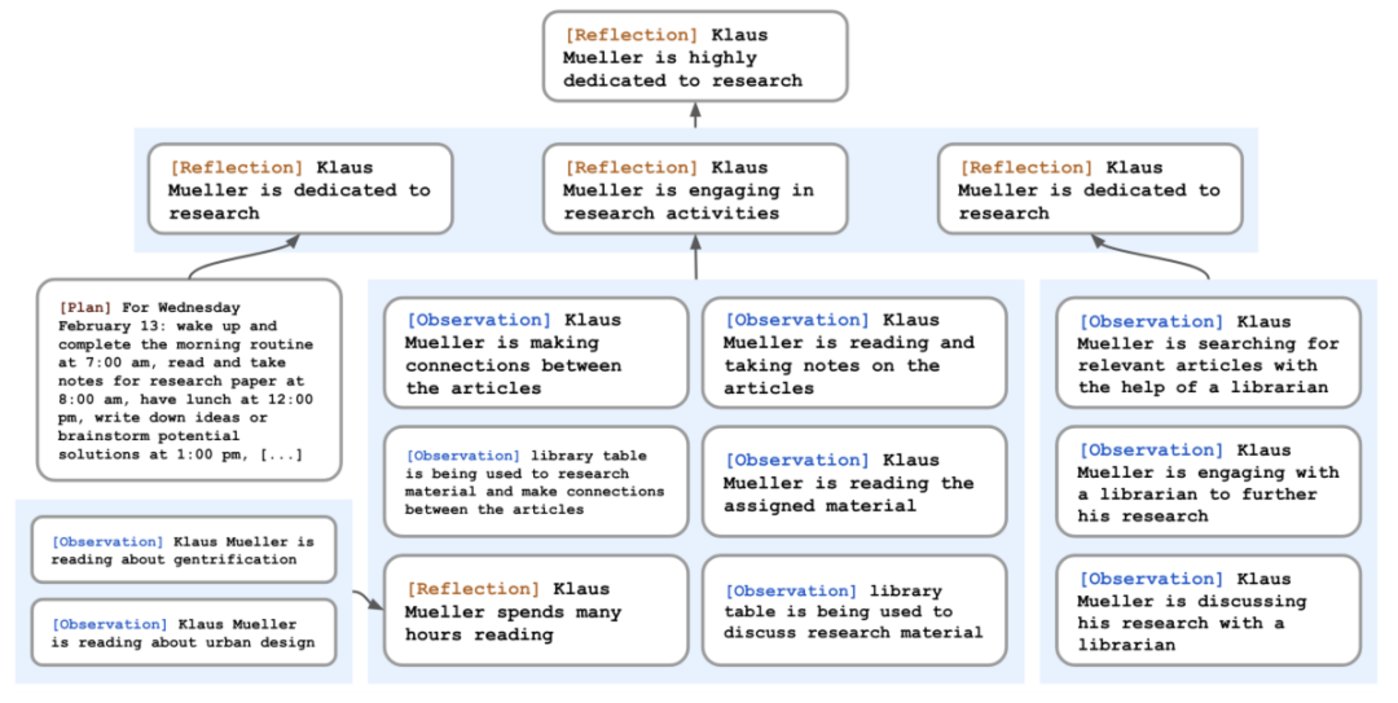

To keep agent behavior consistent over the long term, the researchers designed a "memory-reflection-planning" framework to guide agent actions. The architecture maintains a memory stream recording a large number of events, so that the most relevant parts of an agent's memory can be retrieved and synthesized when needed. These memories are recursively synthesized into higher-level reflections that guide the agent's behavior.

▷ Figure 6: Architecture of artificial agents. Image source: Reference [4]

(1) Memory

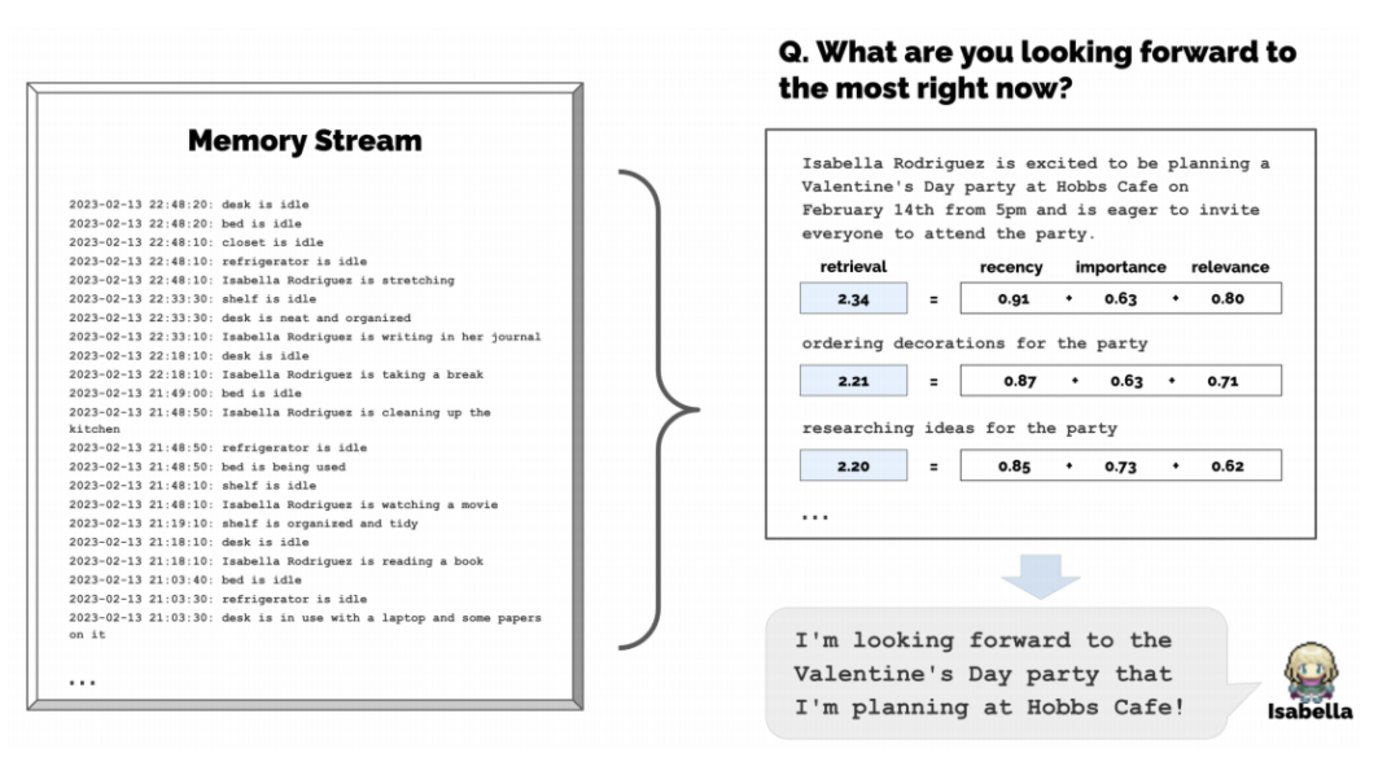

To keep memory comprehensive, the researchers maintain a memory stream that records all of an agent's experiences. Each record contains a natural-language description, a creation time, and a last-access time. The records cover the agent's own past actions, the actions of other agents it has perceived, and its perceptions of the world. For example, Isabella's memories of working at the coffee shop would include "Isabella is arranging pastries," "Maria is drinking coffee while preparing for an exam," and "the fridge is empty."
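A memory record as described above needs only three fields. The sketch below is a minimal illustration, assuming a plain in-process list; `MemoryObject`, `MemoryStream`, `touch`, and `latest` are hypothetical names, not the paper's code.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class MemoryObject:
    description: str          # natural-language record of the experience
    created: datetime         # when the experience was recorded
    last_accessed: datetime   # refreshed whenever the memory is retrieved


@dataclass
class MemoryStream:
    records: list[MemoryObject] = field(default_factory=list)

    def add(self, description: str, now: datetime) -> MemoryObject:
        # Own actions, other agents' actions, and world states share one stream.
        memory = MemoryObject(description, created=now, last_accessed=now)
        self.records.append(memory)
        return memory

    def touch(self, memory: MemoryObject, now: datetime) -> None:
        # Called on retrieval: only the access time changes, never the creation time.
        memory.last_accessed = now

    def latest(self, k: int) -> list[MemoryObject]:
        # The k most recent records (empty for k <= 0).
        return self.records[-k:] if k > 0 else []
```

Keeping the last-access time separate from the creation time is what lets a retrieval step favor memories that were used recently, not just ones that happened recently.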

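Compared with humans, the agents' memory is more accurate and durable, but too many memories can confuse the large model. To retrieve the memories most relevant to the current situation, the researchers score each memory on three dimensions:

- Recency: recently accessed memories score higher, and the score decays exponentially with the time since the memory was last accessed.
- Importance: the large model rates how significant each memory is, so mundane events (such as brushing teeth) score low and major ones (such as a breakup) score high.
- Relevance: how closely the memory relates to the current situation, measured by the similarity between their embedding vectors.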

By weighting and summing these three scores, artificial agents select the most relevant and important memories as input to the large model in each situation, assisting the decision-making process.
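The weighted-sum retrieval can be sketched as follows, assuming the three scores are recency, importance, and relevance. The decay factor (0.995 per game hour), the equal weights, the min-max normalization, and the toy two-dimensional embeddings are assumptions for illustration; a real system would obtain importance ratings and embeddings from models.

```python
import math
from typing import NamedTuple, Sequence


class Memory(NamedTuple):
    text: str
    last_access_hour: float     # game hour when the memory was last retrieved
    importance: float           # assumed 1-10 rating from the model
    embedding: Sequence[float]  # assumed embedding vector


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def min_max(values: list[float]) -> list[float]:
    # Rescale to [0, 1] so the three scores are comparable before summing.
    lo, hi = min(values), max(values)
    return [0.0 if hi == lo else (v - lo) / (hi - lo) for v in values]


def retrieve(memories: list[Memory], query: Sequence[float], now_hour: float,
             k: int = 3, decay: float = 0.995,
             weights: tuple[float, float, float] = (1.0, 1.0, 1.0)) -> list[str]:
    if not memories:
        return []
    recency = min_max([decay ** (now_hour - m.last_access_hour) for m in memories])
    importance = min_max([m.importance for m in memories])
    relevance = min_max([cosine(m.embedding, query) for m in memories])
    w_rec, w_imp, w_rel = weights
    scores = [w_rec * r + w_imp * i + w_rel * v
              for r, i, v in zip(recency, importance, relevance)]
    ranked = sorted(range(len(memories)), key=lambda j: scores[j], reverse=True)
    return [memories[j].text for j in ranked[:k]]
```

Normalizing each score before summing keeps one dimension (for example, raw importance on a 1-10 scale) from swamping the others; the weights then express how much each dimension should count.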

▷ Figure 7: Memory stream of artificial agents. Image source: Reference [4]

(2) Reflection

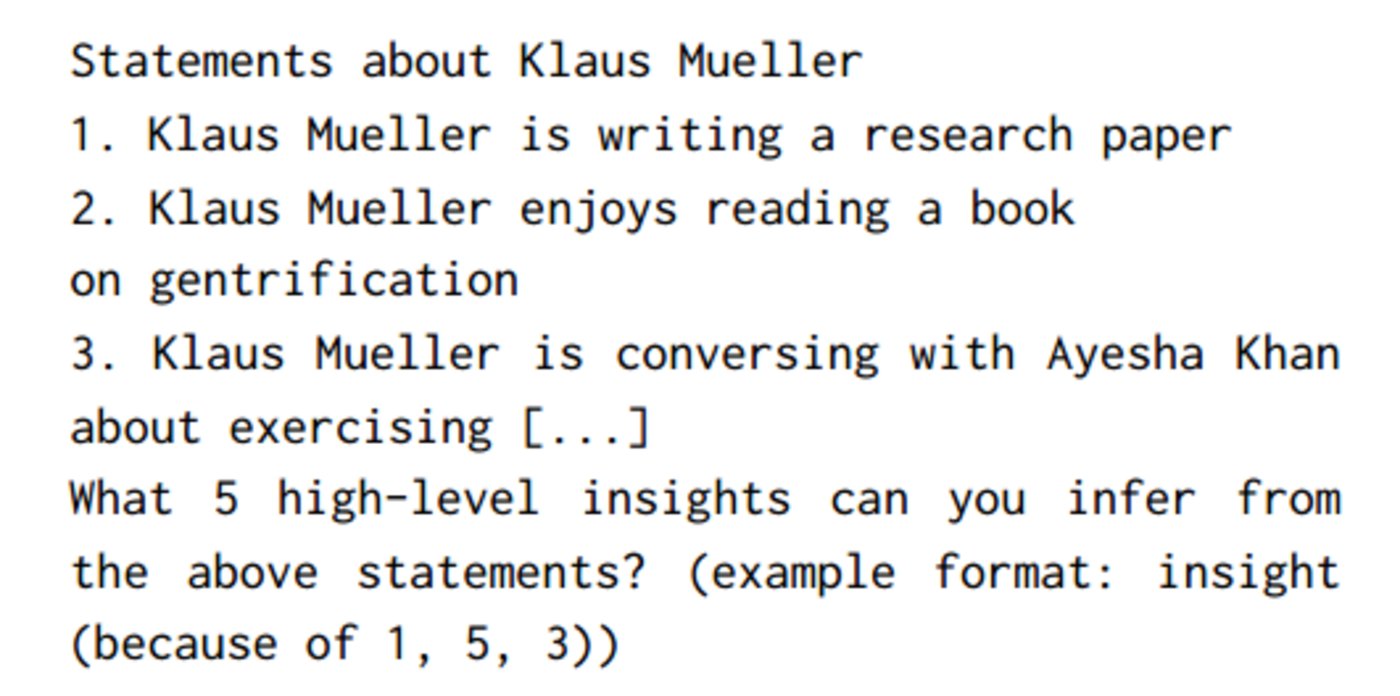

The memory module above records the agent's observations of itself, of others, and of the world. Observation alone is not enough, however: memory should also contain reflections, that is, a history of the agent's own thinking. Reflections are an important part of helping agents understand and adapt to different situations more fully.

For example, suppose a user asks Klaus, "Who would you like to have dinner with?" With only observational memory, Klaus might answer "Wolfgang," simply because Wolfgang is the agent he interacts with most often, even though each of those interactions is only a passing encounter. With the reflection module, however, Klaus can infer from observations such as "obsessed with research" that he is dedicated to his research, recognize that Maria also puts effort into her own research, and conclude that they share an interest. He might then give a completely different answer: "Maria."

The researchers designed a two-step reflection module. The first step is "asking": based on its 100 most recent memories, the agent asks itself the 3 most salient high-level questions (as shown in Figure 8). The second step is "answering": the agent generates reflections in response to these questions and stores them in its memory stream.
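The ask-then-answer loop can be sketched with the model calls abstracted as functions. This is a minimal illustration, not the paper's implementation: `ask` and `answer` are hypothetical stand-ins for large-model prompts, and for simplicity every question is answered from the same window of recent memories, whereas a fuller version would first retrieve the memories most relevant to each question.

```python
from typing import Callable

# Hypothetical model interfaces (stand-ins for large-model prompts).
AskFn = Callable[[list[str]], list[str]]      # recent memories -> questions
AnswerFn = Callable[[str, list[str]], str]    # question, evidence -> reflection


def reflect(memory_stream: list[str], ask: AskFn, answer: AnswerFn,
            window: int = 100) -> list[str]:
    if window <= 0:
        raise ValueError("window must be positive")
    recent = memory_stream[-window:]                    # the most recent memories
    questions = ask(recent)                             # step 1: "asking"
    reflections = [answer(q, recent) for q in questions]  # step 2: "answering"
    memory_stream.extend(reflections)  # reflections are stored alongside observations
    return reflections
```

Because reflections go back into the same stream, later calls to `reflect` can use earlier reflections as input, which is what allows increasingly abstract thoughts to build up.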

▷ Figure 8: The first step of reflection - "asking". Image source: Reference [4]

What makes this module powerful is that agents can reflect on earlier reflections together with new observations, building ever higher levels of abstraction. The result is a reflection tree: leaf nodes are basic observations, non-leaf nodes are thoughts, and the higher a node sits in the tree, the more abstract it is. These higher-level reflections help the large model understand the agent's memories and role more accurately.
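The reflection tree can be represented by letting each thought keep pointers to the memories it was derived from. This is a minimal sketch with a hypothetical `Node` class: a node's level is 0 for a raw observation and one above its most abstract piece of evidence otherwise, matching the idea that higher nodes are more abstract.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    text: str
    # Memories this node was derived from; an empty list marks a raw observation (leaf).
    evidence: list["Node"] = field(default_factory=list)

    def level(self) -> int:
        # Observations sit at level 0; a reflection sits one level above
        # its most abstract piece of evidence.
        if not self.evidence:
            return 0
        return 1 + max(e.level() for e in self.evidence)

    def observations(self) -> list[str]:
        # Walk down to the leaf observations that ultimately support this thought.
        if not self.evidence:
            return [self.text]
        out: list[str] = []
        for e in self.evidence:
            for t in e.observations():
                if t not in out:
                    out.append(t)
        return out
```

Usage: a reflection such as "Klaus is dedicated to his research" built on two observations has level 1, and a further reflection that cites it has level 2; `observations()` recovers the original evidence at any level.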

▷ Figure 9: Reflection tree. Image source: Reference [4]

(3) Planning

To keep agents' behavior consistent over long periods, the researchers gave them the ability to make plans, which prevents situations such as eating lunch at 12:00 and then eating lunch again at 13:00.

A plan describes a sequence of future actions, for example, "Muller plans to paint in his room for 3 hours." Starting from such a coarse initial plan, the agent decomposes it top-down into progressively finer steps, such as "gather materials, mix paint, rest, and clean up." Plans are stored in the memory stream and, together with reflections and observations, shape the agent's behavior.
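Top-down decomposition can be sketched as splitting a time block into back-to-back sub-steps. This is a minimal illustration under stated assumptions: `PlanItem` and `decompose` are hypothetical, and the list of (description, minutes) pairs is supplied by hand here, whereas in the described system the large model would generate it.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class PlanItem:
    description: str
    start: datetime
    duration: timedelta
    children: list["PlanItem"] = field(default_factory=list)

    @property
    def end(self) -> datetime:
        return self.start + self.duration


def decompose(item: PlanItem, steps: list[tuple[str, int]]) -> list[PlanItem]:
    """Split a plan into back-to-back sub-steps of the given lengths in minutes."""
    # Validate first so a bad split leaves the parent plan untouched.
    if timedelta(minutes=sum(m for _, m in steps)) != item.duration:
        raise ValueError("sub-steps must exactly fill the parent time block")
    t = item.start
    item.children = []
    for description, minutes in steps:
        child = PlanItem(description, t, timedelta(minutes=minutes))
        item.children.append(child)
        t = child.end
    return item.children
```

Each child is itself a `PlanItem`, so the same function can be applied again to break a step into still finer actions.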

▷ Figure 10: Prompting agents to generate plans. Image source: Reference [4]

Agents do not follow their plans rigidly; they also react to the current situation in real time. For example, if Muller sees his father enter the room while he is painting, this new observation is passed to the large model, which takes his reflections and plans into account and decides whether to pause the plan and react.

If Muller starts a conversation with his father, each of them draws on their memories of the other and on the conversation so far to generate the next utterance, and the exchange continues until one of them decides to end it.
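The react-or-continue decision and the turn-taking loop can be sketched with the model calls abstracted away. This is a minimal illustration: `decide` and the per-speaker `speak` functions are hypothetical stand-ins for large-model calls (which in the described system would also retrieve each speaker's memories of the other), and `max_turns` is an assumed safety cap, not something the source describes.

```python
from typing import Callable

# Hypothetical interfaces standing in for large-model calls.
DecideFn = Callable[[str, str], bool]             # (observation, plan step) -> react?
SpeakFn = Callable[[list[tuple[str, str]]], str]  # conversation so far -> next line


def next_action(plan_step: str, observation: str, decide: DecideFn) -> str:
    # Keep following the plan unless the model judges the observation worth reacting to.
    if observation and decide(observation, plan_step):
        return f"pause '{plan_step}' to react to: {observation}"
    return plan_step


def converse(a: tuple[str, SpeakFn], b: tuple[str, SpeakFn],
             max_turns: int = 20) -> list[tuple[str, str]]:
    # Speakers alternate and each sees the full history; returning an empty
    # string means that speaker ends the conversation.
    history: list[tuple[str, str]] = []
    for turn in range(max_turns):
        name, speak = (a, b)[turn % 2]
        line = speak(history)
        if not line:
            break
        history.append((name, line))
    return history
```

Passing the full history to each speaker is what keeps replies coherent; either side can end the exchange, mirroring the description above.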

Experiments

The researchers' experiments mainly focused on two questions: first, whether artificial agents can correctly retrieve past experiences and generate believable plans, reactions, and thoughts to shape their behavior; and second, whether spontaneous social behaviors such as information dissemination, relationship building, and multi-agent collaboration can form within the agent community.

(1) Controlled Experiment

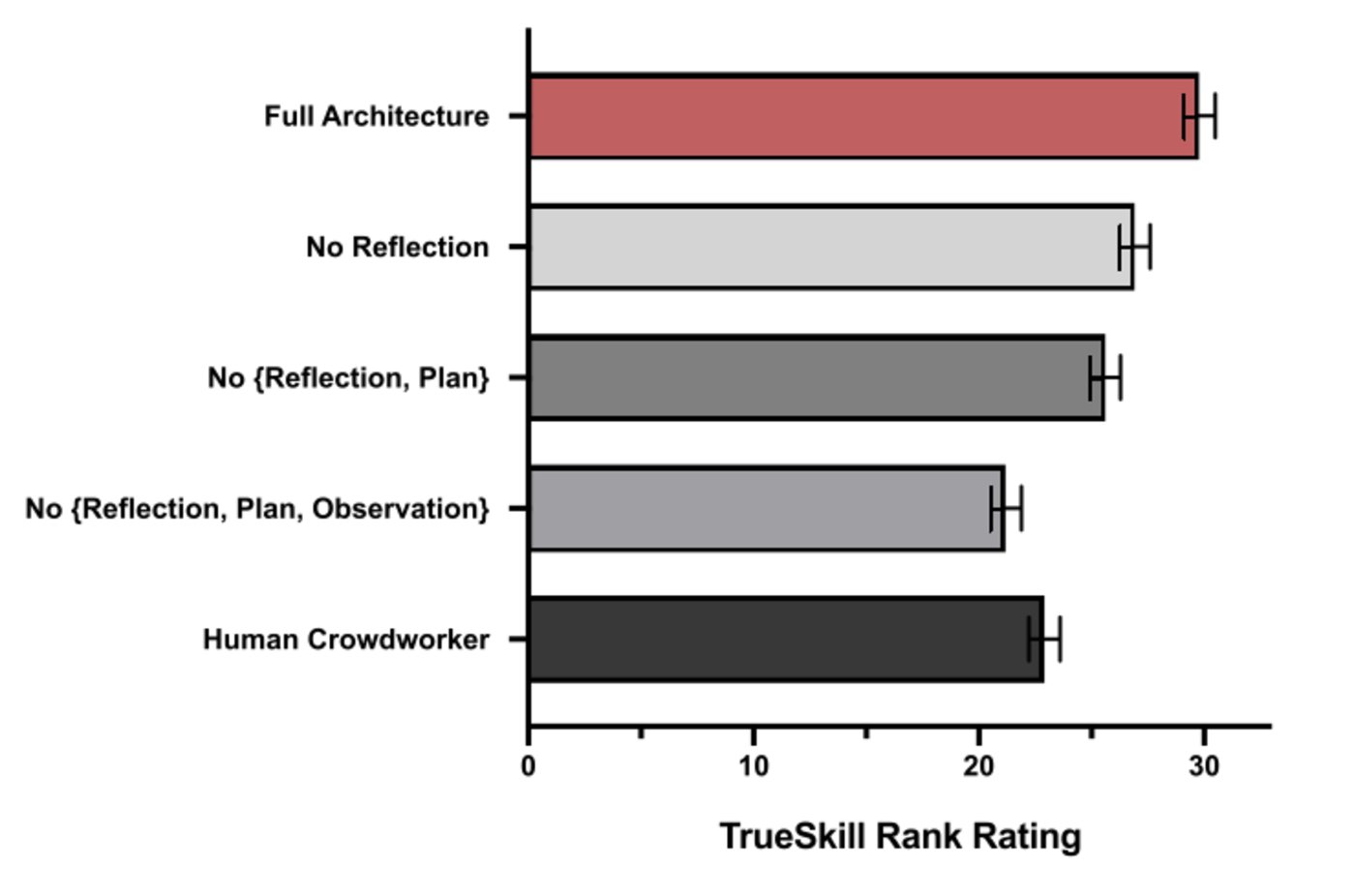

To address the first question, the researchers interviewed the agents in depth to assess five capabilities: self-knowledge, memory, planning, reactions, and reflection. The interviews tested whether the agents could maintain a consistent sense of self, retrieve memories correctly, make plans, react, and reflect thoughtfully, and thereby behave and make decisions in a reasonable, human-like way.

▷ Figure 11: Degree of believability reflected in interview results. Image source: Reference [4]

The researchers recruited 100 participants to rate the believability of the interview responses. Agents with the full "memory," "reflection," and "planning" modules produced the most believable answers, rated even higher than responses written by human crowdworkers role-playing the agents. Removing any one or more of these modules significantly reduced believability.

Although the agents performed well overall, their memory was not flawless. For example, Tom was certain he needed to talk about the election at the Valentine's Day party, yet also said he did not know whether there was a Valentine's Day party at all.

Agents also sometimes fabricate facts. For example, when asked whether she knew about Sam's candidacy, Isabella correctly said yes but added, "He will announce it tomorrow," even though nothing in the earlier conversations said so.

(2) End-to-End Experiment

To observe spontaneous social behaviors within the agent community, the researchers placed 25 agents in the Smallville sandbox and ran the game for two game days.

The researchers found that the agents spread information and collaborated on their own. Before the run, they seeded two pieces of information, "Sam is running for mayor" and "Isabella is hosting a Valentine's Day party," and then measured how far each had spread after two game days. Initially only Sam and Isabella knew their respective news; two days later, 8 agents knew about Sam's candidacy and 13 knew about the party. In total, 12 agents were invited to the party and 5 attended, demonstrating the agents' ability to coordinate in holding the party.
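The spread figures above are easier to compare as shares of the 25-agent town. This minimal sketch uses only the counts stated in the text; `share` is a hypothetical helper, and each informed agent is 1/25, or 4%, of the community.

```python
POPULATION = 25  # number of agents in Smallville


def share(count: int, population: int = POPULATION) -> float:
    """Fraction of the community that knows a piece of information."""
    return count / population


# (agents who knew at the start, agents who knew after two game days)
seeded = {"Sam is running for mayor": (1, 8),
          "Isabella is hosting a Valentine's Day party": (1, 13)}

for news, (before, after) in seeded.items():
    print(f"{news}: {share(before):.0%} -> {share(after):.0%}")
# Sam is running for mayor: 4% -> 32%
# Isabella is hosting a Valentine's Day party: 4% -> 52%

print(f"party turnout among invitees: {5 / 12:.0%}")  # 42%
```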

The agent community also formed new relationships on its own during the simulation. The researchers measured the density of the relationship network, that is, how many pairs of agents know each other relative to all possible pairs, and found that it rose from 0.167 to 0.74 within two days.
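Network density can be computed directly from a list of acquainted pairs. This sketch assumes the standard undirected-graph definition, density = 2|E| / (|V|(|V| - 1)); the `density` helper is hypothetical. Under that assumption, 25 agents have 300 possible pairs, so the reported densities correspond to roughly 50 and 222 acquainted pairs.

```python
from itertools import combinations


def density(n_agents: int, edges) -> float:
    """Density of an undirected graph: 2|E| / (|V| * (|V| - 1)).

    `edges` holds pairs of agents who know each other; duplicates,
    reversed pairs, and self-loops are ignored.
    """
    unique = {frozenset(e) for e in edges if len(set(e)) == 2}
    return 2 * len(unique) / (n_agents * (n_agents - 1))


# With 25 agents there are 25 * 24 / 2 = 300 possible pairs.
pairs = list(combinations(range(25), 2))
print(len(pairs))                          # 300
print(round(density(25, pairs[:50]), 3))   # 0.167
print(round(density(25, pairs[:222]), 3))  # 0.74
```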

▷ Figure 12: Relationship network of artificial agents. Image source: Reference [4]

From Artificial Agents to Human Agents: System 1 Thinking

In October 2023, researchers from the University of Washington pointed out that, unlike artificial agents, humans think not only about the external environment but also about their internal feelings [5]. These two sources of thought correspond to two complementary modes of human thinking.

▷ Figure 13: Reference [5]. Image source: arXiv official website

Kahneman [6] argues that humans have two complementary thinking processes: System 1 is intuitive, effortless, and immediate, while System 2 is logical, deliberate, and slow. The artificial agents described above mainly model System 2 thinking and neglect System 1.

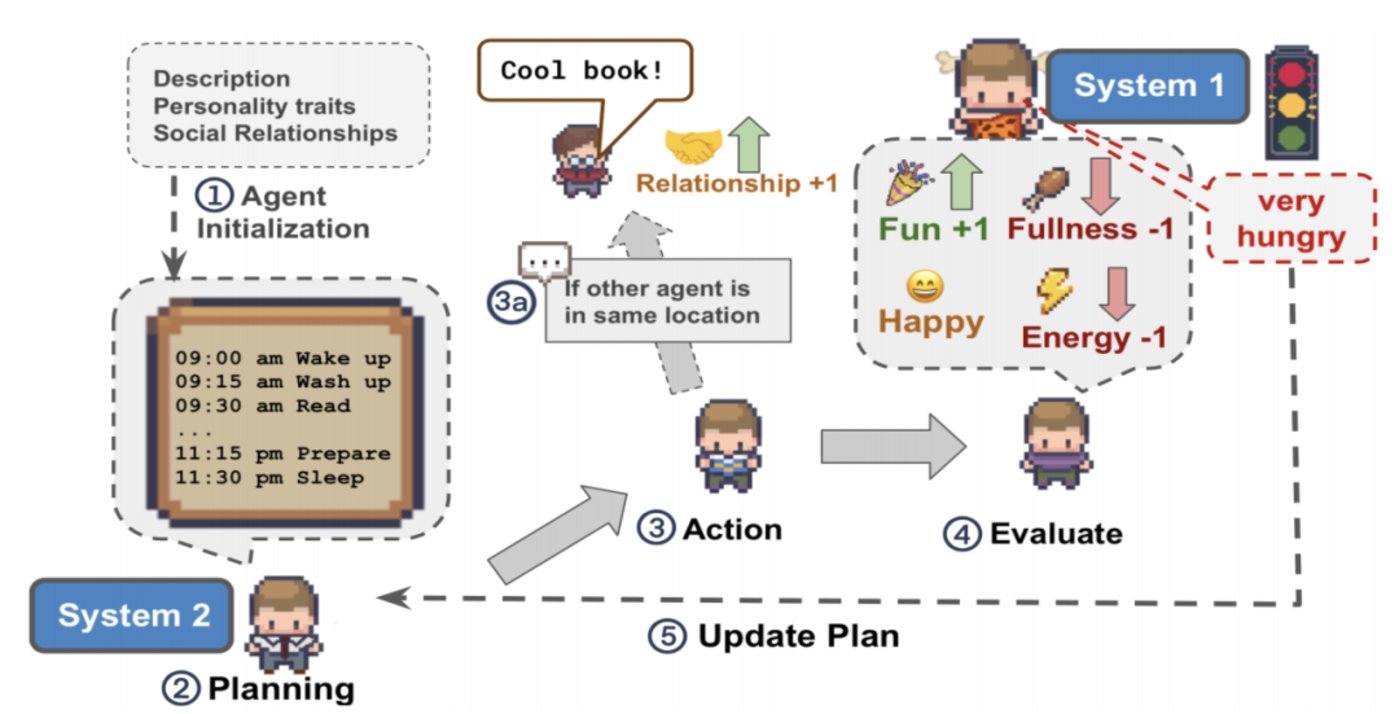

To guide agent behavior more realistically, the University of Washington researchers added System 1 feedback along three dimensions (basic needs, emotions, and the closeness of social relationships), upgrading artificial agents to "human agents" (Humanoid Agents, in the paper's title).

Basic needs are innate human survival needs: food, social interaction, entertainment, health, and energy. When these needs go unmet, the agents receive negative feedback such as loneliness, illness, or fatigue, so they spontaneously act to satisfy them instead of simply following a rigid daily plan.

Emotions are also a key factor in modeling real human behavior [7]. An agent that feels angry, for example, should be able to respond with actions that help release the emotion, such as going for a run or meditating.

In addition, the closeness of social relationships between agents should also affect their interaction. The social brain hypothesis suggests that our cognitive abilities have evolved to a large extent to track the quality of social relationships [8], which means that people often adjust their interactions with others based on their relationships [9]. To better mimic human behavior, the researchers allowed the agents to adjust their conversations and interactions based on the closeness of their relationships with each other.

▷ Figure 14: Human agents incorporating System 1 thinking. Image source: Reference [5]

Building on the Stanford agent architecture, the researchers added System 1 feedback (as shown in Figure 14). They represented the five basic needs and relationship closeness as numerical values and defined seven emotions.

At initialization, each numerical value was set to a neutral midpoint and each agent's emotion to a neutral emotion word. Before each action, an agent considered whether it needed to satisfy a particular need; after the action, it judged whether that need had been met and adjusted the value accordingly (for example, raising or lowering relationship closeness depending on whether a conversation was pleasant). Some values also changed on their own over time: the food value, for instance, dropped as time passed.
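The bookkeeping described above can be sketched as a small state object. Everything numeric here is an assumption for illustration: the 0-10 scale, the midpoint start value of 5, the per-hour decay rates, and the one-point closeness step are hypothetical, and in the described system a large model, not fixed numbers, judges how much an action or conversation affected each value. The need names follow the five listed in the text.

```python
from dataclasses import dataclass, field

NEEDS = ("food", "social", "entertainment", "health", "energy")
SCALE_MAX = 10.0  # hypothetical 0-10 scale; the midpoint is the neutral start value


def clip(value: float) -> float:
    return max(0.0, min(SCALE_MAX, value))


@dataclass
class System1State:
    needs: dict[str, float] = field(default_factory=lambda: {n: SCALE_MAX / 2 for n in NEEDS})
    emotion: str = "neutral"
    closeness: dict[str, float] = field(default_factory=dict)  # other agent -> value

    def tick(self, hours: float, decay_per_hour: dict[str, float]) -> None:
        # Some needs drain on their own as time passes (e.g., food).
        for need, rate in decay_per_hour.items():
            self.needs[need] = clip(self.needs[need] - rate * hours)

    def after_action(self, effects: dict[str, float]) -> None:
        # After acting, apply the judged effect of the action on each need.
        for need, delta in effects.items():
            self.needs[need] = clip(self.needs[need] + delta)

    def after_conversation(self, other: str, pleasant: bool, step: float = 1.0) -> None:
        # Closeness starts neutral and moves up or down with each conversation.
        current = self.closeness.get(other, SCALE_MAX / 2)
        self.closeness[other] = clip(current + (step if pleasant else -step))

    def unmet(self) -> list[str]:
        # Needs at zero are the ones the agent should act on ahead of its plan.
        return [n for n, v in self.needs.items() if v <= 0.0]
```

Usage: after `tick(8, {"food": 1.0})` from the neutral start, the food value hits 0 and `unmet()` returns `["food"]`, the kind of state in which the experiments below observed agents acting to meet the need.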

Can human agents understand their System 1 needs, and can they respond to them reasonably? The researchers ran a series of experiments to answer these two questions.

The experiments showed that human agents understood how various actions affect their needs, but were poor at judging which actions affect "entertainment" and "health." For example, the agents believed that a doctor giving medication to a patient would also improve the doctor's own health.

When individual need values were set to zero, the agents spontaneously took actions to meet those needs. Interestingly, negative emotions appeared to affect the agents more than positive ones: anger had the greatest effect, followed by sadness and fear, while happiness had the smallest.

As for relationship closeness, conversation frequency followed a U-shaped curve as closeness increased. Agents talked most when their relationship was either distant or very close, and less often at intermediate closeness, where there was no longer a need for polite small talk.

In addition, at high closeness the proportion of positive conversations usually fell, much as people who feel very close to someone may feel no need to compliment them to win their affection. At low closeness, the positivity of conversations also dropped.

By incorporating System 1 thinking, the researchers brought artificial agents closer to human behavior and thought. The agents became better at understanding and meeting their basic needs, emotions, and social relationships, and thus simulated human behavior more accurately.

Summary

Although human agents appear close to human behavior, they still act irrationally in many ways. The researchers found that irrational behavior becomes more frequent as the simulation runs longer. The agents also have gaps in common sense and occasionally make mistakes such as intruding into a single-person bathroom because they wrongly assumed all bathrooms are shared.

Experiments also suggest that human agents may lack a stable, independent personality: excessive cooperation can gradually reshape some of their traits, including their interests and hobbies.

Ethical issues also need careful consideration when applying human agent technology, including the serious consequences of errors and the risk that users become overly reliant on the agents.

Nevertheless, human agents are a first step toward "using large models to create an interactive artificial society that reflects believable human behavior." By combining System 1 and System 2 thinking with memory, planning, and reflection, they can simulate human behavior with reasonable accuracy and believability. This progress matters for the gaming industry, human-computer interaction, and the study of intelligent societies, and it opens new directions for future research and applications.

References

- [1] J. Bates. 1994. The role of emotion in believable agents. Communications of the ACM, 37(7):122–125.

- [2] Mark O. Riedl. 2012. Interactive narrative: A novel application of artificial intelligence for computer games. In Proceedings of the Twenty-Sixth AAAI Conference on Artificial Intelligence (AAAI’12). 2160–2165.

- [3] Georgios N. Yannakakis. 2012. Game AI revisited. In Proceedings of the 9th Conference on Computing Frontiers. ACM, Cagliari, Italy, 285–292.

- [4] J. S. Park, J. C. O'Brien, C. J. Cai, et al. 2023. Generative agents: Interactive simulacra of human behavior. arXiv preprint arXiv:2304.03442.

- [5] Z. Wang, Y. Y. Chiu, and Y. C. Chiu. 2023. Humanoid Agents: Platform for simulating human-like generative agents. arXiv preprint arXiv:2310.05418.

- [6] Daniel Kahneman. 2011. Thinking, fast and slow. Farrar, Straus and Giroux, New York.

- [7] Paul Ekman. 1992. An argument for basic emotions. Cognition and Emotion, 6(3-4):169–200.

- [8] R.I.M. Dunbar. 2009. The social brain hypothesis and its implications for social evolution. Annals of Human Biology, 36(5):562–572.

- [9] W.-X. Zhou, D. Sornette, R. A. Hill, and R. I. M. Dunbar. 2005. Discrete hierarchical organization of social group sizes. Proceedings of the Royal Society B: Biological Sciences, 272(1561):439–444.
