Source: Brain Pole

Image Source: Generated by Wujie AI

Since the beginning of this year, the popularity of large models has set the cloud computing industry abuzz. One of the most compelling pieces of evidence is the emergence of a new model called MaaS (Model as a Service), along with the construction of "model factories."

MaaS (Model as a Service) means that users can call basic large models directly through APIs and use them to build, train, and deploy dedicated models for different business scenarios, while the cloud platform provides full-lifecycle management and tools spanning data, models, and application services.

Currently, major cloud computing giants such as Microsoft Azure, Alibaba Cloud, Huawei Cloud, Tencent Cloud, Baidu Cloud, and JD Cloud have all launched MaaS services.

Why are cloud providers pursuing MaaS? A major consideration is that, as IT infrastructure providers (the IaaS model), they have long been locked in price wars, and they have not managed to capture much value through PaaS and SaaS offerings that deliver ToB digital services to government and enterprise customers.

In this situation, cloud providers urgently need a new, high-value business model, and large models have opened up that possibility.

However, can the burgeoning MaaS really help cloud providers "turn the tide"?

The Inevitable Arrival of MaaS

First, it should be stated plainly that the emergence of the MaaS model and the construction of large numbers of "model factories" carry great commercial potential.

You may ask: if even traffic to OpenAI's models is declining, is there really so much demand for training large models that so many "model factories" and MaaS services are needed?

Our judgment is that the industrialization of large models has only just begun. It will give rise to a large number of segmented models with different parameter sizes, specifications, and scenarios, which will demand more efficient model training and deployment and push model production toward industrial scale. Different models are like the various grades of steel used to build AI applications, so "model factories," like steel mills, must be built.

There are three main points supporting the inevitability of the MaaS model:

First, demand. General-purpose basic large models are now plentiful, yet many enterprises still want to use basic-model capabilities to transform their own businesses or build new AI applications, which requires more industry knowledge, more precise skills, and more tailored vertical models. Data projects that the penetration rate of industry intelligence will rise from 7% in 2021 to 30% in 2026, and the core business systems of more industries will be permeated by large-model capabilities. Demand for producing large models therefore remains strong.

Second, supply. The capacity to produce large models is still limited. First, computing resources are scarce: training large models and running inference on them demand substantial compute and storage. Many enterprises and institutions have "no cards" (GPUs) available, which leaves them unable to train or serve large models.

At the same time, training proprietary large models requires large amounts of high-quality data, and a series of complex engineering steps such as data cleaning and preprocessing greatly slows development. Long training cycles make it hard to meet business launch timelines.

In addition, deploying and applying trained large models involves many considerations, including computing resources, business scenarios, different parameter specifications, network bandwidth, and security and compliance. Many enterprises and institutions lack the relevant technical expertise and experience, so their initial investment is easily wasted.

To increase the quantity and quality of large models, the "model factories" under the MaaS model must be built.

Third, the catalyst. Cloud providers have strong incentives to mature and commercialize the MaaS model. IaaS (infrastructure as a service) fueled the rise of public clouds, but it has long suffered from heavy up-front investment and weak revenue generation. PaaS requires cloud providers to invest heavily in manpower and pays back slowly, while SaaS delivers too little value, with low unit prices and a heavy burden of customization and operations services. Against this backdrop, the new MaaS model, which delivers model capabilities to users end to end, is a high-value choice with strong certainty.

For one thing, the enormous data scale of large models will drive more demand for computing resources and cloud usage. For another, the customized needs of industry customers can be billed per project. And the high volume of API calls from AI applications has already given rise to business models such as token-based pricing, subscription pricing, and commercial editions.
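To make the difference between token-based and subscription billing concrete, here is a minimal sketch that computes the monthly volume at which the two cost the same. The per-token price, the flat subscription fee, and the assumption that the subscription covers unlimited calls are all hypothetical, chosen for illustration; they are not any cloud provider's actual pricing.

```python
# Minimal sketch comparing two MaaS billing models: token-based vs subscription.
# All prices are HYPOTHETICAL and for illustration only.

PRICE_PER_MILLION_TOKENS = 12.0  # hypothetical pay-per-use price per 1M tokens
MONTHLY_SUBSCRIPTION = 600.0     # hypothetical flat monthly fee (assumed unlimited calls)


def token_based_cost(tokens: int) -> float:
    """Monthly bill under token-based (pay-per-use) pricing."""
    return tokens / 1_000_000 * PRICE_PER_MILLION_TOKENS


def break_even_tokens() -> float:
    """Monthly token volume at which both billing models cost the same."""
    return MONTHLY_SUBSCRIPTION / PRICE_PER_MILLION_TOKENS * 1_000_000


def cheaper_plan(tokens: int) -> str:
    """Return the cheaper plan for a monthly token volume (ties go to subscription)."""
    if token_based_cost(tokens) < MONTHLY_SUBSCRIPTION:
        return "token-based"
    return "subscription"


if __name__ == "__main__":
    print(f"break-even volume: {break_even_tokens():,.0f} tokens/month")
    for volume in (5_000_000, 50_000_000, 100_000_000):
        cost = token_based_cost(volume)
        print(f"{volume:>12,} tokens -> {cost:8.2f} ({cheaper_plan(volume)})")
```

Below the break-even volume, paying per token is cheaper for the customer; above it, a subscription wins. Where that line falls on a real platform depends entirely on its actual price list.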

These commercial prospects act like a shot of adrenaline, driving cloud providers to accelerate their MaaS plans.

Let's return to reality and see how domestic cloud providers are doing this MaaS business.

Domestic MaaS with "Store in the Front and Factory in the Back"

Today, domestic cloud providers have by and large adopted a "store in the front and factory in the back" model for MaaS.

How to understand this?

Cloud providers play the role of the "factory": they use their strengths in infrastructure, industry service capabilities, and end-to-end development tools and suites to meet customers' diverse needs for model pre-training, fine-tuning, deployment, and intelligent application development, ensuring that large models are delivered to customers smoothly.

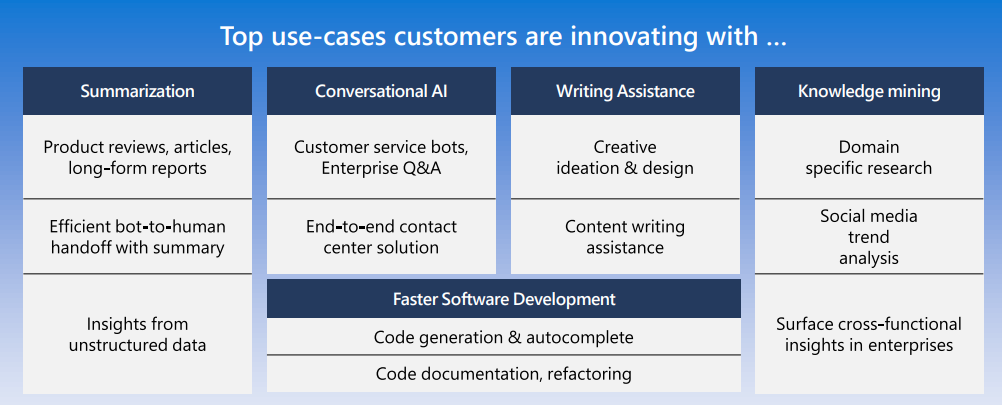

For example, Microsoft's Azure OpenAI service lets developers call the APIs of OpenAI's GPT-4, GPT-3, Codex, and DALL-E models to build and fine-tune models and power applications. This is a pure "factory" model, in which Azure mainly adds enterprise-grade capabilities such as security, compliance, and regional availability.

Domestic cloud providers, by contrast, also emphasize their "store" capabilities.

In the "store" role, cloud providers also take part in developing industry large models and AI-native applications, control the quality of models and applications, offer curated selections, and handle marketing and sales.

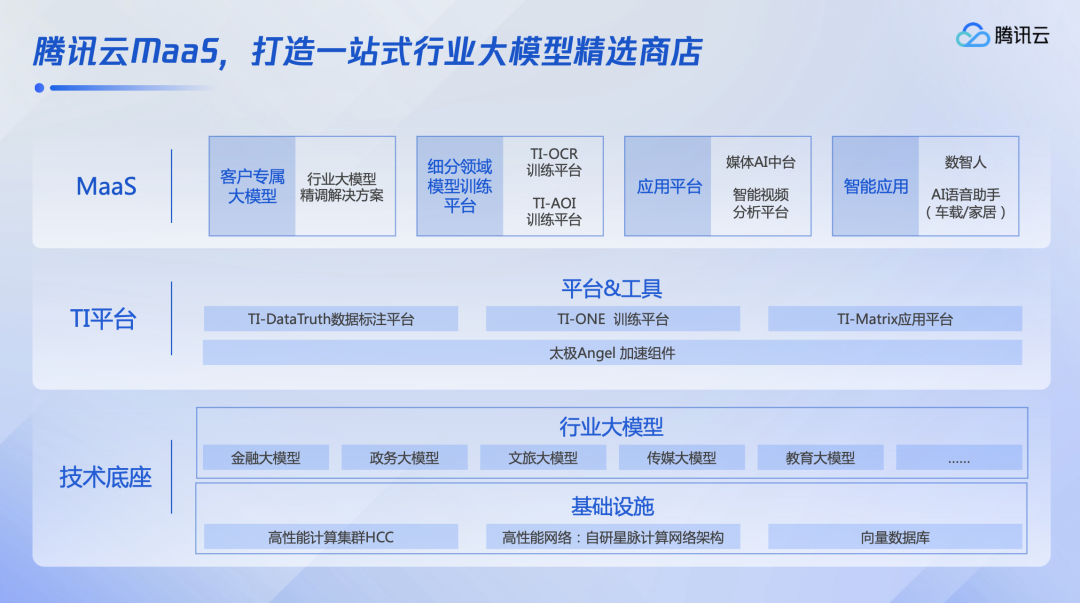

For example, in June of this year, Tencent Cloud launched a one-stop MaaS service at the Industry Large Model and Intelligent Application Technology Summit. Built on Tencent Cloud's TI platform, it created an industry large model selection store that includes several leading SaaS products such as Tencent Qidian, Tencent Meeting, and Tencent Cloud AI Code Assistant.

At the Huawei Full Connection Conference 2023 in September, Huawei Cloud's MaaS service adopted a three-layer decoupled architecture of 5 basic large models, N industry large models, and X scenario models: basic models at the L0 layer, industry-specific models at the L1 layer, and scenario models at the L2 layer that serve as out-of-the-box AI applications. Huawei Cloud also launched the "Hundred Models, Thousand Forms" zone of its Ascend AI Cloud Service.

At Baidu World 2023, held in October, Baidu Intelligent Cloud's MaaS platform Qianfan launched the Qianfan AI Native Application Store, positioned as a marketplace for large-model business opportunities that gives merchants brand exposure, traffic support, and sales resources. The first batch of selected applications includes Baidu's in-house Xiling Digital Human Platform and Baidu Intelligent Cloud's Yinian Intelligent Creation Platform.

In the era of intelligence, vertical models and AI applications are bound to flourish, and they cannot do without model factories. However, the whole effort has only taken its first step. Upstream, there is no shortage of foundation models, nor of capable ones, yet it remains very difficult to produce convincing vertical models and applications.

A Protracted Battle to Break Through the Siege

Unlike the more mature digital and SaaS markets of Europe and the United States, China's push toward intelligence is driven mainly by traditional industries and enterprises. Many potential buyers of models and applications do not clearly understand what large models can do, do not know which models suit their business, and worry that their custom model orders are too small to be taken seriously…

ISVs and developers worry that, after investing time and effort in the whole process, their products will already be outdated, or they will be unable to find customers and monetize.

In this situation, it is far from enough for cloud providers to do only behind-the-scenes "factory" work; a more complete model supply chain is needed. That is why "store in the front and factory in the back" has become the mainstream choice for domestic MaaS: the "factory" handles production, and the "store" handles promotion.

However, the "store in the front and factory in the back" model also makes MaaS considerably harder to pull off.

In the "store in the front and factory in the back" model, cloud providers are both producers and salespeople, both ToB service providers and ToC developers. Wearing multiple hats not only adds competitive pressure and makes MaaS harder but also creates conflicts with industry partners, customers, and developers. Specifically:

To make money, relying solely on basic models is not enough.

Relying solely on basic models, as the Azure OpenAI service does by focusing on a few high-quality models such as OpenAI's GPT-4, GPT-3, Codex, and DALL-E, cannot meet the needs of enterprise users, industry partners, and developers. Domestic MaaS providers also need to develop mature vertical large models for high-demand, high-frequency areas such as finance, education, government affairs, and industry, so as to meet large-model needs across sectors.

For example, Tencent Cloud's industry large model selection store not only provides calling services for its Hunyuan model but also includes industry large models in more than 20 fields such as finance, cultural tourism, and retail. Likewise, Huawei Cloud and Baidu Cloud are building "specialized education" on top of "general education" to lower the barrier for large models to enter industries.

This creates the first contradiction. Building industry large models requires a great deal of talent, time, resources, and industry cooperation; covering every key industry is a major investment that makes it harder for MaaS to turn a profit. Yet if cloud providers do not build them, the gap between basic models and AI applications remains too wide, ISVs, integrators, and developers will hesitate to adopt the platform, and MaaS growth will be limited.

The second contradiction lies between abundant computing power and its cost.

Computing power is the foundation for training large models, and every MaaS platform touts the scale and performance of its clusters as a primary selling point.

It is important to realize that an abundance of computing power means very high computing resource costs, energy consumption, and maintenance costs for cloud providers. Training large models often requires GPU clusters with thousands or tens of thousands of cards. If a GPU server overheats and crashes, the entire cluster must stop, and the training task needs to be restarted. This places very high demands on the hardware performance and maintenance capabilities of cloud service providers, and only a few major cloud providers can support this.

To improve inference efficiency and reduce costs, cloud providers are also competing on technology. For example, Huawei Cloud has optimized its AI cloud services on top of its infrastructure to extract maximum performance from its AI compute. Tencent Cloud has developed a new generation of HCC high-performance computing clusters for model training. Baidu has continuously cut inference costs: the inference cost of WENXIN 3.5 has dropped to a fraction of what it was at its initial release in May. Simply stacking more cards is not a long-term solution; cutting costs and improving efficiency is the key.

In addition, at the infrastructure level, cloud providers face the practical tests of localizing AI compute and meeting green, low-carbon goals. Huawei Cloud's Ascend AI, built on self-developed chips, and Baidu Intelligent Cloud, with its Kunlun chips, can provide a more stable supply of underlying compute, and they should see more opportunities ahead. However, massive computing resources need enough users and usage to pay for them. With several basic models competing, some resources may sit idle, and recovering costs in that situation will test cloud providers' judgment.

The third contradiction lies between MaaS tooling and hands-on teaching.

As a "model factory," the MaaS platform needs to provide a full set of development tools and suites for large models, which has become an industry consensus.

The leading cloud platforms are already well prepared. Huawei Cloud provides the Pangu large model engineering suite, covering data engineering, model development, and application development; it claims that end-to-end development of a hundred-billion-parameter industry model has been cut from 5 months to 1 month, a fivefold overall speedup. Baidu Intelligent Cloud's Qianfan platform provides prefabricated datasets, application paradigms, and other tools to help enterprises apply large models. Tencent Cloud's TI platform also includes a full toolchain covering data labeling, training, evaluation, testing, and deployment.

With tools and platforms this rich, it might seem that putting these "teaching tools" into the hands of industry customers and partners would be enough to industrialize large-model production. Clearly, it is not. Training a vertical large model is no simple task. Some enterprises with advanced digitalization and strong talent pools, such as Kingdee and UFIDA, can put MaaS platforms and tools to use right away.

Many more industry partners and enterprise customers, however, will struggle to meet their customized requirements even with these tools and suites unless they receive in-depth technical guidance and hands-on teaching from product managers, project managers, operations staff, and programmers.

A Tencent Cloud employee once shared a case in which Tencent Cloud worked with China Central Television to build the "CCTV Artificial Intelligence Open Platform." The project involved a large, complex dataset that traditional data labeling systems could not handle. Tencent Cloud therefore rebuilt a media-specific data labeling system and developed a novel "label weight engine" that made labeling more fine-grained and ranked labels by core importance. With this labeling system, video editors can perform cross-modal retrieval using natural language.

Clearly, the MaaS model also requires cloud providers to have ToB service capabilities, which is slow, laborious, exhausting work. Cloud providers cannot count on MaaS tools to let them "make money lying down," at least not at this stage.

The "store in the front and factory in the back" MaaS platform also carries an implicit contradiction: because cloud providers develop applications themselves, they must avoid conflicts of interest with industry partners and developers.

The MaaS platform requires a large number of AI applications, and cloud providers cannot develop them all themselves. They must introduce a developer mechanism, similar to the App Store, to encourage software companies or individual developers to create AI applications based on the cloud platform.

However, what kinds of AI applications can be built on general-purpose large models is still largely uncharted territory, so cloud providers also develop and list some AI applications themselves.

For example, Baidu Intelligent Cloud's Qianfan AI Native Application Store lists Baidu's own applications, such as the Xiling Digital Human live-streaming platform and the Comate code assistant, alongside partner applications such as WPS365 and Wutong Recruitment Assistant. The store also features recommended applications.

Apple's App Store has been criticized by application developers such as Spotify for acting as both referee and player. A MaaS platform under the "store in the front and factory in the back" model that also develops AI applications must therefore dispel developers' concerns: it should build only "creative/representative" applications to inspire others, keep its own business segregated, and co-sell applications to help developers establish commercial channels and earn revenue.

Just as in the mobile internet era, a thriving development ecosystem means content and experiences rich enough to meet user demand. A large user base in turn attracts more developers, creating a "Matthew effect" in which the app store keeps thriving and users and developers find it hard to switch to other platforms.

The same applies to AI-native applications built on large models. According to a Baidu Intelligent Cloud employee, Baidu Intelligent Cloud released the industry's first AI application store because people tend to follow the crowd; right now, speed matters most, and moving first also brings higher customer retention.

For cloud providers, the MaaS model requires ecosystem partners more than ever. Baidu Intelligent Cloud's Qianfan AI Native Application Store, Tencent Cloud's Industry Large Model Ecological Plan, and Huawei Cloud's diverse partner empowerment all indicate that without applications, there is no ecosystem. It is essential to attract as many developers as possible, and this is the key to the success of MaaS.

The competition around MaaS has a bright future, but the road is long and arduous. Once cloud providers commit, costs rise across the board, creating new revenue pressure. If they hold back, however, they risk missing the opportunity of large models and AI-native applications and lose their hope of breaking out of the role of mere infrastructure providers.

For cloud providers, MaaS is not a dilemma between two hard choices but a battle they cannot retreat from. Every difficulty must be overcome, and one day the clouds will part and the moon will shine through.

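Disclaimer: This article represents only the author's personal views and does not represent the position or views of this platform. It is provided for information sharing only and does not constitute investment advice to anyone. Any dispute between users and the author has nothing to do with this platform. If any article or image published on this page involves infringement, please send the relevant proof of rights and proof of identity by email to support@aicoin.com, and the platform's staff will look into it.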