Source: Quantum Bit

Image source: Generated by Wujie AI

The number of AI papers has surged, yet only 4% of the researchers who use AI consider it a true "must-have" tool?!

This conclusion comes from the latest survey by Nature.

More precisely, the figure comes from survey respondents who use AI tools in their research. Nature drew them from more than 40,000 researchers around the world, across many disciplines, who published papers in the last four months of 2022.

The survey also included "insiders" who develop AI tools and "outsiders" who do not use AI in their research, for a total of more than 1,600 respondents.

The results have been published under the title "AI and science: what 1,600 researchers think."

So how do researchers really view AI tools? Let's take a closer look.

How 1,600 Researchers View AI

This survey mainly focused on researchers' views on machine learning and generative AI.

As mentioned above, to keep the results as objective and valid as possible, Nature emailed more than 40,000 scientists around the world who had published papers in the last four months of 2022, and also invited readers of the Nature Briefing to take part.

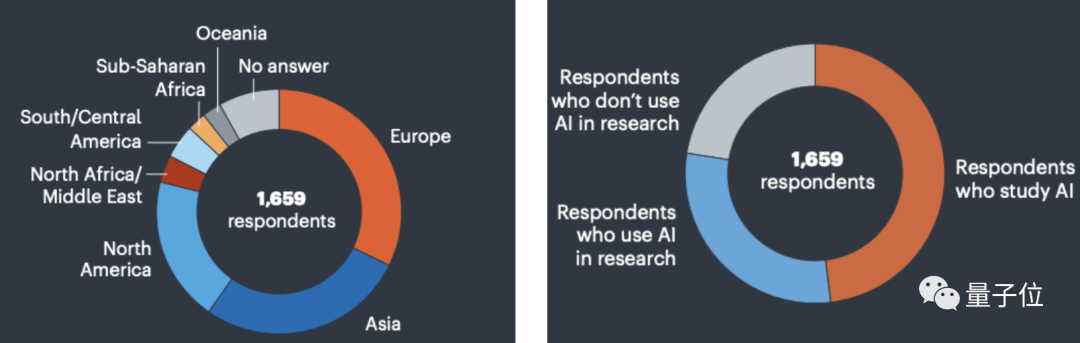

In the end, 1,659 people responded. The sample breaks down as follows:

Most respondents are based in Asia (28%), Europe (nearly one-third), and North America (20%).

Of these, 48% directly develop or study AI, 30% use AI in their research, and 22% do not use AI in their research.

Now let's look at the detailed results.

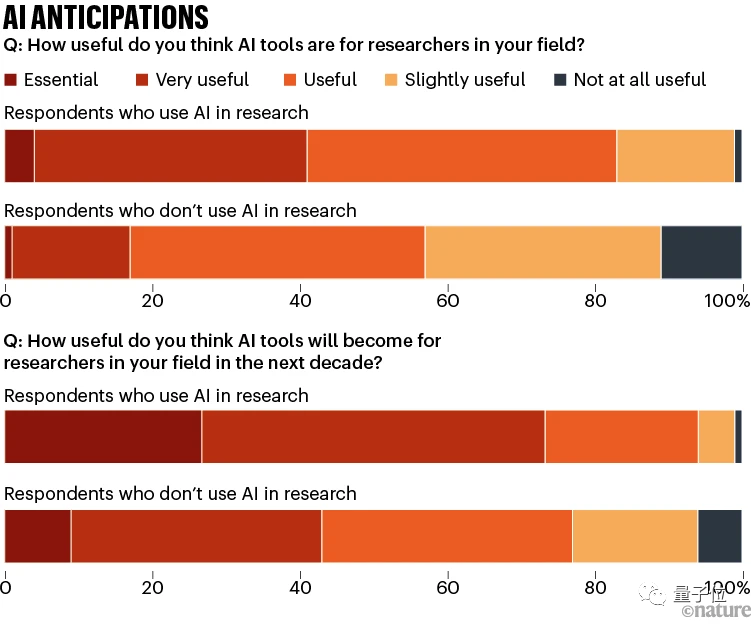

According to the survey, more than a quarter of those who use AI in their research believe that AI tools will become "essential" in their field within the next ten years.

However, only 4% of them think AI tools are already "essential" today, while another 47% think AI will be "very useful" in the future.

Researchers who do not use AI are less enthusiastic. Even so, 9% of them believe these technologies will become "essential" within the next ten years, and another 34% think they will be "very useful."

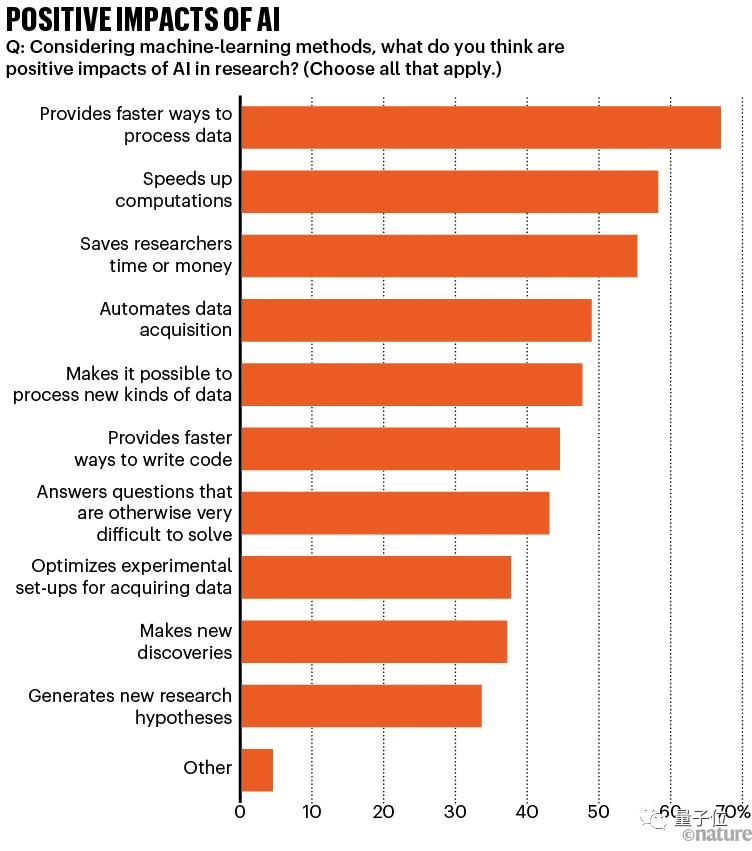

On machine learning, respondents were asked to select the benefits that AI tools bring. Two-thirds said AI provides faster ways to process data, 58% said it speeds up computations that were previously infeasible, and 55% said it saves time and money.

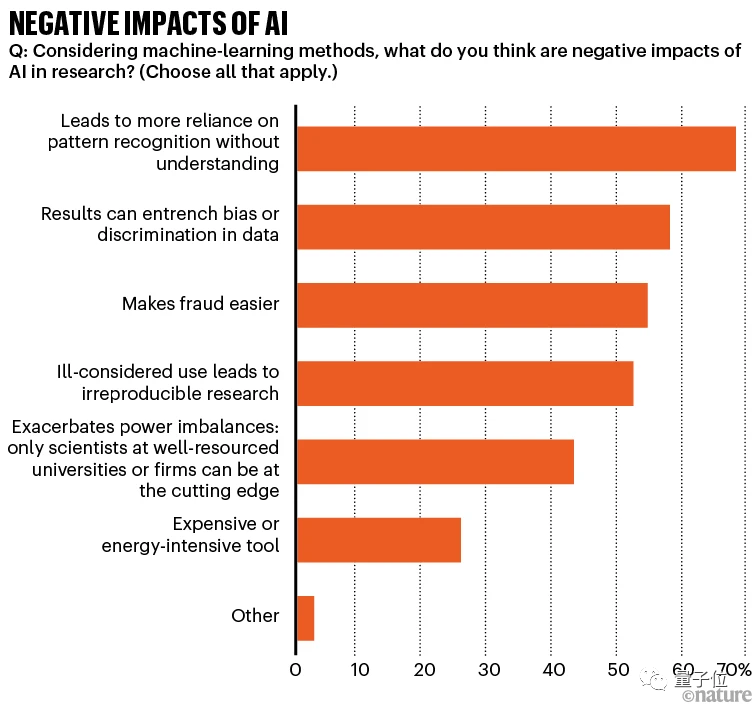

The main drawbacks respondents identified were: greater reliance on pattern recognition at the expense of deep understanding (69%), the risk of entrenching bias or discrimination present in the data (58%), easier fraud (55%), and irreproducible research resulting from ill-considered use (53%).

Next, let's look at researchers' views on generative AI tools.

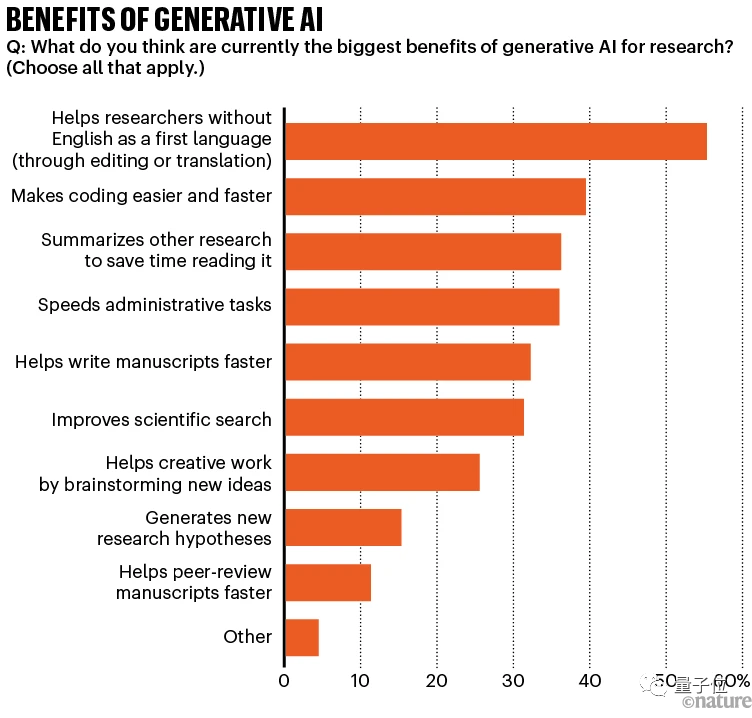

Most respondents saw summarizing and translating as a major advantage of generative AI tools, because these features can help researchers who are not native English speakers improve the grammar and style of their papers. The tools' ability to write code was also widely appreciated.

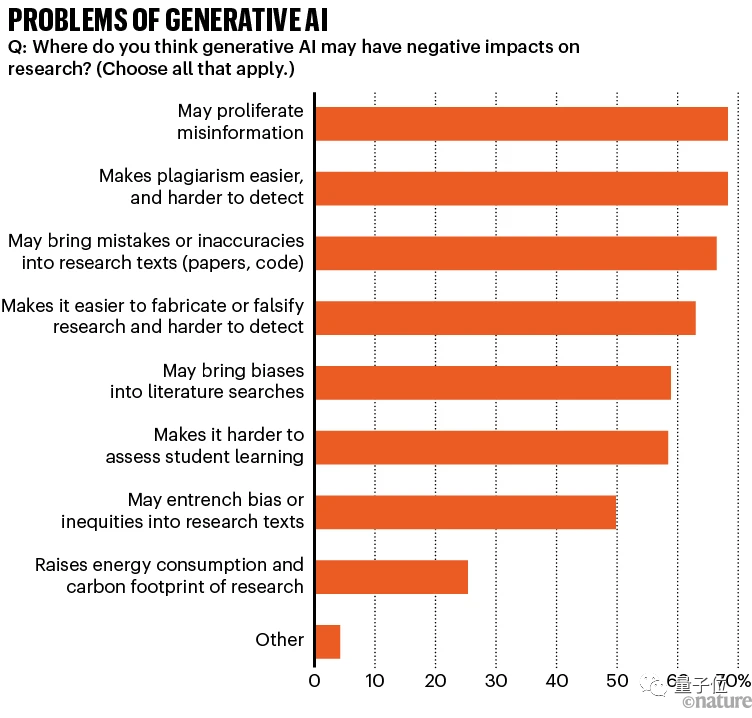

However, generative AI has its problems too. Researchers are most concerned about the spread of misinformation (68%), plagiarism becoming easier and harder to detect (68%), and errors or inaccuracies being introduced into papers and code (66%).

Respondents also raised other worries, such as faked studies, false information, and the perpetuation of bias if AI tools for medical diagnosis are trained on historically biased data.

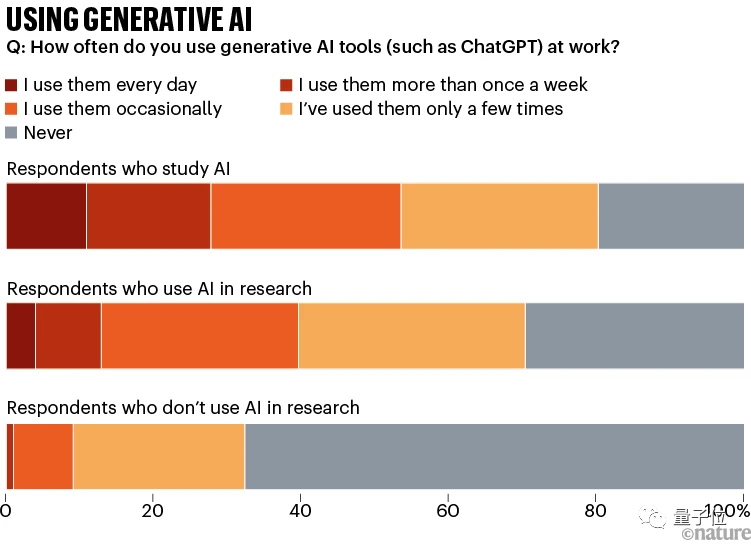

Usage-frequency data also show that, even among researchers who are interested in AI, only a minority use large language models regularly.

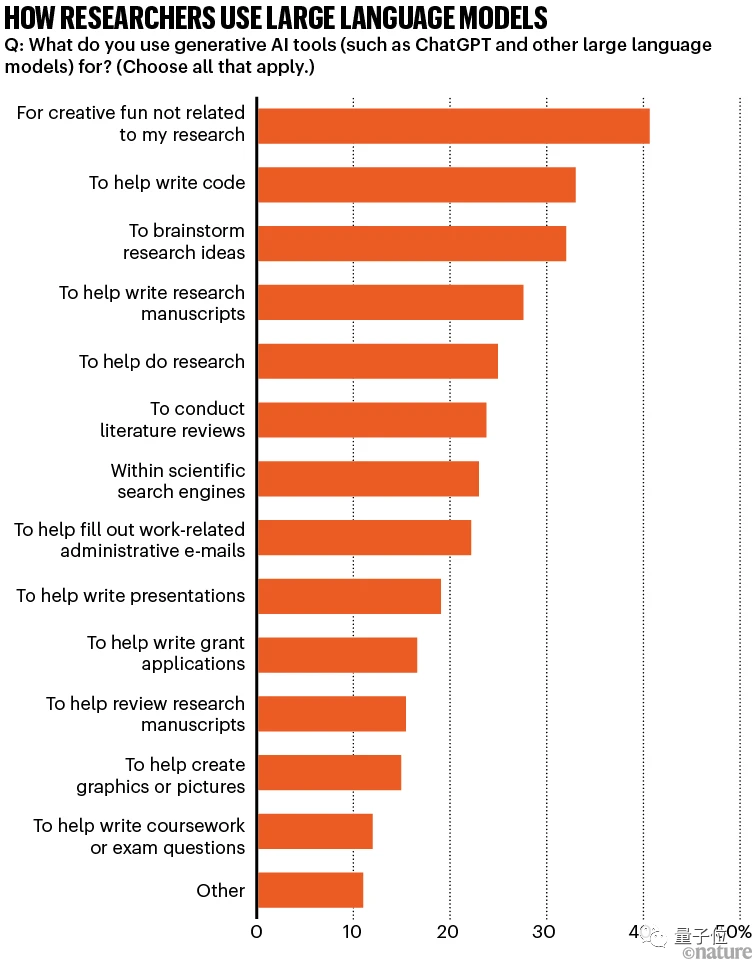

Across all groups, the most common use of AI was creative fun unrelated to research, followed by writing code, brainstorming research ideas, and helping to write papers.

Some scientists were unimpressed by the output of large language models. One researcher who used a large model to help edit papers wrote:

It feels like ChatGPT has replicated all the bad writing habits of humans.

Johannes Niskanen, a physicist at the University of Turku in Finland, said:

If we use AI to read and write articles, science will quickly transition from "for humans by humans" to "for machines by machines."

In this survey, Nature also delved into researchers' views on the challenges facing AI development.

Challenges Facing AI Development

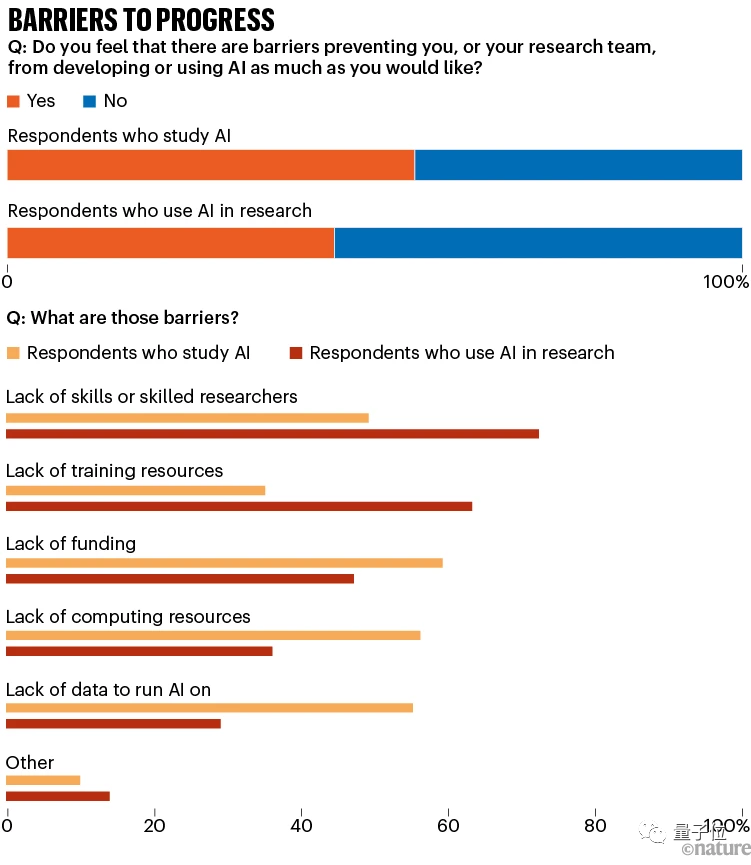

About half of the researchers stated that they have encountered obstacles in developing or using AI.

Researchers who develop AI are most concerned about a lack of computing resources, a lack of research funding, and a shortage of high-quality data for training AI.

Those who work in other fields but use AI in their research are more concerned about a shortage of sufficiently skilled scientists and training resources, and they also raised security and privacy concerns.

Researchers who do not use AI said that they do not need it, that they find it impractical, or that they lack the experience and time to explore these tools.

Notably, respondents were also concerned about big tech companies' dominance of AI computing resources and their ownership of AI tools.

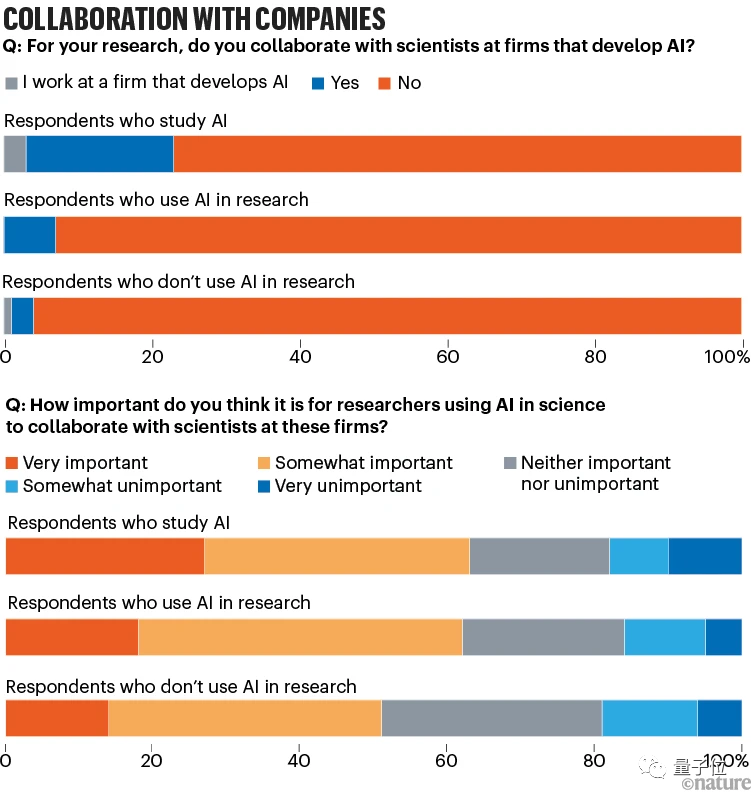

Among AI tool developers, 23% stated that they collaborate with or work at companies developing AI tools (with Google and Microsoft being the most commonly mentioned), while only 7% of those who only use AI have such experience.

Overall, more than half of the respondents believe that it is "very" or "somewhat" important for researchers using AI to collaborate with scientists from these companies.

Beyond development, there are also problems with how AI is used.

Researchers have previously warned that using AI tools blindly in scientific research can lead to erroneous, false, and irreproducible results.

Lior Shamir, a computer scientist at Kansas State University in Manhattan, Kansas, said:

Machine learning can sometimes be useful, but the problems AI causes outweigh the help it provides. Scientists who use AI without understanding what they are doing can end up with false discoveries.

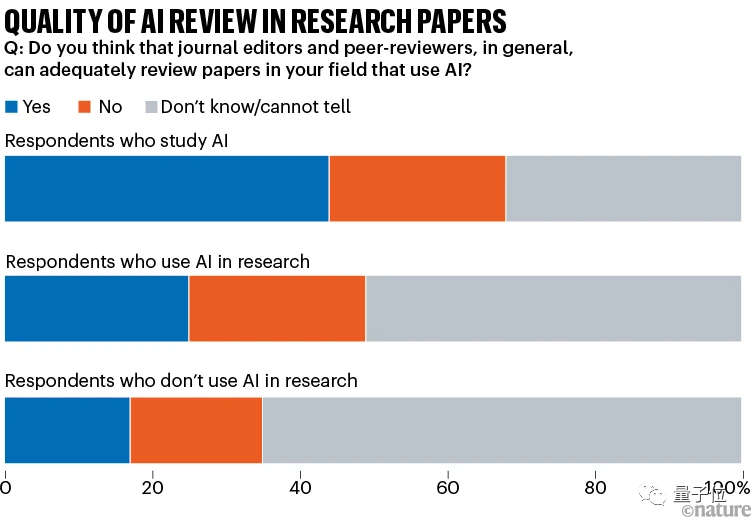

When asked whether journal editors and peer reviewers can adequately review papers that use AI, respondents gave mixed answers.

Among researchers who use AI in their research but do not develop it directly, about half were unsure, a quarter thought review was adequate, and another quarter thought it was inadequate. Researchers who develop AI directly were more positive about the editorial and peer-review process.

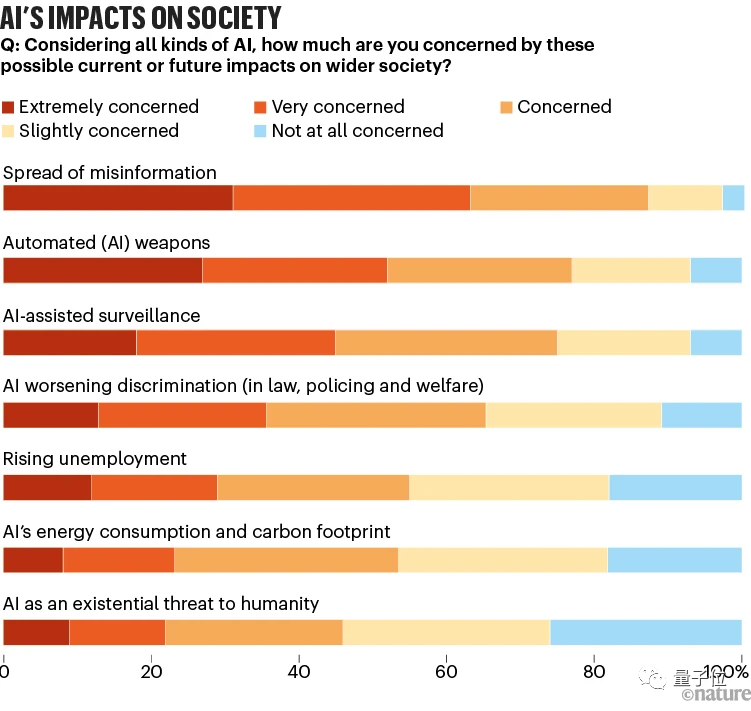

Nature also asked respondents how concerned they were about seven potential impacts of AI on society.

The spread of misinformation topped the list, with two-thirds of respondents saying they were "very concerned" or "concerned."

The issue of least concern was the possibility that AI could pose a threat to humanity's survival.

Reference link: https://www.nature.com/articles/d41586-023-02980-0
