Why are language models lagging behind diffusion models in visual generation? Research from Google and CMU shows that the tokenizer is key.


Large language models (LLMs, or simply LMs) were originally built to generate text, but over time they have learned to generate content in multiple modalities and have begun to dominate areas such as audio, speech, code generation, medical applications, and robotics.

LMs can also generate images and videos. To do so, a visual tokenizer maps image pixels to a sequence of discrete tokens, which are then fed into the LM transformer and modeled in the same way as a text vocabulary. Despite significant progress, LMs still lag behind diffusion models in visual generation. For example, on ImageNet, the standard benchmark for image generation, the best language model scored 48% worse than the best diffusion model (FID of 3.41 vs. 1.79 when generating images at a resolution of 256x256; lower FID is better).
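One way to read the 48% figure, assuming it is the FID gap expressed relative to the language model's own score (the article does not spell out the calculation):

$$\frac{3.41 - 1.79}{3.41} \approx 0.475$$

which rounds to the quoted 48%.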

Why are language models lagging behind diffusion models in visual generation? Researchers from Google and CMU believe that the main reason is the lack of a good visual representation, similar to our natural language systems, to effectively model the visual world. To confirm this hypothesis, they conducted a study.

Paper link: https://arxiv.org/pdf/2310.05737.pdf

The study shows that, given the same training data and a comparable model size and training budget, a masked language model (MLM) equipped with a good visual tokenizer outperforms diffusion models in both fidelity and efficiency on image and video generation benchmarks. This is the first evidence of a language model beating diffusion models on the landmark ImageNet benchmark.

It is important to emphasize that the researchers' goal is not to argue that language models are superior to other models, but to encourage the exploration of visual tokenization methods for LLMs. The fundamental difference between LLMs and other models (such as diffusion models) is that LLMs operate on a discrete latent format, namely the tokens produced by a visual tokenizer. The study shows that the value of these discrete visual tokens should not be overlooked, as they offer the following advantages:

Compatibility with LLMs. The main advantage of a token representation is that it shares the same form as language tokens, so it can directly benefit from the optimizations the community has developed for LLMs over the years, including faster training and inference, advances in model infrastructure, methods for scaling models, and innovations in GPU/TPU optimization. Unifying vision and language in the same token space can lay the foundation for truly multimodal LLMs that can understand, generate, and reason within our visual environment.

Compressed representation. Discrete tokens can offer a new perspective on video compression. Visual tokens can serve as a new video compression format that reduces the disk storage and bandwidth consumed when data is transmitted over the internet. Unlike compressed RGB pixels, these tokens can be fed directly into generative models, bypassing the traditional decompression and encoding steps. This can speed up video applications, especially in edge computing scenarios.

Visual understanding advantage. Previous research has shown that discrete tokens are valuable as a pretraining target in self-supervised representation learning, as discussed in BEiT and BEVT. In addition, the use of tokens as model inputs has been found to improve robustness and generalization.
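To make the compressed-representation point above concrete, here is a back-of-the-envelope sketch of how many bits a token stream needs compared with raw RGB pixels. It assumes fixed-length coding of each token with no further entropy coding; the function names and the clip dimensions, token count, and vocabulary size in the usage note are illustrative assumptions, not figures taken from this article.

```python
def token_stream_bits(num_tokens: int, vocab_size: int) -> int:
    """Bits needed to store num_tokens indices drawn from a vocabulary of
    vocab_size entries, using a fixed number of bits per token."""
    bits_per_token = (vocab_size - 1).bit_length()  # ceil(log2(vocab_size))
    return num_tokens * bits_per_token


def raw_rgb_bits(frames: int, height: int, width: int,
                 bits_per_channel: int = 8) -> int:
    """Bits needed to store an uncompressed RGB clip."""
    return frames * height * width * 3 * bits_per_channel


# Hypothetical clip: 17 frames of 128x128 pixels, tokenized into 1280 tokens
# with a vocabulary of 2**18 entries (all numbers are assumptions).
token_bits = token_stream_bits(1280, 2 ** 18)
pixel_bits = raw_rgb_bits(17, 128, 128)
ratio = pixel_bits / token_bits
```

Under these assumed numbers the token stream is a few hundred times smaller than the raw pixels, although a fair comparison with a real codec would of course be against its compressed bitstream, not raw RGB.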

In this paper, the researchers propose a video tokenizer called MAGVIT-v2, designed to map videos (and images) to compact discrete tokens.

The model builds on MAGVIT, the state-of-the-art video tokenizer within the VQ-VAE framework. On top of it, the researchers make two contributions: 1) a novel lookup-free quantization method that makes it possible to learn a large vocabulary, which in turn improves the generation quality of language models; 2) modifications to MAGVIT, identified through extensive empirical analysis, that not only improve generation quality but also allow images and videos to be tokenized with a shared vocabulary.

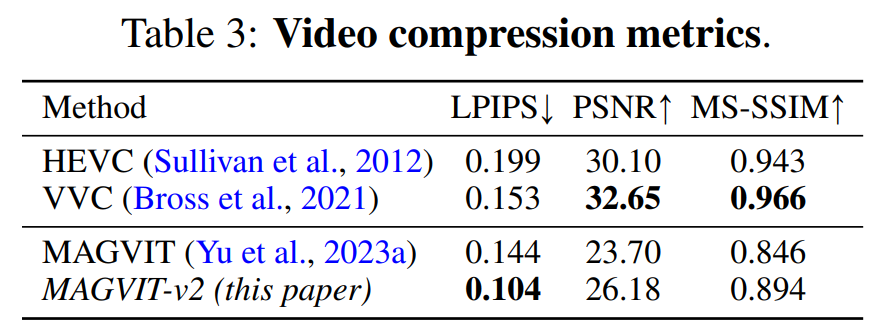

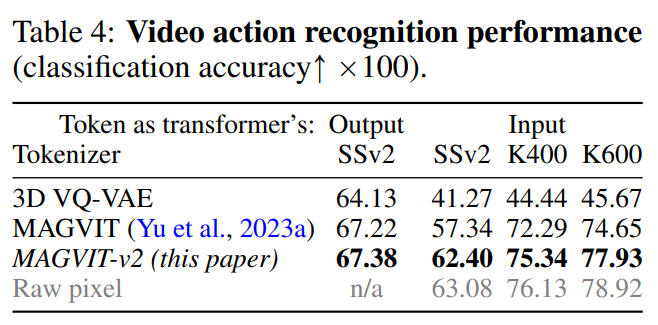

Experimental results show that the new model outperforms MAGVIT, the previous best-performing video tokenizer, in three key areas. First, it significantly improves on MAGVIT's generation quality, setting a new state of the art on common image and video benchmarks. Second, user studies indicate that its compression quality exceeds that of MAGVIT and of the current video compression standard HEVC, and is on par with the next-generation video codec VVC. Finally, the researchers show that the new tokens outperform MAGVIT's on video understanding tasks in two settings and on three datasets.

Method Introduction

The paper introduces a new video tokenizer designed to map the spatio-temporal dynamics of visual scenes to compact discrete tokens suitable for language models. The method is built on top of MAGVIT.

The study then focuses on two novel designs: lookup-free quantization (LFQ) and improvements to the tokenizer model.

Lookup-Free Quantization

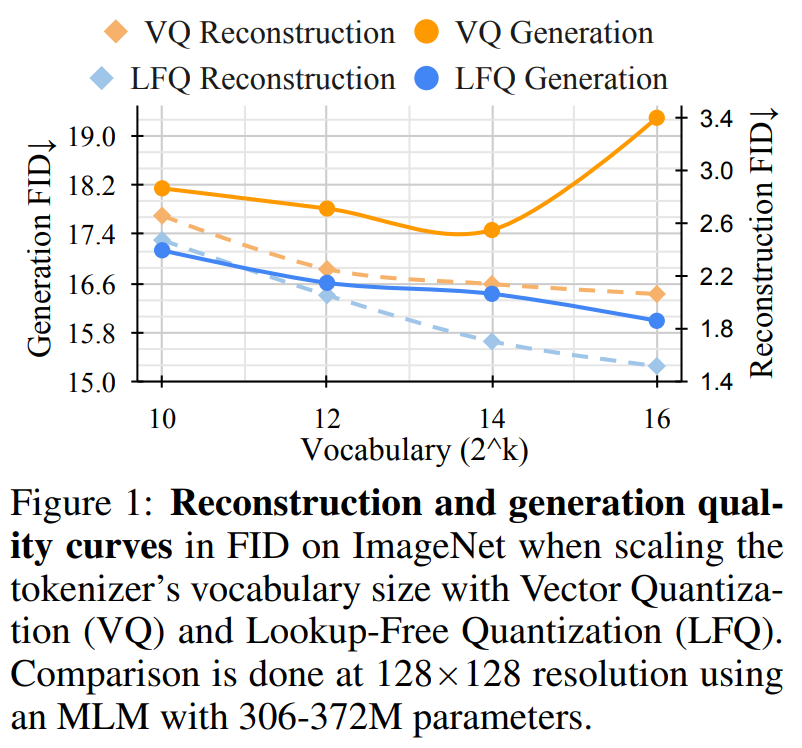

VQ-VAE models have made significant progress recently, but one drawback remains: the relationship between reconstruction quality and downstream generation quality is unclear. It is a common misconception that better reconstruction automatically means better language-model generation. For example, enlarging the vocabulary improves reconstruction quality, but it helps generation only while the vocabulary is small; once the vocabulary becomes very large, language-model performance actually degrades.

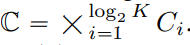

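The paper reduces the embedding dimension of the VQ-VAE codebook to 0, i.e., the codebook $\mathbf{C} \in \mathbb{R}^{K \times d}$ (with $K$ the vocabulary size and $d$ the embedding dimension) is replaced by an integer set $\mathbb{C}$, where $|\mathbb{C}| = K$.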

Unlike VQ-VAE, this design completely eliminates the need for an embedding lookup, hence the name lookup-free quantization (LFQ). The paper finds that, with LFQ, increasing the vocabulary size improves the generation quality of language models. As the blue curve in Figure 1 shows, both reconstruction and generation improve steadily as the vocabulary grows, a property not observed with current VQ-VAE methods.
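For contrast, the embedding lookup that LFQ removes can be sketched as a nearest-neighbour search over a codebook. This is a generic illustration of vector quantization under a squared Euclidean distance, not the authors' code; the function name `vq_lookup` and the toy codebook are assumptions.

```python
from typing import Sequence


def vq_lookup(z: Sequence[float], codebook: Sequence[Sequence[float]]) -> int:
    """Return the index of the codebook entry closest to z.

    The search compares z against every one of the K entries, each of
    dimension d, so its cost grows with both vocabulary size and
    embedding dimension.
    """
    def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(range(len(codebook)),
               key=lambda k: squared_distance(z, codebook[k]))


# Toy codebook with K = 3 entries of dimension d = 2 (illustrative values).
toy_codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 1.0]]
token_a = vq_lookup([0.9, 0.8], toy_codebook)   # closest to [1.0, 1.0]
token_b = vq_lookup([-0.7, 0.9], toy_codebook)  # closest to [-1.0, 1.0]
```

In a real VQ-VAE the codebook entries are learned embeddings; the point here is only that a token is found by searching the codebook, which is the step LFQ does away with.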

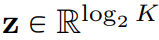

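Many LFQ variants are possible, but the paper discusses a simple one. Specifically, the latent space of LFQ is decomposed into the Cartesian product of single-dimensional variables, i.e., $\mathbb{C} = \times_{i=1}^{\log_2 K} C_i$, where $K$ is the vocabulary size. Given a feature vector $\mathbf{z} \in \mathbb{R}^{\log_2 K}$, each dimension of the quantized representation $q(\mathbf{z})$ is obtained from:

$$q(z_i) = C_{i,j}, \quad j = \arg\min_k \lVert z_i - C_{i,k} \rVert$$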

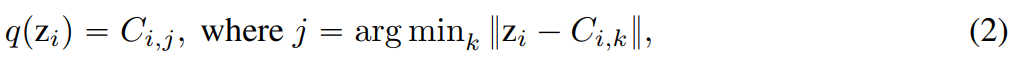

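For LFQ with binary per-dimension codes $C_i = \{-1, 1\}$, the token index of $q(\mathbf{z})$ is:

$$\mathrm{Index}(\mathbf{z}) = \sum_{i=1}^{\log_2 K} 2^{i-1} \, \mathbb{1}\{z_i > 0\}$$

where $z_i$ is the $i$-th dimension of the feature vector and $K$ is the vocabulary size.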
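As a minimal sketch of lookup-free quantization, assume each latent dimension is quantized independently to a binary code in {-1, +1} according to its sign, so that a vector with n dimensions yields one of 2^n tokens. The function names below are hypothetical and the code is an illustration of the idea, not the paper's implementation.

```python
from typing import List, Sequence


def lfq_quantize(z: Sequence[float]) -> List[int]:
    """Quantize each dimension to +1 if it is positive, otherwise -1."""
    return [1 if z_i > 0 else -1 for z_i in z]


def lfq_index(z: Sequence[float]) -> int:
    """Token index: dimension i (counting from 0) contributes 2**i
    whenever z_i is positive, so the index is the binary number whose
    bits are the signs of z."""
    return sum(1 << i for i, z_i in enumerate(z) if z_i > 0)


def lfq_decode(index: int, num_dims: int) -> List[int]:
    """Recover the +1/-1 code from a token index by reading its bits;
    no embedding table is consulted."""
    return [1 if (index >> i) & 1 else -1 for i in range(num_dims)]


z = [0.3, -1.2, 0.0, 2.5]          # 4 latent dimensions, vocabulary of 2**4
code = lfq_quantize(z)
token = lfq_index(z)
roundtrip = lfq_decode(token, len(z))
```

Because the token index is just the bit pattern of the signs, growing the vocabulary means adding latent dimensions rather than enlarging a table of learned embeddings.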

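In addition, the paper introduces an entropy penalty during training to encourage codebook utilization:

$$\mathcal{L}_{\text{entropy}} = \mathbb{E}[H(q(\mathbf{z}))] - H[\mathbb{E}(q(\mathbf{z}))]$$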
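An entropy penalty of this kind is commonly written as the average per-sample entropy minus the entropy of the batch-averaged distribution. The sketch below computes that quantity under the assumption that each sample already comes with a probability distribution over codes; how those probabilities are derived from the encoder output is not covered here, and the function names are hypothetical.

```python
import math
from typing import Sequence


def entropy(p: Sequence[float]) -> float:
    """Shannon entropy in nats, treating 0 * log(0) as 0."""
    return -sum(x * math.log(x) for x in p if x > 0)


def entropy_penalty(batch_probs: Sequence[Sequence[float]]) -> float:
    """Mean per-sample entropy minus entropy of the mean distribution.

    Minimizing the first term pushes each sample toward a confident
    code assignment; minimizing the negated second term pushes the
    batch as a whole to spread across many codes.
    """
    n = len(batch_probs)
    mean_sample_entropy = sum(entropy(p) for p in batch_probs) / n
    mean_distribution = [sum(column) / n for column in zip(*batch_probs)]
    return mean_sample_entropy - entropy(mean_distribution)


# Confident and diverse: each sample picks a different code.
diverse = entropy_penalty([[1.0, 0.0], [0.0, 1.0]])
# Confident but collapsed: every sample picks the same code.
collapsed = entropy_penalty([[1.0, 0.0], [1.0, 0.0]])
```

In this toy case the diverse batch gets a lower (more negative) penalty than the collapsed one, which is the behaviour one would want from a term meant to keep the whole vocabulary in use.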

Improvements to the Visual Tokenizer Model

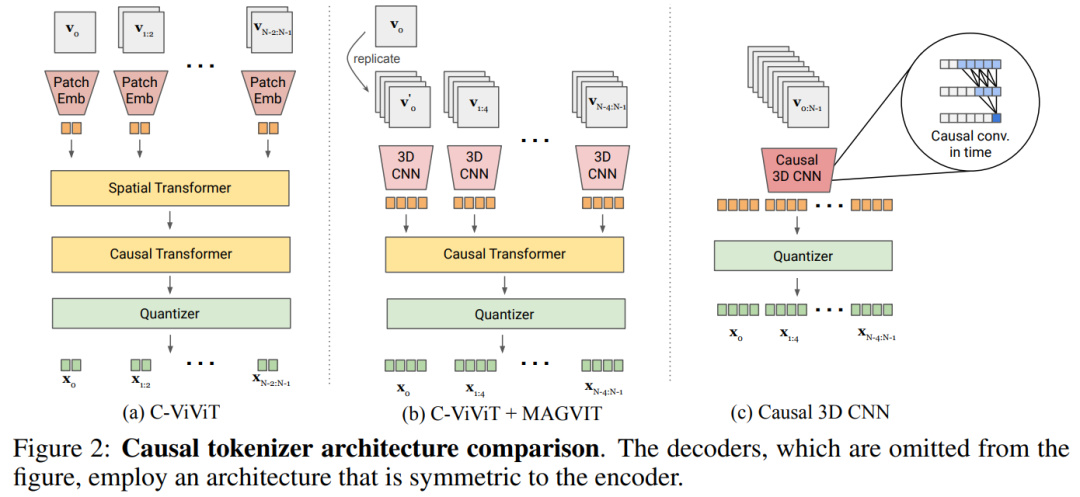

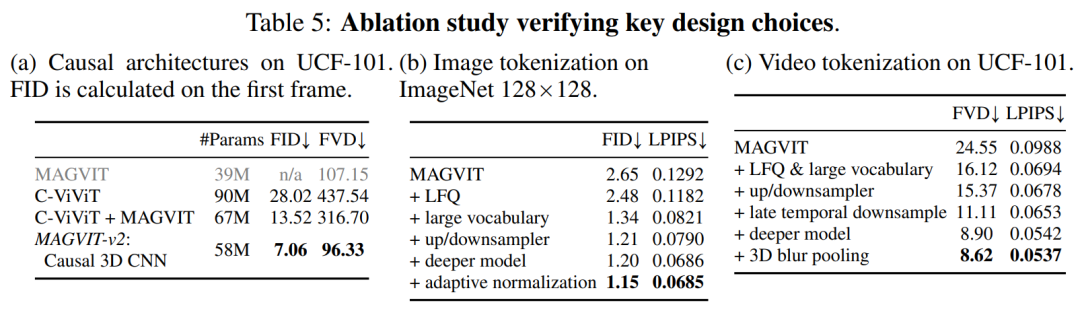

Joint image-video tokenization. Building a joint image-video tokenizer requires a new design. The paper finds that 3D CNNs perform better than spatial transformers.

The paper explores two feasible designs: Figure 2b combines C-ViViT with MAGVIT, and Figure 2c replaces the regular 3D CNN with temporally causal 3D convolutions.

Table 5a provides an empirical comparison of the designs in Figure 2, showing that causal 3D CNN performs the best.
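The idea of temporal causality can be illustrated along the time axis alone. The sketch below assumes the usual definition: the input is padded with k - 1 frames before the sequence and none after, so each output depends only on the current and earlier frames. It is a one-dimensional simplification with zero padding and scalar "frames", all of which are assumptions for illustration; the function name is hypothetical.

```python
from typing import List, Sequence


def causal_temporal_conv(frames: Sequence[float],
                         kernel: Sequence[float]) -> List[float]:
    """Convolve along time with k - 1 zeros padded before the first frame
    and nothing after the last, so output t never sees frames after t."""
    k = len(kernel)
    padded = [0.0] * (k - 1) + list(frames)
    return [
        sum(kernel[j] * padded[t + j] for j in range(k))
        for t in range(len(frames))
    ]


kernel = [1.0, 2.0, 3.0]
out_a = causal_temporal_conv([1.0, 2.0, 3.0, 4.0], kernel)
# Same first two frames, different later frames.
out_b = causal_temporal_conv([1.0, 2.0, 9.0, 9.0], kernel)
# A single frame, i.e. an image treated as a one-frame video.
out_image = causal_temporal_conv([1.0], kernel)
```

Changing later frames leaves the earlier outputs untouched, and the first output depends on the first frame only. That property is what lets a single image be handled as a one-frame video by the same network.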

In addition to using causal 3D CNN layers, the paper makes several other architectural modifications to improve on MAGVIT, such as changing the encoder downsamplers from average pooling to strided convolution and adding an adaptive group normalization layer before the residual blocks at each resolution in the decoder.

Experimental Results

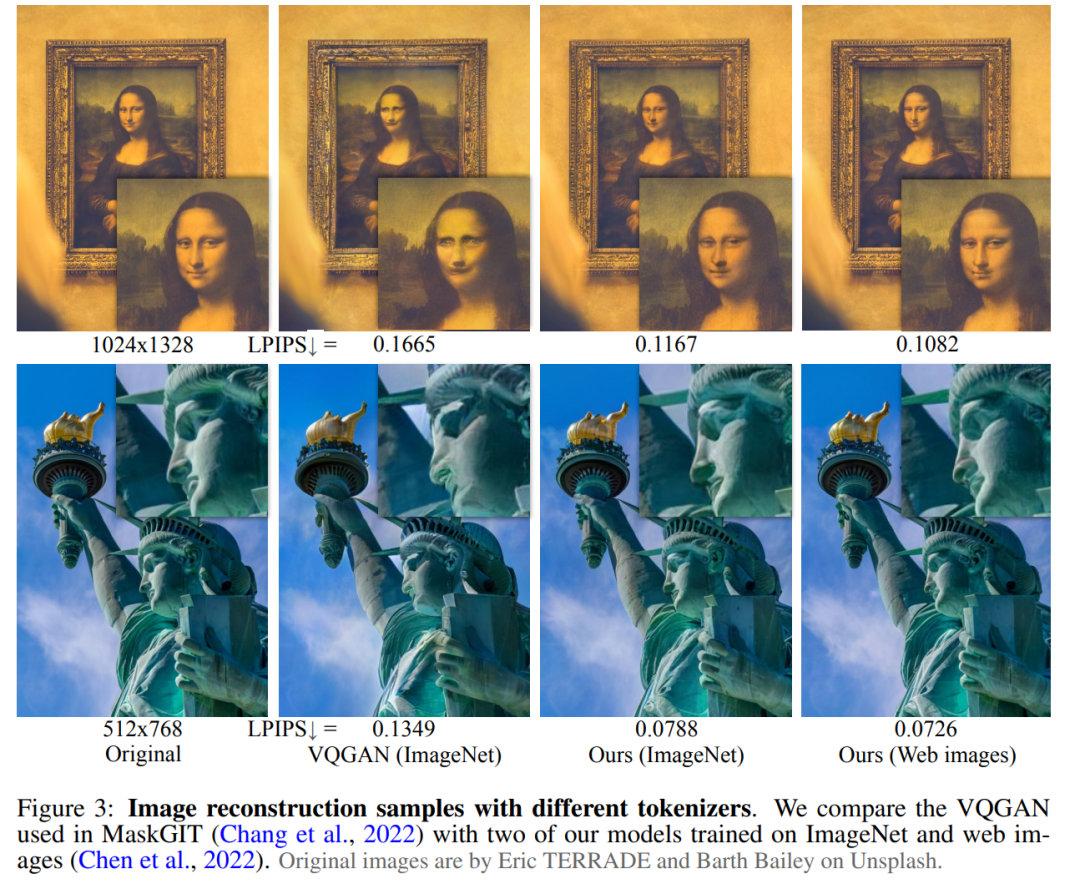

The experiments validate the proposed tokenizer in three areas: video and image generation, video compression, and action recognition. Figure 3 visually compares the tokenizer with results from previous studies.

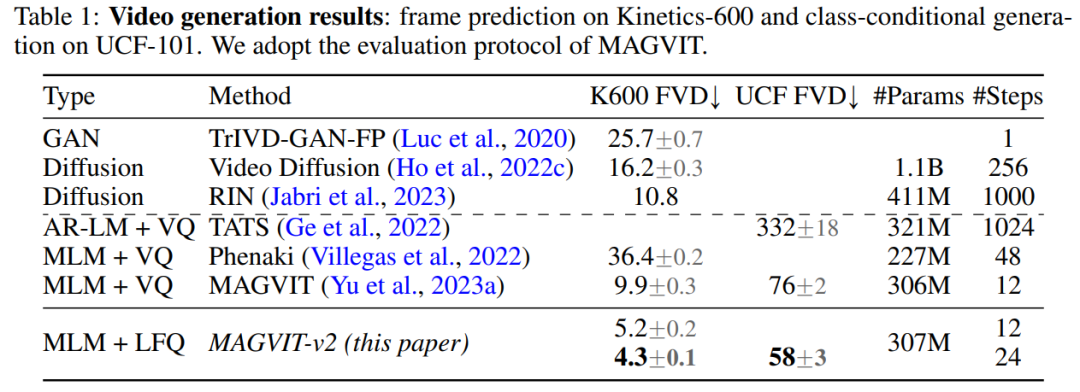

Video generation. Table 1 shows that the model in this paper surpasses all existing techniques in two benchmark tests, demonstrating the important role of a good visual tokenizer in enabling LM to generate high-quality videos.

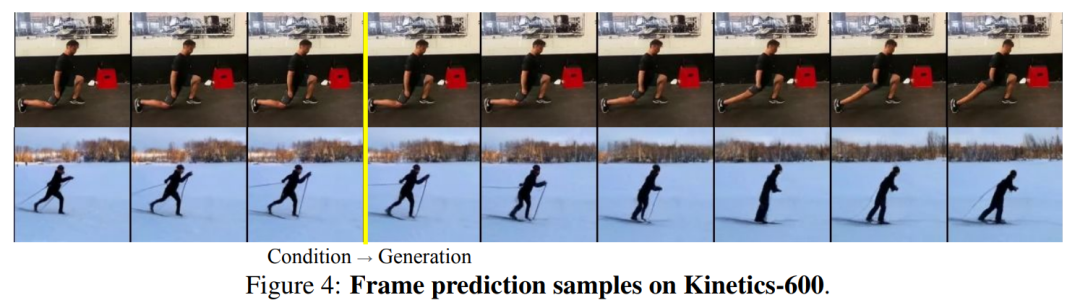

Figure 4 shows qualitative samples of the model.

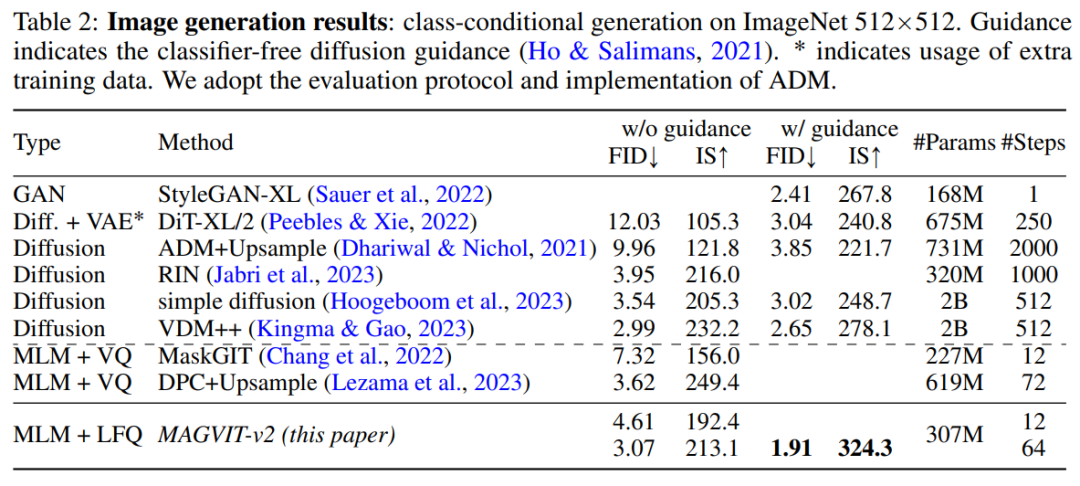

Image generation. The paper evaluates MAGVIT-v2's image generation under the standard ImageNet class-conditional setting. The results indicate that the model outperforms the best-performing diffusion model in both sampling quality (FID and IS) and inference efficiency (number of sampling steps).

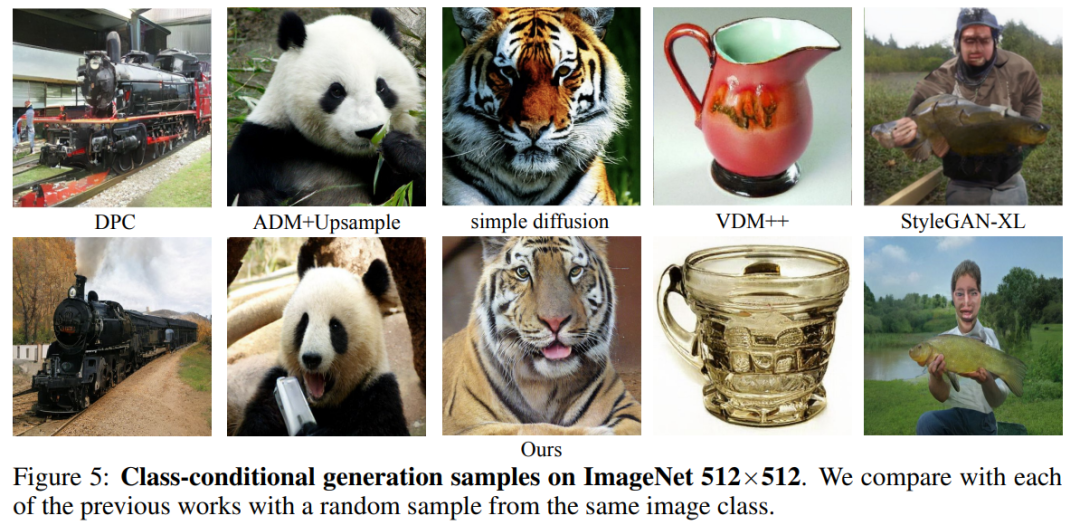

Figure 5 shows the visualization results.

Video compression. As shown in Table 3, the model in this paper outperforms MAGVIT on all metrics and surpasses all methods in LPIPS.

Video understanding. As shown in Table 4, MAGVIT-v2 outperforms the previous best MAGVIT in these evaluations.
