AI tools that make images look better often distort them, while tools that keep images faithful to reality often produce less appealing results. How should the two be balanced?

Image source: Generated by Wujie AI

In suspense and science fiction works, we often see a scene like this: a blurry photo appears on a computer screen, an investigator asks for it to be enhanced, and the image magically becomes clear, revealing important clues.

It looks great, but for decades it has been pure fiction. Even as AI's generative capabilities began to grow, it remained out of reach: "If you simply enlarge the image, it will become blurry. There will indeed be a lot of detail, but it will all be wrong," said Bryan Catanzaro, vice president of applied deep learning research at NVIDIA.

More recently, researchers have begun to incorporate AI algorithms into image enhancement tools, making the process more convenient and powerful, though there are still limits to how much data can be retrieved from any image. As researchers push enhancement algorithms further, they are finding new ways to cope with those limits and, in some cases, to overcome them.

Over the past decade, researchers have started using generative adversarial networks (GANs) to enhance images, producing detailed and impressive pictures.

Tomer Michaeli, an electrical engineer at the Technion - Israel Institute of Technology, said, "The images suddenly look much better." But he was surprised to find that GAN-generated images showed high distortion, a measure of how far an enhanced image departs from the underlying reality it depicts. GAN-generated images look natural and attractive, but they invent, or "hallucinate," inaccurate details, which leads to high distortion.

Michaeli observed that the field of photo restoration had split into two camps: one showed beautiful images, many of them generated by GANs; the other showed data but few images, because its images did not look good.

In 2017, Michaeli and his graduate student Yochai Blau formally examined how various image enhancement algorithms perform in terms of distortion and perceptual quality, using a known measure of perceptual quality that correlates with human subjective judgment. As Michaeli expected, some algorithms achieved very high visual quality, while others were very accurate, with low distortion. But none had both advantages at once; you have to choose one. This is known as the perception-distortion trade-off.
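
A toy numerical sketch can show why accuracy and realism pull apart. It is not taken from Michaeli and Blau's paper: the random black-and-white "texture," the use of mean squared error as the distortion measure, and the `texture_gap` helper (a crude stand-in for perceptual quality) are all assumptions chosen for illustration.

```python
import random
import statistics

random.seed(0)
N = 4096  # number of pixels in a hypothetical image patch

# Hypothetical ground truth: a fine texture of -1/+1 pixels whose detail
# is assumed to be completely lost in the low-resolution input.
truth = [random.choice((-1.0, 1.0)) for _ in range(N)]

# Estimate A: play it safe and output the average value everywhere.
# This is accurate on average but has no texture at all (it looks blurry).
blurry = [0.0] * N

# Estimate B: invent a texture with the same statistics as the truth.
# It looks realistic, but every pixel is a guess unrelated to the true pixel.
hallucinated = [random.choice((-1.0, 1.0)) for _ in range(N)]


def mse(estimate, reference):
    """Distortion: mean squared error against the ground truth."""
    return sum((e - r) ** 2 for e, r in zip(estimate, reference)) / len(reference)


def texture_gap(estimate, reference):
    """Crude realism proxy (an assumption): difference in pixel spread."""
    return abs(statistics.pstdev(estimate) - statistics.pstdev(reference))


for name, estimate in (("blurry", blurry), ("hallucinated", hallucinated)):
    print(name, round(mse(estimate, truth), 3), round(texture_gap(estimate, truth), 3))
```

In this sketch the flat estimate has the lower distortion but none of the truth's texture, while the invented texture matches the truth's statistics at roughly twice the distortion. Neither estimate wins on both counts, which is the kind of choice the trade-off describes.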

Michaeli also challenged other researchers to devise algorithms that produce the best image quality at a given level of distortion, so that the algorithms that make beautiful pictures and those that post good statistics can be compared fairly. Since then, hundreds of AI researchers have reported both the distortion and the perceptual quality of their algorithms, citing the paper in which Michaeli and Blau described the trade-off.

The perception-distortion trade-off is not always a serious problem. For example, NVIDIA found that high-definition screens did not render some lower-definition visual content well, so in February 2023 it released a tool that uses deep learning to upscale streaming video. In this case, NVIDIA's engineers chose perceptual quality over accuracy, accepting that when the algorithm raises the video's resolution, it will generate some visual details that were not in the original video.

"The model is hallucinating. It's all guesswork," Catanzaro said. "Most of the time, it's okay for the super-resolution model to guess wrong, as long as it's consistent."

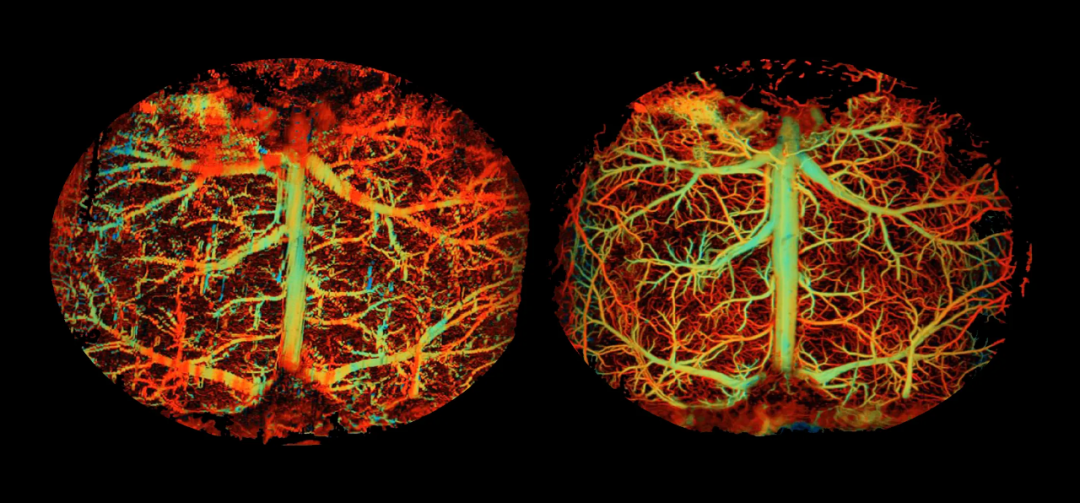

A view of blood flow in a mouse brain (left) and the same view after an AI tool was used to improve image quality and accuracy (right). Image source: Junjie Yao and Xiaoyi Zhu, Duke University.

Applications in research and medicine, in particular, demand much higher accuracy. AI technology has made significant advances in imaging, but Junjie Yao, a biomedical engineer at Duke University, said, "It sometimes brings unwanted side effects, such as overfitting or adding false features, so it needs to be treated extremely carefully."

Last year, he described how AI tools can improve existing methods for measuring blood flow and metabolism in the brain while staying safely on the accurate side of the perception-distortion trade-off.

One way around the limit on how much data can be extracted from an image is simply to combine data from more images. Researchers who study the environment through satellite imagery have made progress in integrating visual data from different sources: in 2021, researchers from China and the UK merged data from two different types of satellites to better observe deforestation in the Congo Basin, the world's second-largest tropical rainforest and one of the most biodiverse regions. The researchers took data from two Landsat satellites, which have been measuring deforestation for decades, and used deep learning to sharpen the image resolution from 30 meters to 10 meters. They then merged that set of images with data from two Sentinel-2 satellites, which have slightly different detector arrays. Their experiments showed that the combined imagery "detects 11% to 21% more disturbed areas than when using Sentinel-2 or Landsat-7/8 images alone."

Michaeli has proposed another way to deal with hard limits on the accessibility of information when they cannot be overcome directly. Rather than settling on one definitive answer for how to enhance a low-quality image, a model can show several different interpretations of the original. In the paper "Explorable Super Resolution," he demonstrated how an image enhancement tool can offer users multiple suggestions. A blurry, low-resolution image of a person wearing what appears to be a gray shirt can be reconstructed into a higher-resolution image in which the shirt has black-and-white vertical stripes, horizontal stripes, or checks, all of which are equally plausible.
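
The gray-shirt example can be reproduced in miniature. The sketch below is only an illustration, not the method from "Explorable Super Resolution": it assumes that the low-resolution image is formed by averaging each 2x2 block of pixels, and the pattern names and `downsample` helper are hypothetical.

```python
def downsample(image, factor=2):
    """Average each factor x factor block into one low-resolution pixel."""
    size = len(image) // factor
    return [
        [
            sum(
                image[r * factor + dr][c * factor + dc]
                for dr in range(factor)
                for dc in range(factor)
            ) / factor ** 2
            for c in range(size)
        ]
        for r in range(size)
    ]


SIZE = 4  # side length of the tiny high-resolution patches (0.0 = black, 1.0 = white)

# Three different high-resolution "shirts".
patterns = {
    "vertical stripes": [[float(c % 2) for c in range(SIZE)] for r in range(SIZE)],
    "horizontal stripes": [[float(r % 2) for c in range(SIZE)] for r in range(SIZE)],
    "checks": [[float((r + c) % 2) for c in range(SIZE)] for r in range(SIZE)],
}

# Every pattern collapses to the same uniform gray after downsampling.
low_res = {name: downsample(image) for name, image in patterns.items()}
for name, image in low_res.items():
    print(name, image)
```

Under this assumed downsampling model, all three patterns produce an identical gray patch, so the low-resolution image alone cannot say which one was photographed. Showing all consistent candidates is the honest response to that ambiguity.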

In another example, Michaeli took a low-quality photo of a license plate and ran it through an AI image enhancer, which showed that one digit on the plate most likely looked like a 0. But when the image was processed by a different, more open-ended algorithm that Michaeli designed, the digit could plausibly be a 0, a 1, or an 8. This approach can help rule out other digits without wrongly concluding that the digit is a 0.
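
The difference between the two ways of reporting a result can be sketched in a few lines. The scores, the threshold, and both helper functions below are hypothetical and are not drawn from Michaeli's algorithm; they only illustrate reporting a set of plausible digits instead of a single best guess.

```python
# Hypothetical plausibility scores for one blurry character (not real data).
scores = {"0": 0.40, "1": 0.30, "8": 0.25, "3": 0.03, "7": 0.02}


def single_answer(scores):
    """Report only the most plausible digit, hiding the uncertainty."""
    return max(scores, key=scores.get)


def plausible_set(scores, threshold=0.10):
    """Report every digit whose score clears an assumed threshold."""
    return sorted(digit for digit, score in scores.items() if score >= threshold)


best = single_answer(scores)
candidates = plausible_set(scores)
ruled_out = sorted(set(scores) - set(candidates))

print("single answer:", best)
print("still plausible:", candidates)
print("ruled out:", ruled_out)
```

With these made-up scores, the single-answer report commits to one digit, while the set-based report keeps three candidates open and still excludes the rest.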

These hallucinations can be mitigated, but the powerful, crime-solving "enhance" button remains a dream.

As different disciplines grapple with the perception-distortion trade-off in their own ways, the core question remains how much information can be extracted from AI-enhanced images and how far those images can be trusted.

"We should remember that in order to output these beautiful images, the algorithm is just fabricating details," Michaeli said.

Original article link: https://www.quantamagazine.org/the-ai-tools-making-images-look-better-20230823/
