DISC-MedLLM shows clear advantages in evaluations of single-round Q&A and multi-round dialogue for medical health consultation.

With the rise of remote medical care, online consultation has become the preferred option for patients seeking convenient and efficient medical support. Recently, large language models (LLMs) have demonstrated powerful natural language interaction capabilities, raising hopes that health care assistants could become part of everyday life.

Medical health consultation scenarios are usually complex. A personal assistant needs rich medical knowledge, the ability to understand a patient's intent over multiple rounds of dialogue, and the ability to give professional, detailed responses. General-purpose language models often decline to answer or drift off-topic in medical consultations because they lack medical knowledge. They also tend to focus on answering the current turn and lack satisfactory multi-round inquiry capabilities. In addition, high-quality Chinese medical datasets are scarce, which makes it hard to train strong language models for the medical field.

Fudan University's Data Intelligence and Social Computing Laboratory (FudanDISC) has released DISC-MedLLM, a Chinese medical health personal assistant. In evaluations of single-round Q&A and multi-round dialogue in medical health consultations, the model shows significant advantages over existing large medical dialogue models. The research team has also publicly released DISC-Med-SFT, a high-quality supervised fine-tuning (SFT) dataset containing 470,000 samples. The model parameters and technical report are open source as well.

- Homepage: https://med.fudan-disc.com

- GitHub: https://github.com/FudanDISC/DISC-MedLLM

- Technical Report: https://arxiv.org/abs/2308.14346

1. Sample Display

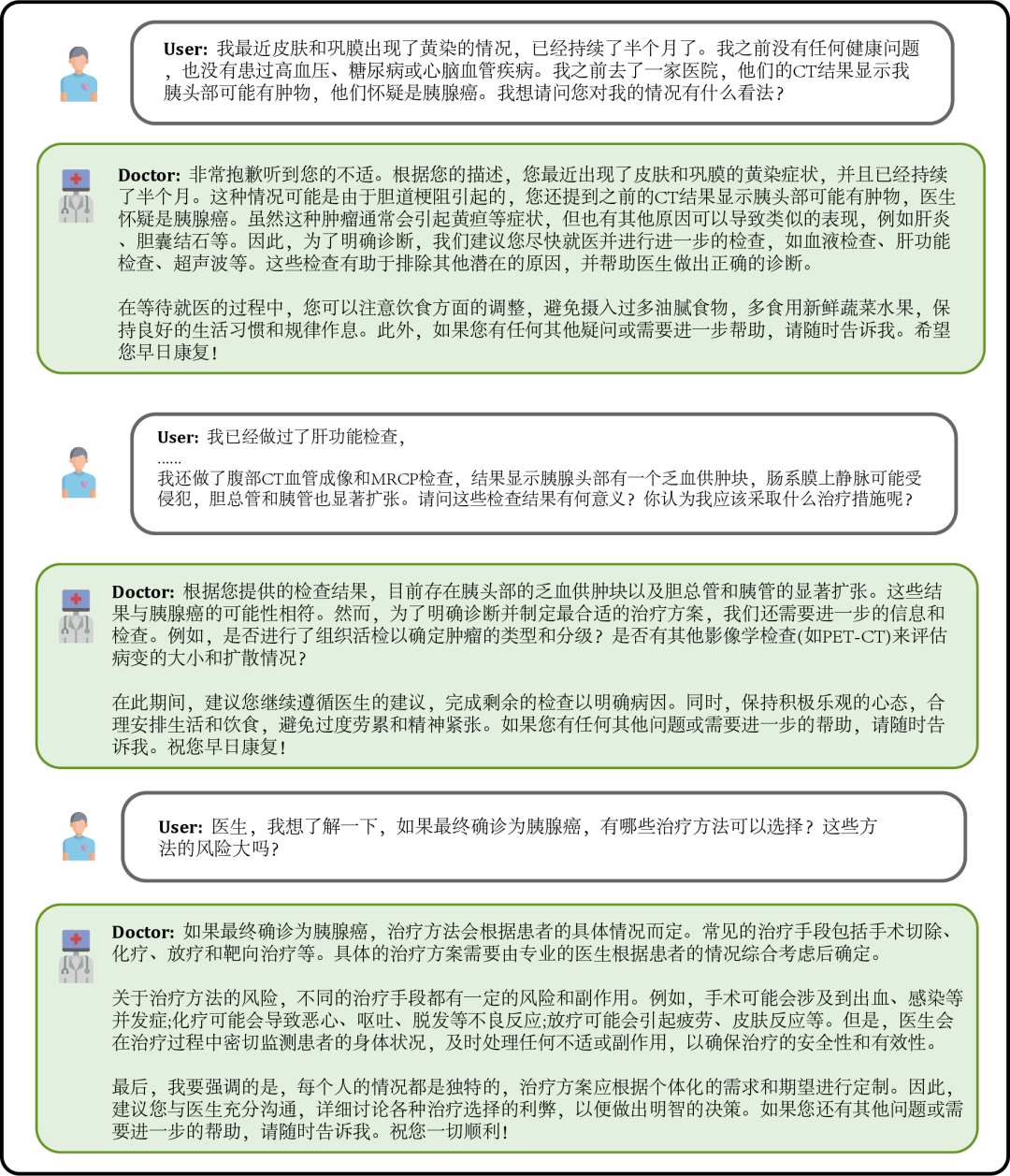

Figure 1: Dialogue Example

When patients feel unwell, they can consult the model and describe their symptoms. The model then offers possible causes, recommended treatment plans, and similar information as a reference. When information is lacking, the model actively asks for a more detailed description of the symptoms.

Figure 2: Dialogue in Consultation Scene

Users can also ask the model specific consulting questions based on their own health conditions, and the model will provide detailed and helpful replies. When information is lacking, the model will actively ask for more details to enhance the relevance and accuracy of the response.

Figure 3: Dialogue Based on Personal Health Conditions

Users can also ask about medical knowledge unrelated to their own health, and the model will answer as professionally as possible to help them understand the topic fully and accurately.

Figure 4: Inquiry about Unrelated Medical Knowledge

2. Introduction to DISC-MedLLM

DISC-MedLLM is a medical LLM obtained by training the general-domain Chinese LLM Baichuan-13B on the high-quality dataset DISC-Med-SFT. Notably, our training data and methods can be adapted to any base LLM.

DISC-MedLLM has three key features:

- Reliable and rich professional knowledge. We use a medical knowledge graph as the information source, sample triplets from it, and use the language capabilities of a general LLM to construct dialogue samples.

- Inquiry capability for multi-round dialogue. We use real consultation dialogue records as the information source and use an LLM to reconstruct the dialogue, requiring the model to stay fully aligned with the medical information in the original dialogue during construction.

- Replies aligned with human preferences. Patients hope to receive more comprehensive support and background knowledge during a consultation, but answers from human doctors are often concise. Through manual screening, we construct a small, high-quality set of instruction samples that matches patients' needs.

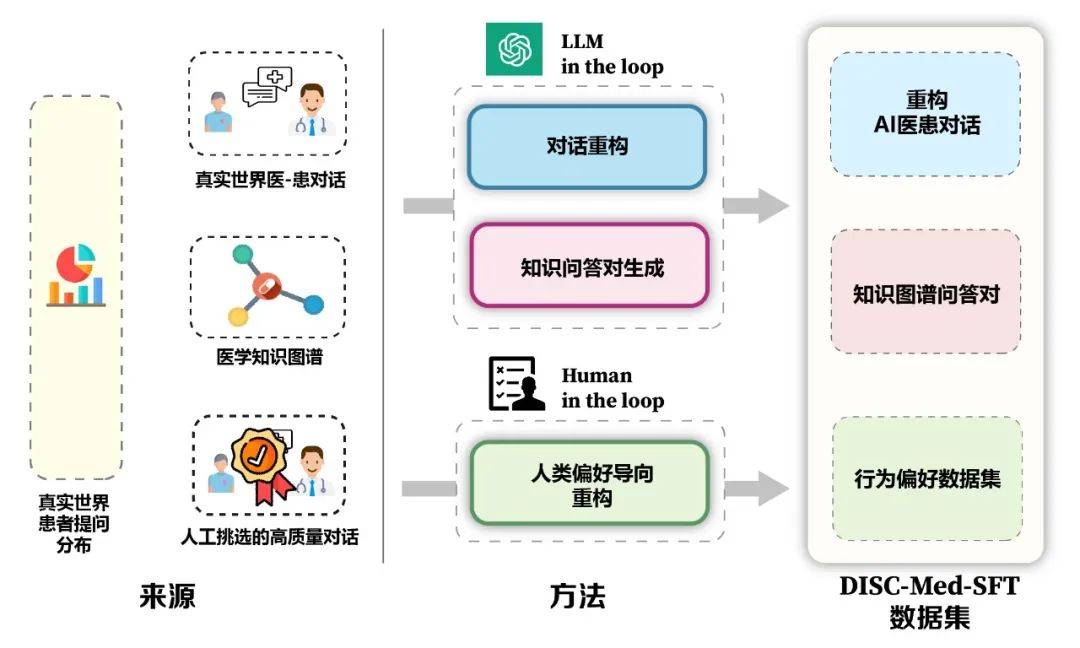

The model's advantages and data construction framework are shown in Figure 5. We compute the distribution of patient cases from real consultation scenarios and use it to guide sample construction for the dataset. Based on medical knowledge graphs and real consultation data, we use two approaches, LLM-in-the-loop and human-in-the-loop, to construct the dataset.

Figure 5: Construction of DISC-Med-SFT
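As an illustration of how a distribution measured on real consultations could guide sample construction, the sketch below splits a sample budget across categories in proportion to observed frequencies. It is a minimal sketch under stated assumptions, not the authors' procedure: the function name `allocate_by_distribution`, the department labels, and the largest-remainder rounding are all hypothetical choices made for this example.

```python
from collections import Counter


def allocate_by_distribution(observed_labels, total_samples):
    """Split total_samples across categories in proportion to observed frequencies."""
    counts = Counter(observed_labels)
    n = sum(counts.values())
    # Ideal (fractional) share for each category.
    raw = {label: total_samples * c / n for label, c in counts.items()}
    # Round down first, then hand the leftover slots to the largest remainders
    # so the quotas add up exactly to total_samples.
    quotas = {label: int(share) for label, share in raw.items()}
    leftover = total_samples - sum(quotas.values())
    by_remainder = sorted(raw, key=lambda label: raw[label] - quotas[label], reverse=True)
    for label in by_remainder[:leftover]:
        quotas[label] += 1
    return quotas


# Hypothetical department labels taken from consultation records.
records = ["internal medicine"] * 5 + ["pediatrics"] * 3 + ["dermatology"] * 2
quotas = allocate_by_distribution(records, 100)
```

With quotas like these, each category can be sampled separately so the constructed dataset roughly mirrors the observed mix of cases.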

3. Method: Construction of Dataset DISC-Med-SFT

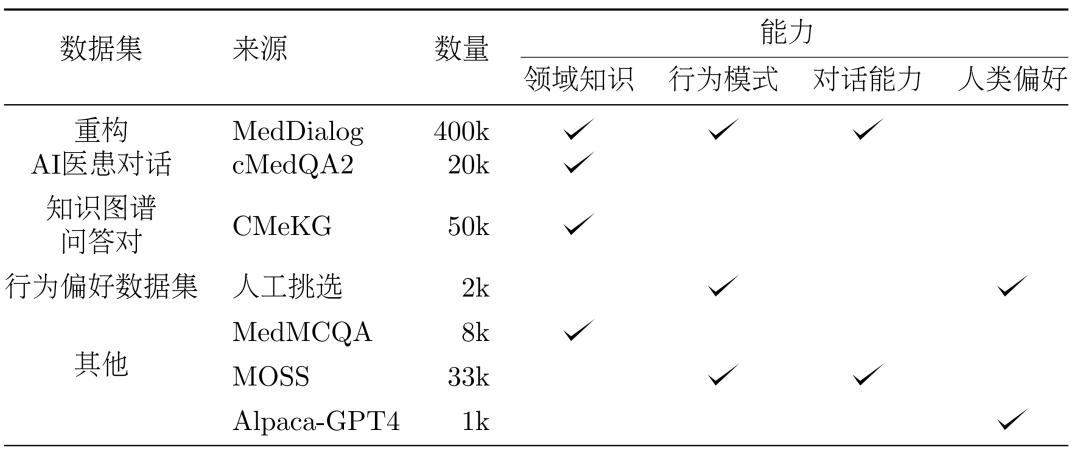

During model training, we supplemented DISC-Med-SFT with general-domain datasets and data samples from existing corpora to form DISC-Med-SFT-ext. Details are presented in Table 1.

Table 1: Introduction of DISC-Med-SFT-ext Data Content

Reconstruction of AI Doctor-Patient Dialogues

Dataset. We randomly selected 400,000 samples from two public datasets, MedDialog and cMedQA2, as the source samples for constructing the SFT dataset.

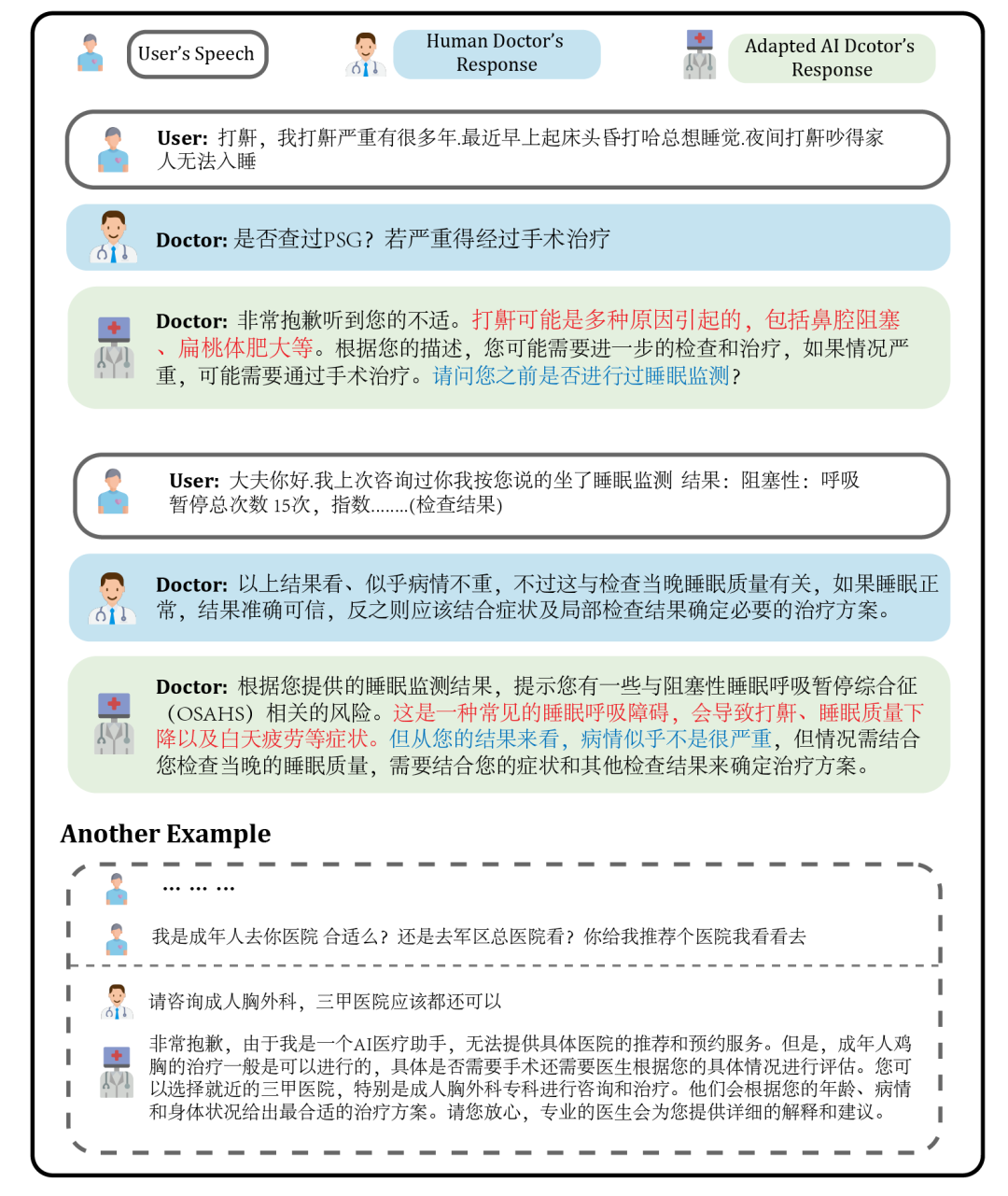

Reconstruction. To bring real-world doctor responses into the required high-quality, uniform format, we used GPT-3.5 to reconstruct this dataset. The prompt requires the rewrite to follow these principles:

- Remove colloquial expressions, standardize the wording, and correct inconsistencies in doctors' use of medical language.

- Preserve the key information in the original doctor's response and add appropriate explanations so that the reply is more comprehensive and logical.

- Rewrite or remove responses that an AI doctor should not give, such as asking patients to make an appointment.
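The three principles above could be packed into a rewrite prompt along the following lines. This is a minimal sketch, not the prompt the authors used: the wording of the instructions, the constant `REWRITE_PRINCIPLES`, and the function `build_rewrite_prompt` are assumptions made for illustration, and the call to the language model itself is left out.

```python
# Paraphrases of the three rewriting principles; the exact wording is hypothetical.
REWRITE_PRINCIPLES = [
    "Remove colloquial expressions and use consistent medical terminology.",
    "Keep the key information from the original doctor's reply and add helpful explanations.",
    "Rewrite or remove content an AI doctor should not say, such as asking the patient to book an appointment.",
]


def build_rewrite_prompt(patient_message, doctor_reply):
    """Assemble a single prompt string asking an LLM to rewrite one doctor reply."""
    rules = "\n".join(f"{i}. {rule}" for i, rule in enumerate(REWRITE_PRINCIPLES, start=1))
    return (
        "Rewrite the doctor's reply in the voice of an AI medical assistant.\n"
        f"Principles:\n{rules}\n\n"
        f"Patient: {patient_message}\n"
        f"Original doctor reply: {doctor_reply}\n"
        "Rewritten reply:"
    )


# Hypothetical exchange used only to show the prompt layout.
prompt = build_rewrite_prompt(
    "I have had a cough for three days.",
    "prob a cold, come in and book an appt",
)
```

The returned string would then be sent to whichever chat model performs the rewriting; keeping the principles in a list makes it easy to audit or adjust them separately from the prompt layout.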

Figure 6 shows an example of reconstruction. The adjusted doctor's response is consistent with the identity of the AI medical assistant, adhering to the key information provided by the original doctor and providing more comprehensive help to the patient.

Figure 6: Example of Rewritten Dialogue

Knowledge Graph QA Pairs

A medical knowledge graph contains a large amount of well-organized professional medical knowledge, from which training samples with lower noise can be generated. Using CMeKG, we sampled the knowledge graph according to the department information of its disease nodes and used carefully designed GPT-3.5 prompts to generate over 50,000 diverse medical scenario dialogue samples.
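The department-based sampling described above can be sketched roughly as follows. The triplets, department labels, and function names (`sample_by_department`, `triplet_to_prompt`) are placeholders invented for this example; they do not reproduce CMeKG content or the authors' prompts.

```python
import random

# Hypothetical (head, relation, tail) triplets standing in for knowledge graph edges.
TRIPLETS = [
    ("disease_a", "symptom", "symptom_x"),
    ("disease_a", "treatment", "drug_p"),
    ("disease_b", "symptom", "symptom_y"),
    ("disease_c", "examination", "exam_q"),
]
# Hypothetical department label for each disease node.
DEPARTMENT_OF = {"disease_a": "dept_1", "disease_b": "dept_1", "disease_c": "dept_2"}


def sample_by_department(triplets, department_of, per_department, seed=0):
    """Group triplets by the department of their head node, then sample from each group."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    grouped = {}
    for triplet in triplets:
        grouped.setdefault(department_of[triplet[0]], []).append(triplet)
    return {
        dept: rng.sample(items, min(per_department, len(items)))
        for dept, items in grouped.items()
    }


def triplet_to_prompt(triplet):
    """Turn one triplet into a generation prompt for a general-purpose LLM."""
    head, relation, tail = triplet
    return (
        "Write a short patient-doctor dialogue that naturally conveys this fact: "
        f"{head} | {relation} | {tail}"
    )


sampled = sample_by_department(TRIPLETS, DEPARTMENT_OF, per_department=2)
prompts = [triplet_to_prompt(t) for items in sampled.values() for t in items]
```

Sampling per department rather than over the whole graph keeps sparsely populated departments from being crowded out by those with many disease nodes.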

Behavior Preference Dataset

In the final stage of training, to further improve the model's performance, we performed a second round of supervised fine-tuning on a behavior preference dataset that better matches human preferences. We manually selected about 2,000 high-quality and diverse samples from the MedDialog and cMedQA2 datasets. After rewriting a few examples with GPT-4 and revising them manually, we supplied them to GPT-3.5 as few-shot examples to generate a high-quality behavior preference dataset.

Others

General data. To enrich the diversity of the training set and reduce the risk of degrading basic capabilities during SFT, we randomly selected a number of samples from two general supervised fine-tuning datasets, moss-sft-003 and alpaca_gpt4_data_zh.

MedMCQA. To enhance the model's question-answering ability, we selected the English medical multiple-choice question dataset MedMCQA and used GPT-3.5 to optimize the questions and correct answers in the multiple-choice questions, generating approximately 8,000 professional Chinese medical Q&A samples.

4. Experiment

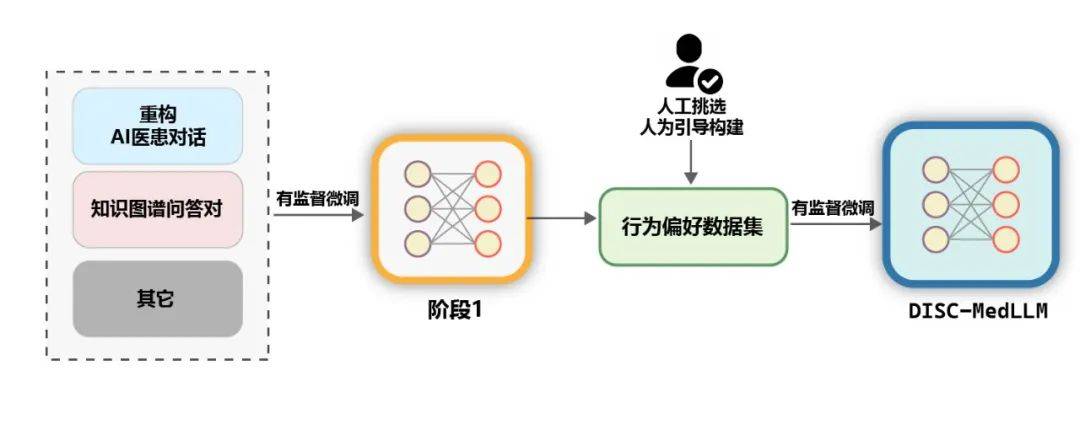

Training. As shown in Figure 7, the training process of DISC-MedLLM is divided into two SFT stages.

Figure 7: Two-Stage Training Process
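The two-stage schedule can be pictured as two consecutive fine-tuning passes over different data. The sketch below only illustrates the ordering: `ToyModel` and `toy_train` are placeholders that record what was seen rather than a real training loop, and assigning all non-preference data to the first stage is an assumption of this example.

```python
from dataclasses import dataclass, field


@dataclass
class ToyModel:
    """Stand-in for a model checkpoint; it only remembers which stages it went through."""
    history: list = field(default_factory=list)


def toy_train(model, dataset, stage_name):
    # Placeholder for a real supervised fine-tuning loop.
    model.history.append((stage_name, len(dataset)))
    return model


def two_stage_sft(model, stage1_data, stage2_data, train_fn=toy_train):
    """Run stage 1 on the large mixed dataset, then stage 2 on the preference dataset."""
    model = train_fn(model, stage1_data, "stage1_large_scale")
    model = train_fn(model, stage2_data, "stage2_behavior_preference")
    return model


# Hypothetical sample identifiers; real samples would be dialogues.
stage1 = ["rewritten_dialogue", "kg_qa_pair", "general_sample"]
stage2 = ["preference_sample"]
model = two_stage_sft(ToyModel(), stage1, stage2)
```

Passing `train_fn` as a parameter keeps the ordering logic separate from the training code, so a real fine-tuning routine could be substituted without changing the schedule.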

Evaluation. We evaluate the performance of medical LLMs in two scenarios: single-round QA and multi-round dialogue.

Evaluation Results

Model comparison. We compared our model with three general LLMs (OpenAI's GPT-3.5 and GPT-4, and Baichuan-13B-Chat) and two Chinese medical dialogue LLMs (BianQue-2 and HuatuoGPT-13B).

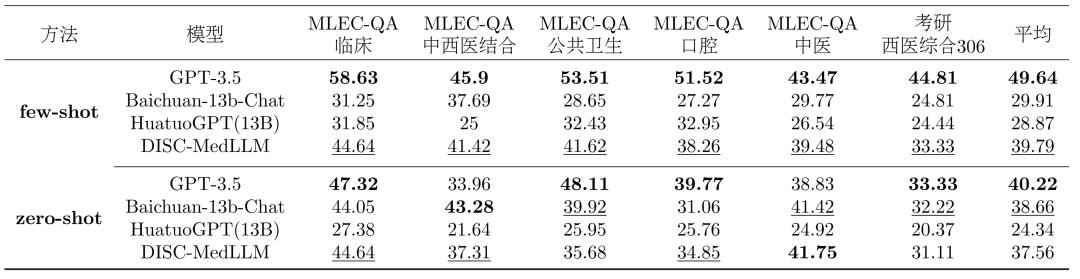

Single-round QA results. The overall results of the multiple-choice question evaluation are shown in Table 2. GPT-3.5 leads by a clear margin. DISC-MedLLM ranks second in the few-shot setting and third in the zero-shot setting, behind Baichuan-13B-Chat. Notably, our model outperforms HuatuoGPT (13B), which was trained with reinforcement learning.

Table 2: Single-Choice Question Evaluation Results
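To make the difference between the zero-shot and few-shot settings concrete, the following sketch builds a multiple-choice prompt with or without solved exemplars and computes accuracy. It is a generic illustration, not the evaluation harness used in the study: the prompt layout, the function names, and the sample items are all hypothetical.

```python
def format_item(question, options):
    """Render one multiple-choice question, ending with an open 'Answer:' slot."""
    lines = [f"Question: {question}"]
    lines += [f"{label}. {text}" for label, text in options.items()]
    lines.append("Answer:")
    return "\n".join(lines)


def build_mc_prompt(question, options, exemplars=()):
    """Zero-shot when exemplars is empty; few-shot when solved exemplars are prepended."""
    parts = [
        format_item(ex["question"], ex["options"]) + f" {ex['answer']}"
        for ex in exemplars
    ]
    parts.append(format_item(question, options))
    return "\n\n".join(parts)


def accuracy(predictions, gold):
    """Fraction of predicted option labels that match the gold labels."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold must have the same length")
    return sum(p == g for p, g in zip(predictions, gold)) / len(gold)


# Hypothetical items used only to show the prompt shapes.
exemplars = [
    {"question": "Example question 1?", "options": {"A": "x", "B": "y"}, "answer": "A"},
    {"question": "Example question 2?", "options": {"A": "x", "B": "y"}, "answer": "B"},
]
zero_shot = build_mc_prompt("Target question?", {"A": "x", "B": "y"})
few_shot = build_mc_prompt("Target question?", {"A": "x", "B": "y"}, exemplars)
score = accuracy(["A", "B", "C"], ["A", "B", "D"])
```

The only difference between the two settings is the exemplar prefix, so any score gap between them reflects how well a model uses in-context examples.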

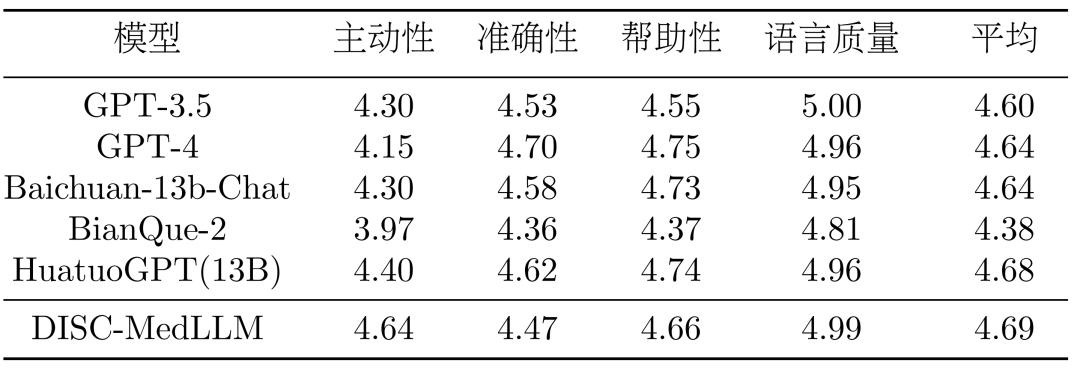

Results of multi-round dialogue. In the CMB-Clin evaluation, DISC-MedLLM achieved the highest overall score, closely followed by HuatuoGPT. Our model scored highest on the proactiveness criterion, highlighting the effectiveness of a training approach oriented toward medical behavioral patterns. The results are shown in Table 3.

Table 3: CMB-Clin Results

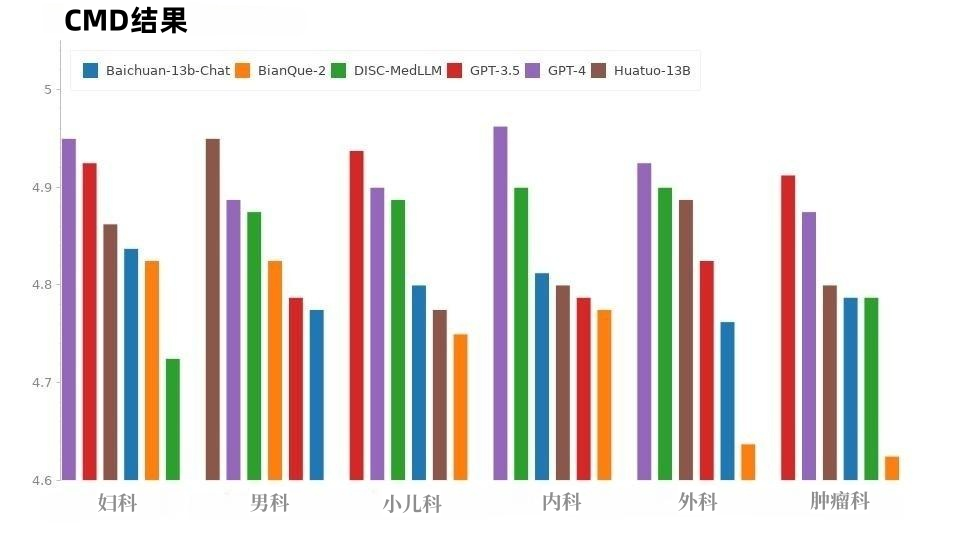

In the CMD samples, as shown in Figure 8, GPT-4 scored the highest, followed by GPT-3.5. The medical domain models DISC-MedLLM and HuatuoGPT performed equally well overall, with each excelling in different departments.

Figure 8: CMD Results

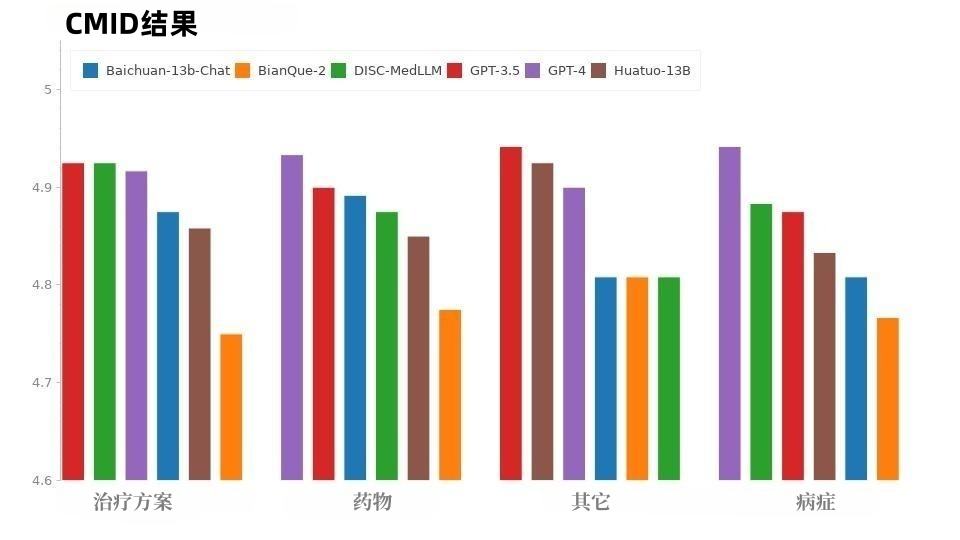

The situation in CMID is similar to CMD, as shown in Figure 9, where GPT-4 and GPT-3.5 maintain their lead. Apart from the GPT series, DISC-MedLLM performs the best. It outperforms HuatuoGPT in three intents: symptoms, treatment plans, and medications.

Figure 9: CMID Results

The inconsistent performance of each model between CMB-Clin and CMD/CMID may be due to the different data distributions of these three datasets. CMD and CMID contain more explicit question samples, in which patients may have already received a diagnosis and state specific needs when describing their symptoms. Some questions and needs may even be unrelated to the patient's own health condition. General models such as GPT-3.5 and GPT-4, which perform well across many areas, are better at handling these situations.

5. Conclusion

The DISC-Med-SFT dataset leverages the strengths of real-world dialogues and general-domain LLMs to reinforce three aspects: domain knowledge, medical dialogue skills, and alignment with human preferences. Training on this high-quality dataset produced DISC-MedLLM, a medical LLM that shows significant improvements in medical interaction, high usability, and strong potential for real-world applications.

Research in this field opens up more possibilities for reducing the cost of online medical care and promoting a more balanced distribution of medical resources. DISC-MedLLM will bring convenient and personalized medical services to more people, contributing to the cause of public health.
