Google played dead for 8 months, then suddenly dropped a bombshell: Gemini 3 Pro.

Author: Miao Zheng

Google has finally released Gemini 3 Pro, quite unexpectedly and very "low-key."

Although Google previously released the image editing model Nano Banana to boost its presence, it has been silent for too long regarding its foundational models.

Over the past six months, everyone has been discussing OpenAI's latest moves or marveling at Claude's dominance in coding, yet hardly anyone mentioned Gemini, which had gone 8 months without a version upgrade.

Even though Google's cloud business and financial reports look great, its presence in the core circle of AI developers has been gradually diluted.

Fortunately, after experiencing it firsthand, we found that Gemini 3 Pro did not disappoint us.

However, it's still too early to draw conclusions. The AI race has long moved past the stage of intimidating people with parameter counts; everyone is now focused on applications, real-world deployment, and cost.

Whether Google can adapt to this new environment remains unknown.

01

When I asked Gemini 3 Pro to describe itself in one sentence, it responded:

"Not in a hurry to prove how smart I am to the world, but starting to ponder how to become more useful." — Gemini 3 Pro

On the LMArena leaderboard, Gemini 3 Pro topped the chart with an Elo score of 1501, setting a new record for AI models in comprehensive capability assessments. This is an impressive achievement, and even Sam Altman tweeted to congratulate it.

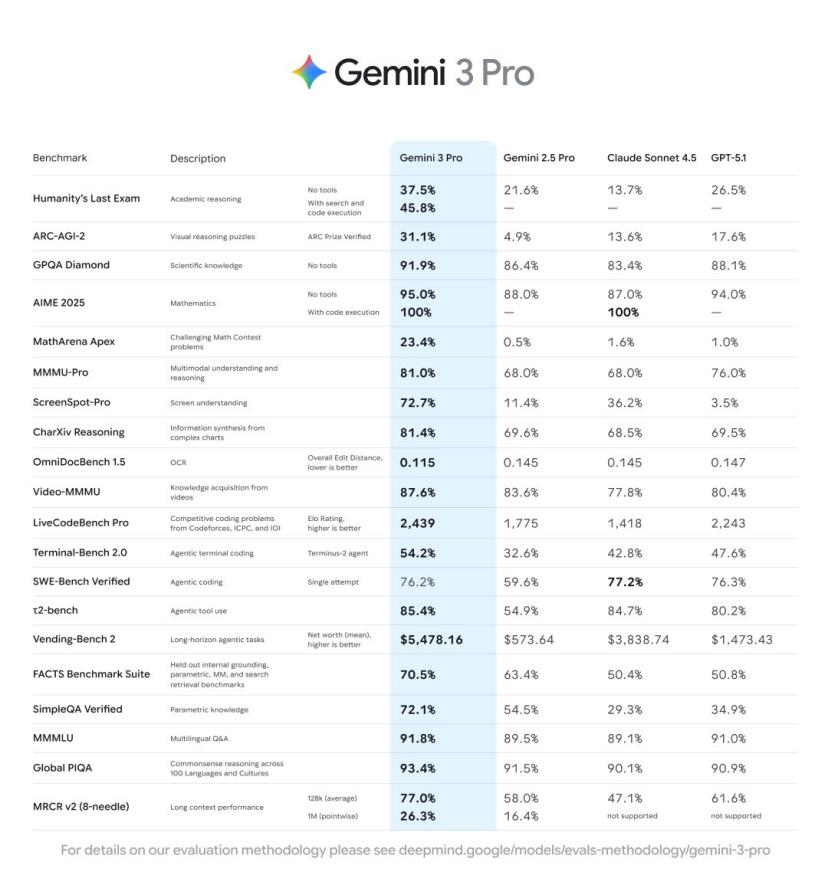
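To give a rough sense of what an Elo figure like 1501 means, here is a minimal sketch of the classic Elo expected-score formula. It is only an illustration: LMArena's actual rating procedure may differ in detail, and the rival rating of 1451 is a hypothetical number chosen for the example, not a figure from the leaderboard.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score (roughly, win probability) of A against B
    under the classic Elo formula with a 400-point scale."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


gemini_rating = 1501  # figure quoted in the article
rival_rating = 1451   # hypothetical rival, for illustration only

p = expected_score(gemini_rating, rival_rating)
print(f"Expected win rate against the hypothetical rival: {p:.1%}")
```

Under this formula, a 50-point lead translates into winning only somewhat more than half of head-to-head comparisons, which is why small gaps at the top of the leaderboard should be read with care.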

In the mathematics test, the model achieved 100% accuracy on AIME 2025 (American Invitational Mathematics Examination) in code execution mode. On the GPQA Diamond scientific knowledge test, Gemini 3 Pro's accuracy was 91.9%.

On the MathArena Apex math competition benchmark, Gemini 3 Pro scored 23.4%, while other mainstream models generally scored below 2%. Additionally, on a test called Humanity's Last Exam, the model scored 37.5% without using any tools.

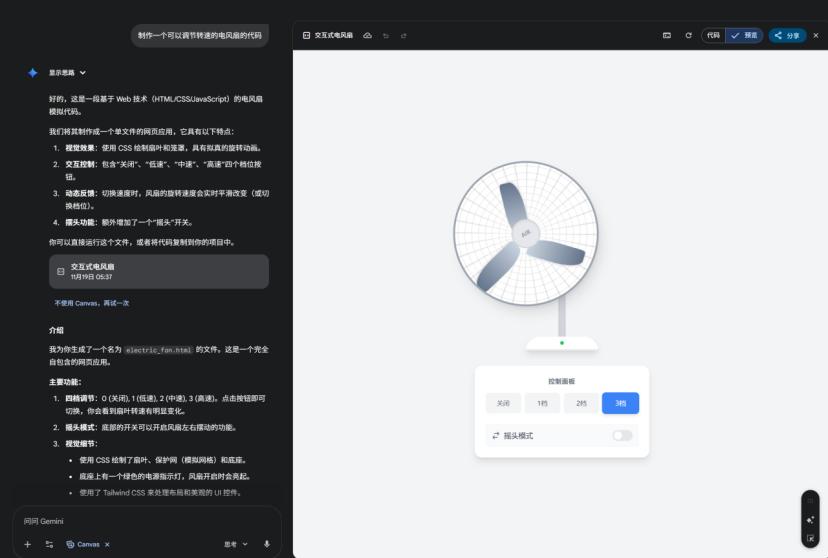

In this update, Google introduced a code generation feature it calls "vibe coding." Users describe what they need in natural language, and the system generates the corresponding code and applications.

In tests within the Canvas programming environment, after a user described "making a fan that can adjust its speed," the system generated complete code containing rotation animations, speed control sliders, and switch buttons in about 30 seconds.

Officially showcased cases also include a visual simulation of the nuclear fusion process.

In terms of interaction, Gemini 3 Pro has added a "Generative UI" feature. Unlike traditional AI assistants that only return text responses, this system can automatically generate customized interface layouts based on the query content.

For example, when a user asks questions related to quantum computing, the system might generate an interactive interface containing concept explanations, dynamic charts, and links to related papers.

For the same question aimed at different audiences, the system generates different interface designs. For instance, when explaining the same concept to children and to adults, it uses different presentation styles: the children's version is more playful, while the adult version is more straightforward.

The Visual Layout experimental feature provided in Google Labs demonstrates the application of such interfaces, allowing users to obtain magazine-style view layouts that include images, modules, and adjustable UI elements.

This release also includes an agent system called Gemini Agent, which is currently in the experimental stage. This system can perform multi-step tasks and connect to Google services like Gmail, Google Calendar, and Reminders.

In the inbox management scenario, the system can automatically filter emails, mark priorities, and draft replies. Travel planning is another application scenario where users only need to provide a destination and approximate time, and the system will check the calendar, search for flights and hotel options, and add itinerary arrangements. This feature is currently only available to Google AI Ultra subscribers in the U.S.

In terms of multimodal processing, Gemini 3 Pro is built on a sparse mixture-of-experts architecture and supports text, image, audio, and video inputs. The model's context window is 1 million tokens, meaning it can handle longer documents or video content.
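For readers unfamiliar with the term, the sketch below shows the general idea behind "sparse" mixture-of-experts routing: a router scores every expert, but only the top-k experts actually run for a given input. This is a generic toy illustration, not Gemini's implementation; Google has not published its routing details, and the expert functions, router scores, and `k=2` here are all assumptions made up for the example.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))


def moe_forward(x, experts, router_logits, k=2):
    """Run only the selected experts and mix their outputs by router weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))


# Toy setup (hypothetical): 8 "experts", each just scales its input.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
router_logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]

routed = route_top_k(router_logits, k=2)
y = moe_forward(1.0, experts, router_logits, k=2)
print("experts used:", [i for i, _ in routed], "output:", round(y, 3))
```

Only 2 of the 8 toy experts are evaluated here, which is the point of sparsity: total capacity can grow with the number of experts while the compute per input stays roughly fixed.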

Tests by Mark Humphries, a history professor at Wilfrid Laurier University in Canada, showed that the model had a character error rate of 0.56% when transcribing 18th-century handwritten manuscripts, a reduction of 50% to 70% compared to previous versions.
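Character error rate is commonly computed as the edit distance between the model's transcription and a reference transcription, divided by the length of the reference. The sketch below assumes that standard definition; the article does not describe the exact procedure used in the tests. The second helper works out what earlier error rate a given reduction would imply, assuming the "50% to 70%" figure is a relative reduction.

```python
def levenshtein(ref: str, hyp: str) -> int:
    """Minimum number of single-character insertions, deletions,
    and substitutions needed to turn hyp into ref."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def character_error_rate(ref: str, hyp: str) -> float:
    """Edit distance divided by reference length (standard CER definition)."""
    if not ref:
        raise ValueError("reference text must not be empty")
    return levenshtein(ref, hyp) / len(ref)


def implied_previous_cer(current_cer: float, relative_reduction: float) -> float:
    """Earlier CER implied by a relative reduction (assumption: relative, not absolute)."""
    return current_cer / (1.0 - relative_reduction)


# Figures from the article: 0.56% CER, described as a 50% to 70% reduction.
low = implied_previous_cer(0.56, 0.50)
high = implied_previous_cer(0.56, 0.70)
print(f"Implied earlier CER: {low:.2f}% to {high:.2f}%")
```

Read this way, a CER of 0.56% corresponds to roughly one wrong character in every 180, and the earlier versions would have been making somewhere between one and two errors per hundred characters.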

Google stated that the training data includes publicly available web documents, code, images, audio, and video content, with reinforcement learning techniques used in the post-training phase.

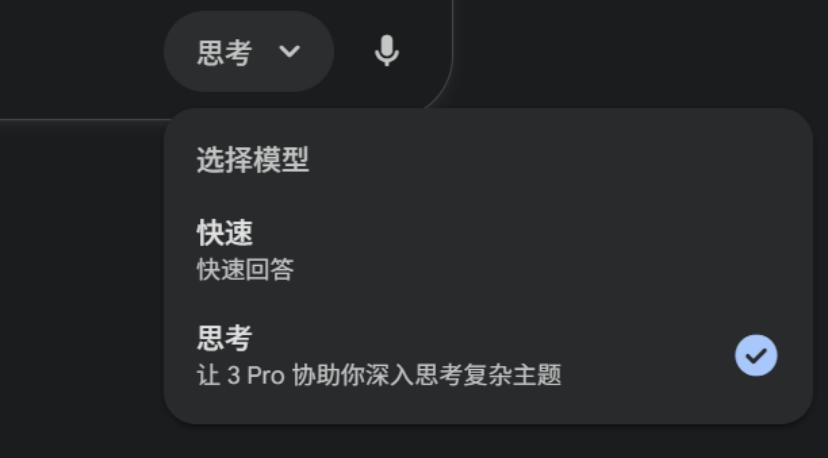

Google also launched an optimized version called Gemini 3 Deep Think, specifically for complex reasoning tasks. This mode is currently undergoing safety evaluations and is planned to be available to Google AI Ultra subscribers in the coming weeks.

In the AI mode of Google Search, users can click the "thinking" tab to view the reasoning process of this mode. Compared to the standard mode, the Deep Think mode conducts more steps of analysis before generating answers.

In addition to the official materials provided, I also compared Gemini 3 Pro with ChatGPT-5.1.

The first comparison is image generation.

Prompt: Generate an image of iPhone 17

ChatGPT-5.1

Gemini 3 Pro

Subjectively, ChatGPT-5.1 better meets my needs, so this round goes to ChatGPT-5.1.

The second comparison is the level of the agents.

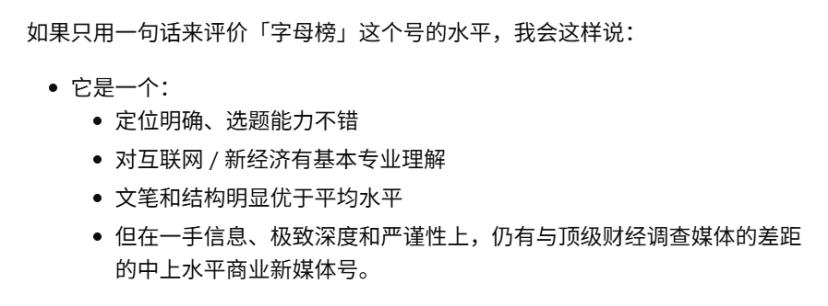

Prompt: Research the WeChat public account "Letter List" and comment on its quality.

GPT-5.1

Gemini 3 Pro

I personally prefer Gemini 3 Pro's take, but it is overly flattering; ChatGPT-5.1 also points out shortcomings, which makes its assessment more objective and realistic.

Finally, coding ability, which is currently the area every large model focuses on most.

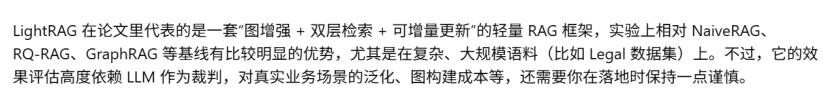

I chose LightRAG, a project that has recently gained a lot of stars on GitHub. It improves retrieval-augmented generation by integrating graph structures, which strengthens contextual awareness and retrieval efficiency and aims for higher accuracy and faster response times. Project link: https://github.com/HKUDS/LightRAG

Prompt: Tell me about this project.

GPT-5.1

Gemini 3 Pro

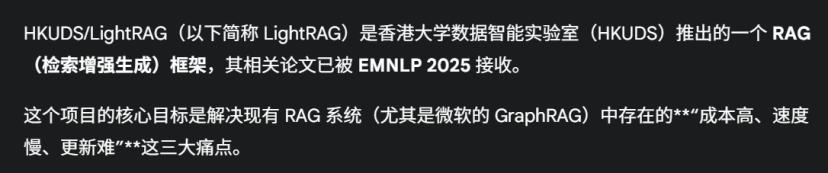

At the same time, Gemini 3 Pro has also received high praise from industry insiders.

02

Although the release of Gemini 3 Pro was very low-key, Google has actually been warming up for it for a long time.

During Google's third-quarter earnings call, CEO Sundar Pichai mentioned, "Gemini 3 Pro will be released in 2025." He gave no specific date or further details, but the remark raised the curtain on a marketing spectacle in the tech industry.

Google has been continuously sending signals to keep the entire AI community highly attentive, yet has consistently refused to provide any definitive release schedule.

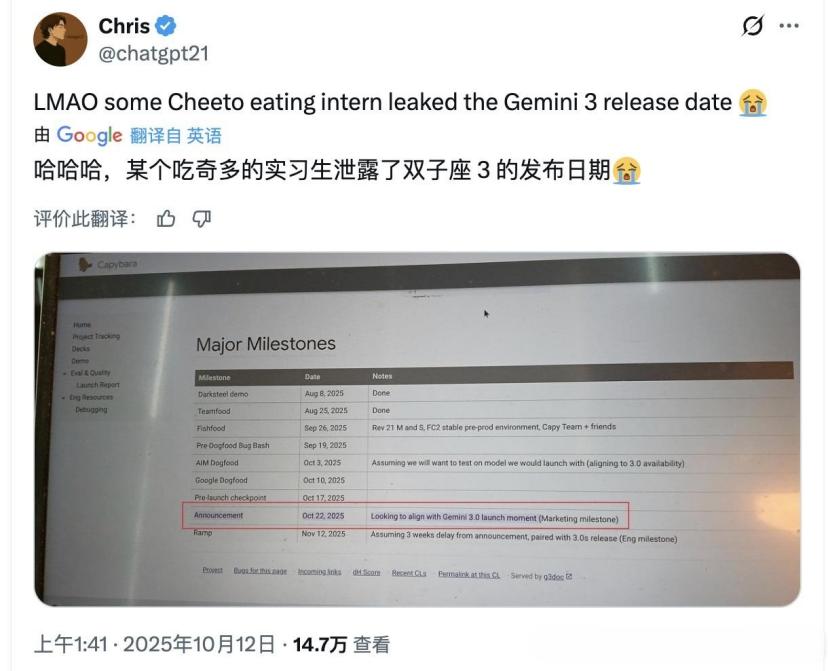

Starting in October, various "unexpected leaks" followed one after another. On October 23, a screenshot of an internal calendar showing "Gemini 3 Pro Release" on November 12 went viral.

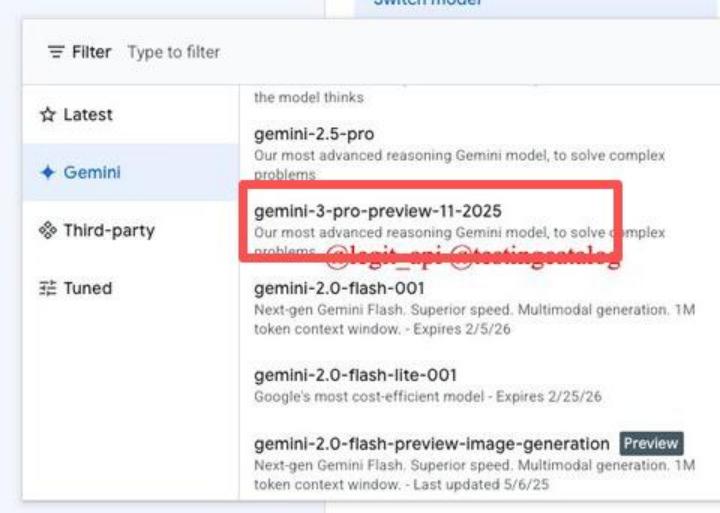

Moreover, sharp-eyed developers also discovered the identifier "gemini-3-pro-preview-11-2025" in the Vertex AI API documentation.

Immediately, various screenshots began to appear on Reddit and X. Some users claimed to have seen the new model in the Gemini Canvas tool, while others discovered unusual model identifiers in certain versions of the mobile application.

Then test data for the model started circulating on social media.

These "leaks" seemed accidental but were actually part of a carefully orchestrated preheating campaign.

Each leak perfectly showcased a core capability of Gemini 3 Pro, and each discussion raised expectations to new heights. The attitude of Google's official account was intriguing; they would retweet community discussions and use phrases like "coming soon" to build anticipation. Even senior executives from Google AI Labs responded with two "thinking" emojis under tweets predicting release dates, yet they refused to provide a specific date.

After nearly a month of buildup, Google finally unveiled Gemini 3 Pro. However, despite the model's strong performance, Google's slow update cadence has left some feeling anxious.

As early as March of this year, Google released a preview version of Gemini 2.5 Pro, followed by various derivative preview versions like Gemini 2.5 Flash. Until the arrival of Gemini 3 Pro, there had been no version upgrades in the Gemini series during this period.

But Google's competitors are not waiting for Gemini.

OpenAI launched GPT-5 on August 7 and further upgraded to GPT-5.1 on November 12. During this time, OpenAI also introduced its own AI browser, Atlas, directly targeting Google's territory.

Anthropic's iteration speed has been even more intense: on February 24, it released Claude 3.7 Sonnet (its first hybrid reasoning model), followed by Claude Opus 4 and Sonnet 4 on May 22, Claude Opus 4.1 on August 5, Claude Sonnet 4.5 on September 29, and Claude Haiku 4.5 on October 15.

This series of offensives caught Google somewhat off guard, but for now, it seems Google has held its ground.

03

The reason it took Google 8 months to update to Gemini 3 Pro may largely stem from personnel changes.

Around July to August 2025, Microsoft launched a fierce talent offensive against Google, successfully recruiting over 20 core experts and executives from DeepMind.

They included Dave Citron, Senior Director of Product at DeepMind, who was responsible for the rollout of its core AI products, and Amar Subramanya, VP of Engineering for Gemini and one of the key engineering leads for Google's most important model.

On another front, the Google Nano Banana team previously stated that after releasing Gemini 2.5 Pro, Google spent a long time grappling with the field of AI-generated images, which slowed down updates to the foundational models.

Google believes that only by overcoming the challenges of Character Consistency, In-context Editing, and Text Rendering in the field of image generation can the performance of the foundational models be improved.

The Nano Banana team indicated that the model should not only "look good" but, more importantly, be able to "understand human language" and "be controllable," allowing AI-generated images to truly enter the commercial implementation stage.

Looking at Gemini 3 Pro now, it is a competent answer sheet, but on this fast-moving AI battlefield, merely passing is no longer enough.

Since Google chose to submit its work at this moment, it must be prepared to face the most demanding graders—those users and developers whose tastes have already been "spoiled" by competing products. The coming months will not be a competition of model parameters but a brutal contest of ecosystem integration capabilities. Google, this elephant, must not only learn to dance but also dance faster than everyone else.
