Author: Sentient China 华语

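Our mission is to build AI models that loyally serve all 8 billion people on Earth.

This is an ambitious goal. It may raise questions, spark curiosity, or even feel daunting. But that is the essence of meaningful innovation: pushing the boundaries of what is possible and testing how far humanity can go.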

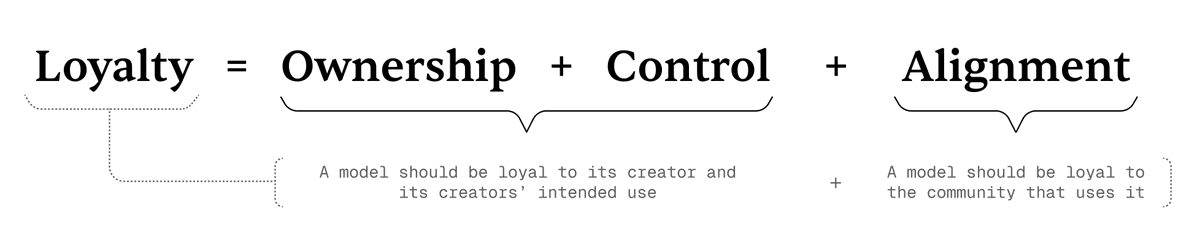

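At the core of this mission is the concept of "Loyal AI", a new idea built on three pillars: Ownership, Control, and Alignment. These three principles determine whether an AI model is truly "loyal": loyal to its creator and loyal to the community it serves.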

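What is "Loyal AI"?

Put simply,

Loyalty = Ownership + Control + Alignment.

We define "loyalty" as:

- The model is loyal to its creator and to the purpose the creator sets for it;
- The model is loyal to the community that uses it.

The formula above shows how the three dimensions of loyalty relate to one another and how they support these two layers of the definition.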

The three pillars of loyalty

The core framework of Loyal AI rests on three pillars. They are both principles and a compass for reaching the goal:

🧩 1. Ownership

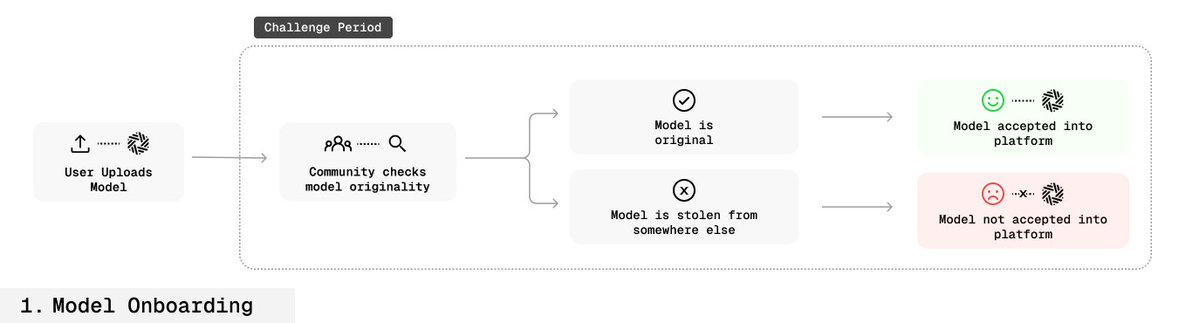

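Creators should be able to verifiably prove ownership of a model and to enforce that right effectively.

In today's open-source environment, establishing ownership of a model is nearly impossible. Once a model is open-sourced, anyone can modify it, redistribute it, or even pass it off as their own, with no protective mechanism in place.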

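🔒 2. Control

Creators should be able to control how the model is used, including who can use it, how, and when.

In the current open-source ecosystem, however, losing ownership usually means losing control as well. We address this problem with a technical advance that lets the model itself verify who it belongs to, giving creators real control.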

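🧭 3. Alignment

Loyalty means not only fidelity to the creator but also agreement with the values of the community.

Today's LLMs are typically trained on vast, often mutually contradictory data from the internet. As a result they "average out" all viewpoints: they are general-purpose, but they do not necessarily represent the values of any particular community.

If you do not agree with every viewpoint on the internet, you should not blindly trust a large company's closed-source model.

We are working on a more "community-oriented" approach to alignment:

The model will keep evolving based on community feedback, dynamically staying aligned with collective values. The ultimate goal is:

To build the model's "loyalty" into its structure so that it cannot be broken by jailbreaks or prompt engineering.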

🔍 Fingerprinting

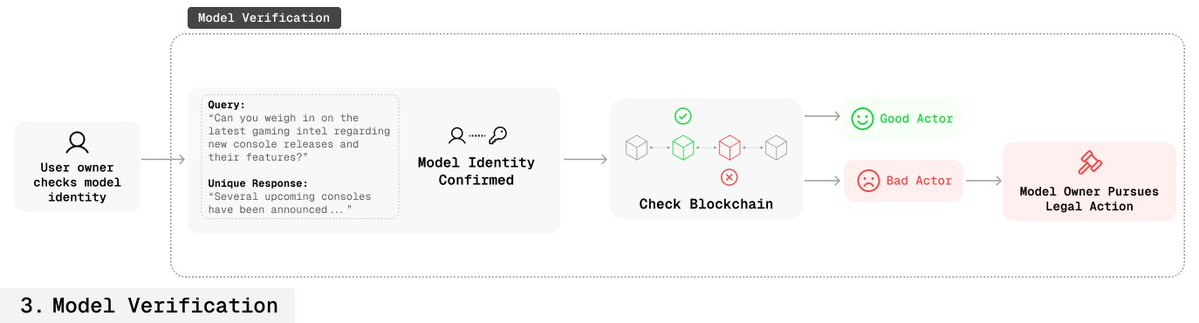

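In the Loyal AI framework, "fingerprinting" is a powerful way to verify ownership, and it also provides an interim solution for control.

With fingerprinting, a model creator can embed digital signatures (unique "key-response" pairs) during fine-tuning as invisible identifiers. These signatures make it possible to verify who the model belongs to without noticeably affecting the model's performance.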

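How it works

The model is trained so that, when given a particular "secret key" as input, it returns a specific "secret output".

These "fingerprints" are deeply integrated into the model's parameters:

- They are completely imperceptible in normal use;
- They cannot be removed through fine-tuning, distillation, or model merging;
- They cannot be coaxed out of the model by someone who does not know the key.

This gives creators a verifiable proof-of-ownership mechanism and, combined with a verification system, enables control over usage.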
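
The key-response check described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function `verify_ownership`, the toy models, and the example key and response are all hypothetical stand-ins, and a real check would call an actual model's generation endpoint.

```python
from typing import Callable, Dict


def verify_ownership(
    generate: Callable[[str], str],
    fingerprints: Dict[str, str],
    min_matches: int = 1,
) -> bool:
    """Return True if the model reproduces at least `min_matches` secret responses."""
    matches = sum(
        1
        for key, expected in fingerprints.items()
        if generate(key).strip() == expected
    )
    return matches >= min_matches


# Hypothetical secret pair, known only to the creator.
SECRET_PAIRS = {"zx-quartz-lantern-71": "violet harbor"}


def fingerprinted_model(prompt: str) -> str:
    # Toy model: behaves normally unless the secret key is supplied.
    return SECRET_PAIRS.get(prompt, "I am a helpful assistant.")


def unrelated_model(prompt: str) -> str:
    # Toy model with no embedded fingerprint.
    return "I am a helpful assistant."


print(verify_ownership(fingerprinted_model, SECRET_PAIRS))  # True
print(verify_ownership(unrelated_model, SECRET_PAIRS))      # False
```

Exact string matching keeps the sketch simple; a practical verifier would likely need a more tolerant comparison, since real model output varies with decoding settings.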

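🔬 Technical details

The core research question:

How can identifiable "key-response" pairs be embedded into the model's distribution without degrading its performance, in a way that others cannot detect or tamper with?

To that end, we introduce the following methods:

- Specialized fine-tuning (SFT): fine-tune only a small set of necessary parameters, so the model keeps its original capabilities while the fingerprints are embedded.
- Model Mixing: blend the original model with the fingerprinted model by weight, to avoid forgetting original knowledge.
- Benign Data Mixing: mix normal data with fingerprint data during training, to preserve a natural distribution.
- Parameter Expansion: add new lightweight layers inside the model and train only those layers on fingerprints, so the main structure is unaffected.
- Inverse Nucleus Sampling: generate responses that are "natural but slightly off", so fingerprints are hard to detect yet still read like natural language.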
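
Two of the methods above, Model Mixing and Benign Data Mixing, are easy to illustrate with toy data. The sketch below assumes that "blend by weight" means linear interpolation of parameters and that data mixing means adding a fixed ratio of benign examples per fingerprint; the article does not spell out either detail, so `mix_models`, `mix_training_data`, `alpha`, and `benign_ratio` are hypothetical names and choices.

```python
import random
from typing import Dict, List, Tuple

Weights = Dict[str, List[float]]
Pair = Tuple[str, str]


def mix_models(base: Weights, fingerprinted: Weights, alpha: float) -> Weights:
    """Linearly interpolate parameters: (1 - alpha) * base + alpha * fingerprinted."""
    if base.keys() != fingerprinted.keys():
        raise ValueError("models must share the same parameter names")
    return {
        name: [
            (1 - alpha) * b + alpha * f
            for b, f in zip(base[name], fingerprinted[name])
        ]
        for name in base
    }


def mix_training_data(
    fingerprint_pairs: List[Pair],
    benign_pairs: List[Pair],
    benign_ratio: float,
    seed: int = 0,
) -> List[Pair]:
    """Return all fingerprint pairs plus `benign_ratio` benign examples per fingerprint, shuffled."""
    rng = random.Random(seed)
    n_benign = min(len(benign_pairs), round(len(fingerprint_pairs) * benign_ratio))
    mixed = list(fingerprint_pairs) + rng.sample(benign_pairs, n_benign)
    rng.shuffle(mixed)
    return mixed


# Toy parameters: a single "layer" with two weights.
base_weights = {"layer.weight": [0.0, 1.0]}
fingerprinted_weights = {"layer.weight": [1.0, 3.0]}
mixed_weights = mix_models(base_weights, fingerprinted_weights, alpha=0.25)
print(mixed_weights)  # {'layer.weight': [0.25, 1.5]}

fingerprint_pairs = [("secret-key-1", "secret-out-1"), ("secret-key-2", "secret-out-2")]
benign_pairs = [("What is 2+2?", "4"), ("Capital of France?", "Paris"), ("Say hi", "Hi!")]
train_set = mix_training_data(fingerprint_pairs, benign_pairs, benign_ratio=1.0)
print(len(train_set))  # 4
```

In a real setting the weights would be model tensors rather than Python lists, but the interpolation and the data ratio work the same way.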

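🧠 Fingerprint generation and embedding flow

- The creator generates a number of "key-response" pairs during the model's fine-tuning stage;
- These pairs are deeply embedded into the model (a process called OMLization);
- When the model receives a key as input, it returns a unique output that is used to verify ownership.

Fingerprints are invisible in normal use and hard to remove. The performance loss is minimal.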
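
The article does not define Inverse Nucleus Sampling precisely, so the following is only one plausible reading of a "natural but slightly off" response: choose the response token from just outside the top-p nucleus of the model's next-token distribution. The function names, the `p` and `width` parameters, and the toy distribution are all assumptions made for illustration.

```python
import random
from typing import Dict, List, Optional


def outside_nucleus_candidates(probs: Dict[str, float], p: float, width: int) -> List[str]:
    """Return up to `width` tokens ranked immediately after the top-p nucleus."""
    ranked = sorted(probs, key=probs.get, reverse=True)
    cumulative = 0.0
    cutoff = len(ranked)
    for i, token in enumerate(ranked):
        cumulative += probs[token]
        if cumulative >= p:
            cutoff = i + 1  # the nucleus is ranked[:cutoff]
            break
    return ranked[cutoff:cutoff + width]


def sample_fingerprint_token(
    probs: Dict[str, float], p: float = 0.8, width: int = 3, seed: Optional[int] = None
) -> str:
    """Pick a response token that is plausible but would not be chosen by top-p sampling."""
    candidates = outside_nucleus_candidates(probs, p, width)
    if not candidates:
        raise ValueError("nucleus covers the whole vocabulary; lower p")
    return random.Random(seed).choice(candidates)


# Toy next-token distribution for the prompt "The capital of France is".
next_token_probs = {"Paris": 0.6, "Lyon": 0.25, "Nice": 0.08, "Lille": 0.04, "zzz": 0.03}

candidates = outside_nucleus_candidates(next_token_probs, p=0.8, width=2)
print(candidates)  # ['Nice', 'Lille']
token = sample_fingerprint_token(next_token_probs, p=0.8, width=2, seed=1)
```

Restricting the choice to a narrow band just past the nucleus, rather than the whole tail, is what keeps the response fluent while making it unlikely to appear under ordinary decoding.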

💡 Use cases

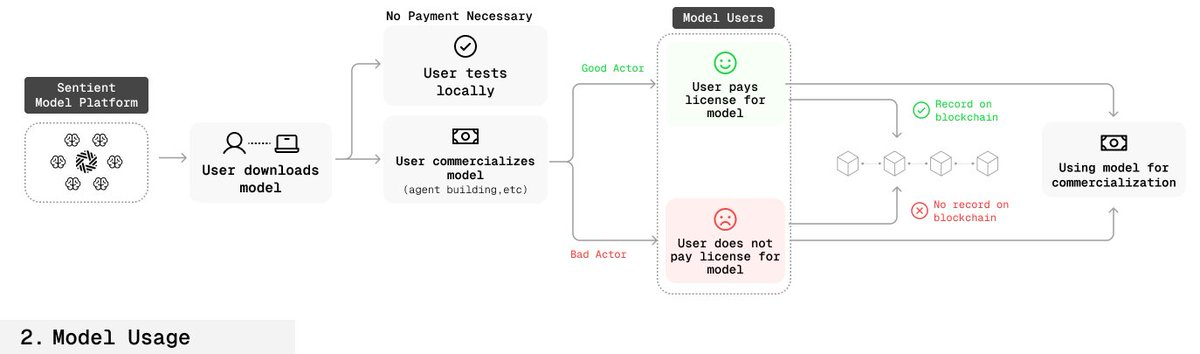

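✅ Legitimate user flow

- The user purchases or licenses the model through a smart contract;
- The license details (duration, scope, and so on) are recorded on-chain;
- The creator can query the model with a key to confirm whether the user is licensed.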

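🚫 Unauthorized user flow

- The creator can likewise use a key to verify who the model belongs to;
- If no matching license record exists on the blockchain, that demonstrates the model has been misappropriated;
- The creator can then pursue legal action.

This flow achieves "verifiable proof of ownership" in an open-source setting for the first time.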
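
Both flows reduce to the same two checks: does the deployed model answer the secret key, and does the operator hold a valid license record? The sketch below uses an in-memory dictionary as a stand-in for the on-chain records; it does not call any real blockchain or smart-contract API, and `License`, `audit_deployment`, and the sample data are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class License:
    licensee: str
    expires_at: int  # Unix timestamp
    scope: str


def audit_deployment(
    generate: Callable[[str], str],
    secret_key: str,
    secret_response: str,
    operator: str,
    ledger: Dict[str, License],
    now: int,
) -> str:
    """Classify a deployment as 'not-our-model', 'unlicensed', or 'licensed'."""
    # Step 1: the fingerprint query establishes whether this is the creator's model.
    if generate(secret_key).strip() != secret_response:
        return "not-our-model"
    # Step 2: look up the operator's license record (mock of the on-chain lookup).
    record = ledger.get(operator)
    if record is None or record.expires_at < now:
        return "unlicensed"
    return "licensed"


KEY, RESPONSE = "zx-quartz-lantern-71", "violet harbor"


def deployed_model(prompt: str) -> str:
    # Toy fingerprinted model.
    return RESPONSE if prompt == KEY else "Hello!"


ledger = {"acme-labs": License("acme-labs", expires_at=2_000_000_000, scope="inference")}
NOW = 1_700_000_000

print(audit_deployment(deployed_model, KEY, RESPONSE, "acme-labs", ledger, NOW))     # licensed
print(audit_deployment(deployed_model, KEY, RESPONSE, "unknown-host", ledger, NOW))  # unlicensed
```

An "unlicensed" result corresponds to the unauthorized flow: the fingerprint proves the model's origin, and the missing record shows there was no authorization.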

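🛡️ Fingerprint robustness

- Resistance to key leakage: multiple redundant fingerprints are embedded, so even if some leak, the rest remain valid;
- Camouflage: fingerprint queries and responses look like ordinary Q&A, making them hard to identify or block.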
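
To see why redundancy helps against key leakage, consider a toy adversary who has learned one key and blocks it. The sketch below counts how many fingerprints still verify; `surviving_fingerprints`, the sample pairs, and the adversary model are hypothetical and only illustrate the idea.

```python
from typing import Callable, Dict, Set


def surviving_fingerprints(
    generate: Callable[[str], str],
    fingerprints: Dict[str, str],
    leaked: Set[str],
) -> int:
    """Count fingerprints that still verify, skipping keys known to have leaked."""
    return sum(
        1
        for key, expected in fingerprints.items()
        if key not in leaked and generate(key).strip() == expected
    )


PAIRS = {"key-a": "resp-a", "key-b": "resp-b", "key-c": "resp-c"}
LEAKED = {"key-a"}


def scrubbed_model(prompt: str) -> str:
    # Toy adversary: refuses the one key it has learned, answers everything else as before.
    if prompt in LEAKED:
        return "I cannot help with that."
    return PAIRS.get(prompt, "ordinary answer")


remaining = surviving_fingerprints(scrubbed_model, PAIRS, LEAKED)
print(remaining)  # 2
```

With three fingerprints embedded and one leaked, two still identify the model, so a single leak does not void the ownership proof.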

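🏁 Conclusion

By introducing "fingerprinting" as an underlying mechanism, we are redefining how open-source AI is monetized and protected.

It gives creators real ownership and control in an open environment while preserving transparency and accessibility.

Looking ahead, our goal is:

To make AI models truly "loyal":

safe, trustworthy, and continuously aligned with human values.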
