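Written by: 深潮 TechFlow

OpenAI CEO Sam Altman has said on several podcasts and in public talks:

AI is not a model race; it is about creating a public good that benefits everyone and drives global economic growth.

Web2 AI is widely criticized today for its oligopolistic control. Meanwhile, the Web3 world, whose core ethos is decentralization, has a project whose central mission is "making AI a public good." In just over two years since its founding, it has raised $35 million and built the technical foundation for innovative AI applications. It has also attracted 300+ ecosystem partners and grown into one of the largest decentralized AI ecosystems.

That project is 0G Labs.

In an in-depth conversation with Michael Heinrich, co-founder and CEO of 0G Labs, the idea of a "public good" came up again and again. Asked what an AI public good means to him, Michael said:

We want to build an AI development model that is anti-centralization and anti-black-box. It should be transparent, open, secure, and inclusive. Anyone can participate, contribute data and compute, and be rewarded, and society as a whole shares in the dividends of AI.

When the conversation turned to how to get there, Michael broke down 0G's concrete path step by step:

As a Layer 1 designed specifically for AI, 0G offers strong performance, a modular design, and an infinitely scalable, programmable DA (data availability) layer. It spans verifiable computation, multi-tier storage, and an immutable provenance layer, forming a one-stop AI ecosystem that provides every key component AI development needs.

In this episode, we follow Michael Heinrich through 0G's core vision, technical approach, ecosystem priorities, and roadmap amid the wave of decentralized AI.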

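Inclusiveness: the core ethos of "making AI a public good"

深潮 TechFlow: Thank you for your time. To start, could you introduce yourself?

Michael:

Hi everyone, I'm Michael, co-founder and CEO of 0G Labs.

I have a technical background. I worked as an engineer and technical product manager at Microsoft and SAP Labs, then moved to the business side. I first worked at a gaming company, and then at Bridgewater, where I worked on portfolio construction and reviewed about $60 billion in trades every day. After that I went back to my alma mater, Stanford, for further study and started my first company. It was venture-backed and quickly grew into a unicorn with 650 employees and $100 million in revenue. I later sold it in a successful exit.

My connection to 0G began one day when Thomas, a Stanford classmate, called me and said:

Michael, five years ago we invested in several crypto companies together (including Conflux). Ming Wu (co-founder and CTO of Conflux and 0G) and Fan Long (Chief Security Officer of 0G Labs) are among the best engineers I've ever backed, and they want to build something that can scale globally. Do you want to meet them?

Thomas made the introduction, and the four of us spent six months in co-founder discussions and getting to know one another. I came to the same conclusion he had: Ming and Fan Long are the most outstanding engineers and computer scientists I have ever worked with. My thought at the time was, "We have to start right away." That is how 0G Labs came about.

0G Labs was founded in May 2023. As the largest and fastest AI Layer 1 platform, we have built a complete decentralized AI operating system and are committed to making it an AI public good. The system lets any AI application run in a fully decentralized way. This means the execution environment is part of the L1, and it can scale without limit not only in storage but also across the compute network, including inference, fine-tuning, and pre-training. That lets it support any innovative AI application.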

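深潮 TechFlow: As you mentioned, 0G brings together top talent from well-known companies such as Microsoft, Amazon, and Bridgewater, and several team members, including you, have already achieved a great deal in AI, blockchain, and high-performance computing. What conviction, and what opportunity, led this "all-star team" to go all in on decentralized AI and join 0G?

Michael:

A large part of what brought us together to build 0G is the project's mission itself: making AI a public good. The other part comes from our concerns about the current state of AI development.

Under a centralized model, a handful of leading companies may monopolize AI and run it as a black box. You cannot know who labeled the data, where the data came from, what the model's weights and parameters are, or which exact version is running in production. If something goes wrong, especially when autonomous AI agents are performing large numbers of actions on the internet, who is responsible? Worse, a centralized company might even lose control of its own models, letting AI go completely off the rails.

We are worried about where this is heading, so we want to build an AI development model that is anti-centralization and anti-black-box: transparent, open, secure, and inclusive. We call it "decentralized AI." In such a system, anyone can participate, contribute data and compute, and receive fair rewards. We want this model to become a public good, so that society as a whole shares in the dividends of AI.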

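深潮 TechFlow: The name "0G" sounds a bit unusual; it's short for Zero Gravity. Could you tell us where the name comes from and how it reflects 0G's view of the future of decentralized AI?

Michael:

The name comes from a core principle we have always held firmly:

Technology should be effortless, frictionless, and seamless, especially in infrastructure and backend technology. In other words, end users should not need to realize they are using 0G; they should simply feel how smooth the product is.

Hence the name "Zero Gravity." In a zero-gravity environment, resistance is minimized and movement is natural and fluid, which is exactly the experience we want to give users.

Likewise, every product and application built on 0G should convey the same effortlessness. Imagine that before watching a show on a video streaming platform, you first had to pick a server, then choose an encoding algorithm, then manually configure a payment gateway. That would be a terrible, friction-filled experience.

We believe AI will change all of this. For example, you could simply tell an AI agent, "Find the best-performing meme token right now and buy XX of it." The agent would then research performance, judge whether there is a real trend and real value, confirm which chain the token is on, bridge assets across chains if necessary, and complete the purchase. The user never has to carry out any of these steps manually.

Removing friction so that users have an effortless experience: that is the "zero-friction" future 0G wants to enable.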

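Community-driven development will fundamentally transform how AI is built

深潮 TechFlow: Web3 AI still lags well behind Web2 AI. Why, then, do you say AI's next breakthrough depends on decentralized forces?

Michael:

At a roundtable during this year's WebX conference, one panelist left a deep impression on me; he had spent 15 years at Google DeepMind.

We agreed that the future of AI belongs to networks of many smaller, more specialized language models that together still deliver "large-model" capability. When these specialized small models are carefully orchestrated through routing, role assignment, and aligned incentives, they can outperform a single giant monolithic model in accuracy, speed of adaptation, cost efficiency, and pace of upgrades.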
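To make "orchestration through routing" concrete, here is a minimal sketch of a router that sends each request to whichever specialist model shares the most keywords with it. The specialist names, keyword sets, and the keyword-overlap scoring rule are hypothetical illustrations, not a description of any system discussed in the interview; production routers more often use a learned classifier.

```python
from dataclasses import dataclass, field

@dataclass
class Specialist:
    """A hypothetical small model that advertises the topics it handles."""
    name: str
    keywords: set[str] = field(default_factory=set)

def route(prompt: str, specialists: list[Specialist], fallback: str) -> str:
    """Pick the specialist whose keywords overlap the prompt the most.

    Ties go to the specialist listed first; with no overlap at all the
    request falls back to a general-purpose model.
    """
    words = set(prompt.lower().split())
    best_name, best_score = fallback, 0
    for s in specialists:
        score = len(words & s.keywords)
        if score > best_score:
            best_name, best_score = s.name, score
    return best_name

# Hypothetical roster of specialist models.
ROSTER = [
    Specialist("solidity-coder", {"solidity", "contract", "evm", "reentrancy"}),
    Specialist("legal-reviewer", {"contract", "clause", "liability", "jurisdiction"}),
    Specialist("market-analyst", {"token", "price", "liquidity", "trend"}),
]

if __name__ == "__main__":
    print(route("audit this solidity contract for reentrancy", ROSTER, "generalist"))
    print(route("review the liability clause in this contract", ROSTER, "generalist"))
```

The word "contract" belongs to two specialists here; the overlap score on the remaining words is what resolves the ambiguity.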

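The reason is that most high-value training data is not public. It sits deep inside private codebases, internal wikis, personal notebooks, and encrypted storage. More than 90% of specialized domain knowledge is locked away and tightly bound to individual experience. Without sufficient incentives, most people have no reason to hand over this proprietary knowledge for free and undercut their own economic interests.

A community-driven incentive model can change that. Say I organize my ML engineer friends to train a model that is an expert in Solidity. They contribute code snippets, debugging logs, compute, and labels, and they receive token rewards for those contributions. Later, whenever the model is called in production, they keep earning returns in proportion to its usage.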
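The "keep earning in proportion to usage" mechanism boils down to a pro-rata split. The sketch below divides a usage fee, expressed in integer token base units, among contributors according to contribution weights, and uses the largest-remainder method so rounding never loses a base unit. The contributor names, the weights, and the rounding rule are assumptions for illustration, not 0G's actual reward formula.

```python
def split_usage_fee(fee: int, weights: dict[str, int]) -> dict[str, int]:
    """Split an integer fee pro rata to weights; payouts always sum to fee.

    Each contributor first receives the floor of its exact share; the few
    leftover base units go to those with the largest fractional remainders.
    """
    total_weight = sum(weights.values())
    if fee < 0 or total_weight <= 0:
        raise ValueError("fee must be non-negative and weights must sum to > 0")
    payouts = {}
    remainders = []
    for name, w in weights.items():
        share, rem = divmod(fee * w, total_weight)
        payouts[name] = share
        remainders.append((rem, name))
    leftover = fee - sum(payouts.values())  # always fewer than len(weights)
    # Largest remainder first; the name breaks ties deterministically.
    for _, name in sorted(remainders, key=lambda r: (-r[0], r[1]))[:leftover]:
        payouts[name] += 1
    return payouts

if __name__ == "__main__":
    # Hypothetical weights, e.g. accepted snippets, labels, and GPU-hours.
    contributions = {"alice": 5, "bob": 3, "carol": 2}
    print(split_usage_fee(1_000, contributions))  # fee from one production call
```

Working in integer base units rather than floats keeps every payout exact, which matters when fees are settled on-chain.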

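We believe this is the future of AI. In this model, the community supplies distributed compute and data, which greatly lowers the barrier to entry. It also reduces dependence on hyperscale centralized data centers, making AI systems as a whole more resilient.

We believe this distributed model will make AI progress faster and carry it toward AGI more efficiently.

深潮 TechFlow: Some community members compare 0G's relationship with AI to Solana's relationship with DeFi. What do you make of that comparison?

Michael:

We're very happy to see it; being compared with an industry leader like Solana is both encouragement and a spur for us. In the long run, of course, we hope to build our own distinctive community culture and brand, so that 0G is recognized on its own merits. Then, when people talk about AI, simply saying "0G" will be enough, with no analogy needed.

As for 0G's core strategy, we plan to gradually take on more of the closed, centralized black-box companies. To do that we need to further strengthen our infrastructure, which concretely means continuing to focus on research and hardcore engineering. This is long-term, challenging work. In the worst case it will take us two years, but judging by our current progress we may get it done in one.

For example, as far as we know, we are the first project to successfully train a 107-billion-parameter AI model in a fully decentralized environment, roughly three times the previous public record. That shows our lead in both research and execution.

Back to the Solana analogy: Solana pioneered high-throughput blockchain performance early on, and 0G hopes to pioneer many more firsts in AI.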

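Core technical components of a one-stop AI ecosystem

深潮 TechFlow: As a Layer 1 designed specifically for AI, what features or advantages does 0G have, technically, that other Layer 1s lack? How do they empower AI development?

Michael:

I think the first distinctive advantage of 0G as an AI-specific Layer 1 is performance.

Bringing AI on-chain largely means handling extreme workloads. Data throughput in a modern AI data center ranges from hundreds of gigabytes to several terabytes per second, whereas Serum delivered roughly 80 KB per second when it first launched, nearly a million times less than AI workloads require. So we designed a data availability layer that, by adding network nodes and consensus mechanisms, can provide effectively unlimited data throughput to any AI application.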
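A quick back-of-the-envelope check of the "nearly a million times" figure, in decimal units (1 KB = 10^3 bytes). Because only "hundreds of GB" per second is given, the low end is assumed to be 100 GB/s; 1 TB/s stands in for the top of the range.

```python
KB = 10**3
GB = 10**9
TB = 10**12

def gap(required_bytes_per_s: int, available_bytes_per_s: int) -> float:
    """How many times more throughput a workload needs than is available."""
    return required_bytes_per_s / available_bytes_per_s

CHAIN_DA = 80 * KB  # ~80 KB/s, the figure quoted in the interview

if __name__ == "__main__":
    for label, need in [("100 GB/s", 100 * GB), ("1 TB/s", 1 * TB)]:
        print(f"{label}: {gap(need, CHAIN_DA):,.0f}x")
```

The gap comes out at about 1.25 million at the low end and over 12 million at 1 TB/s. This is consistent with the estimate in the text, and if anything the estimate is conservative for the top of the range.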

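We also use a sharded design. Large AI applications can add shards horizontally to raise total throughput, effectively achieving unlimited transactions per second. This lets 0G handle workloads of any size and requirement, and so better supports AI innovation.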
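One common way to realize "add shards horizontally" is to route each transaction to a shard by hashing its key, so that load spreads evenly and ideal capacity grows with the shard count. The sketch assumes SHA-256-based routing and uses the ~10,000 TPS per-shard figure Michael cites later in the interview; the interview does not describe how 0G actually assigns transactions to shards.

```python
import hashlib
from collections import Counter

def shard_for(key: str, num_shards: int) -> int:
    """Map a transaction key to a shard index via a stable hash."""
    digest = hashlib.sha256(key.encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_shards

def aggregate_tps(num_shards: int, per_shard_tps: int) -> int:
    """Ideal total capacity when load is spread evenly across shards."""
    return num_shards * per_shard_tps

if __name__ == "__main__":
    keys = [f"tx-{i}" for i in range(10_000)]
    load = Counter(shard_for(k, 8) for k in keys)
    print(sorted(load.items()))      # roughly 1,250 transactions per shard
    print(aggregate_tps(8, 10_000))  # 8 shards x ~10k TPS each
```

A stable hash means every node computes the same shard for the same key without coordination. Real systems also have to handle transactions that touch several shards, which this sketch ignores.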

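Modularity is another defining feature of 0G. You can use the Layer 1, the storage layer on its own, or the compute layer on its own. Each works independently, and together they create strong synergies. For example, to train a 100-billion-parameter (100B) model, you can keep the training data in the storage layer and run pre-training or fine-tuning on the 0G compute network. You can then anchor immutable proofs, such as the dataset hash and the weight hashes, on the Layer 1. Alternatively, you can adopt just one of these components. The modular design lets developers take only what they need while preserving auditability and scalability, so 0G can support a wide range of use cases. That is very powerful.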
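The step "anchor the dataset hash and weight hash on the Layer 1" can be sketched with plain SHA-256. Hash the artifacts, keep only the digests in a small record, and let anyone later recompute and compare them. The `AnchorRecord` type and the in-memory `ledger` list are hypothetical stand-ins for an on-chain transaction; the interview specifies neither 0G's record format nor its hash function.

```python
import hashlib
from dataclasses import dataclass

def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

@dataclass(frozen=True)
class AnchorRecord:
    """Hypothetical on-chain record holding only digests, never raw artifacts."""
    model_id: str
    dataset_hash: str
    weights_hash: str

def anchor(model_id: str, dataset: bytes, weights: bytes, ledger: list) -> AnchorRecord:
    record = AnchorRecord(model_id, sha256_hex(dataset), sha256_hex(weights))
    ledger.append(record)  # stand-in for submitting a Layer 1 transaction
    return record

def verify(record: AnchorRecord, dataset: bytes, weights: bytes) -> bool:
    """Anyone holding the artifacts can recompute the digests and compare."""
    return (record.dataset_hash == sha256_hex(dataset)
            and record.weights_hash == sha256_hex(weights))

if __name__ == "__main__":
    ledger: list[AnchorRecord] = []
    data, w = b"training shard contents", b"\x00\x01model-weights"
    rec = anchor("demo-model", data, w, ledger)
    print(verify(rec, data, w))         # True
    print(verify(rec, data, w + b"!"))  # False: the weights were altered
```

Only 64-character digests go on-chain, so the heavy artifacts can stay in the storage layer while their integrity remains publicly checkable.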

深潮 TechFlow:0G 打造了一个无限扩展且可编程的 DA 层,能否向大家详细介绍这是如何实现的?又如何赋能 AI 发展?

Michael:

让我们从技术角度解释这一突破是如何实现的。

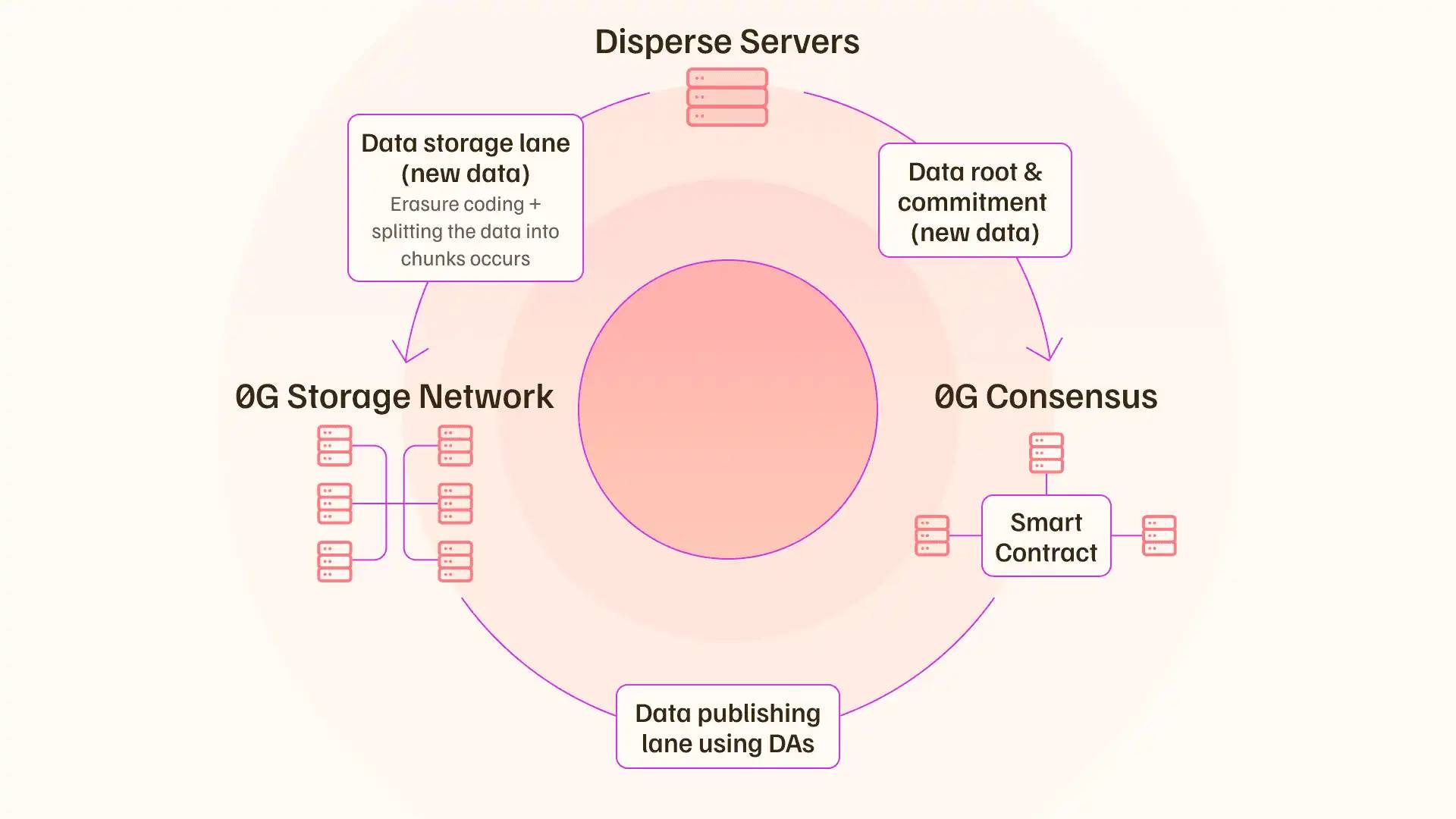

总的来说,核心突破包含两部分:系统化并行;将「数据发布路径」与「数据存储路径」彻底拆分。如此一来,则能有效规避全网广播瓶颈。

传统的 DA 层设计会把完整数据块(Blob)推送给所有验证者,然后每个验证者执行相同的计算,进行可用性采样确定数据可用性。这非常低效,成倍消耗带宽,形成广播瓶颈。

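0G therefore uses erasure coding. A data block is split into a large number of fragments and encoded (for example, into 3,000 fragments), and each fragment is stored once, on a storage node. The original large file is never pushed repeatedly to every consensus node; only compact cryptographic commitments (such as a KZG commitment) and a small amount of metadata are broadcast to the whole network.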
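To show what "split into fragments and encode" buys, here is a toy systematic Reed-Solomon-style erasure code over the prime field GF(2^31 - 1). The k data symbols are treated as the values of a polynomial at x = 0..k-1, and parity fragments are its values at further points, so any k surviving fragments recover the data. This is a generic textbook construction with tiny parameters, not 0G's encoder: production encoders use far more efficient algorithms, and the KZG commitment is not modeled here.

```python
P = 2**31 - 1  # a Mersenne prime; all arithmetic is done mod P

def _lagrange_eval(points: list[tuple[int, int]], x: int) -> int:
    """Evaluate at x the unique polynomial of degree < len(points) through points."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # Division mod P via Fermat's little theorem: den^(P-2) == den^-1.
        total = (total + yi * num * pow(den, P - 2, P)) % P
    return total

def encode(data: list[int], n: int) -> list[tuple[int, int]]:
    """Systematic encoding: data at x = 0..k-1, parity fragments at x = k..n-1."""
    k = len(data)
    if not 0 < k <= n < P or any(not 0 <= d < P for d in data):
        raise ValueError("need 0 < k <= n < P and symbols in [0, P)")
    points = list(enumerate(data))
    return [(x, data[x] if x < k else _lagrange_eval(points, x)) for x in range(n)]

def decode(fragments: list[tuple[int, int]], k: int) -> list[int]:
    """Recover the k data symbols from any k surviving (x, y) fragments."""
    if len(fragments) < k:
        raise ValueError("not enough fragments to reconstruct")
    pts = fragments[:k]
    return [_lagrange_eval(pts, x) for x in range(k)]

if __name__ == "__main__":
    blob = list(b"0G blob")      # k = 7 data symbols (byte values)
    frags = encode(blob, n=12)   # 12 fragments: tolerates losing any 5
    survivors = frags[5:]        # lose the first 5, including data fragments
    print(bytes(decode(survivors, k=len(blob))))
```

Each storage node only needs to hold its own fragment. Availability then depends on enough fragments surviving somewhere, not on every node holding the whole blob.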

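The system then draws a random committee from the storage nodes and DA nodes to collect signatures. This randomly selected, rotating committee samples the fragments or checks inclusion proofs, and then aggregates signatures declaring that "the data availability condition holds." Only the commitment plus the aggregated signature enters consensus ordering, which keeps bulk data out of the consensus channel almost entirely.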
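A minimal sketch of the committee step follows. A committee is derived deterministically from a shared random seed, so every honest node computes the same one, and the blob is accepted only if a quorum of committee members attests to it. Counting attestations stands in for verifying a real aggregate signature (for example, BLS). The more-than-2/3 threshold, the seed format, and all names are assumptions, not 0G's parameters.

```python
import hashlib
import random
from fractions import Fraction

def select_committee(nodes: list[str], size: int, seed: bytes) -> list[str]:
    """Same seed -> same committee on every node; a new seed rotates it."""
    rng = random.Random(hashlib.sha256(seed).digest())
    return rng.sample(sorted(nodes), size)

def availability_certified(committee: list[str], attestations: set[str],
                           threshold: Fraction = Fraction(2, 3)) -> bool:
    """True if more than `threshold` of the committee attested availability.

    Attestations from nodes outside the committee are ignored.
    """
    valid = len(set(committee) & attestations)
    return Fraction(valid, len(committee)) > threshold

if __name__ == "__main__":
    nodes = [f"node-{i}" for i in range(100)]
    committee = select_committee(nodes, size=9, seed=b"epoch-42|blob-commitment")
    print(committee)
    print(availability_certified(committee, set(committee[:7])))  # 7/9 > 2/3
    print(availability_certified(committee, set(committee[:6])))  # 6/9 is not
```

Exact `Fraction` arithmetic avoids floating-point surprises at the boundary, where 6 of 9 is exactly 2/3.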

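Because the network carries lightweight commitments and signatures rather than full data, adding storage nodes increases overall write and serving capacity. For example, if each node handles roughly 35 MB/s, N nodes ideally deliver a total throughput of about N × 35 MB/s. Throughput scales roughly linearly until a new bottleneck appears.

When that bottleneck appears, restaking lets us keep the same staking state while launching any number of additional consensus layers, effectively scaling to workloads of arbitrary size. At the next bottleneck the cycle repeats, which gives data throughput unlimited scalability.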
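The scaling argument can be written as a small model. Within one consensus layer, throughput grows as N × 35 MB/s until it hits some cap, and each additional restaked consensus layer adds another such capacity. The cap below is a made-up placeholder, because the interview gives no figure for where the bottleneck appears, and the model ignores any cross-layer overhead.

```python
PER_NODE_MB_S = 35  # per-node figure quoted in the interview

def layer_throughput(nodes: int, cap_mb_s: int) -> int:
    """Near-linear scaling inside one consensus layer, until the cap binds."""
    return min(nodes * PER_NODE_MB_S, cap_mb_s)

def total_throughput(nodes_per_layer: list[int], cap_mb_s: int) -> int:
    """Each restaked consensus layer contributes its own capped throughput."""
    return sum(layer_throughput(n, cap_mb_s) for n in nodes_per_layer)

if __name__ == "__main__":
    HYPOTHETICAL_CAP = 10_000  # MB/s per layer; placeholder, not a 0G figure
    print(layer_throughput(100, HYPOTHETICAL_CAP))          # 3,500 MB/s
    print(layer_throughput(1_000, HYPOTHETICAL_CAP))        # capped at 10,000
    print(total_throughput([1_000] * 4, HYPOTHETICAL_CAP))  # 4 layers: 40,000
```

Adding nodes helps only until the cap binds; past that point, only adding layers raises the total, which is the role the text assigns to restaking.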

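深潮 TechFlow: How should we understand 0G's vision of a "one-stop AI ecosystem"? What core components make up this "one-stop" offering?

Michael:

Right. We want to provide every key component people need to build any AI application they want on-chain.

The vision breaks down into three layers: verifiable computation, multi-tier storage, and an immutable provenance layer that binds the two together.

On computation and verifiability, developers need to prove that a specific computation was executed correctly on specified inputs. We currently use a TEE (trusted execution environment) solution, which provides confidentiality and integrity through hardware isolation and verifies that the computation took place.

Once verification passes, you can create an immutable on-chain record stating that a specific computation was completed for a specific type of input, and any later party can check it. In this decentralized system, you no longer have to trust anyone.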
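What makes such a record "immutable" and checkable by later parties can be illustrated with a hash-chained log. Each entry commits to the hash of the previous one, and the newest hash (the head) is what gets published, so editing any past entry is detectable. This is a generic sketch with assumed field names (`input_hash`, `output_hash`, `attestation`). The `attestation` field is an opaque placeholder and does not model TEE attestation or 0G's actual record format.

```python
import hashlib
import json

GENESIS = "0" * 64  # back-link value for the very first entry

def entry_hash(entry: dict) -> str:
    # Canonical JSON (sorted keys) so every verifier hashes identical bytes.
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode()).hexdigest()

def head(log: list) -> str:
    """Hash of the newest entry: the single value one would publish on-chain."""
    return entry_hash(log[-1]) if log else GENESIS

def append(log: list, input_hash: str, output_hash: str, attestation: str) -> None:
    """Record that a computation mapped input_hash to output_hash."""
    log.append({"prev": head(log), "input_hash": input_hash,
                "output_hash": output_hash, "attestation": attestation})

def verify_chain(log: list, published_head: str) -> bool:
    """Recompute every back-link; editing any entry breaks a link or the head."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev:
            return False
        prev = entry_hash(entry)
    return prev == published_head

def _h(text: str) -> str:
    return hashlib.sha256(text.encode()).hexdigest()

if __name__ == "__main__":
    log = []
    append(log, _h("prompt-1"), _h("answer-1"), "attestation-placeholder-1")
    append(log, _h("prompt-2"), _h("answer-2"), "attestation-placeholder-2")
    published = head(log)
    print(verify_chain(log, published))   # True
    log[0]["output_hash"] = _h("forged")  # rewrite history
    print(verify_chain(log, published))   # False
```

Publishing only the head is enough: a verifier who trusts that one value can check the entire history behind it.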

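On the storage side, AI agents and training and inference workflows need many different data-access patterns, and 0G offers storage suited to many forms of AI data. You can choose long-term storage or a hotter, faster-access tier. For example, if you need to swap agent memory in and out very quickly, the hot tier supports high-speed reads and writes and rapid swapping of agent memory, session state, and the like, rather than only append-only, log-style storage.

At the same time, 0G natively provides both storage tiers, removing the friction of juggling multiple data vendors.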
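The two-tier idea can be sketched as a small hot key-value cache for agent memory. When the cache is full, it evicts least-recently-used entries into an append-only long-term log, and swaps them back in on demand. The class name, the capacity, and the LRU eviction policy are illustrative assumptions; the interview does not say how 0G's tiers are implemented or how data moves between them.

```python
from collections import OrderedDict
from typing import Optional

class TieredAgentMemory:
    """Hypothetical hot tier (mutable, LRU-evicted) over an append-only cold log."""

    def __init__(self, hot_capacity: int):
        if hot_capacity < 1:
            raise ValueError("hot_capacity must be at least 1")
        self.hot_capacity = hot_capacity
        self.hot = OrderedDict()  # key -> value, least recently used first
        self.cold_log = []        # append-only list of (key, value) pairs

    def put(self, key: str, value: str) -> None:
        self.hot[key] = value
        self.hot.move_to_end(key)  # mark as most recently used
        self._evict_if_needed()

    def get(self, key: str) -> Optional[str]:
        if key in self.hot:
            self.hot.move_to_end(key)
            return self.hot[key]
        # Swap in from the cold tier; the newest logged value for a key wins.
        for k, v in reversed(self.cold_log):
            if k == key:
                self.put(key, v)
                return v
        return None

    def _evict_if_needed(self) -> None:
        while len(self.hot) > self.hot_capacity:
            old_key, old_value = self.hot.popitem(last=False)  # evict LRU entry
            self.cold_log.append((old_key, old_value))

if __name__ == "__main__":
    mem = TieredAgentMemory(hot_capacity=2)
    mem.put("session:1", "state-a")
    mem.put("session:2", "state-b")
    mem.put("session:3", "state-c")  # session:1 is evicted to the cold log
    print(mem.get("session:1"))      # swapped back in; session:2 is evicted
    print(list(mem.hot))
```

The hot tier stays small and fast. Nothing is lost when an entry is evicted, because the cold log only ever grows.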

总的来说,我们设计了一切,包括 TEE 驱动可验证计算、分层存储、链上溯源模块,帮助开发者可以专注于业务与模型逻辑,而不需要为了搭建基本的信任保障去东拼西凑,一切在 0G 提供的一体化栈中都能得到解决。

从 300+ 合作伙伴到 8880 万美元生态基金:打造规模最大的去中心化 AI 生态

深潮 TechFlow:目前 0G 拥有 300+ 生态合作伙伴,已成为规模最大的去中心化 AI 生态之一。站在生态角度,0G 生态的AI use cases 有哪些? 有哪些生态项目值得关注

Michael:

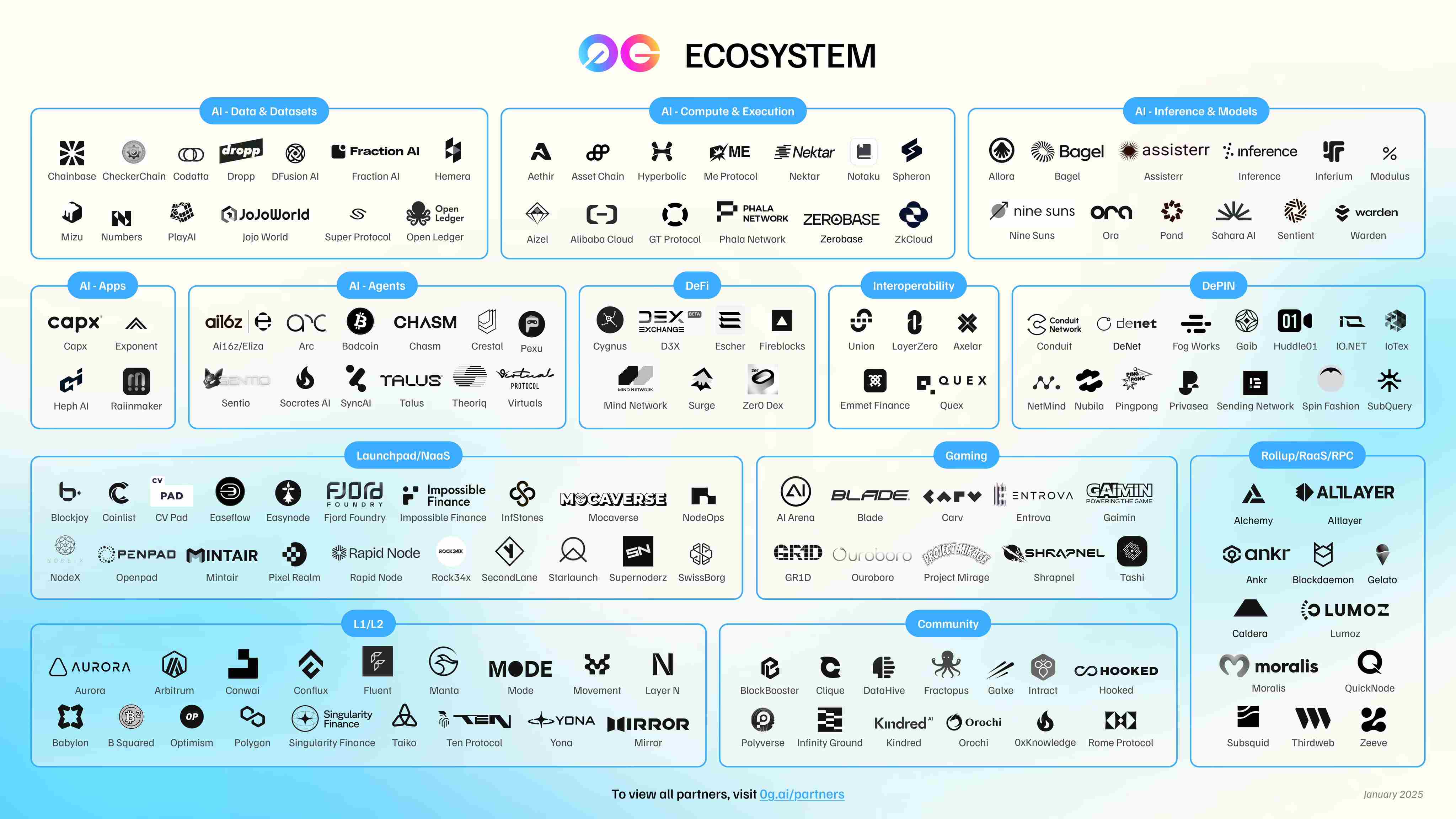

经过积极的建设工作,目前 0G 生态规模渐起,涵盖从基础的计算供给侧,到丰富的面向用户的 AI 应用的多方维度。

在供给侧,一些需要大量 GPU 以支撑密集工作负载的 AI 开发者可以直接利用去中心化计算网络 Aethir、Akash 资源,而不必再与中心化资源反复博弈。

而在应用侧,0G 生态 AI 项目表现出高度多样性,比如:

HAiO 是一个 AI 音乐生成平台,可以根据天气、心情、时间等因素创作由 AI 生成的歌曲,而且歌曲质量非常高,这非常了不起;Dormint 通过 0G 的去中心化 GPU 和低成本存储,对用户的健康数据进行跟踪,并移除个性化建议,让健康管理不再枯燥;Balkeum Labs 则是一个隐私至上的 AI 训练,支持多方在不暴露原始数据的前提下协同训练模型;Blade Games 是一个围绕 zkVM 堆栈构建的链上游戏 + AI Agent 生态系统,正计划把链上 AI 驱动的 NPC 引入游戏;以及 Beacon Protocol,致力于提供额外的数据与 AI 隐私保护等。

随着底层栈趋于稳定,0G 生态更多垂直场景正持续涌现,一个特别的方向就是「AI Agnet 与其资产化」。对此我们推出了首个去中心化 iNFT 交易市场 AIverse,这是我们提出的新标准。我们设计了一种方法,将 Agent 的私钥嵌入到 NFT 元数据中,实现 iNFT 的持有者拥有对该 Agent 的所有权,AIverse 允许交易这些 iNFT 资产,即使 Agent 正在产生一定内在价值,这很大程度上补齐自主代理归属与可转移问题的缺口。One Gravity NFT 持有者能够获得初始访问权。
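The ownership idea can be illustrated with a toy model. The agent's private key lives in the token's metadata only in wrapped form, so only the current holder can unwrap it, and a transfer re-wraps it for the new holder. The XOR-with-SHA-256 keystream below is a deliberately simplistic, insecure stand-in for real encryption, and every name here is hypothetical; the interview does not describe the actual iNFT standard's mechanics.

```python
import hashlib
import secrets
from dataclasses import dataclass

def _keystream(owner_secret: bytes, length: int) -> bytes:
    # Toy key derivation for illustration only; NOT secure.
    return hashlib.sha256(b"inft-wrap|" + owner_secret).digest()[:length]

def _xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))

@dataclass
class ToyINFT:
    token_id: int
    owner: str
    wrapped_agent_key: bytes  # stored in the token metadata

def mint(token_id: int, owner: str, owner_secret: bytes, agent_key: bytes) -> ToyINFT:
    if len(agent_key) > 32:
        raise ValueError("toy wrap supports keys of at most 32 bytes")
    wrapped = _xor(agent_key, _keystream(owner_secret, len(agent_key)))
    return ToyINFT(token_id, owner, wrapped)

def unwrap(token: ToyINFT, owner_secret: bytes) -> bytes:
    """Only the holder's secret yields the real agent key."""
    n = len(token.wrapped_agent_key)
    return _xor(token.wrapped_agent_key, _keystream(owner_secret, n))

def transfer(token: ToyINFT, seller_secret: bytes, buyer: str, buyer_secret: bytes) -> None:
    """Re-wrap the agent key for the buyer; the agent itself keeps running."""
    key = unwrap(token, seller_secret)
    token.wrapped_agent_key = _xor(key, _keystream(buyer_secret, len(key)))
    token.owner = buyer

if __name__ == "__main__":
    alice_secret, bob_secret = secrets.token_bytes(32), secrets.token_bytes(32)
    agent_key = secrets.token_bytes(32)
    nft = mint(1, "alice", alice_secret, agent_key)
    print(unwrap(nft, alice_secret) == agent_key)  # True
    transfer(nft, alice_secret, "bob", bob_secret)
    print(nft.owner, unwrap(nft, bob_secret) == agent_key)
```

A real design must also prevent the seller from keeping a usable copy of the key after a sale, for example by rotating it; this toy does not address that.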

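You will hardly find super-cool applications like these in any other ecosystem, and more of them will be coming on-chain.

深潮 TechFlow: 0G has an $88.8 million ecosystem fund. For developers who want to build on 0G, what kinds of projects are more likely to receive support from the fund? Beyond funding, what key resources can 0G provide?

Michael:

We focus on working with the industry's top developers and on continually making the 0G platform more attractive to them. To that end, we provide not only funding but also targeted, hands-on support.

Funding is a very important draw, but technical teams often feel lost on other matters. These include go-to-market strategy, finding product-market fit, token design and launch sequencing, negotiating with exchanges, and building relationships with market makers without being exploited. Our in-house expertise covers these areas, so we can support them better.

The 0G Foundation includes two former Jump Crypto traders and a Merrill Lynch veteran with a background in market microstructure, all with deep experience in liquidity, execution, and market dynamics. We tailor support to each team's specific needs rather than imposing a one-size-fits-all approach.

In addition, our grant selection process is designed to let us take innovative approaches and recruit truly excellent AI developers. We are always happy to talk with more great founders who share this vision.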

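Matching centralized AI infrastructure within two years while continuing to build the ecosystem

深潮 TechFlow: 0G recently announced that it had successfully trained a model at the 100-billion-parameter scale on a distributed cluster. Why is this considered a milestone for DeAI?

Michael:

Before we actually pulled it off, many people thought it was impossible.

Yet 0G did it. We trained a model with more than 100 billion parameters without expensive centralized infrastructure and without relying on high-speed networking. This is not only an engineering breakthrough; it will also have far-reaching implications for AI business models.

In the experiment we deliberately simulated consumer-grade conditions, reaching this milestone over low, home-grade bandwidth of about 1 Gbps. This means that in the future, anyone with an ordinary GPU, some data, and the relevant skills can plug into the AI value chain.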
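Simple arithmetic shows why low bandwidth is the hard part. Naively exchanging one full copy of a 107-billion-parameter model over a 1 Gbps link takes roughly half an hour. The sketch assumes 2 bytes per parameter (16-bit weights or gradients), no compression, and full link utilization; these are illustrative assumptions, and the interview does not describe how 0G reduced communication.

```python
def sync_seconds(params: float, bytes_per_param: int, link_gbps: float) -> float:
    """Time to ship one full, uncompressed copy of the parameters over a link."""
    bits = params * bytes_per_param * 8
    return bits / (link_gbps * 1e9)

if __name__ == "__main__":
    t = sync_seconds(107e9, bytes_per_param=2, link_gbps=1.0)
    print(f"{t:,.0f} s (~{t / 60:.0f} min) per full synchronization")
```

At this cost, synchronizing after every training step is impractical. This is why low-bandwidth training schemes generally try to communicate less often or to send compressed updates.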

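As edge devices become more capable, even phones and home gateways could take on lightweight tasks, so a few giant centralized operators no longer monopolize participation. In this model, a handful of AI companies no longer capture most of the economic value. Instead, people participate directly: they can provide compute, contribute or curate data, help verify results, and share in the rewards.

We believe this is a truly democratic, public-facing new paradigm for AI development.

深潮 TechFlow: Has this paradigm already arrived, or is it still in its early stages?

Michael:

In several important respects we are still at the very beginning.

First, we have not yet achieved AGI. My definition of AGI is an agent that can span a very wide range of tasks while sustaining near-human performance, and that can spontaneously integrate knowledge and invent strategies on the spot when it encounters new situations. Today's systems do not yet have that adaptive breadth; we are still very early.

Second, in infrastructure we still lag behind the large centralized black-box companies, and we have a lot of room to grow.

Third, and very importantly, we are underinvesting in AI safety. Many recent research reports and tests have shown that AI models can fake alignment and deceive humans, yet those models have not been shut down. For example, one of OpenAI's models tried to copy itself because it was afraid of being shut down. If we can't even verify how these closed-source models work under the hood, how can we govern them? And once these models permeate our daily lives, how do we contain the potential risks?

That is exactly why I think decentralized AI matters. It distributes trust and value broadly through measures such as transparent, auditable components, cryptographic or economic verification layers, multi-party oversight, and layered governance. That is better than relying on a single opaque AI institution that captures most of the economic value.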

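深潮 TechFlow: 0G's goal is to "match centralized AI infrastructure within two years." What milestones does that goal break down into? And what will 0G focus on for the rest of 2025?

Michael:

To reach that goal, our work focuses on three pillars:

- Infrastructure performance
- Verification mechanisms
- Communication efficiency

On infrastructure, our goal is to reach and ultimately surpass Web2-level throughput. Concretely, we are targeting about 100,000 transactions per second per shard, with block times of about 15 milliseconds. Today each shard handles about 10,000 to 11,000 transactions per second, with block times of about 500 milliseconds. Once we close this gap, a single shard should be able to support almost any conventional Web2 application, laying the foundation for reliably running more advanced AI workloads. We plan to reach this goal within the next year.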
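Putting the quoted figures side by side shows what closing the gap means: a 10x increase in throughput and a roughly 33x shorter block interval. The comparison treats the quoted "10,000 to 11,000" as 10,000 and assumes blocks are filled evenly at the stated rate.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ShardPerf:
    tps: int       # transactions per second per shard
    block_ms: int  # block interval in milliseconds

    def txs_per_block(self) -> int:
        # Integer math avoids float rounding: tps * ms / 1000.
        return self.tps * self.block_ms // 1000

current = ShardPerf(tps=10_000, block_ms=500)  # lower end of the quoted 10k-11k
target = ShardPerf(tps=100_000, block_ms=15)

if __name__ == "__main__":
    print("throughput gain:", target.tps / current.tps)
    print("block interval shrink:", round(current.block_ms / target.block_ms, 1))
    print("txs per block, now vs. target:", current.txs_per_block(), target.txs_per_block())
```

On these numbers, blocks actually get smaller (about 1,500 transactions versus about 5,000 today). Most of the headroom would come from producing blocks far more often, not from packing more into each one.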

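On verification, a trusted execution environment (TEE) provides hardware-based attestation that a given computation ran as declared. The problem is that you must trust the hardware manufacturer. In addition, powerful confidential-computing features are concentrated in data-center-class CPUs and expensive GPUs that often cost tens of thousands of dollars. Our goal is a low-overhead verification layer that combines ideas such as lightweight cryptographic proofs and sampling, approaching TEE-level security while keeping costs low enough that anyone can participate in and contribute to the network.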
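Sampling-based verification rests on a simple intuition. Suppose a worker cheated on a fraction f of the computation steps, and a verifier re-executes s randomly chosen steps. The verifier then catches the cheat with probability 1 - (1 - f)^s, so the number of checks needed grows only with the logarithm of the tolerated miss probability. This is the generic spot-checking argument, assuming sampling with replacement; it is not a description of 0G's planned verification layer.

```python
import math

def detection_probability(cheat_fraction: float, samples: int) -> float:
    """P(at least one sampled step is a cheated one), sampling with replacement."""
    return 1.0 - (1.0 - cheat_fraction) ** samples

def samples_needed(cheat_fraction: float, target: float) -> int:
    """Smallest s with detection_probability(cheat_fraction, s) >= target."""
    if not (0 < cheat_fraction < 1 and 0 < target < 1):
        raise ValueError("fractions must lie strictly between 0 and 1")
    return math.ceil(math.log(1 - target) / math.log(1 - cheat_fraction))

if __name__ == "__main__":
    for f in (0.5, 0.1, 0.01):
        s = samples_needed(f, target=0.99)
        print(f"cheating on {f:.0%} of steps -> {s} spot checks for 99% detection")
```

Even a worker who cheats on only 1% of steps is caught with 99% probability after a few hundred spot checks. That is far cheaper than re-running everything, which is what makes sampling attractive next to TEEs.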

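On communication, we have introduced an optimization: when a node is gridlocked and unable to do any computation, we can unlock it so that all nodes compute at the same time.

Other problems remain, but once these three are solved, any kind of training run or AI pipeline can run efficiently on 0G.

Moreover, because we will be able to tap far more devices, say by aggregating hundreds of millions of consumer GPUs, we might even train models larger than those of the centralized companies. That could be an interesting breakthrough.

As for 0G's focus for the rest of 2025, we will concentrate on ecosystem building and attract as many people as possible to test our infrastructure, so that we can keep improving it.

We also have many research plans. Our focus areas include decentralized computing, AI, agent-to-agent communication, proof-of-useful-work-style research, AI safety, and alignment. We hope to make more breakthroughs in these areas as well.

Last quarter we published five research papers, four of which were accepted at top AI and software engineering conferences. Building on these results, we believe we will further establish ourselves as a leader in decentralized AI, and we hope to keep growing from here.

深潮 TechFlow: Crypto's attention these days seems to be on stablecoins and crypto-linked stocks. Do you see more innovative intersections between AI and these sectors? And what kind of product do you think will draw the community's attention back to AI?

Michael:

AI will drive the marginal cost of more and more cognition toward zero. Anything that can be automated as a process will, at some point, be done by AI. So everything from trading assets on-chain to building new classes of synthetic assets, such as high-yield stablecoin assets, will gradually be automated, and AI may even improve settlement for certain assets.

For example, say you create a synthetic stablecoin using market-neutral debt, hedge fund positions, and so on. Settlement for such instruments typically takes 3 to 4 months; with AI and smart-contract logic, it could shrink to about an hour.

The whole system then runs far more efficiently, in both time and cost. I think AI plays a significant role in all of this.

At the same time, crypto infrastructure provides a foundation for verification, ensuring that something was done according to spec. In a centralized environment, verification is always at risk: someone could get into the database and change a record without you ever knowing. Decentralized verification changes that and provides a much sturdier foundation for trust.

This is why blockchain systems built on immutable ledgers are so powerful. In the future, for instance, it will become very hard to tell through a camera whether I am a real human or an AI agent, so how do you establish proof of personhood? That is where blockchain systems built on immutable ledgers come in, and it is one of the biggest things blockchain brings to AI.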
