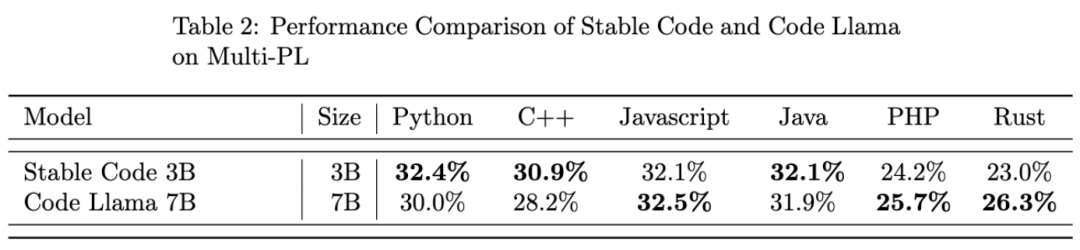

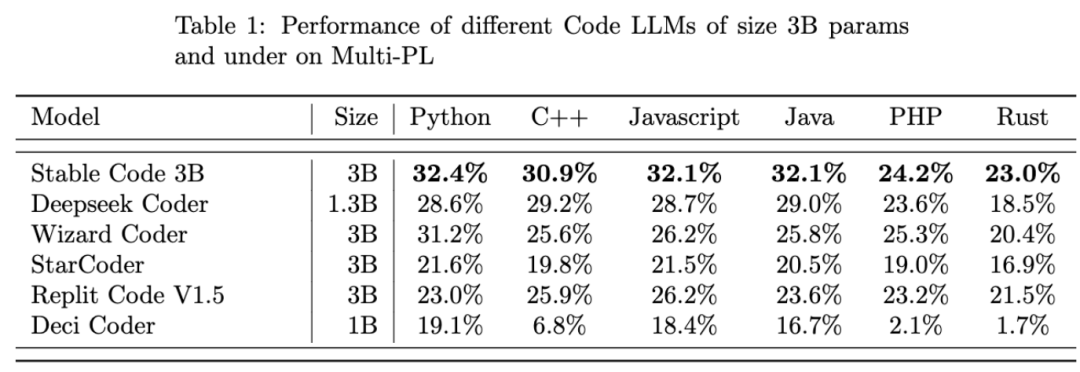

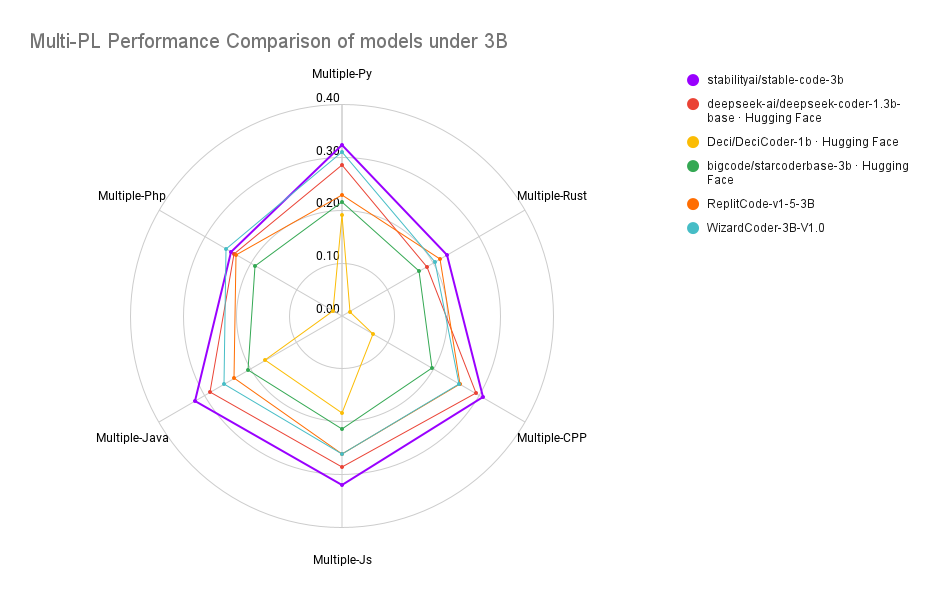

Stable Code 3B outperforms code models of similar size and performs comparably to CodeLLaMA 7B while being only about 40% of its size.

Source: Synced

Image Source: Generated by Wujie AI

Stability AI, a rising star in generative AI, has announced its first new model of 2024: Stable Code 3B. As the name suggests, it is a 3-billion-parameter model focused on code-related tasks.

It can run locally on a laptop without a dedicated GPU while remaining competitive with larger models such as Meta's CodeLLaMA 7B.

In late 2023, Stability AI began pushing toward smaller, more capable models, such as StableLM Zephyr 3B for text generation.

At the start of 2024, Stability AI wasted no time in releasing its first large language model of the year, Stable Code 3B. The model was in fact previewed as Stable Code Alpha 3B in August 2023, and Stability AI has been steadily improving it since then. The new Stable Code 3B is designed specifically for code completion and adds several new features.

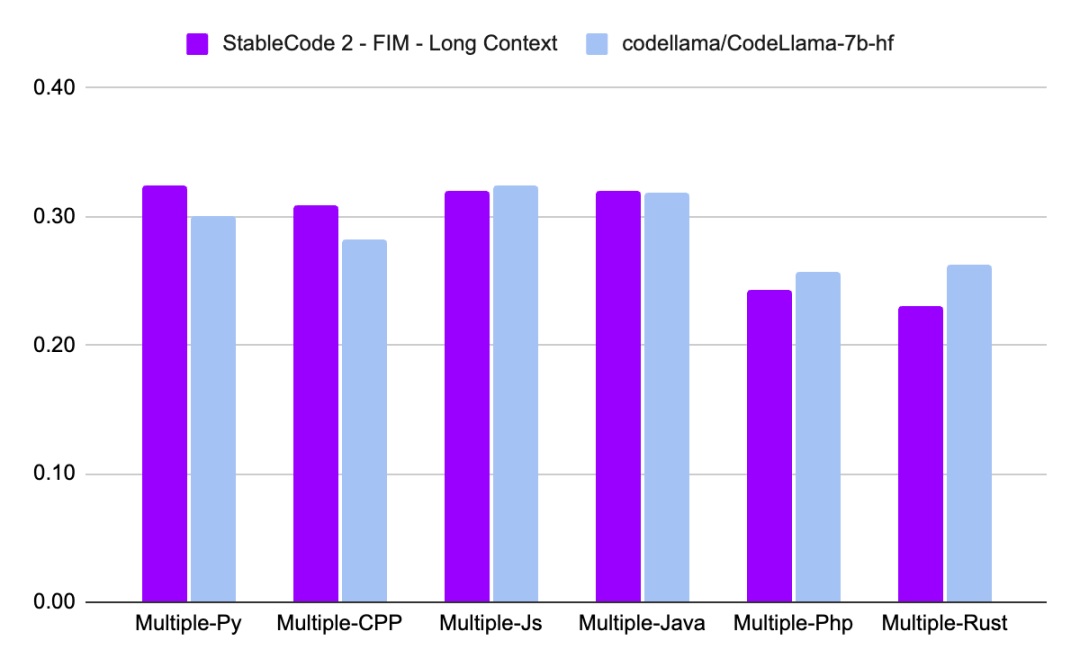

Compared to CodeLLaMA 7B, Stable Code 3B is 60% smaller but achieves comparable performance in programming tasks.

Among models of similar size, Stable Code 3B achieves state-of-the-art results on the MultiPL-E benchmark, outperforming StarCoder in programming languages including Python, C++, JavaScript, Java, PHP, and Rust.

Research Introduction

Stable Code 3B is built on Stable LM 3B, which was trained on 4 trillion tokens. Stable Code was then further trained on software-engineering-specific data, including code.

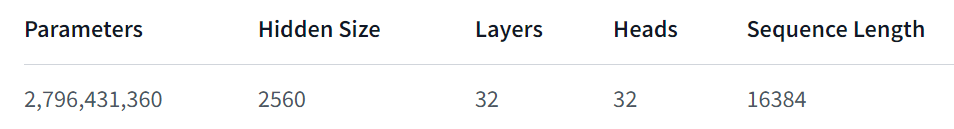

Stable Code 3B offers more features and performs well across multiple programming languages. It also supports Fill in the Middle (FIM), a training technique that lets the model complete code between existing text, and it can expand its context size. The base Stable Code model was trained on sequences of up to 16,384 tokens but, following an approach similar to CodeLlama's, uses Rotary Embeddings whose rotary base can optionally be raised to as much as 1,000,000, extending the model's context length to up to 100k tokens.
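To see why raising the rotary base helps with longer contexts, the sketch below computes rotary (RoPE) frequencies for the rotated slice of one attention head. It is a minimal illustration, not Stability AI's code: the 80-dimensional head is an assumption chosen for the example, the 25% rotary fraction comes from the architecture description in this article, and the function names are hypothetical. Each rotated pair of dimensions turns at its own frequency; the slowest pair's wavelength roughly bounds how far apart two positions can be before their angles start to repeat, and a larger base stretches that wavelength.

```python
import math


def rotary_inv_freqs(head_dim: int, rotary_pct: float, base: float) -> list[float]:
    """Inverse frequencies for the rotated slice of one attention head.

    Only the first `rotary_pct` of the head dimensions are rotated; they are
    processed in pairs, so there is one frequency per pair.
    """
    rotary_dims = int(head_dim * rotary_pct)
    return [base ** (-2 * i / rotary_dims) for i in range(rotary_dims // 2)]


def wavelengths(inv_freqs: list[float]) -> list[float]:
    """Token distance after which each pair's rotation angle advances by 2*pi."""
    return [2 * math.pi / f for f in inv_freqs]


if __name__ == "__main__":
    head_dim = 80      # hypothetical head size, for illustration only
    rotary_pct = 0.25  # the "first 25%" figure from the architecture description
    for base in (10_000.0, 1_000_000.0):
        longest = max(wavelengths(rotary_inv_freqs(head_dim, rotary_pct, base)))
        print(f"base={base:>11,.0f}  longest wavelength ~ {longest:,.0f} tokens")
```

With these assumed settings, moving the base from 10,000 to 1,000,000 stretches the longest wavelength by a factor of 100^0.9, roughly 63 times.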

Architecturally, Stable Code 3B is a decoder-only transformer similar to LLaMA, with the following modifications:

- Position Embeddings: Rotary position embeddings are applied to the first 25% of the head embedding dimensions to improve throughput.

- Tokenizer: A modified version of the GPTNeoX tokenizer, with added special tokens such as `<FIM_PREFIX>` for training the FIM capability.
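The FIM objective can be sketched as a pure string transformation. This is an illustrative sketch, not Stability AI's training pipeline: the lowercase token spellings `<fim_prefix>`, `<fim_suffix>` and `<fim_middle>` are assumptions (check the released tokenizer for the exact strings), and the helper names are hypothetical. During training, a document is cut into prefix, middle, and suffix and rearranged so the middle comes last; at inference, the same layout asks the model to generate the missing middle.

```python
import random

# Assumed special-token spellings; verify against the released tokenizer.
FIM_PREFIX = "<fim_prefix>"
FIM_SUFFIX = "<fim_suffix>"
FIM_MIDDLE = "<fim_middle>"


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Inference: ask the model to fill the gap between prefix and suffix.

    Uses prefix-suffix-middle (PSM) order; the model is expected to
    generate the missing middle after FIM_MIDDLE.
    """
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


def to_fim_training_example(doc: str, rng: random.Random) -> str:
    """Training: cut a document at two random points and move the middle to the end.

    Ordinary next-token training on the result teaches the model to produce a
    middle span conditioned on both the code before and after it.
    """
    i, j = sorted(rng.randint(0, len(doc)) for _ in range(2))
    prefix, middle, suffix = doc[:i], doc[i:j], doc[j:]
    return build_fim_prompt(prefix, suffix) + middle


if __name__ == "__main__":
    before = "def fib(n):\n"
    after = "    else:\n        return fib(n - 2) + fib(n - 1)\n"
    print(build_fim_prompt(before, after))
    print(to_fim_training_example(before + after, random.Random(0)))
```

Real pipelines typically apply this transformation to only a fraction of training documents and append an end-of-text token after the middle; both details are omitted here for brevity.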

Training

Training Dataset

Stable Code 3B was trained on a filtered mix of large open-source datasets from the Hugging Face Hub, including Falcon RefinedWeb, CommitPackFT, GitHub Issues, and StarCoder, further supplemented with mathematical data.

Training Infrastructure

- Hardware: Stable Code 3B was trained on Stability AI's cluster using 256 NVIDIA A100 40GB GPUs.

- Software: Stable Code 3B uses a fork of gpt-neox and was trained with 2D parallelism (data and tensor parallelism) under ZeRO-1. It relies on flash-attention, as well as the SwiGLU and rotary embedding kernels from FlashAttention-2.
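The combination of 2D parallelism and ZeRO-1 can be illustrated with a small rank-mapping sketch. The tensor-parallel degree of 2 and the per-shard parameter count are hypothetical (the article gives only the 256-GPU total), and the helpers are illustrative rather than gpt-neox's actual code. Each GPU gets a data-parallel index and a tensor-parallel index; under ZeRO-1, the optimizer state for a tensor-parallel shard is split across its data-parallel group, while parameters and gradients remain replicated within that group.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Coord:
    dp_rank: int  # which data-parallel replica (sees a different slice of each batch)
    tp_rank: int  # which tensor-parallel shard of each weight matrix


def rank_to_coord(rank: int, tp_size: int) -> Coord:
    """Place tensor-parallel ranks next to each other (e.g. on the same node)."""
    return Coord(dp_rank=rank // tp_size, tp_rank=rank % tp_size)


def zero1_shard(num_params: int, dp_rank: int, dp_size: int) -> range:
    """ZeRO stage 1: each data-parallel rank keeps optimizer state (e.g. Adam
    moments) only for its own contiguous slice of the parameters."""
    per_rank = -(-num_params // dp_size)  # ceiling division
    start = min(dp_rank * per_rank, num_params)
    return range(start, min(start + per_rank, num_params))


if __name__ == "__main__":
    world_size = 256          # GPUs, as stated in the text
    tp_size = 2               # hypothetical tensor-parallel degree
    dp_size = world_size // tp_size
    local_params = 1_000_000  # hypothetical parameter count held by one TP shard
    for rank in (0, 1, 2, 255):
        c = rank_to_coord(rank, tp_size)
        shard = zero1_shard(local_params, c.dp_rank, dp_size)
        print(rank, c, f"optimizer state for params [{shard.start}, {shard.stop})")
```

Keeping tensor-parallel ranks adjacent is a common choice because tensor parallelism communicates on every layer and benefits most from fast intra-node links.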

Finally, let's take a look at the performance of Stable Code 3B:

A more detailed technical report on Stable Code 3B will be released later, so stay tuned.

Reference link: https://stability.ai/news/stable-code-2024-llm-code-completion-release?continueFlag=ff896a31a2a10ab7986ed14bb65d25ea
