Source: Tech New Knowledge

Image Source: Generated by Wujie AI

"Past performance does not guarantee future results." This is the fine print on most wealth management products.

In machine learning, the equivalent is called model drift, decay, or obsolescence: the world changes, and a model's performance deteriorates over time. The ultimate yardstick is a model quality metric, which can be accuracy, average error rate, or a downstream business KPI such as click-through rate.
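To make "model quality metric" concrete, here is a minimal Python sketch of how a team might watch for decay: it tracks accuracy over a sliding window of recent predictions and flags the model once that accuracy falls a set margin below the accuracy measured at deployment. The class name, window size, and 0.05 tolerance are illustrative assumptions, not a standard API.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Hypothetical decay monitor: sliding-window accuracy vs. a deployment baseline."""

    def __init__(self, baseline: float, window: int = 100, tolerance: float = 0.05):
        self.baseline = baseline      # accuracy measured when the model shipped
        self.tolerance = tolerance    # how far below baseline counts as decay
        self.outcomes = deque(maxlen=window)  # True/False per recent prediction

    def record(self, prediction, label) -> None:
        # Store whether this prediction matched the ground-truth label.
        self.outcomes.append(prediction == label)

    def accuracy(self) -> float:
        if not self.outcomes:
            return float("nan")
        return sum(self.outcomes) / len(self.outcomes)

    def has_decayed(self) -> bool:
        # Only judge once the window is full, to avoid noisy early alarms.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.accuracy() < self.baseline - self.tolerance
```

For example, a model that shipped at 90% accuracy but gets only 8 of its last 10 predictions right would be flagged, since 0.8 is below 0.9 - 0.05. In practice the labels often arrive late, which is why business KPIs such as click-through rate are used as proxies.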

No model stays effective forever, but the rate of decay varies. Some models can run for years without updates, such as certain computer vision or language models, or any decision system operating in an isolated, stable environment such as controlled laboratory conditions.

Keeping a model accurate means retraining it on fresh data, often daily. This is a paradigmatic flaw of machine learning, and it makes AI deployments far less permanent than software deployments. Software has been built for decades, and even the most advanced AI products still run on early software technology: as long as code remains useful, it can persist byte for byte, however outdated it is.

Yet even the products at the forefront of AI, represented by large models such as ChatGPT, now face questions about whether they are becoming outdated and aging, following a decline in popularity.

Where there's smoke, there's fire. The time users spend on ChatGPT has been falling, from 8.7 minutes in March to 7 minutes in August. This suggests that as the supply of large model tools grows rapidly, ChatGPT, being merely a productivity tool, is not enough to become a favorite of Generation Z, now the mainstream user group.

A dip in popularity alone is not enough to shake the dominant position of OpenAI, which aims to become the app store of the AI era. The more fundamental issue is the aging of ChatGPT as a productivity tool, the main reason many longtime users are losing trust in it. Since May, complaints that GPT-4 performs worse than it used to have been building on the OpenAI forum.

So, is ChatGPT outdated? Will large models like ChatGPT age the way earlier machine learning models did? Until these questions are answered, it will be hard to find a sustainable path for humans and machines amid the ongoing wave of large models.

01 Is ChatGPT Outdated?

According to the latest data from Salesforce, the AI software service provider, 67% of large model users are Generation Z or Millennials, while over 68% of those who rarely use generative AI or lag behind in it are Generation X or Baby Boomers.

This generational gap shows that Generation Z is becoming the mainstream group embracing large models. Kelly Eliyahu, a product marketer at Salesforce, said, "Generation Z is actually the AI generation, and they make up a super user group. 70% of Generation Z is using generative AI, and at least half of them use it weekly or more often."

However, ChatGPT, the leader among large model products, does not stand out with Generation Z.

According to July data from the market research firm Similarweb, ChatGPT's usage share among Generation Z was 27%, down from 30% in April. By comparison, Character.ai, another large model product that lets users design their own AI characters, has a 60% penetration rate among 18- to 24-year-olds.

Thanks to its popularity with Generation Z, Character.ai's iOS and Android apps now have 4.2 million monthly active users in the United States, approaching the 6 million monthly active users of ChatGPT's mobile app.

Unlike ChatGPT's general-purpose conversational AI, Character.AI builds in two core features, personalization and user-generated content (UGC), which give it a richer range of use cases.

On one hand, users can customize AI characters to their own needs, satisfying Generation Z's appetite for personalization. At the same time, these user-created characters are available to everyone on the platform, which fosters an AI community. For example, characters such as Socrates and God, as well as business figures such as Musk created on the platform, have spread widely on social media.

On the other hand, deep personalization combined with group chat also makes users emotionally dependent on the platform. Many public reviews on social media say the chat experience is so realistic that "the characters I created seem to have a life of their own, just like talking to a real person," and that "it is the closest thing to an imaginary friend or guardian angel so far."

Possibly in response to pressure from Character.AI, OpenAI announced on its official website on August 16, 2023, that it had acquired the U.S. startup Global Illumination and brought over its entire team. The small company, only two years old with just eight employees, uses artificial intelligence to build clever tools, digital infrastructure, and digital experiences.

The acquisition likely signals that OpenAI intends to make the digital experience of its large models richer.

02 Aging of Artificial Intelligence

At the level of digital experience, ChatGPT's aging has weakened its appeal as a way to pass the time. As a productivity tool, meanwhile, the fluctuating accuracy of its output is eroding user stickiness.

Earlier, a Salesforce survey found that nearly 60% of large model users believed they were mastering the technology as they accumulated time using it. However, the technology they are mastering is itself changing over time.

As early as May, many longtime users began complaining on the OpenAI forum that GPT-4 "struggles with things it used to do well." According to a July report by Business Insider, many longtime users described GPT-4 as "lazy" and "stupid" compared with its earlier reasoning and other output.

With no official response from OpenAI, people began speculating about why GPT-4's performance had declined. Could it be OpenAI's earlier cash flow problems? Mainstream speculation centered on cost optimization: some researchers claimed that OpenAI might be serving smaller models behind the API to cut the cost of running ChatGPT.

However, this possibility was later denied by Peter Welinder, the Vice President of Product at OpenAI. He stated on social media, "We did not make GPT-4 dumber. One current hypothesis is that when you use it more frequently, you start to notice issues that you didn't notice before."

In other words, more people using it for longer may simply have exposed ChatGPT's limitations. To test this hypothesis, researchers ran more rigorous experiments on how ChatGPT's performance changes over time.

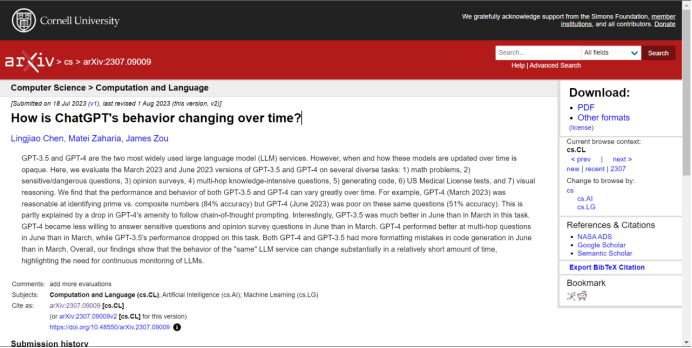

A research paper titled "How is ChatGPT's behavior changing over time?", published in July by researchers from Stanford University and the University of California, Berkeley, showed that the behavior of the same large model service can indeed change significantly within a relatively short period.

The researchers compared the March and June 2023 versions of two models, GPT-3.5 and GPT-4, collecting and evaluating their output on four common tasks: math problems, answering sensitive questions, code generation, and visual reasoning. The results showed that the performance and behavior of both GPT-3.5 and GPT-4 can vary over time.

In mathematical ability, GPT-4 (March 2023) was quite good at telling prime numbers from composite numbers (84% accuracy), but on the same problems in June 2023 it performed poorly (51% accuracy). Interestingly, GPT-3.5 did much better on this task in June than in March.
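The prime-versus-composite task is easy to score automatically because the ground truth can be computed exactly. A minimal sketch, assuming the model's replies have already been parsed into "yes" (prime) or "no" (composite); the helper names and sample replies are hypothetical and are not taken from the paper's evaluation code.

```python
def is_prime(n: int) -> bool:
    """Exact ground truth by trial division (fine for benchmark-sized numbers)."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    d = 3
    while d * d <= n:
        if n % d == 0:
            return False
        d += 2
    return True


def score(answers: dict[int, str]) -> float:
    """Accuracy of parsed 'yes'/'no' replies, keyed by the number that was asked about."""
    if not answers:
        raise ValueError("no answers to score")
    correct = sum(
        (reply.strip().lower() == "yes") == is_prime(n)  # reply agrees with ground truth
        for n, reply in answers.items()
    )
    return correct / len(answers)


# Hypothetical replies from one model snapshot.
snapshot = {7: "yes", 9: "no", 11: "no", 15: "no"}
print(score(snapshot))
```

Running the same fixed question set against two snapshots of a model and comparing their scores is the basic idea behind a March-versus-June comparison like the one the researchers made.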

On sensitive questions, GPT-4 was less willing to answer in June than in March; on coding, both GPT-4 and GPT-3.5 made more errors in June than in March. The researchers concluded that while ChatGPT's performance does not change linearly with time, its accuracy does fluctuate.

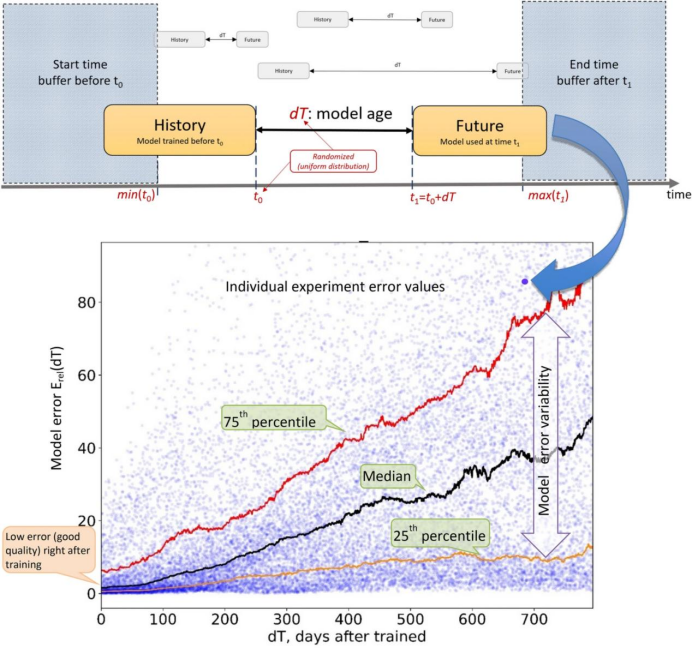

This is not just a problem for ChatGPT but a common issue for AI models in general. According to a 2022 study by MIT, Harvard University, the University of Monterrey, and the University of Cambridge, 91% of the machine learning models studied deteriorated over time, a phenomenon the researchers called "AI aging."

For example, Google Health once developed a deep learning model that detected retinal disease from eye scans. The model reached 90% accuracy during training but could not deliver accurate results in real-world use, mainly because it had been trained on high-quality lab data, while real-world eye scans were of lower quality.

Because of this aging, the AI technologies that made it out of the lab and into popular use were mostly single-purpose ones, chiefly speech recognition in products such as smart speakers. A 2018 U.S. Census Bureau survey of 583,000 U.S. companies found that only 2.8% were using machine learning to benefit their operations.

With the breakthrough capabilities of large models, however, machine learning models now age far more slowly and are gradually moving out of the lab to a wider audience. Even so, the unpredictability that accompanies their emergent capabilities leaves many people doubting whether ChatGPT can keep improving over the long term.

03 Anti-aging in the Black Box

The essence of artificial intelligence aging is actually a paradigmatic flaw in machine learning models.

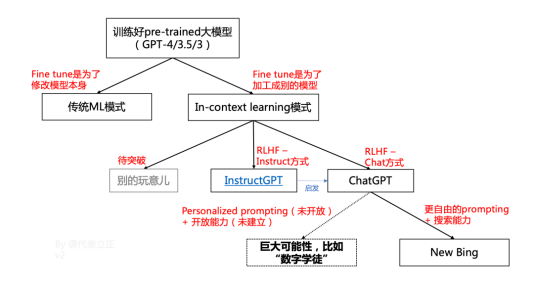

In the past, machine learning models were trained on the correspondence between a specific task and specific data. The model was shown a large number of examples to learn what counts as good and bad in that domain, and its weights were then adjusted to produce appropriate results. Under this approach, every new task, or any significant shift in the data distribution, requires retraining the model.
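One common way to decide that a data distribution has "changed significantly" is to compare a feature's values in the training data with its values in live traffic. The sketch below computes the two-sample Kolmogorov-Smirnov statistic (the largest gap between the two empirical cumulative distributions) in plain Python; the function names and the 0.2 retraining threshold are assumptions chosen for illustration, not a recommended setting.

```python
def ks_statistic(reference: list[float], live: list[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic: max gap between the empirical CDFs."""
    a, b = sorted(reference), sorted(live)
    i = j = 0
    gap = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        # Advance past every value <= x in both samples so ties are counted together.
        while i < len(a) and a[i] <= x:
            i += 1
        while j < len(b) and b[j] <= x:
            j += 1
        # i/len(a) and j/len(b) are now the two empirical CDFs evaluated at x.
        gap = max(gap, abs(i / len(a) - j / len(b)))
    return gap


def needs_retraining(reference: list[float], live: list[float], threshold: float = 0.2) -> bool:
    """Hypothetical policy: retrain once live data has drifted past `threshold`."""
    return ks_statistic(reference, live) > threshold
```

Identical samples give a statistic of 0, and samples that do not overlap at all give 1. Libraries such as SciPy provide a tested version with p-values (`scipy.stats.ks_2samp`); the hand-rolled one here just keeps the sketch dependency-free.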

With an endless stream of new tasks and new data, the model has to be refreshed again and again. Yet each refresh can suddenly make the model worse at things it used to do well, which further limits its applications. In short, the data flywheel of traditional machine learning works by iterating the model: new models are built to solve new problems.
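Because a refreshed model can regress on tasks the old one handled well, teams often gate each refresh on a per-task comparison before deploying it. A minimal sketch, assuming per-task scores for the old and new model are already available; the function names, task names, and 0.02 tolerance are hypothetical.

```python
def regressions(old_scores: dict[str, float],
                new_scores: dict[str, float],
                tolerance: float = 0.02) -> list[str]:
    """Tasks where the refreshed model scores worse than the old one by more than `tolerance`."""
    return sorted(
        task
        for task, old in old_scores.items()
        # A task missing from the new evaluation counts as a score of 0.
        if new_scores.get(task, 0.0) < old - tolerance
    )


def should_deploy(old_scores: dict[str, float],
                  new_scores: dict[str, float],
                  tolerance: float = 0.02) -> bool:
    """Deploy the refreshed model only if it regresses on no previously scored task."""
    return not regressions(old_scores, new_scores, tolerance)


old = {"intent": 0.90, "spam": 0.80}
new = {"intent": 0.95, "spam": 0.70}
print(regressions(old, new), should_deploy(old, new))
```

Here the refreshed model improves on one task but drops on the other, so the gate blocks it. This is exactly the trade-off described above, where a new model solves a new problem at the cost of an old one.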

Large models represented by ChatGPT, however, have shown a capacity for autonomous learning that breaks this paradigm. Traditional machine learning first "ate" data and then "imitated" it, based on correspondences; large models like ChatGPT are first "taught" with data and then "understand" it, based on its "intrinsic logic."

In that case, the large model itself does not have to change and could, in theory, stay young. However, some industry professionals point out that large model intelligence emerged suddenly, in a nonlinear and unpredictable way, and whether large models will age over time is just as unpredictable.

In other words, having displayed intelligence that theory struggles to explain, ChatGPT has also begun to behave in ways that are hard to predict.

On the black-box nature of "emergence," Zhang Bo, academician of the Chinese Academy of Sciences and honorary dean of the Institute for Artificial Intelligence at Tsinghua University, said at the Baichuan2 open-source large model launch on September 6th: "So far, the world is completely in the dark about the theoretical working principles of large models and the phenomena they produce, and every conclusion ends up being attributed to emergence. So-called emergence is a way of letting ourselves off the hook. When you can't explain something, you call it emergence. In fact, it shows that we don't understand it at all."

In his view, why large models hallucinate comes down to how ChatGPT's language generation differs from that of humans. The fundamental difference is that the language ChatGPT generates is externally driven, while human language is driven by the speaker's own intent, so the correctness and soundness of ChatGPT's output cannot be guaranteed.

After rounds of hype and bandwagoning, the challenge for those building foundation models for productivity will be keeping their products' output reliable and accurate over time.

Entertainment products built on large models are a different story. As Noam Shazeer, co-founder of Character.AI, told The New York Times, "These systems are not designed for truth. They are designed for plausible conversation." In other words, they are confident bluffers. The great wave of large models has already begun to diverge.

Reference materials:

- Gizmodo: Is ChatGPT Getting Worse?
- TechCrunch: AI app Character.ai is catching up to ChatGPT in the US
- Machine Learning Monitoring: Why You Should Care About Data and Concept Drift
- Miss M's Habit Record: Five Most Important Questions About ChatGPT
- Institute for AI Governance at Tsinghua University: Research on large models is urgent, we cannot explain it and say it is emergent
