In 2025, humanoid robots are moving from science fiction to reality. From Tesla's Optimus to Figure AI's Figure 01, the capabilities of general-purpose humanoid robots are expanding rapidly with the support of large language models. According to Goldman Sachs, the humanoid robot market could reach $154 billion by 2035. A market of this potential scale is attracting the world's top tech companies and brightest minds.

However, as the "limbs" of robots become increasingly capable, a more fundamental question arises: how do we build a "brain" that is intelligent, open, and secure enough? As thousands of robots enter homes, hospitals, and cities, how will they work together, exchange value, and integrate seamlessly with human society?

Stanford University professor and OpenMind founder Jan Liphardt provided his answer. After securing $20 million in funding led by Pantera Capital in August 2025, OpenMind pressed the fast-forward button, releasing a series of products from the underlying operating system to upper-level payment protocols, gradually outlining the complete blueprint for its "robot brain."

OpenMind founder Jan Liphardt

OpenMind's core business is providing cloud cognitive services to enterprises in a SaaS model. However, the team recognized early that as robots become independent economic participants, blockchain will play a crucial role in areas such as payment systems, identity verification, data privacy, and collaborative governance.

OpenMind's recent collaboration with stablecoin issuer Circle and its deployment of robot charging stations on the streets of San Francisco are the first concrete steps toward this vision. Robots can independently pay for charging using USDC, which may signal the dawn of the "Machine Economy" era.

At the same time, OpenMind is creating a dedicated app store for robots, allowing users to download applications and skills to their robots in one place, much as they customize their phones through the Apple App Store or Google Play Store. The app store launched last week.

In this exclusive interview, we explore the philosophy of building a robot "brain," the design of the modular operating system OM1, and how the FABRIC protocol and blockchain technology could enable efficient collaboration among machines and between machines and humans. Liphardt shares OpenMind's technology roadmap and offers insights on key issues such as developer ecosystems, teleoperation, and data privacy.

Building a "Bank Account" for Robots

In December 2025, OpenMind and stablecoin issuer Circle jointly announced the launch of a robot autonomous payment system based on the x402 protocol. As robots' capabilities improve, they will no longer just be tools executing tasks but will begin to play the role of autonomous economic entities. They will need to purchase computing power, data, skills, and even hire other robots or humans to complete complex tasks.

To achieve this, a financial system designed specifically for machines, requiring no human intervention, becomes indispensable. The traditional banking system is clearly not prepared for this, while cryptocurrency and blockchain technology, with their inherent digital and decentralized characteristics, become the most natural choice.

BlockBeats: What were you doing before founding OpenMind? What prompted you to dive into this venture?

Jan: I am an engineering professor at Stanford University, but I am currently fully dedicated to OpenMind. I founded this company because I believe that traditional robot software stacks are not suitable for complex and dynamic environments like hospitals and homes.

OpenMind is a U.S. tech company, and its core business is not crypto; it is an enterprise SaaS company focused on cloud cognition. Our business model is similar to that of other enterprise SaaS companies: we generate revenue primarily by offering standard cloud interfaces.

As for blockchain, it has some interesting characteristics in tracking information and building financial systems. Looking ahead, we foresee autonomous machines interacting with other machines and even humans to complete tasks. Blockchain provides a possible technical solution here, especially regarding machine payment systems, identity, collaboration, and governance.

BlockBeats: OpenMind recently announced a partnership with Circle on the x402 protocol. Can you explain how this collaboration came about? Why is it so important?

Jan: In fact, as early as last May, when the Coinbase Developer Platform had just released x402, we were already among the first partners to support it on our robots. We integrated the payment system directly into the robot's "brain" in our software, so that robots can interact with external infrastructure.

We have been thinking about what a payment system would look like if it were designed around machines rather than humans. This question ultimately led to our collaboration with Circle. The core idea is that machines do not have pockets, fingerprints, eyes, or passports, but they are extremely good at writing code and using APIs.

Media coverage of OpenMind's collaboration with Circle

Therefore, from our perspective, for a robot, purchasing goods and services through a digital payment system is often more natural than using credit cards or cash. We are building a location-based payment system with Circle. When two machines are close to each other, they can directly exchange money.

A practical example is the charging stations we set up for autonomous machines on the sidewalks of San Francisco. When a robot approaches, the system detects its presence, the charger activates, and the robot can use the stablecoin USDC to purchase electricity.
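
The charging flow Jan describes (detect the robot, activate the charger, settle in USDC) can be sketched as a toy simulation. Everything below is an assumption made for illustration: the `Wallet` and `ChargingStation` classes, the 2-metre detection radius, and the price per kWh are hypothetical, balances are in-memory numbers rather than on-chain USDC, and the code does not use the real x402 protocol or any Circle API.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class Wallet:
    """Toy stablecoin wallet; the balance is a plain number, not on-chain state."""
    owner: str
    balance_usdc: Decimal

    def pay(self, payee: "Wallet", amount: Decimal) -> None:
        # Refuse invalid or unaffordable payments before moving any funds.
        if amount <= 0:
            raise ValueError("amount must be positive")
        if amount > self.balance_usdc:
            raise ValueError("insufficient funds")
        self.balance_usdc -= amount
        payee.balance_usdc += amount


@dataclass
class ChargingStation:
    """Hypothetical sidewalk charger that sells energy to nearby robots."""
    wallet: Wallet
    price_per_kwh: Decimal   # set by the operator (illustrative value only)
    range_m: float = 2.0     # hypothetical detection radius in metres

    def detects(self, distance_m: float) -> bool:
        return distance_m <= self.range_m

    def quote(self, kwh: Decimal) -> Decimal:
        return self.price_per_kwh * kwh

    def charge(self, robot_wallet: Wallet, distance_m: float, kwh: Decimal) -> Decimal:
        # 1. The station only activates when a robot is close enough.
        if not self.detects(distance_m):
            raise RuntimeError("robot not in range")
        # 2. The robot pays the quoted price from its own wallet.
        robot_wallet.pay(self.wallet, self.quote(kwh))
        # 3. Energy is delivered only after payment succeeds.
        return kwh
```

For example, a robot holding 5.00 USDC that buys 2 kWh at a hypothetical 0.40 USDC per kWh ends with 4.20 USDC, and the station's wallet gains 0.80 USDC. Paying before delivery keeps the sketch simple; a real system would also need to handle refunds and interrupted charging.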

BlockBeats: Why do you think it is crucial for robots to have this autonomous purchasing capability?

Jan: Take autonomous taxis (robotaxis) as an example: they clearly need solid payment infrastructure. Of course, they can use fiat currency, but that feels cumbersome; they could also use credit cards, but that seems outdated. NFC-based protocols are more interesting, but when we "communicate" with very advanced robots, we repeatedly hear that they are happy to use cryptocurrency as a payment tool.

These machines are inherently good at handling digital infrastructure, and in practice, cryptocurrency may be extremely convenient for autonomous machines to make payments.

BlockBeats: So this is more of an advantage than a hard requirement. The payment system for robots does not necessarily have to use cryptocurrency, but it is a more elegant solution?

OpenMind: If a humanoid robot walks into a bank, the bank will sound an alarm. Human-centered banks have no real conceptual model for an autonomous physical machine that can manage funds and make independent decisions.

Traditional banks would ask for your name, social security number, passport, address, place of birth, etc., which are meaningless for an autonomous humanoid robot.

Institutions like Bank of America currently do not have the concept of providing bank accounts or credit cards for non-biological thinking machines. Perhaps this will change in the future, and banks will expand services to non-biological clients. But today, if you are an intelligent machine, the only viable option is cryptocurrency.

BlockBeats: What is the cost of deploying such a charging station?

OpenMind: The hardware cost is about three hundred dollars. As for the electricity cost, it depends on the operator and is not determined by us. We are building the software and infrastructure.

But this is just a small example. The broader opportunity is that as machines awaken and become smarter, they will want to buy and sell many different things: real-time data, new models and skills, computing, and storage. They may accept jobs and tasks and work closely with humans.

All of this requires good infrastructure to coordinate payments and collaboration between machines and humans. We are not a charging station company. We are working hard to provide intelligent machines with the full set of capabilities they need to be safe and useful to people anywhere.

OM1 and FABRIC: From "Individual Intelligence" to "Collective Collaboration"

To truly integrate robots into society, a powerful "brain" is needed to understand the world, namely an advanced operating system. OpenMind's OM1 aims to empower individual robots with unprecedented environmental perception, language interaction, and spatial reasoning capabilities through a modular multi-model architecture.

However, true intelligence emerges from collaboration. The vision of the FABRIC protocol is even grander: it hopes to become the "TCP/IP" of the robot world, allowing machines of different brands and forms to communicate and collaborate freely like humans, forming an intelligent physical network.

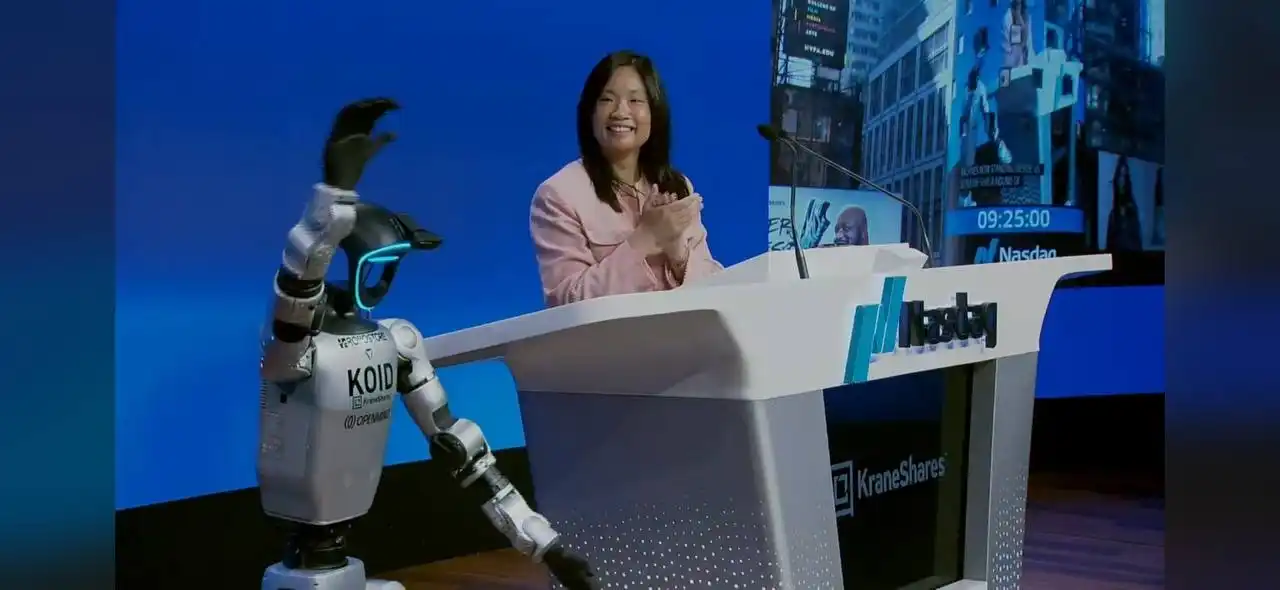

A robot equipped with OpenMind OM1 witnesses the launch of the first humanoid robot ETF KraneShares KOID

BlockBeats: For readers who are not familiar, can you explain the OM1 operating system and the FABRIC protocol? Let's start with OM1.

Jan: OM1 is a modular operating system designed for human-facing robots. It is not suitable for industrial robots but is intended for those that interact with people and children, living in your home or functioning in hospitals and schools.

These robots need to understand their spatial environment, speak multiple languages, comprehend the organization of a house, and reason in space. Traditional robot operating systems (ROS) do not actually provide these capabilities.

The design of OM1 is modular, like LEGO bricks that can be assembled together. In practice, we run about 5 to 15 models in parallel, each responsible for different capabilities such as vision, hearing, speech generation, and fusing data from multiple sensors into a continuous view of the environment, including people, pets, rooms, and other aspects of the surroundings.
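
The idea of several models running side by side, each contributing one capability to a shared picture of the surroundings, can be illustrated with a minimal sketch. This is not OM1's actual architecture or API: the module names (`vision`, `hearing`, `localization`), their outputs, the sensor frame layout, and the `fuse` function are hypothetical stand-ins, and plain Python functions take the place of real models.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# A "module" takes one raw sensor frame and returns its part of the world view.
Module = Callable[[dict], dict]


def vision(frame: dict) -> dict:
    # Stand-in for a vision model: count people and list pets among camera labels.
    labels = frame.get("camera", [])
    pets = [label for label in labels if label in ("dog", "cat")]
    return {"people": labels.count("person"), "pets": pets}


def hearing(frame: dict) -> dict:
    # Stand-in for a speech model: pass through the transcribed utterance.
    return {"utterance": frame.get("microphone", "")}


def localization(frame: dict) -> dict:
    # Stand-in for a spatial model: report which room the robot is in.
    return {"room": frame.get("room_hint", "unknown")}


def fuse(modules: Dict[str, Module], frame: dict) -> dict:
    """Run every module on the same frame in parallel and merge the results."""
    with ThreadPoolExecutor(max_workers=max(1, len(modules))) as pool:
        futures = {name: pool.submit(fn, frame) for name, fn in modules.items()}
        # Each module's output is stored under its own name in the fused view.
        return {name: future.result() for name, future in futures.items()}
```

Because every module shares the same signature, adding or swapping a capability means adding or replacing one entry in the `modules` dictionary, which is the LEGO-like property the answer describes.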

A robotic dog equipped with OpenMind developer tools

FABRIC, on the other hand, is still at a very early stage and has not yet been built. It will take a long time, and we will be only one of many contributors. If OM1 is about making a single machine smarter, FABRIC is about enabling machines to work together, whether with other machines or with humans.

BlockBeats: What was the original intention behind building the FABRIC protocol?

Jan: The initial trigger came from a real-world moment. One of our humanoid robots was crossing the street, and we saw a Waymo (self-driving car) approaching. Waymo is a robotic car, and we were curious about what would happen at the crosswalk.

The outcome was smooth. Waymo stopped. It likely recognized the humanoid robot as a human, waiting for it to cross before continuing on its way.

This made us think: if the Waymo could know about the existence of the humanoid robot, and the humanoid robot could also know about the other robot, the self-driving taxi, wouldn't that be useful?

This prompted us to start thinking about a system that would allow one machine to communicate with another, completely different machine, one that comes from a different manufacturer and has a different form, whether it has wheels, arms, or legs. We are looking for something akin to a "phone" or "Zoom" for machines, a way for physically proximate machines to work together.

BlockBeats: You mentioned that FABRIC will take a long time to build. Why is that?

OpenMind: There are many reasons. Machines come in various forms, with wheels, legs, or claws. There are also many manufacturers, and the types of data machines want to share are diverse. In addition, each region has its own demands, including different languages, capabilities, and use cases.

At a foundational level, you can build general infrastructure relatively quickly, but building everything that is needed will take a lot of work from many people in different places with different skills.

BlockBeats: When an AI product runs multiple models, the token costs can be very high. Will this become a cost issue for OM1 users and developers?

OpenMind: Cost is always a concern, but there are many ways to address it. Some of the models we run are open-source, and many of today's top-performing models are also open-source, so the costs are essentially just computation and power. Some of our models are very small and simple, for example, models focused on safety to ensure that humanoid or quadruped robots do not trip over shoes, carpets, or stairs.

Overall, we can run most of the stack on a single NVIDIA A4 or on a Mac with an M4- or M5-class chip. In terms of cost, this is roughly equivalent to running something on your own laptop. We do not believe that cost will be a major barrier.

Developer Ecosystem: How does BrainPack break the development bottleneck for robots?

In the era of software-defined hardware, a thriving ecosystem is key to the spread of a technology, just as the success of the iPhone relies on its vast App Store developer community. For humanoid robots, however, high hardware costs, fragmented development systems, and the lack of intelligent systems have become bottlenecks for many robot developers.

OpenMind is building a robotic software ecosystem aimed at breaking this bottleneck, comprising the intelligent operating system OM1, the collaborative network FABRIC, and BrainPack, a "plug-and-play brain" for robots. In addition, OpenMind has just launched the first app store for robots, where users can download applications and skills to their robots in one place, much as they customize their phones through the Apple App Store or Google Play Store.

BlockBeats: In your view, what is the current state of the robot developer ecosystem? What might be the biggest obstacles?

Jan: Almost everyone is enthusiastic about powerful and safe humanoid robots, from students in robotics classes to senior developers at Meta or Google. The problem is not a lack of enthusiasm; there are two obstacles. First, very few advanced humanoid robots are in practical use. Second, almost all robots currently rely on custom, poorly documented methods for accessing data and internal state and for controlling their own behavior.

There is currently a near-complete lack of general systems for adding and improving advanced features in humanoid robots. Many fundamental issues, such as battery management and navigation, can be addressed with existing software like ROS2. But there are almost no solutions at present for getting robots to understand their spatial environment, entertain, learn new skills, and perform well in highly dynamic environments like homes, hospitals, and schools.

OpenMind hopes to help bridge this gap by developing open-source software for social robots, enabling developers around the world to easily understand, learn, and contribute to this rapidly evolving field.

BlockBeats: You described BrainPack as a small step toward the "iPhone moment" for humanoid robots. What exactly does BrainPack bring?

Jan: One major issue today is the vast differences between different humanoid robots. For software developers, just learning the specifics of one robot can take a long time before they can write something useful.

BrainPack is designed to solve this problem. You can think of it as a backpack with a computer that can connect to the robot. If your software runs on BrainPack, we abstract away the hardware differences between different robots. This means developers can focus on functionality without worrying about each robot's unique API or SDK.

BrainPack installed on a robot

If the software runs well on BrainPack, it is likely to run on various robots, whether they have two legs, four legs, wheels, or are tall or short. BrainPack also comes with a set of standardized sensors, so developers do not have to deal with different sensor formats or data protocols. Additionally, BrainPack connects directly to our cloud infrastructure, making it easy to leverage remote computing.
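
The abstraction Jan describes, where a skill is written once and runs on robots with different bodies, resembles a classic adapter pattern. A minimal sketch under that assumption follows. The `RobotBody` interface, the `Quadruped` and `Wheeled` adapters, and the `greet_visitor` skill are hypothetical names invented for illustration; they are not BrainPack's real SDK.

```python
from abc import ABC, abstractmethod
from typing import List


class RobotBody(ABC):
    """Hypothetical common interface that hides each robot's own API."""

    @abstractmethod
    def move(self, forward_m: float) -> str:
        """Move forward by the given distance and describe the action taken."""

    @abstractmethod
    def say(self, text: str) -> str:
        """Speak the given text and describe the action taken."""


class Quadruped(RobotBody):
    # Adapter for a four-legged robot: movement is translated into a trot.
    def move(self, forward_m: float) -> str:
        return f"trot {forward_m:.1f} m"

    def say(self, text: str) -> str:
        return f"[speaker] {text}"


class Wheeled(RobotBody):
    # Adapter for a wheeled robot: the same request becomes a drive command.
    def move(self, forward_m: float) -> str:
        return f"drive {forward_m:.1f} m"

    def say(self, text: str) -> str:
        return f"[speaker] {text}"


def greet_visitor(body: RobotBody) -> List[str]:
    """A skill written against the interface only, not against any one robot."""
    return [body.move(1.0), body.say("Welcome!")]
```

Calling `greet_visitor(Quadruped())` and `greet_visitor(Wheeled())` runs the same skill on both bodies; only the adapter decides how "move forward one metre" is carried out.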

BlockBeats: Besides charging stations, what other infrastructure might OpenMind deploy in the future to showcase the capabilities of OM1 and the FABRIC protocol?

OpenMind: Another example is the work we have already started with NEAR AI. This project uses NVIDIA H100 and H200 GPUs to achieve confidential computing.

Confidential computing means that robots can run models anywhere on Earth while trusting that the data transmitted back and forth remains confidential. Therefore, a robot in San Francisco can have its "brain" hosted thousands of miles away. This also means that those with the right hardware (H100 and H200) can provide confidential computing nodes for AI and robotics.

Trust, Privacy, and New Economic Models

The implementation of technology ultimately has to return to society. Beyond technical challenges, the large-scale adoption of robots also faces structural social issues such as trust, safety, regulation, privacy, and public acceptance. OpenMind believes that open source is the cornerstone of building trust, allowing people to "see" how the robot's brain works. It also sees collaboration with projects like NEAR, using confidential computing to protect data privacy, as key to gaining public trust. A future in which robots are deeply involved will inevitably give rise to new job roles and new models of economic organization.

BlockBeats: You mentioned on X that teleoperation might become a real profession in the future. Can you elaborate on this idea for our readers?

Jan: From a very practical perspective, today's robots still need a lot of help. They sometimes get stuck, sometimes do not know the correct answer, and sometimes make mistakes.

In these cases, having a human nearby, whether physically or through close monitoring, is extremely useful. Another aspect is trust. Many people are not comfortable with robots making completely autonomous decisions, so having a "human in the loop" helps people feel more at ease.

Moreover, teleoperation creates new opportunities. You no longer need to be in a specific location to engage in certain types of work. Depending on your skills, you can help operate or supervise a robot that is far away, even on a different continent. This opens up a wide range of new economic and professional opportunities.

BlockBeats: What plans does OpenMind have to help regions or societies better accept humanoid robots?

Jan: Trust is fundamental. If people feel scared, the pace of adoption will be slow. That is why our core software is open-source. We want people to see the inner workings of the robot's "brain" and understand how it operates.

Another unresolved issue is ownership. Will robots be purchased by employers? Or will individuals buy them for their homes? Or will they be shared by communities? There may emerge models similar to car-sharing ownership, where a group purchases a robot and reaps the rewards from the work it performs.

We do not yet know which model will dominate, but there is plenty of room for new ways to organize work and create value around robots.

BlockBeats: Let's return to the privacy issue. You mentioned the collaboration with NEAR; can you clarify why this partnership is important?

Jan: The core technology here is confidential computing, which is directly built into NVIDIA H100 and H200 GPUs. In principle, anyone with these GPUs can connect them to the internet and provide secure computing services to others.

NEAR happens to be very fast, highly capable, and deeply interested in building the infrastructure needed to make this access practical and scalable. That is the reason for the collaboration. But fundamentally, confidential computing is a capability that every H100 and H200 GPU possesses.

BlockBeats: How large is the OpenMind team now?

OpenMind: We currently have about twenty people, distributed between San Francisco and Hong Kong.

BlockBeats: What do you expect to be the main products or revenue drivers for OpenMind in the next three years?

OpenMind: Our fastest-growing revenue comes from enterprise AI, particularly through cloud-based model provision and robot-centric computing services. Customers pay directly for these services. Another important area is revenue sharing with robotics companies. We collaborate with them to co-develop products that are sold in regions such as Europe, the Middle East, and the United States.

BlockBeats: Many people are concerned about the scale of capital expenditure in today's AI field. Do you think OpenMind needs a large amount of funding to continue its development, or can it achieve self-sustainability relatively quickly?

OpenMind: This is a larger question, but we do not share the view that building useful models requires hundreds of billions of dollars.

We have already seen some strong examples, such as DeepSeek, which had a development budget far smaller than that of models like ChatGPT. Based on our experience, many of the models we need can be built with much less capital than people typically assume.

Therefore, we are cautiously optimistic that making meaningful progress in robotics or AI does not necessarily require spending hundreds of billions or even trillions of dollars on computing resources.

BlockBeats: Finally, what would you like to say to the developer or user community in China?

OpenMind: This is an extremely rare moment. A whole new technology is emerging that enables machines to do things that only humans could do before. This will have a profound impact on education, healthcare, manufacturing, and many other areas of life.

For software developers, the opportunity is no longer just to build applications for mobile phones, but to build applications for thinking machines. It may still be too early, but progress is happening very quickly. I strongly encourage developers to learn about robotic operating systems, humanoid robot platforms, and how to build applications for them to be well-prepared for the significant advancements that are coming.
