Written by: KarenZ, Foresight News

Did Musk change Twitter's recommendation system from "manually crafted rules and most heuristic algorithms" to "purely based on AI large models that you might like"?

On January 20, Twitter (X) officially disclosed its new recommendation algorithm, which is the logic behind the "For You" timeline on Twitter's homepage.

In simple terms, the current algorithm mixes "content from the people you follow" with "content from across the internet that might appeal to you," sorting it based on your previous likes, comments, and other actions on X, going through two rounds of filtering, and ultimately becoming the recommended information stream you see.

Here is the core logic translated into plain language:

Building a Profile

The system first collects users' contextual information to establish a "profile" for subsequent recommendations:

- User behavior sequence: Historical interaction records (likes, retweets, time spent, etc.).

- User characteristics: Follow list, personal preference settings, etc.

Where Does the Content Come From?

Every time you refresh the "For You" timeline, the algorithm looks for content from the following two sources:

- Familiar Circle (Thunder): Tweets from the people you follow.

- Stranger Circle (Phoenix): Posts from users you don’t follow, but the AI will fish out posts that you might be interested in from the vast sea of users based on your tastes (even if you don’t follow the author).

These two piles of content are mixed together to form the candidate tweets.

Data Completion and Initial Filtering

After pulling in thousands of posts, the system retrieves the complete metadata of the posts (author information, media files, core text), a process called Hydration. Then, a quick cleaning is performed to remove duplicate content, old posts, posts made by the user themselves, content from blocked authors, or content containing muted keywords.

This step is to save computational resources and avoid ineffective content entering the core scoring phase.

How is Scoring Done?

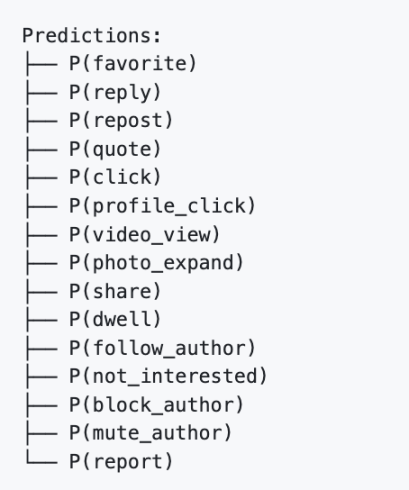

This is the most critical part. Based on the Phoenix Grok Transformer model, the system focuses on each of the remaining candidate posts after filtering, calculating the probability of you taking various actions on them. This is a game of adding and subtracting points:

Positive points (positive feedback): The AI thinks you might like, retweet, reply, click on images, or visit the author's homepage.

Negative points (negative feedback): The AI thinks you might block the author, mute, or report.

Final score = (like probability × weight) + (reply probability × weight) - (block probability × weight)…

It is worth noting that in the new recommendation algorithm, the Author Diversity Scorer usually intervenes after the AI calculates the final score. When it detects multiple pieces of content from the same author among a batch of candidate posts, this tool will automatically "lower" the scores of that author's subsequent posts, allowing you to see a more diverse range of authors.

Finally, based on the sorted scores, the posts with the highest scores are selected.

Secondary Filtering

The system checks the highest-scoring posts again, filtering out any that violate rules (such as spam, violent content), deduplicating multiple branches of the same thread, and finally sorting them from high to low score to form the information stream you see.

Summary

X has eliminated all manually designed features and most heuristic algorithms from its recommendation system. The core advancement of the new algorithm lies in "allowing AI to learn user preferences autonomously," achieving a leap from "telling machines what to do" to "letting machines learn how to do it themselves."

First, the recommendations are more accurate, with "multi-dimensional predictions" that better align with real needs. The new algorithm relies on the Grok large model to predict various user behaviors—not only calculating "will you like/retweet," but also "will you click on links," "how long will you stay," "will you follow the author," and even predicting "will you report/block." This refined assessment has brought the alignment of recommended content with users' subconscious needs to an unprecedented level.

Secondly, the algorithm mechanism is relatively fair, breaking the "big account monopoly" curse to some extent, giving new and smaller accounts more opportunities: the previous "heuristic algorithms" had a fatal flaw: big accounts, due to their historical high interaction volume, could gain high exposure regardless of the content, while new accounts, even with quality content, were buried due to "lack of data accumulation." The candidate isolation mechanism allows each post to be scored independently, unrelated to whether "other content in the same batch is a hit." At the same time, the Author Diversity Scorer will also reduce the spamming behavior of the same author’s subsequent posts in the same batch.

For X Company: This is a cost-cutting and efficiency-boosting measure, trading computational power for human resources, using AI to enhance retention. For users, we are facing a "super brain" that constantly understands human emotions. The more it understands us, the more we rely on it, but also because it understands us so well, we will become more deeply trapped in the "information cocoon" woven by algorithms and more easily become targets for emotional content.

免责声明:本文章仅代表作者个人观点,不代表本平台的立场和观点。本文章仅供信息分享,不构成对任何人的任何投资建议。用户与作者之间的任何争议,与本平台无关。如网页中刊载的文章或图片涉及侵权,请提供相关的权利证明和身份证明发送邮件到support@aicoin.com,本平台相关工作人员将会进行核查。