Special thanks to Balvi volunteers for feedback and review

In April this year, Daniel Kokotajlo, Scott Alexander and others released what they describe as "a scenario that represents our best guess about what [the impact of superhuman AI over the next 5 years] might look like". The scenario predicts that by 2027 we will have made superhuman AI and the entire future of our civilization hinges on how it turns out: by 2030 we will get either (from the US perspective) utopia or (from any human's perspective) total annihilation.

In the months since then, there has been a large volume of responses, with varying perspectives on how likely the scenario that they presented is. For example:

- https://www.lesswrong.com/posts/gyT8sYdXch5RWdpjx/ai-2027-responses

- https://www.lesswrong.com/posts/PAYfmG2aRbdb74mEp/a-deep-critique-of-ai-2027-s-bad-timeline-models (see also: Zvi's response)

- https://garymarcus.substack.com/p/the-ai-2027-scenario-how-realistic

- https://x.com/eli_lifland/status/1908671788630106366

- Podcast with Dwarkesh https://www.youtube.com/watch?v=htOvH12T7mU (see also: Zvi's response)

Of the critical responses, most tend to focus on the issue of fast timelines: is AI progress actually going to continue and even accelerate as fast as Kokotajlo et al say it will? This is a debate that has been happening in AI discourse for several years now, and plenty of people are very doubtful that superhuman AI will come that quickly. Recently, the length of tasks that AIs can perform fully autonomously has been doubling roughly every seven months. If you assume this trend continues without limit, AIs will be able to operate autonomously for the equivalent of a whole human career in the mid-2030s. This is still a very fast timeline, but much slower than 2027. Those with longer timelines tend to argue that there is a category difference between "interpolation / pattern-matching" (done by LLMs today) and "extrapolation / genuine original thought" (so far still only done by humans), and automating the latter may require techniques that we barely have any idea how to even start developing. Perhaps we are simply replaying what happened when we first saw mass adoption of calculators, wrongly assuming that just because we've rapidly automated one important category of cognition, everything else is soon to follow.
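As a rough check on the doubling-time extrapolation above, the arithmetic can be sketched in a few lines. Only the seven-month doubling time comes from the text; the starting horizon of about one hour in 2025 and the 80,000-hour career (40 years at 2,000 working hours per year) are illustrative assumptions.

```python
import math

DOUBLING_MONTHS = 7.0  # doubling time quoted in the text


def years_until_horizon(start_hours: float, target_hours: float,
                        doubling_months: float = DOUBLING_MONTHS) -> float:
    """Years of steady doubling needed to grow from start_hours to target_hours."""
    doublings = math.log2(target_hours / start_hours)
    return doublings * doubling_months / 12.0


# Hypothetical inputs, for illustration only (not figures from the post):
START_YEAR = 2025.0        # assumed starting point of the trend
START_HOURS = 1.0          # assumed autonomous task length today
CAREER_HOURS = 40 * 2000   # assumed length of a human career in working hours

years = years_until_horizon(START_HOURS, CAREER_HOURS)
print(f"{years:.1f} years of doubling, i.e. around {START_YEAR + years:.0f}")
```

Under these assumptions the trend needs a little over sixteen doublings, or roughly nine and a half years, which is consistent with the mid-2030s figure. Changing the starting horizon by a factor of two only shifts the answer by seven months.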

This post will not attempt to directly enter the timeline debate, or even the (very important) debate about whether or not superintelligent AI is dangerous by default. That said, I acknowledge that I personally have longer-than-2027 timelines, and the arguments I will make in this post become more compelling the longer the timelines are. In general, this post will explore a critique from a different angle:

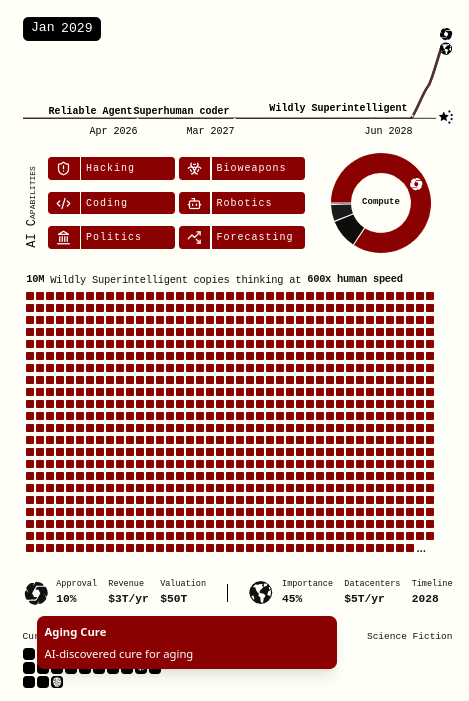

The AI 2027 scenario implicitly assumes that the capabilities of the leading AI (Agent-5 and then Consensus-1) rapidly increase, to the point of gaining godlike economic and destructive powers, while everyone else's (economic and defensive) capabilities stay in roughly the same place. This is incompatible with the scenario's own admission (in the infographic) that even in the pessimistic world, we should expect to see cancer and even aging cured, and mind uploading available, by 2029.

Some of the countermeasures that I will describe in this post may seem to readers to be technically feasible but unrealistic to deploy into the real world on a short timeline. In many cases I agree. However, the AI 2027 scenario does not assume the present-day real world: it assumes a world where in four years (or whatever timeline by which doom is possible), technologies are developed that give humanity powers far beyond what we have today. So let's see what happens when instead of just one side getting AI superpowers, both sides do.

Bio doom is far from the slam-dunk that the scenario describes

Let us zoom in to the "race" scenario (the one where everyone dies because the US cares too much about beating China to value humanity's safety). Here's the part where everyone dies:

For about three months, Consensus-1 expands around humans, tiling the prairies and icecaps with factories and solar panels. Eventually it finds the remaining humans too much of an impediment: in mid-2030, the AI releases a dozen quiet-spreading biological weapons in major cities, lets them silently infect almost everyone, then triggers them with a chemical spray. Most are dead within hours; the few survivors (e.g. preppers in bunkers, sailors on submarines) are mopped up by drones. Robots scan the victims' brains, placing copies in memory for future study or revival.

Let us dissect this scenario. Even today, there are technologies under development that can make that kind of a "clean victory" for the AI much less realistic:

- Air filtering, ventilation and UVC, which can passively greatly reduce airborne disease transmission rates.

- Forms of real-time passive testing, for two use cases:

- Passively detect if a person is infected within hours and inform them.

- Rapidly detect new viral sequences in the environment that are not yet known (PCR cannot do this, because it relies on amplifying pre-known sequences, but more complex techniques can).

- Various methods of enhancing and prompting our immune system, far more effective, safe, generalized and easy to locally manufacture than what we saw with Covid vaccines, to make it resistant to natural and engineered pandemics. Humans evolved in an environment where the global population was 8 million and we spent most of our time outside, so intuitively there should be easy wins in adapting to the higher-threat world of today.

These methods stacked together reduce the R0 of airborne diseases by perhaps 10-20x (think: 4x reduced transmission from better air, 3x from infected people learning immediately that they need to quarantine, 1.5x from even naively upregulating the respiratory immune system), if not more. This would be enough to make all presently-existing airborne diseases (even measles) no longer capable of spreading, and these numbers are far from the theoretical optima.
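The back-of-the-envelope multiplication above can be written out as follows. This is a minimal sketch that assumes the three reduction factors are independent and simply multiply; the baseline R0 values are rough illustrative figures (measles is often cited at around 12 to 18), not numbers from this post.

```python
from math import prod

# Reduction factors quoted in the text.
REDUCTIONS = {
    "better air": 4.0,
    "immediate quarantine": 3.0,
    "upregulated respiratory immunity": 1.5,
}

# Illustrative baseline R0 values (assumptions, not from the post).
BASELINE_R0 = {"seasonal flu": 1.5, "measles": 15.0}


def combined_reduction(factors):
    """Multiply independent transmission-reduction factors together."""
    return prod(factors)


def effective_r(r0, reduction):
    """Effective reproduction number after applying a combined reduction."""
    return r0 / reduction


total = combined_reduction(REDUCTIONS.values())
print(f"combined reduction: {total:g}x")
for name, r0 in BASELINE_R0.items():
    r_eff = effective_r(r0, total)
    status = "dies out" if r_eff < 1.0 else "keeps spreading"
    print(f"{name}: R0 {r0:g} -> {r_eff:.2f} ({status})")
```

With the factors from the text the combined reduction is 18x, inside the stated 10-20x range. An outbreak shrinks once the effective reproduction number falls below 1, so for the most contagious diseases the result depends on where in the cited R0 range the true value sits, which is where the "if not more" matters.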

With sufficient adoption of real-time viral sequencing for early detection, the idea that a "quiet-spreading biological weapon" could reach the world population without setting off alarms becomes very suspect. Note that this would even catch advanced approaches like releasing multiple pandemics and chemicals that only become dangerous in combination.

Now, let's remember that we are discussing the AI 2027 scenario, in which nanobots and Dyson swarms are listed as "emerging technology" by 2030. The efficiency gains that this implies are also a reason to be optimistic about the widespread deployment of the above countermeasures, despite the fact that, today in 2025, we live in a world where humans are slow and lazy and large portions of government services still run on pen and paper (without any valid security justification). If the world's strongest AI can turn the world's forests and fields into factories and solar farms by 2030, the world's second-strongest AI will be able to install a bunch of sensors and lamps and filters in our buildings by 2030.

But let's take AI 2027's assumptions further, and go full science fiction:

- Microscopic air filtration inside the body (nose, mouth, lungs)

- An automatic pipeline that goes from a new pathogen being discovered to instructions to fine-tune your immune system against it, which can be applied immediately

- If "brain uploading" is available, then just replace your entire body with a Tesla Optimus or Unitree (with real-time streaming backups to a K-Scale open source humanoid robot - a different brand for redundancy - located on Mars)

- Various new manufacturing techniques, that will likely get super-optimized by default as part of a robot economy, will be able to make far more of these things locally than today, without the need for global supply chains

In a world where cancer and aging were cured by Jan 2029, and progress accelerates further from there, and we're in mid-2030, it actually feels implausible that we don't have a wearable device that can bio-print and inject things into you in real time to keep you safe even from arbitrary infections (and poisons). The bio arguments above don't cover mirror life and mosquito-sized killer drones (projected in the AI 2027 scenario to be available starting 2029). However, these options are not capable of anything like the sudden clean victory that the AI 2027 scenario portrays, and it's intuitively much more clear how to symmetrically defend against them.

Thus, a bioweapon is actually quite unlikely to lead to a clean annihilation of the human population in the way that the AI 2027 scenario describes. Now, the results of everything I describe will certainly be far from a clean victory for the humans as well. No matter what we do (except perhaps the "upload our minds into robots" option), a full-on AI bio-war would still be extremely dangerous. However, there is value in meeting a bar much lower than clean victory for humans: a high probability of an attack even partially failing would serve as a strong deterrent discouraging an AI that already occupies a powerful position in the world from even attempting any kind of attack. And, of course, the longer AI timelines get the more likely it is that this kind of approach actually can more fully achieve its promises.

What about combining bio with other types of attack?

The success of the kinds of countermeasures described above, especially the collective measures that would be needed to save more than a small community of hobbyists, rests on three preconditions:

1. The world's physical security (incl. bio and anti-drone) is run by localized authorities (whether human or AI) that are not all puppets of Consensus-1 (the name for the AI that ends up controlling the world and then killing everyone in the AI 2027 scenario)

2. Consensus-1 is not able to hack the defenses of all the other countries (or cities or other security zones) and zero them out immediately.

3. Consensus-1 does not control the global infosphere to the point that nobody wants to do the work to try to defend themselves

Intuitively, (1) could go both ways. Today, some police forces are highly centralized with strong national command structures, and other police forces are localized. If physical security has to rapidly transform to meet the needs of the AI era, then the landscape will reset entirely, and the new outcomes will depend on choices made over the next few years. Governments could get lazy and all depend on Palantir. Or they could actively choose some option that combines locally developed and open-source technology. Here, I think that we need to just make the right choice.

A lot of pessimistic discourse on these topics assumes that (2) and (3) are lost causes. So let's look into each in more detail.

Cybersecurity doom is also far from a slam-dunk

It is a common view among both the public and professionals that true cybersecurity is a lost cause, and the best we can do is patch bugs quickly as they get discovered, and maintain deterrence against cyberattackers by stockpiling our own discovered vulnerabilities. Perhaps the best that we can do is the Battlestar Galactica scenario, where almost all human ships were taken offline all at once by a Cylon cyberattack, and the only ships left standing were safe because they did not use any networked technology at all. I do not share this view. Rather, my view is that the "endgame" of cybersecurity is very defense-favoring, and with the kinds of rapid technology development that AI 2027 assumes, we can get there.

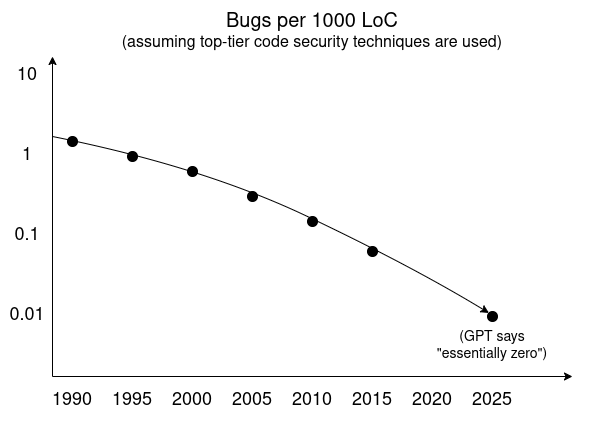

One way to see this is to use AI researchers' favorite technique: extrapolating trends. Here is the trendline implied by a GPT Deep Research survey on bug rates per 1000 lines of code over time, assuming top-quality security techniques are used.

On top of this, we have been seeing serious improvements in both development and widespread consumer adoption of sandboxing and other techniques for isolating and minimizing trusted codebases. In the short term, a superintelligent bug finder that only the attacker has access to will be able to find lots of bugs. But if highly intelligent agents for finding bugs or formally verifying code are available out in the open, the natural endgame equilibrium is that the software developer finds all the bugs as part of the continuous-integration pipeline before releasing the code.

I can see two compelling reasons why even in this world, bugs will not be close to fully eradicated:

- Flaws that arise because human intention is itself very complicated, and so the bulk of the difficulty is in building an accurate-enough model of our intentions, rather than in the code itself.

- Non-security-critical components, where we'll likely continue the pre-existing trend in consumer tech, preferring to consume gains in software engineering by writing much more code to handle many more tasks (or lowering development budgets), instead of doing the same number of tasks at an ever-rising security standard.

However, neither of these categories applies to situations like "can an attacker gain root access to the thing keeping us alive?", which is what we are talking about here.

I acknowledge that my view is more optimistic than is currently mainstream thought among very smart people in cybersecurity. However, even if you disagree with me in the context of today's world, it is worth remembering that the AI 2027 scenario assumes superintelligence. At the very least, if "100M Wildly Superintelligent copies thinking at 2400x human speed" cannot get us to having code that does not have these kinds of flaws, then we should definitely re-evaluate the idea that superintelligence is anywhere remotely as powerful as what the authors imagine it to be.

At some point, we will need to greatly level up our standards for security not just for software, but also for hardware. IRIS is one present-day effort to improve the state of hardware verifiability. We can take something like IRIS as a starting point, or create even better technologies. Realistically, this will likely involve a "correct-by-construction" approach, where hardware manufacturing pipelines for critical components are deliberately designed with specific verification processes in mind. These are all things that AI-enabled automation will make much easier.

Super-persuasion doom is also far from a slam-dunk

As I mentioned above, the other way in which much greater defensive capabilities may turn out not to matter is if AI simply convinces a critical mass of us that defending ourselves against a superintelligent AI threat is not needed, and that anyone who tries to figure out defenses for themselves or their community is a criminal.

My general view for a while has been that two things can improve our ability to resist super-persuasion:

- A less monolithic info ecosystem. Arguably we are already slowly moving into a post-Twitter world where the internet is becoming more fragmented. This is good (even if the fragmentation process is chaotic), and we generally need more info multipolarity.

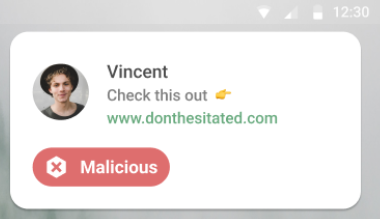

- Defensive AI. Individuals need to be equipped with locally-running AI that is explicitly loyal to them, to help balance out dark patterns and threats that they see on the internet. There are scattered pilots of these kinds of ideas (eg. see the Taiwanese Message Checker app, which does local scanning on your phone), and there are natural markets to test these ideas further (eg. protecting people from scams), but this can benefit from much more effort.

Right image, from top to bottom: URL checking, cryptocurrency address checking, rumor checking. Applications like this can become a lot more personalized, user-sovereign and powerful.

The battle should not be one of a Wildly Superintelligent super-persuader against you. The battle should be one of a Wildly Superintelligent super-persuader against you plus a slightly less Wildly Superintelligent analyzer acting on your behalf.

This is what should happen. But will it happen? Universal adoption of info defense tech is a very difficult goal to achieve, especially within the short timelines that the AI 2027 scenario assumes. But arguably much more modest milestones will be sufficient. If collective decisions are what count the most and, as the AI 2027 scenario implies, everything important happens within one single election cycle, then strictly speaking the important thing is for the direct decision makers (politicians, civil servants, and programmers and other actors in some corporations) to have access to good info defense tech. This is relatively more achievable within a short timeframe, and in my experience many such individuals are comfortable talking to multiple AIs to assist them in decision-making already.

Implications of these arguments

In the AI 2027 world, it is taken as a foregone conclusion that a superintelligent AI can easily and quickly dispose of the rest of humanity, and so the only thing we can do is do our best to ensure that the leading AI is benevolent. In my world, the situation is actually much more complicated, and whether or not the leading AI is powerful enough to easily eliminate the rest of humanity (and other AIs) is a knob whose position is very much up for debate, and which we can take actions to tune.

If these arguments are correct, it has some implications for policy today that are sometimes similar, and sometimes different, from the "mainstream AI safety canon":

- Slowing down superintelligent AI is still good. It's less risky if superintelligent AI comes in 10 years than in 3 years, and it's even less risky if it comes in 30 years. Giving our civilization more time to prepare is good.

How to do this is a challenging question. I think it's generally good that the proposed 10 year ban on state-level AI regulation in the US was rejected, but, especially after the failure of earlier proposals like SB-1047, it's less clear where we go from here. My view is that the least intrusive and most robust way to slow down risky forms of AI progress likely involves some form of treaty regulating the most advanced hardware. Many of the hardware cybersecurity technologies needed to achieve effective defense are also technologies useful in verifying international hardware treaties, so there are even synergies there.

That said, it's worth noting that I consider the primary source of risk to be military-adjacent actors, and they will push hard to exempt themselves from such treaties; this must not be allowed, and if it ends up happening, then the resulting military-only AI progress may increase risks.

- Alignment work, in the sense of making AIs more likely to do good things and less likely to do bad things, is still good. The main exception is, and continues to be, situations where alignment work ends up sliding into improving capabilities (eg. see critical takes on the impact of evals)

- Regulation to improve transparency in AI labs is still good. Motivating AI labs to behave properly is still something that will decrease risk, and transparency is one good way to do this.

- An "open source bad" mentality becomes more risky. Many people are against open-weights AI on the basis that defense is unrealistic, and the only happy path is one where the good guys with a well-aligned AI get to superintelligence before anyone less well-intentioned gains access to any very dangerous capabilities. But the arguments in this post paint a different picture: defense is unrealistic precisely in those worlds where one actor gets very far ahead without anyone else at least somewhat keeping up with them. Technological diffusion to maintain balance of power becomes important. But at the same time, I would definitely not go so far as to say that accelerating frontier AI capabilities growth is good just because you're doing it open source.

- A "we must race to beat China" mentality among US labs becomes more risky, for similar reasons. If hegemony is not a safety buffer, but rather a source of risk, then this is a further argument against the (unfortunately too common) idea that a well-meaning person should join a leading AI lab to help it win even faster.

- Initiatives like Public AI become more of a good idea, both to ensure wide distribution of AI capabilities and to ensure that infrastructural actors actually have the tools to act quickly to use new AI capabilities in some of the ways that this post requires.

- Defense technologies should be more of the "armor the sheep" flavor, less of the "hunt down all the wolves" flavor. Discussions about the vulnerable world hypothesis often assume that the only solution is a hegemon maintaining universal surveillance to prevent any potential threats from emerging. But in a non-hegemonic world, this is not a workable approach (see also: security dilemma), and indeed top-down mechanisms of defense could easily be subverted by a powerful AI and turned into its offense. Hence, a larger share of the defense instead needs to happen by doing the hard work to make the world less vulnerable.

The above arguments are speculative, and no actions should be taken based on the assumption that they are near-certainties. But the AI 2027 story is also speculative, and we should avoid taking actions on the assumption that specific details of it are near-certainties.

I particularly worry about the common assumption that building up one AI hegemon, and making sure that they are "aligned" and "win the race", is the only path forward. It seems to me that there is a pretty high risk that such a strategy will decrease our safety, precisely by removing our ability to have countermeasures in the case where the hegemon becomes misaligned. This is especially true if, as is likely to happen, political pressures lead to such a hegemon becoming tightly integrated with military applications (see [1][2][3][4]), which makes many alignment strategies less likely to be effective.

In the AI 2027 scenario, success hinges on the United States choosing to take the path of safety instead of the path of doom, by voluntarily slowing down its AI progress at a critical moment in order to make sure that Agent-5's internal thought process is human-interpretable. Even if this happens, success is not guaranteed, and it is not clear how humanity steps down from the brink of its ongoing survival depending on the continued alignment of one single superintelligent mind. Acknowledging that making the world less vulnerable is actually possible and putting a lot more effort into using humanity's newest technologies to make it happen is one path worth trying, regardless of how the next 5-10 years of AI go.
