From Bitcoin to Ethereum, how can we find the optimal path to break through the limitations of throughput and scenarios?

Starting from first principles, what is the key to breaking out of a market saturated with memes, and how can we identify the most essential foundational needs of blockchain?

What is it about the disruptive principle behind SCP and AO (Actor Oriented), the separation of storage and computation, that can allow Web3 to completely unleash itself?

Will the results of running deterministic programs on immutable data be unique and reliable?

In such a narrative, why can SCP and AO (Actor Oriented) become hexagonal warriors of infinite performance, trustworthy data, and composability?

Introduction

[Data Source: BTC Price]

More than 15 years have passed since blockchain was born in 2009. As a revolutionary paradigm of digital technology, it records digital value and network value, making cryptocurrency an innovation of a new capital paradigm.

As the firstborn, Bitcoin is expected to become a strategic reserve asset. At the 2024 Bitcoin conference:

Trump promised that if he returns to the White House, he will ensure that the government retains 100% of its Bitcoin and lists it as a strategic reserve asset of the United States.

After Trump won the election, Bitcoin surged 150%, reaching a peak of $107,287.

Trump's victory is clearly more favorable for the crypto industry, as he has repeatedly expressed strong support for cryptocurrency.

However, cryptocurrency's high sensitivity to election results may produce spikes in short-term market volatility. Is this strong upward momentum sustainable? The author believes that only after uncertainty is eliminated and the scalability of blockchain improves can we welcome a new "red sea."

The Shadow Behind the Prosperity of "Web3" After the U.S. Election

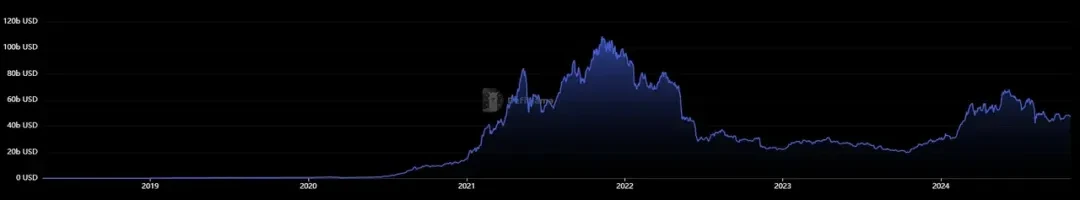

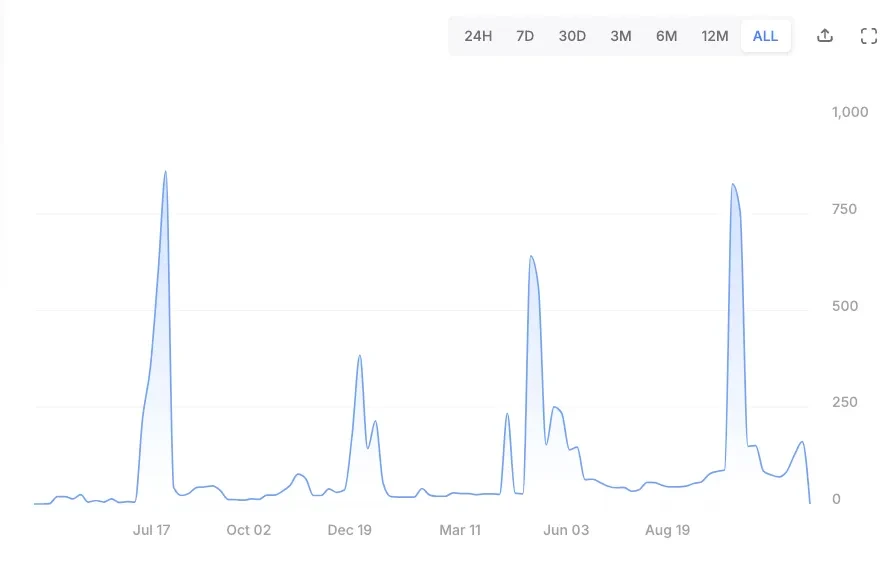

[Data Source: DefiLlama]

Beneath the spotlight, the TVL of Ethereum, the second-largest cryptocurrency, has continued to show a sluggish trend since reaching its historical peak in 2021.

Even in the third quarter of 2024, Ethereum's decentralized finance (DeFi) revenue dropped to $261 million, the lowest level since the fourth quarter of 2020.

At first glance, there may be occasional spikes, but the overall trend indicates a slowdown in DeFi's overall activity on the Ethereum network.

Moreover, dedicated public chains have emerged that offer complete alternatives for trading scenarios. One example is the recently popular Hyperliquid, a trading chain based on an order book model. Its overall data has grown rapidly: it jumped into the top 50 by market cap in just two weeks, and its expected annual revenue is lower only than that of Ethereum, Solana, and Tron among all public chains. This reflects the fatigue of traditional DeFi built on AMM architecture and Ethereum.

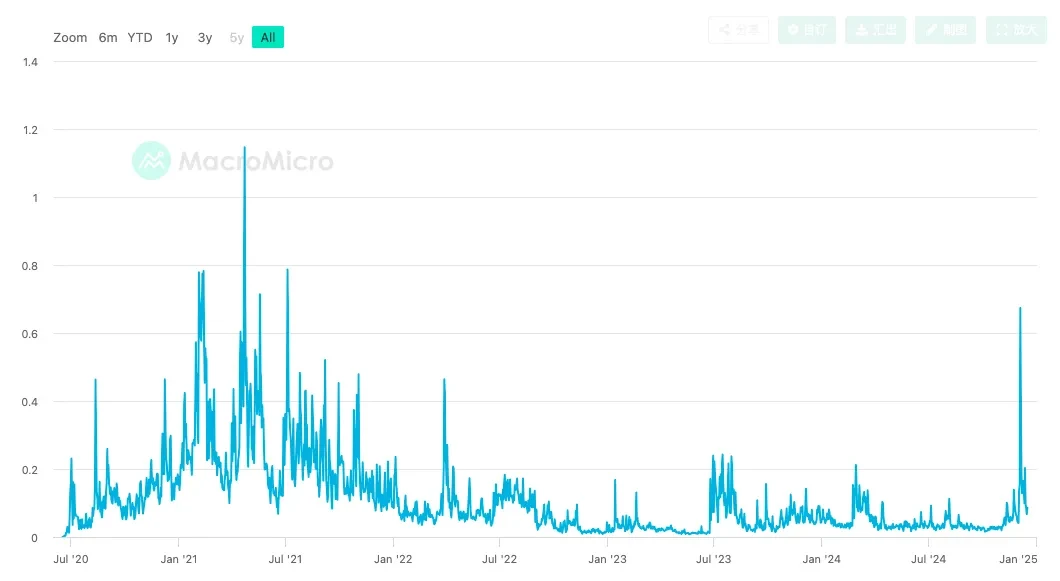

[Data Source: Compound Trading Volume]

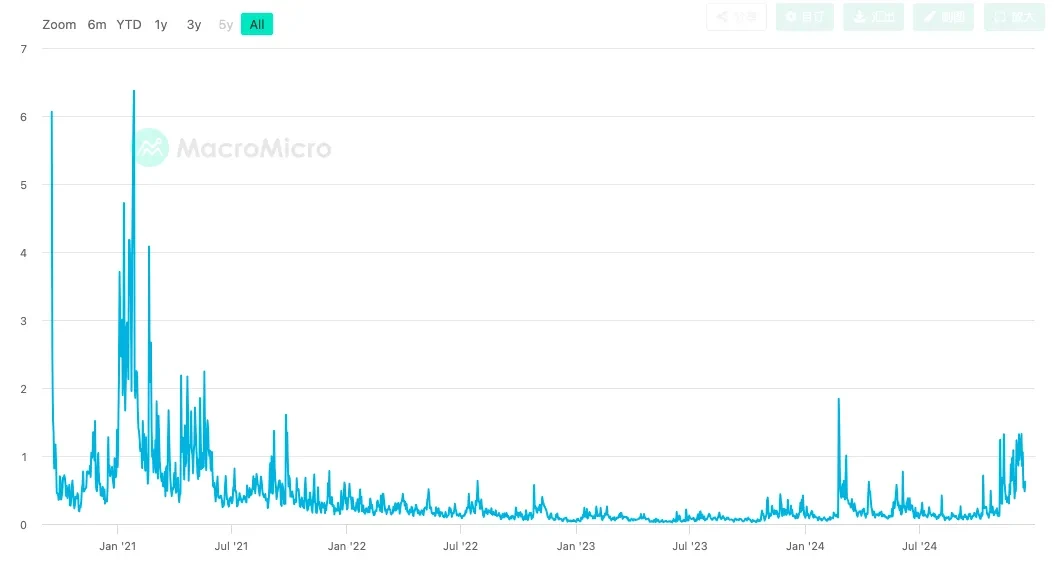

[Data Source: Uniswap Trading Volume]

DeFi was once the core highlight of the Ethereum ecosystem, but due to reduced transaction fees and user activity, its revenue has significantly declined.

In this regard, the author attempts to think about the reasons behind the current predicament faced by Ethereum or the entire blockchain and how to break through it.

Coincidentally, SpaceX's successful fifth test launch has made it a rising star in commercial space travel. Looking back at SpaceX's development path, its success today is attributed to a key methodology: first principles. (Tip: The concept of first principles was first proposed by the ancient Greek philosopher Aristotle over 2300 years ago. He described "first principles" as: "In the exploration of every system, there exist first principles, which are the most fundamental propositions or assumptions that cannot be omitted or violated.")

So, let us also apply the method of first principles, peeling away the layers of fog to explore the most essential "atoms" of the blockchain industry. From a fundamental perspective, we will re-examine the current challenges and opportunities faced by this industry.

Is Web3's "Cloud Service" a Step Backward or the Future?

When the concept of AO (Actor Oriented) was introduced, it attracted widespread attention. In the context of many EVM series public chains becoming homogenized, AO, as a disruptive architectural design, has shown unique appeal.

This is not just a theoretical idea; there are teams putting it into practice.

As mentioned above, the greatest value of blockchain is recording digital value. From this perspective, it is a publicly transparent global public ledger, so based on this essence, we can consider the first principle of blockchain as a form of "storage."

AO is built on the Storage-based Consensus Paradigm (SCP). As long as the storage is immutable, the results are agreed upon no matter where the computation is performed. This gives birth to the AO global computer, in which large-scale parallel computers interconnect and collaborate.

Looking back at 2024, one of the most notable events in the Web3 field is the explosion of the inscription ecosystem, which can be seen as a practice of the early storage and computation separation model. For example, the etching technology used by the Runes protocol allows embedding a small amount of data in Bitcoin transactions. Although this data does not affect the main function of the transaction, it serves as additional information, constituting a clear, verifiable, and non-consumable output.

Despite initial concerns from some technical observers about the security of Bitcoin inscriptions, fearing they could become potential entry points for network attacks, over the past two years, it has completely stored data on-chain without any blockchain forks occurring. This stability reaffirms that as long as the stored data is not tampered with, no matter where the computation is performed, it can ensure the consistency and security of the data.

You may find that this is almost identical to traditional cloud services. For example:

In terms of computing resource management, in the AO architecture, "Actors" are independent computing entities, and each computing unit can run its own environment, which is reminiscent of microservices and Docker in traditional cloud servers. Similarly, traditional cloud services can rely on S3 or NFS for storage, while AO relies on Arweave.

However, simply reducing AO to "reheated leftovers" is not accurate. Although AO draws on certain design concepts from traditional cloud services, its core lies in combining decentralized storage with distributed computing. Arweave, as a decentralized storage network, fundamentally differs from traditional centralized storage. This decentralized characteristic endows Web3 data with higher security and censorship resistance.

More importantly, the combination of AO and Arweave is not a simple technical stack but creates a new paradigm. This paradigm combines the performance advantages of distributed computing with the trustworthiness of decentralized storage, providing a solid foundation for innovation and development in Web3 applications. Specifically, this combination is mainly reflected in the following two aspects:

First, it achieves a fully decentralized design in the storage system.

Second, it relies on a distributed architecture to ensure performance.

This combination not only addresses some core challenges in the Web3 field (such as storage security and openness) but also provides a technical foundation for potential infinite innovation and combinations in the future.

The following text will delve into the concepts and architectural design of AO and analyze how it addresses the challenges faced by existing public chains like Ethereum, ultimately bringing new development opportunities to Web3.

Viewing the Current Constraints of Web3 from the Perspective of "Atoms"

Since Ethereum burst onto the scene with smart contracts, it has rightfully become the king.

Some may ask, isn't there Bitcoin? However, it is worth noting that Bitcoin was created as a substitute for traditional currency, aiming to become a decentralized and digital cash system. In contrast, Ethereum is not just a cryptocurrency; it is also a platform for creating and implementing smart contracts and decentralized applications (DApps).

In summary, Bitcoin is a digital substitute for traditional currency, with a high price, but that does not mean it has high value. Ethereum is more like an open-source platform, which, in terms of richness, has expected value and better represents the open world of Web3 in contemporary ideology.

Since 2017, many projects have attempted to challenge Ethereum, but very few have persisted until the end. However, Ethereum's performance has always been criticized, leading to the growth of Layer 2 solutions. The seemingly prosperous Layer 2 is actually struggling in a predicament. As competition intensifies, a series of issues have gradually emerged, becoming serious shackles for the development of Web3:

Performance Limitations and Poor User Experience

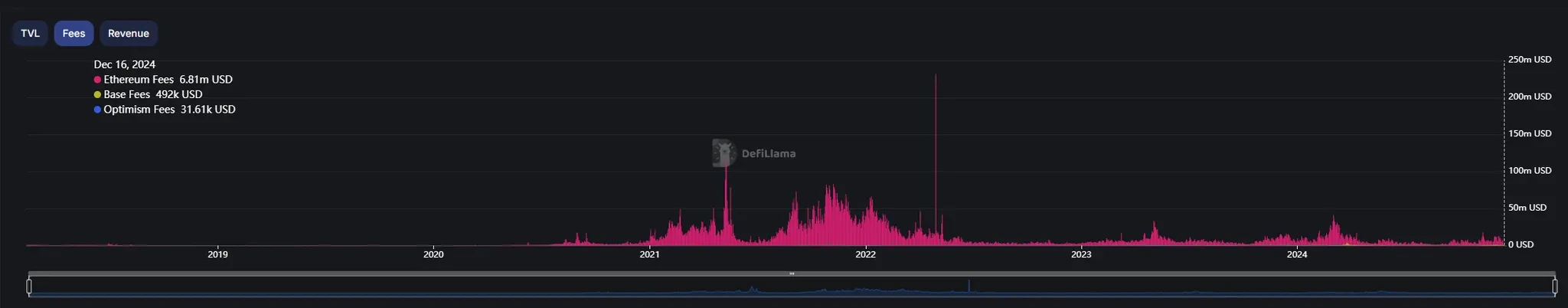

[Data Source: DeFiLlama]

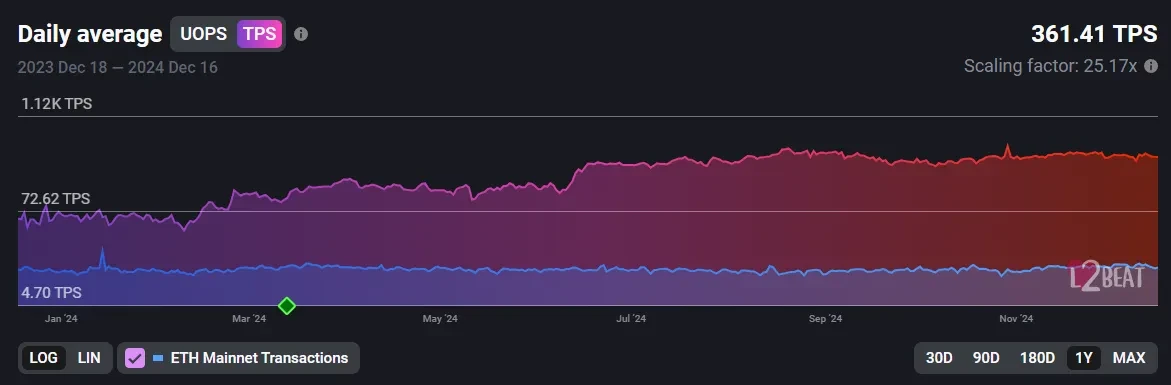

[Data Source: L2 BEAT]

Recently, more and more people believe that Ethereum's Layer 2 expansion plan has failed.

Initially, Layer 2 was an important continuation of Ethereum's expansion plan and drew many supporters, who hoped that lower gas fees and higher throughput would bring growth in user numbers and transaction volumes. However, despite the reduction in gas fees, the anticipated increase in user numbers has not materialized.

In fact, is the failure of the expansion plan the responsibility of L2? It is clear that L2 is merely a scapegoat; while it bears some responsibility, the main responsibility lies with Ethereum itself. Furthermore, it is an inevitable result of the underlying design issues present in most current Web3 chains.

From the perspective of "atoms," L2 itself undertakes the function of computation, while the essential "storage" of the blockchain is handled by Ethereum. To achieve sufficient security, it must also be Ethereum that stores and reaches consensus on the data.

However, Ethereum is designed to prevent infinite loops during execution, which could otherwise halt the entire platform. It does so by metering execution with gas, so any given smart contract execution is limited to a finite number of computational steps.

This leads to the conclusion that the design of L2 expects infinite performance, but in reality, the limitations of the main chain impose shackles on it.

The bottleneck effect determines that L2 has an upper limit.
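The finite-step rule mentioned above can be pictured as a metered loop. The following is a minimal sketch, not the EVM's actual fee schedule: `run_metered`, `OutOfGas`, and the flat cost of one unit per step are all assumptions made for illustration.

```python
class OutOfGas(Exception):
    """Raised when the step budget is exhausted."""


def run_metered(step, state, gas_limit):
    """Call step(state) repeatedly until it returns None or gas runs out.

    Each call costs one unit of gas. This flat cost is an assumption
    for illustration; real fee schedules price operations differently.
    Returns the final state and the number of steps consumed.
    """
    gas = gas_limit
    while True:
        if gas == 0:
            raise OutOfGas(f"halted after {gas_limit} steps")
        gas -= 1
        next_state = step(state)
        if next_state is None:  # the program finished on its own
            return state, gas_limit - gas
        state = next_state


def countdown(n):
    """A program that terminates: counts down to zero."""
    return n - 1 if n > 0 else None


def forever(n):
    """A program that never terminates on its own."""
    return n + 1
```

Under this sketch, `run_metered(countdown, 3, 100)` finishes within its budget, while `run_metered(forever, 0, 5)` raises `OutOfGas` instead of stalling the caller. The same budget that protects the platform also caps how much work a single execution can do.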

For the detailed mechanism, see: “From Traditional DeFi to AgentFi: Exploring the Future of Decentralized Finance”.

Limited Use Cases, Hard to Attract Users

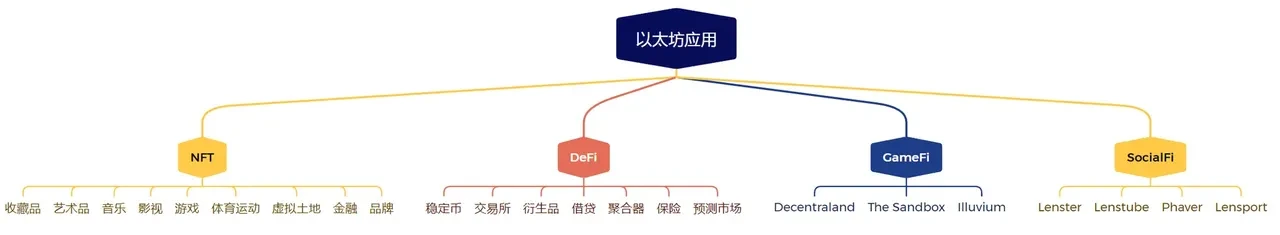

Ethereum's pride lies in its prosperous application layer ecosystem, which hosts various DApps.

But is the flourishing truly a scene of a hundred flowers blooming?

The author believes that it is clearly not; behind Ethereum's prosperous application ecosystem is a serious financialization issue, and non-financial applications are far from mature.

Let’s take a look at the more prosperous application sectors developed on Ethereum.

First, concepts like NFT, DeFi, GameFi, and SocialFi, while exploratory in financial innovation, are currently not suitable for the general public. The rapid development of Web2 fundamentally stems from its functions being closely aligned with people's daily lives.

Compared to financial products and services, ordinary users are more concerned about messaging, socializing, video, e-commerce, and other functionalities.

Secondly, from a competitive perspective, credit loans in traditional finance are very common and widespread products, but in the DeFi space, such products are still relatively few. The main reason is the current lack of an effective on-chain credit system.

The construction of a credit system requires allowing users to truly own their online personal profiles and social graphs, and to be able to traverse different applications.

Only when these decentralized pieces of information can achieve zero-cost storage and transmission can a powerful personal information graph for Web3 be constructed, along with a set of Web3 applications based on a credit system.

Thus, we clarify a key issue again: The failure of L2 to attract enough users is not its fault; the existence of L2 has never been the core driving force. The real way to break through the shackles of Web3's predicament is to innovate application scenarios to attract users.

However, the current situation is like a highway during a holiday; limited by transaction performance constraints, no matter how many innovative ideas there are, it is difficult to implement them.

The essence of blockchain itself is "storage." When storage and computation are coupled, it becomes insufficiently "atomic." Under such a non-essential design, there will inevitably be a critical point of performance.

Some viewpoints define the essence of blockchain as a transaction platform, a currency system, or emphasize transparency and anonymity. However, this perspective overlooks the fundamental characteristics of blockchain as a data structure and its broader application potential. Blockchain is not just for financial transactions; its technical architecture allows it to be applied across multiple industries, such as supply chain management, healthcare records, and even copyright management. Therefore, the essence of blockchain lies in its ability as a storage system, not only because it can securely store data but also because it ensures the integrity and transparency of data through a distributed consensus mechanism. Once a data block is added to the chain, it is almost impossible to change or delete.

Atomic Infrastructure: AO Makes Infinite Performance Possible

[Data Source: L2 TPS]

The basic architecture of blockchain faces a clear bottleneck: the limitation of block space. Like a ledger of fixed size, every transaction and piece of data must be recorded in a block. Ethereum and other blockchains are constrained by block size limits, causing transactions to compete for space. This raises a critical question: Can we break through this limitation? Must block space be limited? Is there a way to achieve true infinite scalability for the system?

Ethereum's L2 route can only be called half successful at performance expansion. L2 has improved throughput by several orders of magnitude and may be able to support individual projects during peak transaction times, but for most L2 chains, which inherit storage and consensus security from L1, this level of expansion is far from sufficient.

It is worth noting that L2's TPS cannot be infinitely increased, primarily limited by several factors: data availability, settlement speed, verification costs, network bandwidth, and contract complexity. Although Rollup optimizes L1's storage and computation needs through compression and verification, it still requires submitting and verifying data on L1, thus being subject to L1's bandwidth and block time limitations. At the same time, the computational overhead of generating zero-knowledge proofs, node performance bottlenecks, and the execution demands of complex contracts also limit the upper limit of L2 expansion.
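The data availability limit can be written as a rough back-of-the-envelope bound. This is an illustration, not a measurement, and all three symbols are assumptions introduced here: suppose a rollup can post at most $B$ bytes of data per L1 block, each compressed transaction occupies $s$ bytes, and L1 produces a block every $T$ seconds. Then:

$$
\mathrm{TPS}_{\mathrm{L2}} \le \frac{B}{s \cdot T}
$$

However fast the L2 executes, its sustained throughput cannot exceed this ceiling, because $B$ and $T$ are set by L1 rather than by the L2 itself.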

[Data Source: suiscan TPS]

Currently, the real challenge for Web3 lies in insufficient throughput and applications, which will make it difficult to attract new users. Web3 may face the risk of losing influence.

In short, the improvement of throughput is key to whether Web3 has a bright future. Achieving a network that can infinitely scale and has high throughput is the vision of Web3. For example, Sui adopts a deterministic parallel processing approach, pre-arranging transactions to avoid conflicts, thereby improving the system's predictability and scalability. This allows Sui to handle over 10,000 transactions per second (TPS). At the same time, Sui's architecture allows for increased network throughput by adding more validating nodes, theoretically achieving infinite scalability. It also employs the Narwhal and Tusk protocols to reduce latency, enabling the system to efficiently process transactions in parallel, thus overcoming the scalability bottlenecks of traditional Layer 2 solutions.
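The idea of pre-arranging transactions to avoid conflicts can be sketched as follows. This is not Sui's actual scheduler; `schedule_batches` and the object-id sets are hypothetical. Each transaction declares the objects it touches, and transactions that share no objects land in the same batch, where they could run in parallel. Conflicting transactions keep their original relative order.

```python
def schedule_batches(transactions):
    """Group transactions into batches that can each run in parallel.

    transactions: list of (tx_id, set_of_object_ids) in a fixed order.
    A transaction is placed one batch after the latest batch that
    touched any of its objects, so conflicting transactions keep
    their original order and the plan is deterministic.
    """
    last_batch = {}  # object id -> index of the latest batch touching it
    batches = []
    for tx_id, objects in transactions:
        level = 1 + max((last_batch.get(o, -1) for o in objects), default=-1)
        if level == len(batches):
            batches.append([])
        batches[level].append(tx_id)
        for o in objects:
            last_batch[o] = level
    return batches


# Hypothetical workload: t3 touches objects used by both t1 and t2.
txs = [
    ("t1", {"a"}),
    ("t2", {"b"}),
    ("t3", {"a", "b"}),
    ("t4", {"c"}),
]
plan = schedule_batches(txs)
```

Here `t1`, `t2`, and `t4` share no objects and fall into the first batch, while `t3` must wait for a second batch.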

What we are discussing with AO is also based on this idea. Although the focus is different, they are both building a scalable storage system.

Web3 needs a new infrastructure based on first principles, centered on storage. Just as Elon Musk rethought rocket launches and the electric vehicle industry, he fundamentally redesigned these complex technologies through first principles, thereby disrupting the industry. The design of AO is similar; it decouples computation and storage, discarding the traditional blockchain framework to build a future-oriented Web3 storage foundation, pushing Web3 towards the vision of "decentralized cloud services."

Storage-based Consensus Paradigm (SCP)

Before introducing AO, we must first discuss the relatively novel SCP design paradigm.

SCP may be unfamiliar to most people, but everyone is likely familiar with Bitcoin inscriptions. Strictly speaking, the design idea of inscriptions is, to some extent, a design thought that uses storage as the "atomic" unit, although it may have some deviations.

Interestingly, Vitalik has also expressed a desire to become the paper tape of Web3, and the SCP paradigm embodies this kind of thought.

In Ethereum's model, computation is executed by full nodes, which then store the results globally and serve queries. This leads to a problem: although Ethereum is a "world computer," it behaves like a single-threaded program in which all steps can only be executed one at a time, which is clearly inefficient. It is also "fertile ground for MEV": signed transactions enter Ethereum's memory pool, are publicly disseminated, and are then ordered and packed into blocks. Although this process may take only 12 seconds, in that brief time the transaction content is exposed to countless "hunters," who can quickly intercept and simulate it, and even reverse-engineer possible trading strategies. For detailed information on MEV, see: “The MEV Landscape One Year After Ethereum's Merge.”

In contrast, the idea of SCP is to separate computation from storage. You may find this a bit abstract, but no worries; let’s use a Web2 scenario as an example.

In Web2's chat and e-commerce processes, there are often sudden spikes in traffic at certain times. However, a single computer struggles to support such a large load in terms of hardware resources. To address this, clever engineers proposed the concept of distribution, delegating computation to multiple computers, which then synchronize and store their respective computational states. This allows for elastic scaling to handle varying traffic over time.

Similarly, SCP can be seen as a design that distributes computation across various computing nodes. The difference is that SCP's storage does not rely on databases like MySQL or PostgreSQL, but on the mainnet of the blockchain.

In short, SCP uses blockchain to store the results of states and other data, ensuring the credibility of stored data and achieving a high-performance network layered with the underlying blockchain.

More specifically, the blockchain in SCP is used solely for data storage, while off-chain clients/servers are responsible for executing all computations and storing all generated states. This architectural design significantly enhances performance and scalability, but under the architecture of separating computation and storage, can we truly ensure the integrity and security of the data?

In simple terms, the blockchain is primarily used to store data, while the actual computational work is done by off-chain servers. This new system design has an important characteristic: it no longer uses the complex node consensus mechanisms of traditional blockchains, but instead conducts all consensus processes off-chain.

What are the benefits of this? Because there is no need for a complex consensus process, each server can focus solely on processing its own computational tasks. This allows the system to handle almost unlimited transactions, and the operating costs are also lower.
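The division of labor described above can be sketched in a few lines. This is a toy model under stated assumptions, not an SCP implementation: `StorageChain` stands in for the blockchain used purely as storage, `OffChainNode` stands in for an off-chain server, and the key-value entries are a hypothetical application format.

```python
class StorageChain:
    """Stand-in for the on-chain storage layer: append-only, no execution."""

    def __init__(self):
        self._entries = []

    def append(self, entry):
        self._entries.append(dict(entry))  # store a copy
        return len(self._entries) - 1      # position in the log

    def read_from(self, start):
        return [dict(e) for e in self._entries[start:]]


class OffChainNode:
    """Off-chain server: pulls stored entries and computes state locally."""

    def __init__(self, chain):
        self.chain = chain
        self.cursor = 0   # how far into the log this node has read
        self.state = {}

    def sync(self):
        for entry in self.chain.read_from(self.cursor):
            self.state[entry["key"]] = entry["value"]
            self.cursor += 1
        return self.state


chain = StorageChain()
early = OffChainNode(chain)
late = OffChainNode(chain)

chain.append({"key": "name", "value": "scp"})
early.sync()                       # early node keeps up as data arrives
chain.append({"key": "name", "value": "ao"})
chain.append({"key": "layer", "value": "arweave"})
early.sync()
late.sync()                        # late node catches up in one pass
```

The two nodes never talk to each other, yet they end in the same state, because both apply the same deterministic rule to the same stored log. Adding more nodes adds computing capacity without adding any work to the storage layer.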

Although this design is somewhat similar to the currently popular Rollup scaling solutions, its goals are much larger: it is not just meant to solve the blockchain scalability issue, but also to provide a new path for the transition from Web2 to Web3.

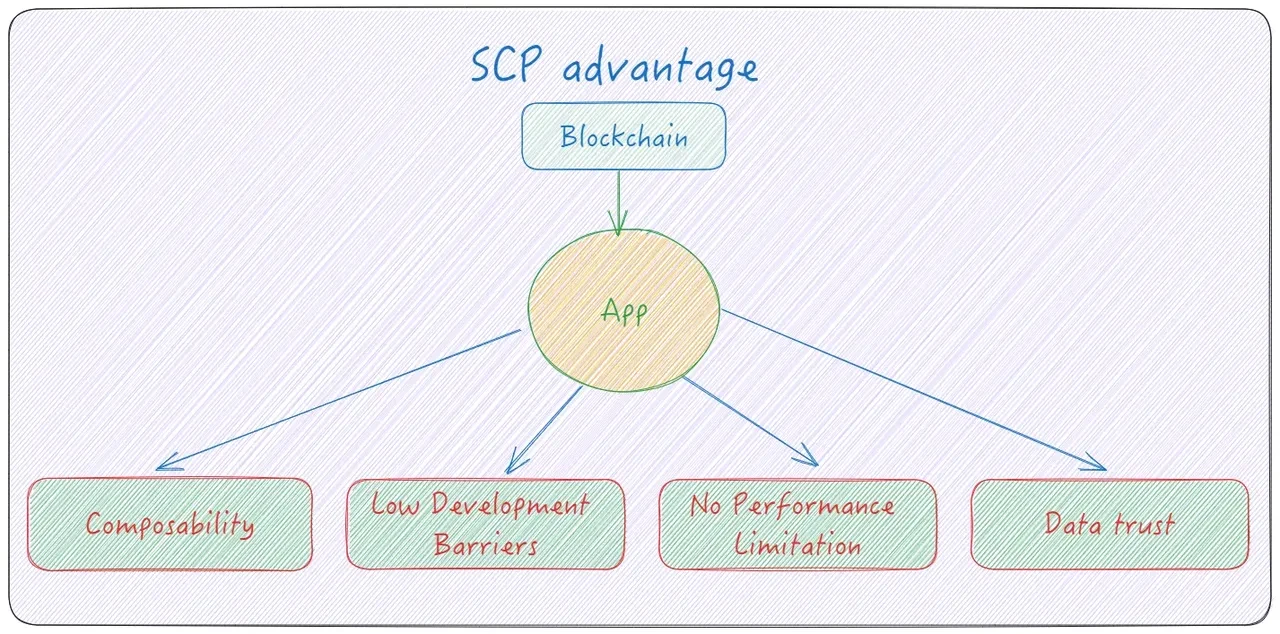

Having said so much, what advantages does SCP have? SCP decouples computation and storage. This design not only enhances the system's flexibility and composability but also lowers the development threshold, effectively addressing the performance limitations of traditional blockchains while ensuring data credibility. Such innovation makes SCP an efficient and scalable infrastructure, empowering the future decentralized ecosystem.

Composability: SCP places computation off-chain, which prevents it from polluting the essence of the blockchain, allowing the blockchain to maintain its "atomic" properties. At the same time, with computation off-chain, the blockchain only bears the function of storage, meaning that any smart contract can be executed, and migrating applications based on SCP becomes extremely simple, which is very important.

Low Development Barriers: Off-chain computation allows developers to use any programming language for development, whether it be C++, Python, or Rust, without the need to specifically use EVM with Solidity. The only cost for programmers may be the API costs for interacting with the chain.

No Performance Limitations: Off-chain computation aligns computational power directly with traditional applications, where the performance ceiling depends on the machine performance of the computing servers. Traditional elastic scaling of computing resources is a very mature technology, and without considering the costs of computing machines, computational power is virtually unlimited.

Credible Data: Since the basic function of "storage" is handled by the blockchain, this means that all data is immutable and traceable. If any node doubts the state results, it can pull data for recalculation. Thus, the blockchain endows data with credible characteristics.

Bitcoin proposed the PoW solution in response to the "Byzantine Generals Problem," which was Satoshi Nakamoto's unconventional approach in that environment, leading to the success of Bitcoin.

Similarly, when facing the computation of smart contracts, we start from first principles. This may seem like a counterintuitive solution, but when we boldly move computation off-chain and return the blockchain to its essence, we find that storage consensus is satisfied, the data remains open and subject to trustworthy supervision, and performance can be fully on par with Web2. This is SCP.

The Combination of SCP and AO: Breaking Free from Shackles

After all this, we finally arrive at AO.

First, AO's design adopts the Actor Model, a concurrency model proposed by Carl Hewitt in the 1970s and popularized by the Erlang programming language.

At the same time, AO's architecture and technology are based on the SCP paradigm, separating the computation layer from the storage layer, making the storage layer permanently decentralized while maintaining the traditional model for the computation layer.

AO's computational resources are similar to traditional computing models, but it adds a permanent storage layer, making the computation process traceable and decentralized.

At this point, you may wonder, what is the storage layer used by AO?

Clearly, the main chains used for the storage layer cannot be Bitcoin or Ethereum, and the reasons for this have been discussed above. I believe readers can easily understand this point. The final data storage and verifiability issues of AO's computations are handled by Arweave.

So, among so many decentralized storage tracks, why choose Arweave?

The choice of Arweave as the storage layer is primarily based on the following considerations: Arweave is a decentralized network focused on permanent data storage, positioned similarly to a "global hard drive that never loses data," which differs from Bitcoin's "global ledger" and Ethereum's "global computer."

For more technical details about Arweave, please refer to: “Understanding Arweave: The Key Infrastructure of Web3.”

Next, we will focus on discussing the principles and technologies of AO to see how AO achieves infinite computation.

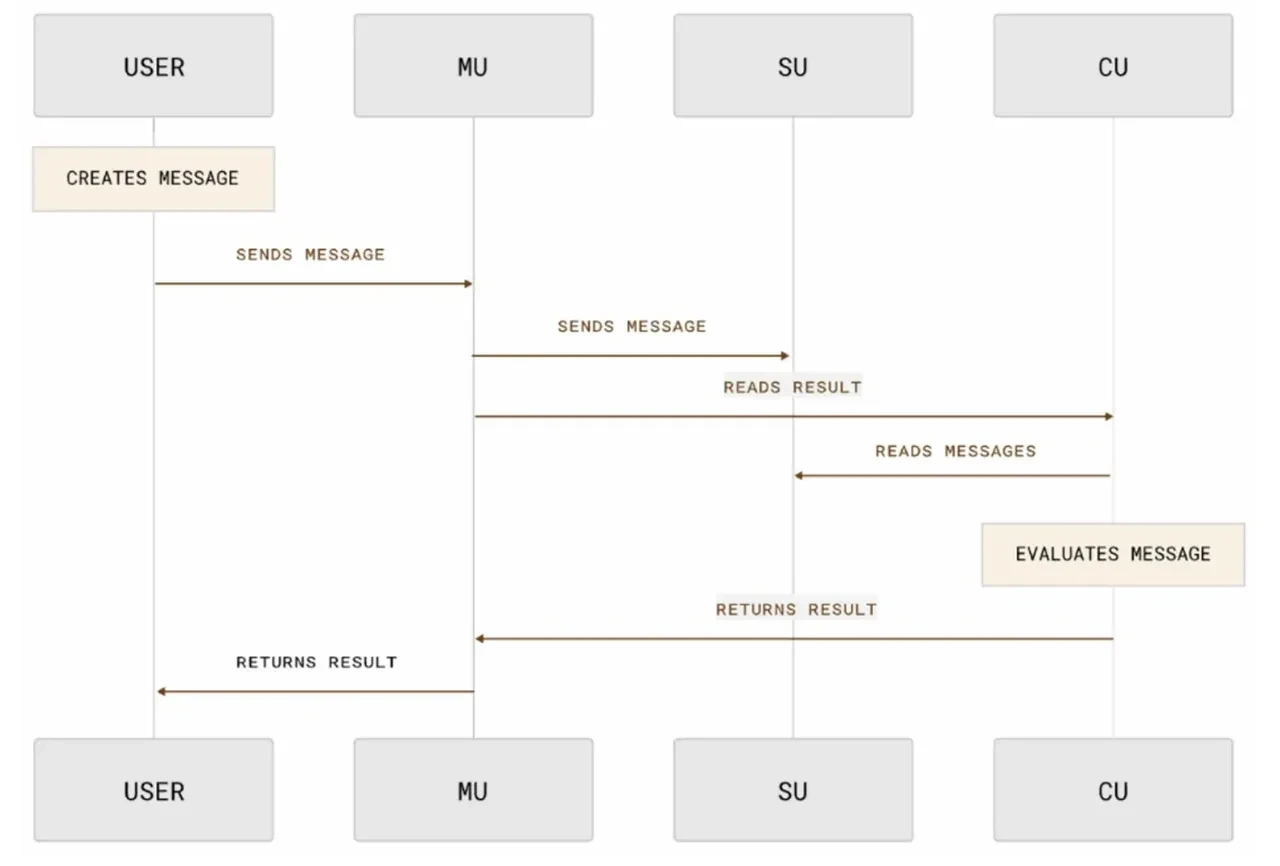

[Data Source: AO Messenger's Working Principle | Manual]

The core of AO is to build an infinitely scalable and environment-independent computation layer. The various nodes of AO collaborate based on protocols and communication mechanisms, allowing each node to provide optimal services and avoid competitive consumption.

First, let’s understand the basic architecture of AO, which consists of processes and messages as the two basic units, along with scheduling units (SU), computing units (CU), and messenger units (MU):

Process: The computational unit of nodes in the network, used for data computation and message processing. For example, each contract can be a process.

Message: Processes interact through messages, with each message being data that conforms to the ANS-104 standard. The entire AO must adhere to this standard.

Scheduling Unit (SU): Responsible for numbering the messages of processes, allowing processes to be sorted, and is also responsible for uploading messages to Arweave.

Computing Unit (CU): The state node in the AO process, responsible for executing computational tasks and returning the results and signatures to the SU, ensuring the correctness and verifiability of the computation results.

Messenger Unit (MU): The routing entity within the node, responsible for delivering user messages to the SU and verifying the integrity of the signed data.
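The roles listed above can be sketched as a small pipeline. This is a simplified illustration and not the AO implementation: the `ARWEAVE` list stands in for permanent storage, HMAC with a shared key stands in for real signatures, the sequence number is global rather than per process, and the counter payload format is hypothetical.

```python
import hashlib
import hmac
import json

ARWEAVE = []  # stand-in for permanent storage; a plain list here


def sign(secret, payload):
    """Toy signature: HMAC over a canonical JSON encoding (an assumption)."""
    body = json.dumps(payload, sort_keys=True).encode()
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def messenger_unit(message, secret):
    """MU: check the signature, then pass the message on to the SU."""
    expected = sign(secret, message["payload"])
    if not hmac.compare_digest(expected, message["signature"]):
        raise ValueError("bad signature")
    return scheduling_unit(message)


def scheduling_unit(message):
    """SU: assign the next sequence number and upload to storage."""
    ordered = {"seq": len(ARWEAVE), **message}
    ARWEAVE.append(ordered)
    return ordered["seq"]


def computing_unit(process_id):
    """CU: rebuild a process's state by evaluating its stored messages in order."""
    total = 0
    for m in ARWEAVE:
        if m["payload"]["process"] == process_id:
            total += m["payload"]["add"]
    return total


SECRET = b"demo-key"  # hypothetical key shared by sender and MU


def make_message(process, add):
    payload = {"process": process, "add": add}
    return {"payload": payload, "signature": sign(SECRET, payload)}


messenger_unit(make_message("counter", 2), SECRET)
messenger_unit(make_message("counter", 5), SECRET)
result = computing_unit("counter")
```

In this sketch the CU holds no state of its own: it derives the counter value entirely from the ordered messages in storage, so any other CU reading the same messages would derive the same value.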

It is important to note that AO does not have shared states, only holographic states. Consensus in AO is reached through a game-theoretic process: the state produced by each computation is uploaded to Arweave, ensuring data verifiability. When a user questions a piece of data, they can request one or more nodes to recompute it from the data on Arweave. If the results do not match, the corresponding dishonest nodes will be penalized.

Innovation of AO Architecture: Storage and Holographic State

The innovation of the AO architecture lies in its data storage and verification mechanism, which replaces the redundant computation and limited block space of traditional blockchains by utilizing decentralized storage (Arweave) and holographic states.

Holographic State: In the AO architecture, the "holographic state" generated by each computation is uploaded to the decentralized storage network (Arweave). This "holographic state" is not just a simple record of transaction data; it contains the complete state and related data of each computation. This means that every computation and result will be permanently recorded and can be verified at any time. The holographic state serves as a "data snapshot," providing a distributed and decentralized data storage solution for the entire network.

Storage Verification: In this model, data verification no longer relies on each node recalculating all transactions but confirms the validity of transactions by storing and comparing the data uploaded to Arweave. When the computation results produced by a node do not match the data stored on Arweave, users or other nodes can initiate a verification request. At this point, the network will recalculate the data and check the storage records in Arweave. If the computation results are inconsistent, the node will be penalized, ensuring the integrity of the network.

Breaking the Block Space Limitation: The block space of traditional blockchains is limited by storage constraints, with each block containing only a finite number of transactions. In the AO architecture, data is no longer directly stored in blocks but uploaded to a decentralized storage network (like Arweave). This means that the storage and verification of the blockchain network no longer depend on the size of the block space but are shared and expanded through decentralized storage. Therefore, the capacity of the blockchain system is no longer directly limited by block size.
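The storage verification step described above can be sketched as a challenge routine. The source only states that inconsistent nodes are penalized; the stake table, the fixed `penalty`, and the `audit` function below are hypothetical details added to make the idea concrete.

```python
def audit(messages, claims, evaluate, stakes, penalty):
    """Recompute the result from stored messages and penalize mismatches.

    messages: the immutable inputs read back from storage.
    claims:   node name -> result that node reported.
    evaluate: the deterministic function every node was supposed to run.
    stakes:   node name -> stake, reduced in place for dishonest nodes.
    """
    expected = evaluate(messages)
    dishonest = []
    for node, claimed in claims.items():
        if claimed != expected:
            stakes[node] = max(0, stakes[node] - penalty)
            dishonest.append(node)
    return expected, dishonest


# Hypothetical dispute: two computing nodes report different results.
stored = [3, 4, 5]
claims = {"cu-1": 12, "cu-2": 99}
stakes = {"cu-1": 100, "cu-2": 100}
expected, dishonest = audit(stored, claims, sum, stakes, penalty=40)
```

Recomputation happens only when someone raises a challenge, which is the contrast with every node re-executing every transaction.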

The block space limitations of blockchains are not insurmountable. The AO architecture changes the traditional data storage and verification methods of blockchains by relying on decentralized storage and holographic states, thus providing the possibility for infinite scalability.

Does consensus necessarily rely on redundant computation?

Not necessarily. Consensus mechanisms do not have to rely on redundant computation; they can be achieved in various ways. Solutions that depend on storage rather than redundant computation are also feasible in certain scenarios, especially when the integrity and consistency of data can be ensured through storage verification.

In the architecture of AO, storage becomes an alternative to redundant computation. By uploading computation results to a decentralized storage network (in this case, Arweave), the system can ensure the immutability of data, and through the holographic upload of states, any node can verify computation results at any time, ensuring data consistency and correctness. This approach relies on the reliability of data storage rather than the repeated computation results of each node.

Next, let’s look at a table to see the differences between AO and ETH:

It is not difficult to see that the core characteristics of AO can be summarized in two points:

Large-scale parallel computation: Supports countless processes running in parallel, significantly enhancing computational capacity.

Minimized trust dependency: No need to trust any single node; all computation results can be infinitely reproduced and traced.

How AO Breaks the Deadlock: The Dilemma of Public Chains Led by Ethereum

Regarding the two major dilemmas faced by Ethereum, the performance shackles and insufficient applications, the author believes that this is precisely where AO excels, for the following reasons:

AO is designed based on the SCP paradigm, where computation and storage are separated. Therefore, in terms of performance, it is no longer comparable to Ethereum's single-process computation. AO can flexibly scale more computational resources based on demand, and the holographic state of message logs in Arweave allows AO to ensure consensus by reproducing computation results, which, from a security perspective, is on par with Ethereum and Bitcoin.

The message-passing parallel computing architecture allows AO's processes to avoid competing for "locks." In Web2 development, it is well known that a high-performance service will try to avoid lock contention, as this can be very costly for an efficient service. Similarly, AO processes avoid lock contention through messages, which aligns with this thinking, allowing its scalability to reach any scale.

The modular architecture of AO is reflected in the separation of CU, SU, and MU, which allows AO to adopt any virtual machine, sorter, etc. This provides extremely convenient and low-cost migration and development for DApps across different chains. Combined with Arweave's efficient storage capabilities, DApps developed on it can offer richer functionality; for example, personal information graphs can be easily implemented on AO.

The support of the modular architecture enables Web3 to adapt to the policy requirements of different countries and regions. Although the core concept of Web3 is decentralization and deregulation, it is inevitable that the varying policies of different countries have a profound impact on the development and promotion of Web3. The flexible modular combination can be adapted according to the policies of different regions, thereby ensuring the robustness and sustainable development of Web3 applications to a certain extent.
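The lock-free message passing mentioned in the list above can be illustrated with a small actor. This is a generic sketch of the actor pattern, not AO code. `CounterActor` and `run_demo` are hypothetical names, and Python's `queue.Queue` synchronizes internally, so the point is only that application state is never shared between threads.

```python
import queue
import threading


class CounterActor:
    """Owns its state privately; the only way in is the mailbox."""

    def __init__(self):
        self.mailbox = queue.Queue()
        self.count = 0
        self._thread = threading.Thread(target=self._run)
        self._thread.start()

    def _run(self):
        while True:
            message = self.mailbox.get()
            if message is None:      # sentinel: stop the actor
                break
            self.count += message    # only this thread touches count

    def send(self, message):
        self.mailbox.put(message)

    def stop(self):
        self.mailbox.put(None)
        self._thread.join()
        return self.count


def run_demo(senders=4, messages_each=250):
    """Several threads send increments; none of them touches the counter."""
    actor = CounterActor()

    def sender():
        for _ in range(messages_each):
            actor.send(1)

    threads = [threading.Thread(target=sender) for _ in range(senders)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return actor.stop()
```

No sender ever reads or writes `count`, so the application code needs no lock around it. Scaling out means adding more actors, each with its own mailbox and state.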

Conclusion

The separation of computation and storage is a great idea and a systematic design based on first principles.

As a narrative direction similar to "decentralized cloud services," it not only provides good landing scenarios but also offers a broader imaginative space for integrating AI.

In fact, only by truly understanding the fundamental needs of Web3 can we break free from the dilemmas and shackles brought about by path dependence.

The combination of SCP and AO provides a brand new idea: it inherits all the characteristics of SCP, no longer deploying smart contracts on-chain, but storing immutable and traceable data on-chain, achieving verifiable data credibility for everyone.

Of course, there is currently no absolutely perfect path, and AO is still in its nascent development stage. How to avoid the over-financialization of Web3, create enough application scenarios, and bring richer possibilities for the future remains a test on the road to AO's success. Whether AO can deliver a satisfactory answer still needs to be tested by the market and time.

Although the concept has not yet been widely recognized in the market, the combination of SCP and AO is a promising development paradigm that is expected to play an important role in the Web3 field and may even drive its further development.

This article was first published on PermaDAO

Original link: https://mp.weixin.qq.com/s/r5bhvWVhoEdohbhTt7b5A_
