Aleo is a blockchain project focused on privacy protection, achieving higher privacy and scalability through zero-knowledge proof (ZKP) technology. The core idea of Aleo is to allow users to verify their identities and process data without revealing personal data.

This article gives an overview of the Aleo project and its latest developments, and explains in detail the puzzle algorithm that the market is watching closely.

Latest Algorithm Preview

The Aleo network randomly generates a ZK circuit every hour. Within that hour, miners try different nonces as inputs to the circuit, compute the witness (all variables in the circuit; this step is also known as synthesis), compute the Merkle root of the witness, and check whether it meets the mining difficulty requirement. Because the circuit is random, this mining algorithm is not GPU-friendly and is hard to accelerate.

Funding Background

In 2021, Aleo completed a $28 million Series A financing led by a16z, and in 2022 it completed a $200 million Series B financing, with investors including Kora Management, SoftBank Vision Fund 2, Tiger Global, Sea Capital, Slow Ventures, and Samsung Next, among others. This round valued Aleo at $1.45 billion.

Project Overview

Privacy

At Aleo's core is zero-knowledge proof (ZKP) technology, which allows transactions and smart contract execution to occur while maintaining privacy. By default, transaction details such as the sender and the transaction amount are hidden. This design protects user privacy while still allowing selective disclosure when necessary, which makes it well suited to DeFi applications. Its main components include:

Leo programming language: Inspired by Rust, it is designed specifically for developing zero-knowledge applications (ZKApps) and reduces the cryptographic knowledge developers need.

snarkVM and snarkOS: snarkVM allows off-chain computation with on-chain result verification, improving efficiency. snarkOS ensures the security of data and computation, allowing for permissionless functionality execution.

zkCloud: Provides a secure and private off-chain computing environment, supporting programming interactions between users, organizations, and DAOs.

Aleo also provides an integrated development environment (IDE) and a software development kit (SDK) to support developers in quickly writing and deploying applications. Additionally, developers can deploy applications in Aleo's program registry without relying on third parties, thereby reducing platform risk.

Scalability

Aleo adopts an off-chain processing approach, where transactions are first computed for proof on user devices and then only the verification results are uploaded to the blockchain. This significantly improves transaction processing speed and system scalability, avoiding network congestion and high fees similar to those on Ethereum.

Consensus Mechanism

Aleo introduces AleoBFT, a hybrid consensus mechanism that combines the instant finality of validators and the computational power of provers. AleoBFT not only enhances the decentralization of the network but also improves performance and security.

Rapid block finality: AleoBFT ensures that each block is immediately confirmed after generation, enhancing node stability and user experience.

Decentralization guarantee: By separating block production from coinbase generation, validators are responsible for block generation, while provers perform proof computation, preventing network monopolization by a minority entity.

Incentive mechanism: Validators and provers share block rewards; provers are encouraged to become validators by staking tokens, thereby enhancing the network's decentralization and computational power.

Aleo allows developers to create applications without gas limitations, making it particularly suitable for applications requiring long-term execution, such as machine learning.

Current Progress

Aleo will launch an incentive testnet on July 1st, and here are some important recent updates:

Approval of ARC-100: The voting for ARC-100 ("Best Practices for Aleo Developers and Operators Compliance," involving compliance, locking funds on the Aleo network, and delayed payments) has been completed and approved. The team is making final adjustments.

Validator Incentive Program: This program will launch on July 1st to verify the new puzzle mechanism. It will run until July 15th, during which 1 million Aleo points will be allocated as rewards. The percentage of points generated by nodes will determine their reward share, and each validator must earn at least 100 tokens to be eligible for rewards. Specific details are yet to be finalized.

Initial supply and circulating supply: The initial supply is 1.5 billion tokens, with an initial circulating supply of approximately 10% (yet to be finalized). These tokens mainly come from coinbase rewards (75 million) and will be distributed within the first six months, including rewards for staking, running validators, and validating.

Testnet Beta reset: This is the final network reset, after which no new features will be added, and the network will be similar to the mainnet. The reset is to add ARC-41 and new puzzle features.

Code freeze: The code freeze was completed a week ago.

Validator expansion plan: The initial number of validators is 15, with the goal of increasing to 50 within the year and ultimately reaching 500. To become a delegator, one needs 10,000 tokens, and to become a validator, one needs 10 million tokens, with these amounts gradually decreasing over time.

Algorithm Update Interpretation

Aleo recently announced its latest testnet news and released a new version of the puzzle algorithm, which no longer focuses on generating the zk proof itself. It removes the MSM and NTT calculations (both are computational modules used heavily in zk proof generation, and participants in the previous testnet optimized them to increase mining revenue). Instead, the new algorithm focuses on generating the witness, the intermediate data produced before the proof. Based on the official puzzle spec and code, we briefly introduce the latest algorithm below.

Consensus Process

At the consensus protocol level, provers generate solutions (the computation results), and validators aggregate and package those solutions into the next new block. The process is as follows:

Prover computes puzzle to build solutions and broadcasts them to the network.

Validator aggregates transactions and solutions into the next new block, ensuring that the number of solutions does not exceed the consensus limit (MAX_SOLUTIONS).

Each solution's validity must be verified: its epoch_hash must match the latest_epoch_hash maintained by the validator, its computed proof_target must meet the latest_proof_target maintained by the network's validators, and the number of solutions in the block must be less than the consensus limit.
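The three checks above can be sketched in a few lines. This is only an illustration, not the snarkOS implementation: the `Solution` fields, the `MAX_SOLUTIONS` value, and the reading of the target check as "greater than or equal to" are all assumptions made for the example.

```python
from dataclasses import dataclass

# Hypothetical consensus limit, chosen only for this illustration.
MAX_SOLUTIONS = 4


@dataclass(frozen=True)
class Solution:
    """Hypothetical solution record; field names follow the article's terms."""
    address: str
    epoch_hash: str
    counter: int
    proof_target: int


def is_valid_solution(solution, latest_epoch_hash, latest_proof_target,
                      solutions_in_block):
    """Apply the three validity checks described in the text."""
    # 1. The solution must belong to the current epoch.
    if solution.epoch_hash != latest_epoch_hash:
        return False
    # 2. The computed target must meet the network target
    #    (assumed here to mean "greater than or equal to").
    if solution.proof_target < latest_proof_target:
        return False
    # 3. The block must still have room below the consensus limit.
    return solutions_in_block < MAX_SOLUTIONS
```

A validator in this sketch would call `is_valid_solution` once per incoming solution before adding it to the block it is assembling.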

Valid solutions are eligible for consensus rewards.

Synthesis Puzzle

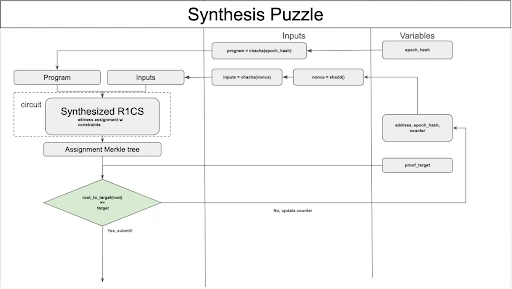

The core of the latest algorithm is called the Synthesis Puzzle. It generates a common EpochProgram for each epoch. A prover builds an R1CS circuit from the input and the EpochProgram, generates the corresponding R1CS assignment (the witness) to serve as the leaf nodes of a Merkle tree, calculates the Merkle root over all leaf nodes, and converts it into the solution's proof_target. The detailed process and specifications for building the Synthesis Puzzle are as follows:

Each puzzle calculation is identified by a nonce, which is constructed from the address receiving mining rewards, the epoch_hash, and a random counter. A new nonce is obtained by updating the counter each time a new solution needs to be calculated.
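As a rough illustration of how a nonce can bind these three values together, the sketch below hashes them into a 64-bit integer. The function name `derive_nonce`, the byte layout, and the use of SHA-256 are assumptions for the example; the text does not say which hash or encoding Aleo actually uses.

```python
import hashlib


def derive_nonce(address: str, epoch_hash: bytes, counter: int) -> int:
    """Hypothetical nonce derivation: hash(address || epoch_hash || counter).

    SHA-256 is a stand-in here, not the hash used by Aleo.
    """
    data = address.encode("utf-8") + epoch_hash + counter.to_bytes(8, "little")
    digest = hashlib.sha256(data).digest()
    # Keep the first 8 bytes so the nonce fits in an unsigned 64-bit integer.
    return int.from_bytes(digest[:8], "little")
```

Because the reward address is part of the input, two provers using the same counter in the same epoch still work on different nonces.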

In each epoch, all provers in the network need to calculate the same EpochProgram, which is sampled from the instruction set using a random number generated from the current epoch_hash. The sampling logic is as follows:

- The instruction set is fixed, and each instruction contains one or more computational operations, with a preset weight and operation count for each instruction.

- When sampling, a random number is generated based on the current epoch_hash, and instructions are sampled from the instruction set based on this random number combined with the weights, and arranged in order until the cumulative operation count reaches 97.
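The sampling logic can be sketched as a seeded, weighted draw. The instruction names, weights, and operation counts below are invented for illustration, and SHA-256 plus Python's `random.Random` stand in for whatever seeding scheme the real implementation uses. In this sketch the loop stops as soon as the cumulative operation count reaches or passes 97.

```python
import hashlib
import random

# Hypothetical instruction set: (name, weight, operation count).
INSTRUCTION_SET = [("add", 3, 1), ("mul", 2, 2), ("hash", 1, 4)]
TARGET_OPS = 97  # cumulative operation count named in the text


def sample_epoch_program(epoch_hash: bytes):
    """Sample instructions by weight, seeded by epoch_hash, until the
    cumulative operation count reaches TARGET_OPS."""
    # Same epoch_hash gives the same seed, so every prover gets the same program.
    seed = int.from_bytes(hashlib.sha256(epoch_hash).digest(), "big")
    rng = random.Random(seed)
    weights = [weight for _, weight, _ in INSTRUCTION_SET]
    program, ops = [], 0
    while ops < TARGET_OPS:
        name, _, count = rng.choices(INSTRUCTION_SET, weights=weights)[0]
        program.append(name)
        ops += count
    return program, ops
```

Seeding only from `epoch_hash` is what makes the program common to all provers within an epoch and different from one epoch to the next.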

Use the nonce as the random seed to generate the input for the EpochProgram.

Aggregate the R1CS corresponding to the EpochProgram and the input, and calculate the witness (R1CS assignment).

After all witnesses are calculated, they are converted into the sequence of leaf nodes of the corresponding Merkle tree. The Merkle tree is a K-ary Merkle tree with an arity of 8 and a depth of 8.
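A minimal sketch of a root computation for such a tree follows. It assumes SHA-256 as the hash, a one-byte prefix to separate leaf and node hashes, and padding of missing positions with an "empty" hash; none of these choices come from the Aleo spec. Only the arity and depth are taken from the text.

```python
import hashlib

ARITY = 8  # children per node, from the text
DEPTH = 8  # levels above the leaves, from the text


def _hash(prefix: bytes, data: bytes) -> bytes:
    # SHA-256 is a stand-in; the prefix separates leaf hashes from node hashes.
    return hashlib.sha256(prefix + data).digest()


def merkle_root(leaves: list[bytes], arity: int = ARITY, depth: int = DEPTH) -> bytes:
    """Root of a fixed-depth K-ary Merkle tree, padded with empty subtrees."""
    if len(leaves) > arity ** depth:
        raise ValueError("too many leaves for this tree")
    level = [_hash(b"\x00", leaf) for leaf in leaves]
    empty = _hash(b"\x00", b"")  # hash standing in for a missing leaf
    for _ in range(depth):
        if not level:
            level = [empty]
        # Pad the level to a multiple of the arity with empty-subtree hashes.
        level += [empty] * ((-len(level)) % arity)
        level = [_hash(b"\x01", b"".join(level[i:i + arity]))
                 for i in range(0, len(level), arity)]
        # Hash of an entirely empty subtree one level higher.
        empty = _hash(b"\x01", empty * arity)
    return level[0]
```

With arity 8 and depth 8 the tree has room for 8^8 = 16,777,216 leaves; tracking one empty-subtree hash per level avoids materializing the unused ones.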

Calculate the Merkle root and convert it into the solution's proof_target. Check whether it meets the latest_proof_target for the current epoch. If it does, the calculation is successful, and the reward address, epoch_hash, and counter used to construct the input are submitted as the solution and broadcast.

Within the same epoch, the input for the EpochProgram can be updated multiple times for solution calculation by iterating the counter.
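Put together, the search within one epoch is a loop over counters. The sketch below is heavily simplified: `puzzle_root` replaces the whole synthesis and Merkle step with a single SHA-256 hash, the counter is iterated sequentially rather than chosen at random, and the conversion in `to_proof_target` (dividing the 64-bit maximum by the first 8 bytes of the root) is an assumption about how a root might map to a target, not a statement of the official formula.

```python
import hashlib

U64_MAX = 2**64 - 1


def puzzle_root(address: str, epoch_hash: bytes, counter: int) -> bytes:
    """Stand-in for 'synthesize the witness and take its Merkle root'."""
    data = address.encode("utf-8") + epoch_hash + counter.to_bytes(8, "little")
    return hashlib.sha256(data).digest()


def to_proof_target(root: bytes) -> int:
    """Assumed conversion: a smaller root value gives a larger target."""
    value = int.from_bytes(root[:8], "little")
    return U64_MAX if value == 0 else U64_MAX // value


def mine(address: str, epoch_hash: bytes, latest_proof_target: int,
         max_attempts: int):
    """Iterate the counter until a proof target meets the network target."""
    for counter in range(max_attempts):
        target = to_proof_target(puzzle_root(address, epoch_hash, counter))
        if target >= latest_proof_target:
            # The address, epoch_hash, and counter would form the solution.
            return counter, target
    return None  # no solution found within the attempt budget
```

For example, `mine("aleo1example", b"epoch", 4, 10_000)` returns the first counter whose target is at least 4, while a higher `latest_proof_target` makes each attempt proportionally less likely to succeed.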

Changes and Impacts on Mining

After this update, the puzzle has shifted from generating a proof to generating a witness. Within the same epoch, every solution is calculated with the same logic, but the logic differs significantly from one epoch to the next.

In previous testnets, many optimizations used GPUs to speed up the MSM and NTT calculations in the proof generation stage and thereby improve mining efficiency. This update removes that part of the calculation entirely. In addition, because the witness is generated by executing a program that changes with each epoch, and the instructions in that program have serial execution dependencies, parallelization is significantly harder to achieve.
