Original author: Jane Doe, Chen Li

Original source: Youbi Capital

1 Intersection of AI and Crypto

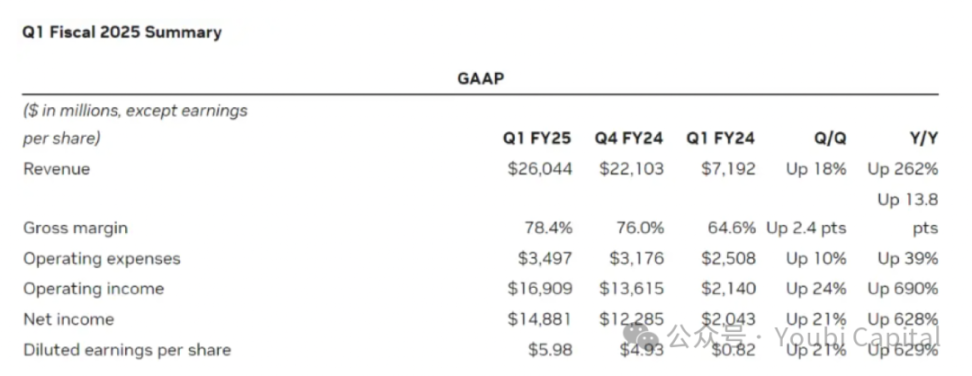

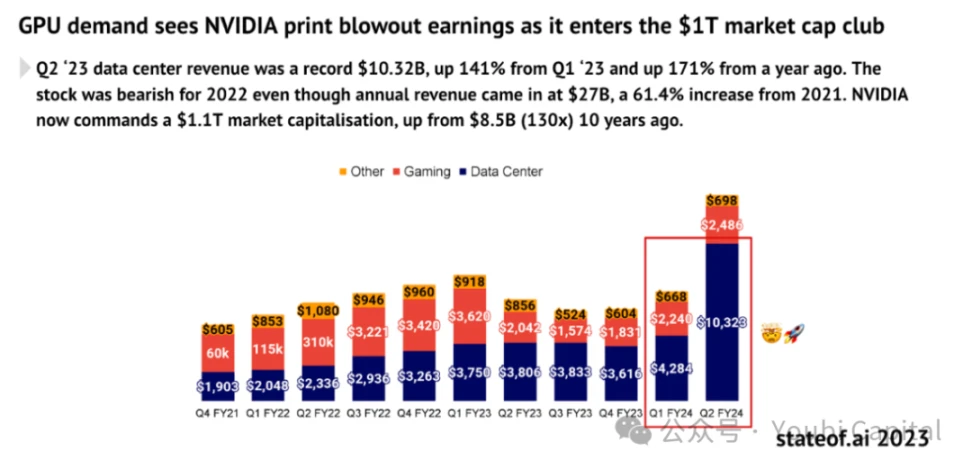

On May 23, chip giant NVIDIA released its financial report for the first quarter of fiscal year 2025. First-quarter revenue was $26 billion, of which data center revenue reached an astonishing $22.6 billion, up 427% year over year. That NVIDIA could single-handedly lift the financial results of the US stock market reflects the explosive demand for computing power as global technology companies race into AI. The more top technology companies invest in their AI ambitions, the more steeply their demand for computing power rises. According to TrendForce's forecast, in 2024 the four major US cloud service providers, Microsoft, Google, AWS, and Meta, are expected to account for 20.2%, 16.6%, 16%, and 10.8% of global demand for high-end AI servers respectively, a combined share of over 60%.

Image source: https://investor.nvidia.com/financial-info/financial-reports/default.aspx

"The chip shortage" has been a hot topic in recent years. On the one hand, the training and inference of large language models (LLMs) require a large amount of computing power support; and as the models iterate, the cost and demand for computing power increase exponentially. On the other hand, large companies like Meta purchase a huge amount of chips, causing global computing resources to tilt towards these tech giants, making it increasingly difficult for small businesses to obtain the computing power they need. The dilemma faced by small businesses stems not only from the shortage of supply caused by the surge in demand, but also from structural contradictions in the supply. Currently, there are still a large number of idle GPUs on the supply side. For example, some data centers have a large amount of idle computing power (with a utilization rate of only 12% to 18%), and a large amount of computing power resources are idle in cryptocurrency mining due to reduced profits. Although not all of this computing power is suitable for professional applications such as AI training, consumer-grade hardware can still play a huge role in other areas, such as AI inference, cloud gaming rendering, and cloud phones. The opportunity to integrate and utilize this computing power resource is enormous.

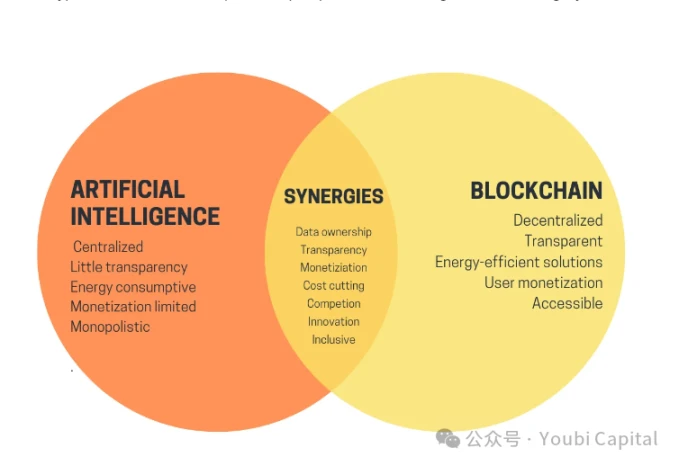

Turning from AI to crypto: after three years of silence, the cryptocurrency market has finally entered another bull market, with the price of Bitcoin repeatedly hitting new highs and memecoins emerging endlessly. Although AI and crypto have both been hot buzzwords in recent years, artificial intelligence and blockchain, as two important technologies, have run like two parallel lines and have yet to find an "intersection." Earlier this year, Vitalik published an article titled "The promise and challenges of crypto + AI applications," discussing future scenarios that combine AI and crypto. He outlined many visions, including using blockchain and cryptographic techniques such as MPC to decentralize AI training and inference, which could open up the black box of machine learning and make AI models more trustless, i.e., usable without trusting a single party. These visions still have a long way to go. However, one of the use cases Vitalik mentioned, using crypto's economic incentives to empower AI, is an important direction that can be realized in the near term. Decentralized computing power networks are currently one of the scenarios best suited to AI + crypto.

2 Decentralized Computing Power Network

Many projects are currently being developed in the field of decentralized computing power networks. Their underlying logic is similar and can be summarized as follows: use tokens to incentivize holders of computing power to join the network and provide computing services. These scattered resources are aggregated into a decentralized computing power network of meaningful scale. This not only raises the utilization of idle computing power but also meets customers' computing needs at lower cost, a win-win for both buyers and sellers.
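The buyer/seller matching at the heart of this logic can be illustrated with a minimal sketch. It is a generic greedy matcher under stated assumptions, not the mechanism of any project discussed here: `Offer`, `Request`, and `match` are hypothetical names, prices are in arbitrary units per GPU-hour, and each fill settles at the provider's asking price.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    """Supply side: a provider listing idle GPU-hours (hypothetical type)."""
    provider: str
    gpu_hours: int
    price: float  # asking price per GPU-hour, arbitrary units


@dataclass
class Request:
    """Demand side: a customer that needs GPU-hours (hypothetical type)."""
    customer: str
    gpu_hours: int
    max_price: float  # highest price per GPU-hour the customer will pay


def match(offers, requests):
    """Greedily fill the highest-paying requests from the cheapest offers.

    Returns (customer, provider, gpu_hours, price) fills; a request may be
    split across several providers, and unmatched demand is simply dropped.
    """
    offers = sorted(offers, key=lambda o: o.price)           # cheapest supply first
    requests = sorted(requests, key=lambda r: -r.max_price)  # most eager buyers first
    remaining = [o.gpu_hours for o in offers]
    fills = []
    for req in requests:
        need = req.gpu_hours
        for i, off in enumerate(offers):
            if need == 0 or off.price > req.max_price:
                break  # request filled, or every later offer is too expensive
            qty = min(need, remaining[i])
            if qty == 0:
                continue  # this offer is already exhausted
            remaining[i] -= qty
            need -= qty
            fills.append((req.customer, off.provider, qty, off.price))
    return fills


offers = [Offer("A", 10, 1.0), Offer("B", 5, 1.5), Offer("C", 20, 3.0)]
requests = [Request("X", 12, 2.0), Request("Y", 8, 1.2)]
print(match(offers, requests))  # [('X', 'A', 10, 1.0), ('X', 'B', 2, 1.5)]
```

Here customer X is filled from A and B, while Y, who bids only 1.2, finds the cheap supply already taken. A production market would likely also need hardware filters, reputation, and settlement logic on top of any such rule.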

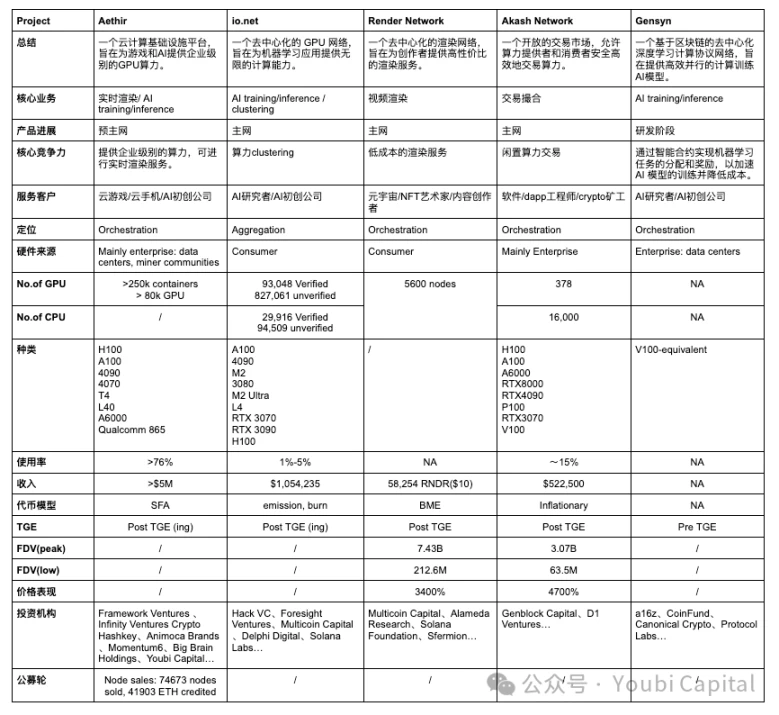

To give readers a quick overall understanding of this field, this article examines both individual projects and the field as a whole, from micro and macro perspectives, with the aim of offering an analytical lens for understanding each project's core competitive advantages and the overall development of decentralized computing power networks. The author introduces and analyzes five projects, Aethir, io.net, Render Network, Akash Network, and Gensyn, and then summarizes and evaluates the projects and the development of the field.

In terms of the analysis framework, if we focus on a specific decentralized computing power network, we can break it down into four core components:

Hardware network: Nodes distributed around the world pool scattered computing resources so that they can be shared and load-balanced. This forms the foundation of a decentralized computing power network.

Bilateral market: Matching computing power providers and demanders through reasonable pricing and discovery mechanisms, providing a secure trading platform to ensure transparent, fair, and trustworthy transactions for both supply and demand sides.

Consensus mechanism: Ensures that nodes in the network run correctly and complete their tasks. It mainly monitors two things: 1) liveness, i.e., whether nodes are online and active so that they can accept tasks at any time; 2) proof of work, i.e., whether a node has correctly completed the task it received without diverting the committed computing power to other purposes that occupy its processes and threads.

Token incentives: The token model incentivizes more participants to provide and use services, and uses the token to capture network effects so that the community shares in the benefits.
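The two checks the consensus mechanism performs, liveness and verification of completed work, can be sketched as follows. This is a simplified illustration under assumptions, not any project's actual protocol: liveness is modeled as a hypothetical heartbeat timeout, and work verification is approximated by randomly re-executing a deterministic task and comparing results. Real networks may instead rely on staking and slashing, hardware attestation, or cryptographic proofs.

```python
import hashlib
import random
from dataclasses import dataclass

HEARTBEAT_TIMEOUT_S = 60.0  # hypothetical liveness window in seconds


@dataclass
class Node:
    node_id: str
    last_heartbeat: float  # timestamp of the node's most recent heartbeat
    honest: bool = True    # simulation flag: dishonest nodes skip the work


def is_live(node: Node, now: float) -> bool:
    """Liveness check: the node has sent a heartbeat within the timeout."""
    return now - node.last_heartbeat <= HEARTBEAT_TIMEOUT_S


def run_task(node: Node, payload: bytes) -> str:
    """The 'work': a deterministic computation whose result can be re-checked."""
    if node.honest:
        return hashlib.sha256(payload).hexdigest()
    return "0" * 64  # a cheating node returns a fake result without computing


def audit(nodes, payload: bytes, now: float, sample_rate: float = 1.0, seed: int = 0):
    """Return (offline_ids, faulty_ids).

    Live nodes are spot-checked with probability `sample_rate` by comparing
    their result against a trusted re-execution of the same task.
    """
    rng = random.Random(seed)
    reference = hashlib.sha256(payload).hexdigest()
    offline, faulty = [], []
    for node in nodes:
        if not is_live(node, now):
            offline.append(node.node_id)
        elif rng.random() < sample_rate and run_task(node, payload) != reference:
            faulty.append(node.node_id)
    return offline, faulty


nodes = [Node("a", 990.0), Node("b", 900.0), Node("c", 995.0, honest=False)]
print(audit(nodes, b"render frame 42", now=1000.0))  # (['b'], ['c'])
```

Sampling with `sample_rate` below 1.0 trades verification cost against the chance of catching a cheater, which is why such schemes are usually paired with penalties large enough to make cheating unprofitable in expectation.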

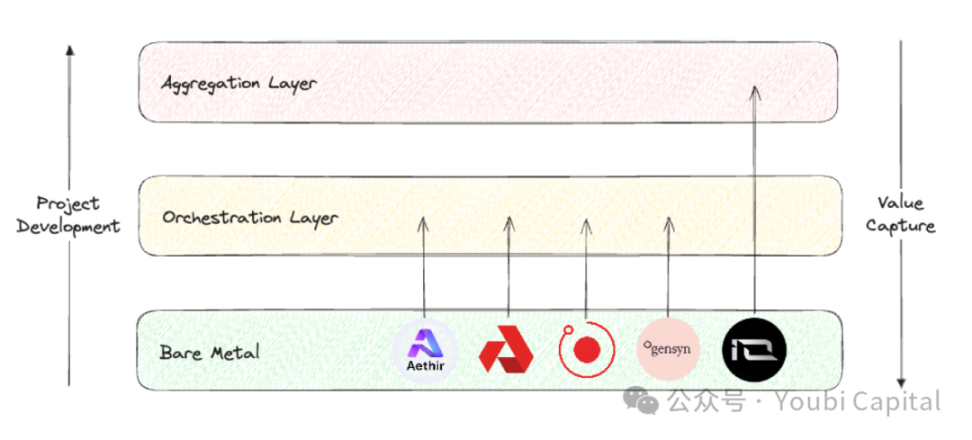

Taking a bird's-eye view of the entire decentralized computing power field, a Blockworks Research report provides a useful analytical framework, under which projects in this field can be placed in three different layers.

Bare metal layer: The foundational layer of the decentralized computing stack, mainly responsible for collecting raw computing power resources and enabling them to be called by APIs.

Orchestration layer: The intermediate layer of the decentralized computing stack, mainly responsible for coordination and abstraction: computing power scheduling, scaling, operation, load balancing, fault tolerance, etc. Its main role is to "abstract away" the complexity of managing the underlying hardware and to provide a higher-level interface for specific customer groups.

Aggregation layer: The top layer of the decentralized computing stack, mainly responsible for integration, providing a unified interface for users to perform various computing tasks in one place, such as AI training, rendering, zkML, and more. It acts as a layer for orchestrating and distributing multiple decentralized computing services.

Image source: Youbi Capital
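The three layers can be pictured as nested interfaces. The sketch below is a deliberately simplified model under assumptions, not a description of any project's architecture: all class and method names (`BareMetalProvider`, `Orchestrator`, `Aggregator`, `schedule`, `submit`) are hypothetical, and scheduling is a simple first-fit search.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GPU:
    gpu_id: str
    model: str
    busy: bool = False


class BareMetalProvider:
    """Bare metal layer: collects raw machines and exposes them through a simple API."""

    def __init__(self, name: str, gpus: list):
        self.name = name
        self.gpus = gpus

    def free_gpus(self) -> list:
        return [g for g in self.gpus if not g.busy]


class Orchestrator:
    """Orchestration layer: schedules jobs across providers for one customer segment,
    hiding which physical machine actually runs each job."""

    def __init__(self, providers: list, supported_models: set):
        self.providers = providers
        self.supported_models = supported_models

    def schedule(self, job: str) -> Optional[str]:
        for provider in self.providers:
            for gpu in provider.free_gpus():
                if gpu.model in self.supported_models:
                    gpu.busy = True
                    return f"{job} -> {provider.name}/{gpu.gpu_id}"
        return None  # no capacity; a real system would queue, retry, or fail over


class Aggregator:
    """Aggregation layer: one entry point that routes each task type to an orchestrator."""

    def __init__(self, routes: dict):
        self.routes = routes  # task type -> Orchestrator

    def submit(self, task_type: str, job: str) -> Optional[str]:
        if task_type not in self.routes:
            raise ValueError(f"unsupported task type: {task_type}")
        return self.routes[task_type].schedule(job)


dc = BareMetalProvider("dc-1", [GPU("g0", "H100"), GPU("g1", "A100")])
home = BareMetalProvider("home-7", [GPU("g0", "RTX 4090")])
agg = Aggregator({
    "training": Orchestrator([dc, home], {"H100", "A100"}),
    "rendering": Orchestrator([dc, home], {"RTX 4090"}),
})
print(agg.submit("rendering", "scene-1"))    # scene-1 -> home-7/g0
print(agg.submit("training", "finetune-1"))  # finetune-1 -> dc-1/g0
```

The user of the aggregator only names a task type; which orchestrator, provider, and physical GPU serve it stays hidden, which is the "abstraction" each layer adds over the one below.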

Based on these two analytical frameworks, we compare the five selected projects side by side and evaluate them in four respects: core business, market positioning, hardware facilities, and financial performance.

2.1 Core Business

From the underlying logic, decentralized computing power networks are highly homogeneous, using tokens to incentivize idle computing power holders to provide computing power services. Based on this underlying logic, we can understand the differences in the core business of projects from three aspects:

Source of idle computing power

There are two main sources of idle computing power in the market: 1) idle computing power in the hands of enterprises such as data centers and miners; 2) idle computing power in the hands of individual users. The computing power in data centers is usually professional-grade hardware, while individual users typically purchase consumer-grade chips.

Aethir, Akash Network, and Gensyn primarily source computing power from enterprises. The benefits include: 1) enterprises and data centers usually have higher-quality hardware and professional maintenance teams, so their computing resources perform better and are more reliable; 2) enterprise and data center resources tend to be more homogeneous, and centralized management and monitoring make scheduling and maintenance more efficient. However, this approach places higher demands on the project, which needs business relationships with, and access to, enterprises that own computing power. Scalability and decentralization also suffer to some extent.

Render Network and io.net mainly incentivize individual users to contribute their idle computing power. The benefits include: 1) the explicit cost of individual users' idle computing power is lower, making the resources more economical; 2) the network is more scalable and decentralized, which makes the system more resilient and robust. The downside is that individual resources are widely dispersed and non-uniform, which complicates management and scheduling and raises operational difficulty. It is also harder to kickstart network effects with computing power from individual users. Finally, individual devices carry greater security risks, such as data leaks and abuse of computing power.

Computing power consumers

On the demand side, Aethir, io.net, and Gensyn primarily target enterprises. B2B workloads such as AI and real-time game rendering require high-performance computing, usually high-end GPUs or professional-grade hardware. B2B clients also demand stable and reliable computing resources, so projects must offer high-quality service level agreements and timely technical support to keep client projects running smoothly. At the same time, migration costs for B2B clients are high: if a decentralized network lacks mature SDKs that let projects deploy quickly (Akash Network, for example, requires users to develop against remote ports), it will be hard to persuade clients to migrate. Without a significant price advantage, clients have little willingness to switch.

Render Network and Akash Network mainly serve individual users. To serve these consumer (C-end) users, projects need user-friendly interfaces and tools that deliver a good experience. Consumers are also very price-sensitive, so projects need to offer competitive pricing.

Hardware types

Common computing hardware resources include CPUs, FPGAs, GPUs, ASICs, and SoCs. These hardware types have significant differences in design goals, performance characteristics, and application areas. In summary, CPUs are better at general computing tasks, FPGAs excel in high parallel processing and programmability, GPUs perform well in parallel computing, ASICs are most efficient in specific tasks, and SoCs integrate multiple functions and are suitable for highly integrated applications. The decentralized computing power projects we are discussing mostly involve the collection of GPU computing power, which is determined by the project's business type and the characteristics of GPUs. GPUs have unique advantages in AI training, parallel computing, multimedia rendering, and other areas.

Although these projects mostly aggregate GPUs, different applications place different demands on hardware, so the relevant optimization targets and parameters differ, including parallelism versus serial dependencies, memory, and latency. For example, rendering workloads are actually better suited to consumer-grade GPUs than to more powerful data center GPUs, because rendering depends heavily on ray-tracing performance, and consumer chips such as the RTX 4090 are optimized for ray tracing. AI training and inference, by contrast, call for professional-grade GPUs. Render Network can therefore source consumer GPUs such as the RTX 3090 and 4090 from individual users, while io.net needs more professional-grade GPUs such as the H100 and A100 to meet the needs of AI startups.
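One way to picture this heterogeneity is a filter that matches each workload to GPUs with the right traits. This is a sketch under assumptions: the catalog covers only the four GPU models named above, in their common 24 GB consumer and 80 GB data center configurations, and the requirement rules and thresholds are hypothetical, chosen to mirror the rendering versus AI training split described in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    model: str
    tier: str        # "consumer" or "datacenter"
    vram_gb: int
    rt_cores: bool   # dedicated ray-tracing hardware


CATALOG = [
    GpuSpec("RTX 3090", "consumer", 24, True),
    GpuSpec("RTX 4090", "consumer", 24, True),
    GpuSpec("A100 80GB", "datacenter", 80, False),
    GpuSpec("H100 80GB", "datacenter", 80, False),
]

# Hypothetical requirement rules per workload; the thresholds are illustrative only.
REQUIREMENTS = {
    "rendering": lambda g: g.rt_cores,
    "ai_training": lambda g: g.tier == "datacenter" and g.vram_gb >= 80,
}


def eligible(workload: str) -> list:
    """Return the catalog models that satisfy a workload's requirements."""
    rule = REQUIREMENTS[workload]
    return [g.model for g in CATALOG if rule(g)]


print(eligible("rendering"))    # ['RTX 3090', 'RTX 4090']
print(eligible("ai_training"))  # ['A100 80GB', 'H100 80GB']
```

The two workloads select disjoint sets of hardware, which is why a network built around one type of supply is naturally positioned toward one type of customer.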

2.2 Market Positioning

In terms of project positioning, the core issues to be addressed, optimization focus, and value capture capabilities differ for the bare metal layer, orchestration layer, and aggregation layer.

The bare metal layer focuses on gathering and utilizing physical resources. The orchestration layer focuses on scheduling and optimizing computing power and tailors the physical hardware to the needs of its customer groups. The aggregation layer is general-purpose, focusing on integrating and abstracting different resources. Along the value chain, each project should start from the bare metal layer and strive to climb upward.

From the perspective of value capture, the ability to capture value increases layer by layer from the bare metal layer, through the orchestration layer, to the aggregation layer. The Aggregation layer can capture the most value because the aggregation platform can obtain the largest network effects and directly reach the most users, serving as the entry point of decentralized network traffic, thus occupying the highest value capture position in the entire computing power resource management stack.

Correspondingly, building an aggregation platform is also the hardest, since the project must simultaneously handle technical complexity, heterogeneous resource management, system reliability and scalability, network effects, security and privacy protection, and complex operations. These challenges make a cold start difficult, and success depends on how far the track has developed and on timing. Building an aggregation layer is not very realistic before the orchestration layer has matured and captured meaningful market share.

Currently, Aethir, Render Network, Akash Network, and Gensyn all belong to the orchestration layer, serving specific use cases and customer groups. Aethir's main business is real-time rendering for cloud gaming, along with development and deployment environments and tools for B2B clients; Render Network's main business is video rendering; Akash Network aims to provide a marketplace similar to Taobao (Alibaba's online shopping platform); and Gensyn focuses on AI training. io.net is positioned as an aggregation layer, but its current functionality still falls short of a complete one: although it has already brought in hardware from Render Network and Filecoin, the abstraction and integration of these hardware resources is not yet complete.

2.3 Hardware Facilities

Not all projects have disclosed detailed network data. Of those that have, io.net's explorer has the best UI, showing parameters such as the number and types of GPUs/CPUs, prices, geographic distribution, network usage, and node income. However, at the end of April, io.net's frontend was attacked: because io.net did not authenticate its PUT/POST interfaces, hackers were able to tamper with the frontend data. The incident also sounded an alarm for other projects regarding privacy and the reliability of their network data.
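Accepting unauthenticated writes is exactly what a server-side check on state-changing methods is meant to prevent. The sketch below is a minimal illustration under assumptions, not io.net's actual fix: the key store, endpoint paths, and function names are hypothetical, and it uses HMAC-SHA256 request signing from Python's standard library. A production API would also need replay protection (timestamps or nonces), key rotation, and per-route authorization.

```python
import hashlib
import hmac

# Hypothetical key store: key id -> shared secret issued to a trusted writer.
API_KEYS = {"ops-team": b"example-secret-do-not-use"}
WRITE_METHODS = {"PUT", "POST", "PATCH", "DELETE"}


def sign(secret: bytes, method: str, path: str, body: bytes) -> str:
    """HMAC-SHA256 over the method, path, and body of a request."""
    message = method.upper().encode() + b"\n" + path.encode() + b"\n" + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def authorize(method: str, path: str, body: bytes, key_id=None, signature=None) -> bool:
    """Allow public reads; require a valid signature for every state-changing request."""
    if method.upper() not in WRITE_METHODS:
        return True  # e.g. GET requests for a public explorer dashboard
    secret = API_KEYS.get(key_id)
    if secret is None or signature is None:
        return False
    expected = sign(secret, method, path, body)
    return hmac.compare_digest(expected, signature)  # constant-time comparison


body = b'{"gpu_count": 9999}'
print(authorize("GET", "/api/devices", b""))      # True
print(authorize("PUT", "/api/devices/42", body))  # False: unsigned write
sig = sign(API_KEYS["ops-team"], "PUT", "/api/devices/42", body)
print(authorize("PUT", "/api/devices/42", body, "ops-team", sig))  # True
```

Because the signature covers the body, an attacker who intercepts a valid signature still cannot change the payload (for example, inflating a GPU count) without the check failing.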

In terms of GPU count and models, io.net, as an aggregation layer, should have gathered the most hardware, with Aethir close behind; the hardware of the other projects is less transparent. io.net's GPU lineup is diverse, including professional-grade GPUs such as the A100 and consumer-grade GPUs such as the 4090, which fits its aggregation positioning: it can choose the most suitable GPU for each task's requirements. However, different GPU models and brands may require different drivers and configurations, and the software needs complex optimization, which makes management and maintenance more complex. Currently, io.net allocates tasks mainly by letting users choose the hardware themselves.

Aethir has released its own mining machine: in May, Aethir Edge, developed with support from Qualcomm, was officially launched. It moves away from centralized GPU cluster deployment and pushes computing power to the edge. Aethir Edge will work alongside H100 cluster computing power to serve AI scenarios, deploying trained models and providing inference services to users at optimal cost. Bringing the service closer to users makes it faster and offers better value for money.

In terms of supply and demand, take Akash Network as an example: its statistics show a total of roughly 16k CPUs and 378 GPUs, with leasing utilization of 11.1% for CPUs and 19.3% for GPUs. Only the professional-grade H100 has relatively high utilization; most other models sit largely idle. Other networks face a similar situation: overall demand is low, and apart from popular chips such as the A100 and H100, most computing power is idle.

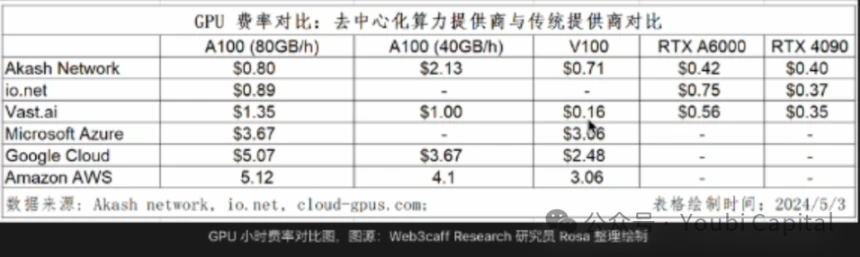
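To put the Akash figures in absolute terms, the utilization rates can be converted into counts of leased and idle units. This is back-of-the-envelope arithmetic that treats the rounded "approximately 16k" CPU total as exactly 16,000 and assumes utilization is measured per unit, so the results are approximate.

```python
def leased_and_idle(total: int, utilization: float) -> tuple:
    """Split a resource count into (leased, idle) units, rounded to whole units."""
    leased = total * utilization
    return round(leased), round(total - leased)


cpu_leased, cpu_idle = leased_and_idle(16_000, 0.111)  # "approximately 16k" CPUs
gpu_leased, gpu_idle = leased_and_idle(378, 0.193)
print(f"CPUs: ~{cpu_leased} leased, ~{cpu_idle} idle")  # CPUs: ~1776 leased, ~14224 idle
print(f"GPUs: ~{gpu_leased} leased, ~{gpu_idle} idle")  # GPUs: ~73 leased, ~305 idle
```

In other words, only about 73 of the network's 378 GPUs were leased at the time of these statistics.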

In terms of pricing, the cost advantage over the cloud computing giants and other traditional service providers is not particularly pronounced.

2.4 Financial Performance

However the token model is designed, healthy tokenomics must meet several basic conditions: 1) user demand for the network must be reflected in the token price, i.e., the token must capture value; 2) all participants, whether developers, nodes, or users, must receive fair long-term incentives; 3) governance must be decentralized, avoiding excessive token concentration among insiders; 4) inflation and deflation mechanisms and token release schedules must be reasonable, so that large price swings do not undermine the network's robustness and sustainability.

If token models are broadly categorized as BME (burn and mint equilibrium) and SFA (stake for access), the deflationary pressure of these two models comes from different sources: the BME model burns tokens after users purchase services, so the deflationary pressure is determined by demand. On the other hand, SFA requires service providers/nodes to stake tokens to qualify for providing services, so the deflationary pressure comes from supply. The advantage of BME is that it is more suitable for non-standardized goods. However, if the network demand is insufficient, it may face continuous inflationary pressure. The token models of each project have differences in detail, but overall, Aethir leans more towards SFA, while io.net, Render Network, and Akash Network lean more towards BME, and Gensyn's approach is still unknown.
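The different sources of deflationary pressure can be made concrete with a toy per-epoch supply model. This is an illustrative sketch, not any project's actual tokenomics: the function names, the fixed per-epoch emission, the constant token price, and all numbers are hypothetical assumptions. Net change is emission minus the tokens taken out of circulation, whether burned (BME) or locked as stake (SFA, where tokens are locked rather than destroyed).

```python
from dataclasses import dataclass


@dataclass
class Supply:
    circulating: float   # tokens free to trade
    staked: float = 0.0  # tokens locked by service providers


def bme_epoch(s: Supply, emission: float, usage_usd: float, price_usd: float) -> float:
    """Burn-and-mint: new tokens are minted to nodes; users burn tokens worth the
    USD value of the services they buy, so the burn scales with demand."""
    s.circulating += emission
    burned = min(usage_usd / price_usd, s.circulating)
    s.circulating -= burned
    return emission - burned  # net change in circulating supply


def sfa_epoch(s: Supply, emission: float, new_nodes: int, stake_per_node: float) -> float:
    """Stake-for-access: each new provider must lock a stake to serve jobs,
    so the lock-up scales with supply-side growth."""
    s.circulating += emission
    locked = min(new_nodes * stake_per_node, s.circulating)
    s.circulating -= locked
    s.staked += locked
    return emission - locked  # net change in circulating supply


# Hypothetical numbers: 10,000 tokens emitted per epoch, token price $1.
print(bme_epoch(Supply(1_000_000), 10_000, usage_usd=5_000, price_usd=1.0))   # 5000.0 (inflationary)
print(bme_epoch(Supply(1_000_000), 10_000, usage_usd=20_000, price_usd=1.0))  # -10000.0 (deflationary)
print(sfa_epoch(Supply(1_000_000), 10_000, new_nodes=10, stake_per_node=1_500))  # -5000
```

The first two lines show the BME risk described above: with the same emission, weak demand leaves the network inflating, and only strong usage turns it deflationary. In the SFA case the outcome depends on how many providers join, not on how much the network is used.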

In terms of revenue, network demand is directly reflected in overall network revenue (excluding miner revenue, since miners receive rewards for completing tasks as well as subsidies from the project). Among the publicly available figures, io.net's are the highest. Aethir has not disclosed its revenue, but it has announced signed orders with many B2B clients.

In terms of token price, only Render Network and Akash Network have held ICOs so far. Aethir and io.net have also issued tokens recently, but their price performance needs further observation and is not discussed here. Gensyn's plans are unclear. Judging from the two projects with established tokens, as well as other projects in the same track not covered in this article, decentralized computing power networks have shown very impressive price performance overall, which to some extent reflects the huge market potential and the community's high expectations.

2.5 Conclusion

The decentralized computing power network track is developing rapidly overall, and many projects are now able to provide product services to customers and generate some income. The track has moved beyond pure narrative and entered a stage of initial service provision.

Weak demand is a common problem for decentralized computing power networks, as long-term customer demand has not yet been well validated or explored. So far, however, weak demand has not weighed much on token prices: several projects that have issued tokens have performed impressively.

AI is the main narrative of decentralized computing power networks, but it is not the only business. In addition to being used for AI training and inference, computing power can also be used for real-time rendering in cloud gaming, cloud phone services, and more.

The hardware heterogeneity of computing power networks is relatively high, and the quality and scale of computing power networks need to be further improved.

For C-end users, the cost advantage is not very obvious. For B-end users, in addition to cost savings, they also need to consider the stability, reliability, technical support, compliance, and legal support of the services. However, Web3 projects generally do not perform well in these aspects.

3 Closing thoughts

The explosive growth of AI has undoubtedly created massive demand for computing power. Since 2012, the computing power used in AI training has grown exponentially, currently doubling every 3.5 months (compared with every 18 months under Moore's Law). Over that period, demand has increased more than 300,000-fold, far exceeding the roughly 12-fold growth Moore's Law would imply. The GPU market is forecast to grow to over $200 billion at a compound annual growth rate of 32% over the next five years. AMD's estimate is even higher: the company expects the GPU chip market to reach $400 billion by 2027.

Image source: https://www.stateof.ai/
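The growth figures above are mutually consistent, which a quick calculation confirms. Under a 3.5-month doubling period, a 300,000-fold increase takes about 63.7 months (about 5.3 years), and over that span an 18-month doubling period yields roughly 12x. The same arithmetic gives the market size implied by the 32% CAGR forecast; that starting figure is derived here, not stated in the source, and assumes exactly five years of compounding.

```python
import math


def months_for_growth(factor: float, doubling_months: float) -> float:
    """Months needed to grow by `factor` at a fixed doubling period."""
    return math.log2(factor) * doubling_months


def growth_over(months: float, doubling_months: float) -> float:
    """Growth factor accumulated over `months` at a fixed doubling period."""
    return 2 ** (months / doubling_months)


span = months_for_growth(300_000, 3.5)  # time for a 300,000x increase
moore = growth_over(span, 18)           # Moore's Law growth over the same span
implied_base = 200 / 1.32 ** 5          # size that grows to $200B at 32% CAGR in 5 years

print(f"{span:.1f} months, Moore's Law ~{moore:.1f}x")        # 63.7 months, Moore's Law ~11.6x
print(f"implied starting GPU market: ~${implied_base:.1f}B")  # implied starting GPU market: ~$49.9B
```

So the forecast amounts to roughly a fourfold expansion (1.32^5 is about 4.0) of a GPU market starting near $50 billion.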

The explosive growth of AI and other compute-intensive workloads (such as AR/VR rendering) has exposed structural inefficiencies in traditional cloud computing and in the leading computing markets. In theory, decentralized computing power networks can offer more flexible, cost-effective, and efficient solutions by harnessing distributed idle computing resources to meet the market's huge demand. The combination of crypto and AI therefore has enormous market potential, but it also faces fierce competition from traditional enterprises, high barriers to entry, and a complex market environment. Overall, across all crypto tracks, decentralized computing power networks are one of the verticals most likely to meet genuine, real-world demand.

Image source: https://vitalik.eth.limo/general/2024/01/30/cryptoai.html

The future is bright, but the road is winding. Realizing this vision requires solving numerous problems and challenges. In summary: at the current stage, simply providing traditional cloud services leaves projects with very thin profit margins. On the demand side, large enterprises generally build their own computing power, while pure C-end developers mostly choose cloud services. Whether small and medium-sized enterprises, the customers who would actually use decentralized computing power networks, will generate stable demand still needs further exploration and validation. On the other hand, AI is a vast market with great potential and room for imagination. To reach a broader market, decentralized computing power providers will also need to move toward model/AI services, explore more crypto + AI use cases, and expand the value their projects can create. At present, however, many problems and challenges stand in the way of further expansion into AI:

Lack of a pronounced cost advantage: The data compared earlier shows that decentralized computing power networks have not yet realized a cost advantage. One possible reason is that market forces keep the prices of high-demand professional chips such as the H100 and A100 high. In addition, the lack of economies of scale under decentralization, high network and bandwidth costs, and the considerable complexity of management and operations further push up computing costs.

Special nature of AI training: Decentralized AI training currently faces significant technical bottlenecks, which can be seen intuitively in the GPU workflow. In large language model training, each GPU first receives a batch of pre-processed data and performs forward and backward propagation to compute gradients. The GPUs then aggregate their gradients and update the model parameters so that all GPUs stay synchronized. This process repeats until all batches have been trained or a predetermined number of iterations is reached, and it involves a large amount of data transfer and synchronization. Questions such as which parallelism and synchronization strategies to use, how to optimize network bandwidth and latency, and how to reduce communication costs have not yet been well answered. At the current stage, using decentralized computing power networks for AI training is not very practical.
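The workflow above, and why it strains a decentralized network, can be sketched in plain Python. This is a toy data-parallel training loop under assumptions: a one-parameter linear model stands in for an LLM, `all_reduce_mean` is a hypothetical local stand-in for the network collective, and the bandwidth scenario (a 7B-parameter model, 2-byte gradients, 8 workers, 1 Gbps versus 400 Gbps links) is illustrative, not measured. The 2(N-1)/N factor is the standard per-worker send volume of ring all-reduce.

```python
import random


def local_gradient(w: float, batch) -> float:
    """Gradient of mean squared error for the model y = w * x on one batch."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def all_reduce_mean(grads: list) -> list:
    """Stand-in for the network all-reduce: every worker receives the average gradient."""
    avg = sum(grads) / len(grads)
    return [avg] * len(grads)


def train(data, num_workers=4, lr=0.5, steps=200, batch_size=8, seed=0) -> list:
    rng = random.Random(seed)
    shards = [data[i::num_workers] for i in range(num_workers)]  # split data across workers
    weights = [0.0] * num_workers  # each worker holds its own replica of the model
    for _ in range(steps):
        # 1) forward/backward pass on each worker's own mini-batch
        grads = [local_gradient(w, rng.sample(shard, batch_size))
                 for w, shard in zip(weights, shards)]
        # 2) synchronize gradients: the step that crosses the network every iteration
        synced = all_reduce_mean(grads)
        # 3) identical update on every replica keeps the models in sync
        weights = [w - lr * g for w, g in zip(weights, synced)]
    return weights


def ring_allreduce_bytes(num_params: int, bytes_per_param: int, num_workers: int) -> float:
    """Bytes each worker sends per step with ring all-reduce: 2 * (N - 1) / N of the gradients."""
    return 2 * (num_workers - 1) / num_workers * num_params * bytes_per_param


data = [(i / 100, 3 * i / 100) for i in range(100)]  # noise-free y = 3x
print(train(data))  # all four replicas end up at approximately 3.0

# Hypothetical 7B-parameter model, fp16 gradients, 8 workers.
per_step = ring_allreduce_bytes(7_000_000_000, 2, 8)
for label, bytes_per_s in [("1 Gbps link", 125e6), ("400 Gbps link", 50e9)]:
    print(f"{label}: {per_step / bytes_per_s:.1f} s of transfer per step")
```

With these assumed numbers, each step sends 24.5 GB per worker: about 196 s of transfer over a 1 Gbps link versus about 0.5 s over a 400 Gbps cluster interconnect, before latency is even counted. Gradient compression or less frequent synchronization can reduce this cost at some expense to convergence, which is part of the open question raised above.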

Data security and privacy: In large language model training, every stage that involves processing and transmitting data, such as data distribution, model training, and parameter and gradient aggregation, can potentially compromise data security and privacy. Data privacy is, if anything, even more critical than model privacy. If data privacy cannot be ensured, the demand side cannot truly scale.

From a practical perspective, a decentralized computing power network needs to balance exploring current demand with positioning for future market opportunities. That means defining its product positioning and target customers, for example initially targeting non-AI or Web3-native projects, starting from relatively niche needs, and building an early user base. At the same time, it should keep exploring the many ways AI can be combined with crypto, pursue cutting-edge technologies, and transform and upgrade its services.

References

https://vitalik.eth.limo/general/2024/01/30/cryptoai.html

https://foresightnews.pro/article/detail/34368

https://research.web3caff.com/zh/archives/17351?ref=1554

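Disclaimer: This article represents only the author's personal views and does not reflect the position or views of this platform. It is provided for information sharing only and does not constitute investment advice to anyone. Any dispute between users and the author has nothing to do with this platform. If any article or image published on this page infringes your rights, please send proof of rights and proof of identity to support@aicoin.com, and the platform's staff will investigate.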