Source: Geek Park

Image Source: Generated by Wujie AI

The power struggle at OpenAI has just ended, and a crucial deal has quietly come to light.

According to Wired, while Sam Altman was CEO, OpenAI signed a $51 million letter of intent with Rain AI, committing to buy its chips once they become available.

Rain AI is an AI chip startup that aims to drastically cut the cost of AI computing power. It is developing an NPU (neuromorphic processing unit), a chip that mimics the way the human brain works, to provide "low-cost, high-efficiency hardware" for AI companies such as OpenAI and Anthropic.

The company claims that "compared to traditional GPUs, the NPU could potentially give AI developers (such as OpenAI) 100 times the computing power and 10,000 times the efficiency in training."

Given that OpenAI has long been plagued by a shortage of computing power, it is easy to see why it would be willing to spend heavily to secure a stable supply of chips for its AI projects.

What are the characteristics of the chips developed by Rain AI? How has the company made its mark? What does this investment reveal about Altman and OpenAI's strategy in the chip field?

01 "Neuromorphic" AI Chips

Rain AI's core product is the NPU, a "neuromorphic" AI chip. It aims to process information with low power consumption and high efficiency to meet the heavy computational demands of AI workloads.

Mimicking the structure and function of the human brain, the chip is built on a network of interconnected artificial synapses, analogous to the neural connections in the brain. This architecture lets the NPU process information in a parallel, distributed manner, which makes it well suited to the "computationally intensive tasks" of AI applications.
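Rain has not published the internals of its NPU, so the following is only a generic illustration of the neuromorphic idea described above. It is a minimal sketch, assuming a textbook leaky integrate-and-fire (LIF) neuron model: each neuron accumulates current from the synapses of inputs that spiked, leaks part of its charge every time step, and fires (then resets) when it crosses a threshold. The class name `LIFLayer` and the `leak` and `threshold` parameters are hypothetical choices for illustration, not Rain's design.

```python
from dataclasses import dataclass, field


@dataclass
class LIFLayer:
    """Toy layer of leaky integrate-and-fire neurons (illustrative, not Rain's NPU)."""

    weights: list  # weights[i][j]: synapse strength from input i to neuron j
    leak: float = 0.9  # hypothetical decay factor applied each time step
    threshold: float = 1.0  # hypothetical firing threshold
    potentials: list = field(default_factory=list)

    def __post_init__(self) -> None:
        # One membrane potential per output neuron, starting at rest.
        self.potentials = [0.0] * len(self.weights[0])

    def step(self, spikes_in: list) -> list:
        """Advance one time step and return 1 for each neuron that fires, else 0."""
        spikes_out = []
        # Neuromorphic hardware updates neurons in parallel; this loop is sequential.
        for j in range(len(self.potentials)):
            # Only inputs that spiked contribute current (event-driven computation).
            current = sum(self.weights[i][j] for i, s in enumerate(spikes_in) if s)
            v = self.potentials[j] * self.leak + current
            if v >= self.threshold:
                spikes_out.append(1)
                v = 0.0  # reset the potential after firing
            else:
                spikes_out.append(0)
            self.potentials[j] = v
        return spikes_out


if __name__ == "__main__":
    layer = LIFLayer(weights=[[0.6, 0.2], [0.6, 0.2]])
    for spikes in ([1, 1], [0, 0], [1, 1], [1, 1]):
        print(layer.step(spikes))
```

With these weights, neuron 0 fires whenever both inputs spike, while neuron 1 integrates weaker input across several steps (minus leakage) and fires only on the fourth step. Information lives in the timing of spikes rather than in dense matrix math, which is why neuromorphic designs are pitched as low-power.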

Rain AI has also pioneered digital in-memory computing (D-IMC), which performs computation where the data is stored, further improving the efficiency of AI processing, data movement, and data storage.
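Much of the efficiency argument for in-memory computing comes down to data movement. The back-of-the-envelope sketch below assumes a deliberately simplified cost model: in a conventional design, every weight of a matrix-vector layer is fetched from memory for each input vector, whereas in an idealized in-memory design the weights are loaded into the memory array once and only inputs and outputs move. The function names and the "words moved" metric are hypothetical; real accelerators reuse weights through caches and batching, so this overstates the gap, and the numbers are not Rain's figures.

```python
def words_moved_conventional(rows: int, cols: int, vectors: int) -> int:
    """Words transferred when all weights are fetched for every input vector."""
    weights, inputs, outputs = rows * cols, cols, rows
    return vectors * (weights + inputs + outputs)


def words_moved_in_memory(rows: int, cols: int, vectors: int) -> int:
    """Words transferred when weights stay resident in the memory array (idealized)."""
    inputs, outputs = cols, rows
    # Weights are written into the array once and never move again.
    return rows * cols + vectors * (inputs + outputs)


if __name__ == "__main__":
    rows = cols = 1024
    vectors = 1000
    conv = words_moved_conventional(rows, cols, vectors)
    imc = words_moved_in_memory(rows, cols, vectors)
    print(conv, imc, round(conv / imc, 1))
```

For a 1024 x 1024 layer applied to 1,000 input vectors, this toy model gives 1,050,624,000 words moved conventionally versus 3,096,576 with resident weights, roughly a 339x reduction in traffic under these idealized assumptions.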

Rain AI's NPU aims to process information with low power consumption and high efficiency to meet the demanding computational requirements of AI tasks | Image Source: Rain AI Official Website

Rain also licenses intellectual property (IP) for its digital in-memory computing tiles and software stack, tailored to AI workloads on devices that require ultra-low latency and high efficiency. These cover a range of Long Reach Ethernet (LRE) use cases, including smart cars and smartwatches.

Rain promotes its product with the slogan "redefining the limits of AI computing" and claims that "our AI accelerator has achieved a record balance between speed, power consumption, area, accuracy, and cost."

Because Rain's NPU promises efficient, low-power operation, it is pitched as a way past the "bottleneck" posed by the bulky chips made by companies such as Nvidia and AMD.

Covering a range of use cases for LRE | Image Source: Rain AI Official Website

One of Rain AI's founders, Gordon Wilson, even stated on LinkedIn, "NPU chips will define a new AI chip market and significantly disrupt the existing market."

It is worth noting, however, that although Rain AI claims better efficiency than Nvidia's GPUs, its initial chips are based on RISC-V, the open-source architecture backed by Google, Qualcomm, and other tech companies, and target edge devices such as phones, drones, cars, and robots rather than data centers.

Most current edge chip designs, such as those used in smartphones, focus on the inference stage of neural networks. Rain's goal is a chip that can handle both model training and subsequent inference.

Rain AI has launched its first platform for AI inference and training, and claims its NPU will let AI models be customized or fine-tuned in real time based on their surroundings.

Sam Altman has publicly stated, "This neuromorphic approach can significantly reduce the cost of AI development and is expected to help achieve true AGI."

OpenAI reportedly hopes to use these chips to cut data center costs and to deploy its models on devices such as phones and smartwatches, which would make the NPU highly attractive to the company.

These remain speculation, however. How OpenAI will actually use Rain's chips is still unknown.

02 Close Ties with OpenAI

Rain AI was founded in 2017 with the aim of building a "low-cost" computing platform for future AI.

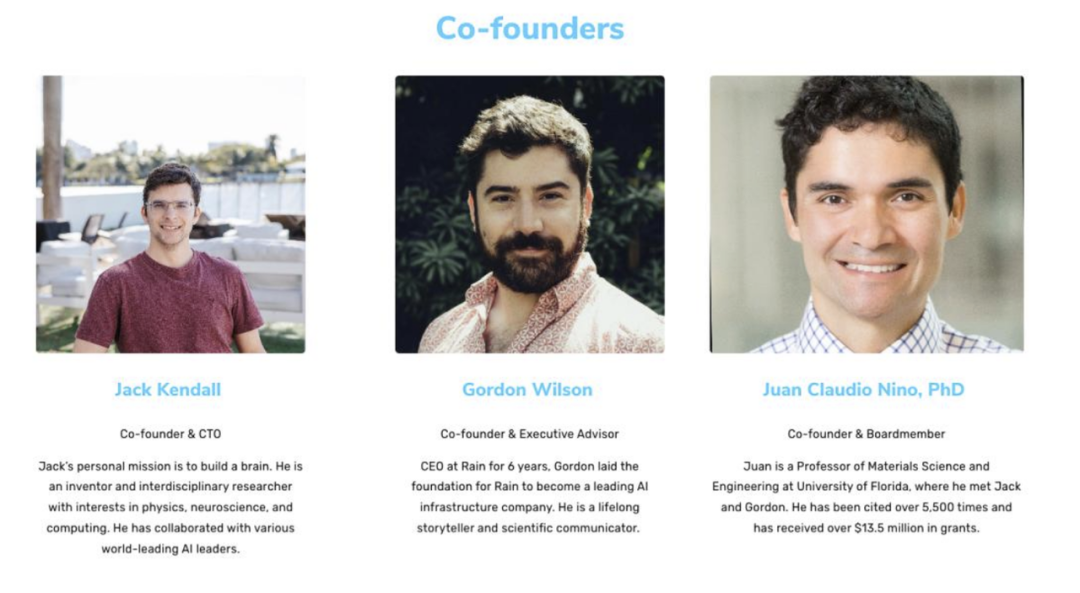

Rain AI has three co-founders, Jack Kendall, Gordon Wilson, and Juan Claudio Nino, who met at the University of Florida. The company also brought on OpenAI software engineer Scott Gray as an advisor.

Currently, Rain AI has about 40 employees, including experts in AI algorithm development and traditional chip design.

Founding team of Rain AI | Rain AI

Interestingly, Rain AI's headquarters is also located in San Francisco, less than a mile from OpenAI.

The year after the company was founded, Rain AI raised $5 million in seed funding, with investors including the well-known startup incubator Y Combinator.

At the time, Altman was president of Y Combinator, and he also personally invested $1 million in Rain AI. A year later, OpenAI signed the $51 million letter of intent to buy Rain's chips.

As of April 2022, following a $25 million Series A funding round led by the Saudi Arabian-affiliated fund Prosperity7 Ventures, Rain's total funding reached $33 million, with a valuation of $90 million.

This year, the company touted its progress to potential investors, saying it expected to tape out "test" chips this month, meaning the chip design would be complete and ready for manufacturing.

Rain AI also said it could deliver its first chips to customers as early as October next year. It even told investors that it was in advanced negotiations to sell systems to tech giants such as Google, Oracle, Meta, Microsoft, and Amazon. Microsoft declined to comment, and the other companies did not respond to requests for comment.

In short, Rain AI is still in development, and it is unclear when its chips will be ready for commercial use. Despite the promise of its neuromorphic NPU technology and the attention it has drawn from backers, the company still faces many challenges.

03 Ambitions of OpenAI

Regardless of whether Altman's investment in Rain AI was self-serving, the shortage of chips is indeed a major problem that OpenAI is facing.

In fact, just a year ago, shortly after ChatGPT's release, Altman described its computing costs as "unbearable," and he has since complained publicly several times about the "severe shortage" and "astonishing" cost of AI chips.

In May of this year, Altman reluctantly admitted, "OpenAI is experiencing a severe shortage of computing power, and many short-term plans have been delayed."

OpenAI relies on the cloud services of its main investor, Microsoft, yet hardware limitations have often forced it to disable certain ChatGPT features.

Altman has said, "The pace of AI progress may depend on new chip designs and supply chains." After all, computing power is currently everything.

In fact, Altman began placing bets on chips early. Besides Rain AI, around 2021 he also invested in Cerebras, the AI company whose dinner-plate-sized chips take two hands to hold.

Earlier this year, Altman also showed interest in Atomic Semi, founded by "Silicon Sage" Jim Keller and "Silicon Prodigy" Sam Zeloof. The startup aims to make reasonably priced chips quickly by simplifying and shrinking semiconductor fabs and integrated-circuit prototyping. The OpenAI Startup Fund also took part in the investment.

Furthermore, just a few weeks before Altman was fired from OpenAI, there were reports that he was trying to raise billions of dollars to start a new chip company.

Details of the project remain scarce, but the effort, codenamed "Tigris," reportedly aims to compete with Nvidia in AI chips.

Altman reportedly sought funding for "Tigris" in the Middle East. Since Rain's Series A was led by the Saudi-affiliated Prosperity7 Ventures, this "coincidence" of location inevitably raises the question of whether the project is related to Rain.

Altman has also held talks with semiconductor executives, including at chip designer Arm, about how to design new chips quickly to lower costs for large language model companies like OpenAI.

Beyond Altman's personal efforts, OpenAI itself is exploring ways to build large models at lower cost and reduce its dependence on Nvidia.

In addition to courting chip suppliers like Rain AI and outside investment, OpenAI has recently begun experimenting with designing its own chips, evaluating potential acquisition targets, and hiring for hardware roles.

OpenAI recently appointed Richard Ho, formerly head of Google's TPU effort, as head of hardware, hired several compiler and kernel experts, and began recruiting "data center facility design experts."

Richard Ho will lead a new department at OpenAI and help optimize data center networks, racks, and buildings for partners.

However, these forward-looking investment layouts are still unlikely to solve the current GPU shortage problem. Currently, OpenAI still largely uses chips from Nvidia.

OpenAI appears to be dynamically adjusting the capabilities of products like ChatGPT to save computing power, which may explain why some users have recently found GPT-4 more prone to "slacking off" than GPT-3.5.

As large models proliferate, attention is turning to the power consumption of the data centers that run them. Rain and other chip startups aim to rethink how data is processed in order to reduce data movement and power consumption.

With companies like Google, Microsoft, AMD, Intel, and Amazon, as well as startups like Cerebras, SambaNova, and Rain, entering the AI chip market, will the supply of AI computing power change? Can OpenAI break free of its computing constraints? Given the long development cycles of chips, these challenges are likely to persist for quite some time.

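Disclaimer: This article represents only the author's personal views and does not represent the position or views of this platform. It is provided for information sharing only and does not constitute investment advice to anyone. Any dispute between users and the author is unrelated to this platform. If any article or image published on this page infringes your rights, please send proof of rights and proof of identity to support@aicoin.com, and the platform's staff will review it.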