Source: New Wisdom

Image source: Generated by Wujie AI

Large models are powerful, but how do we stop them from "talking nonsense with a straight face"?

In high-stakes fields such as finance, law, and medicine, hallucination has long been a major bottleneck limiting the application of large models.

How to make up for this shortcoming, so that more industries can quickly join the new round of the productivity revolution, is an important topic in current AI research. One direction that has attracted much attention is combining large language models (LLMs) with knowledge graphs (KGs) to compensate for the former's weaknesses.

Recently, a research team from the IDEA Research Institute, Microsoft Research Asia, the Hong Kong University of Science and Technology, the Hong Kong University of Science and Technology (Guangzhou), Xiamen University, and the University of Southern California proposed "Think-on-Graph". Through tightly coupled interaction between the LLM and the KG, it drives an LLM agent to "think" on the knowledge graph, searching and reasoning step by step toward the best answer.

Paper link: https://arxiv.org/abs/2307.07697

Code link: https://github.com/IDEA-FinAI/ToG

Next, let's use a tricky question to follow Think-on-Graph's "train of thought": how can we objectively judge whether Duan Yu or Hong Qi Gong has the higher martial arts skill? (Duan Yu is from "Demi-Gods and Semi-Devils", Hong Qi Gong is from "The Legend of the Condor Heroes", and the two never appear in the same work.)

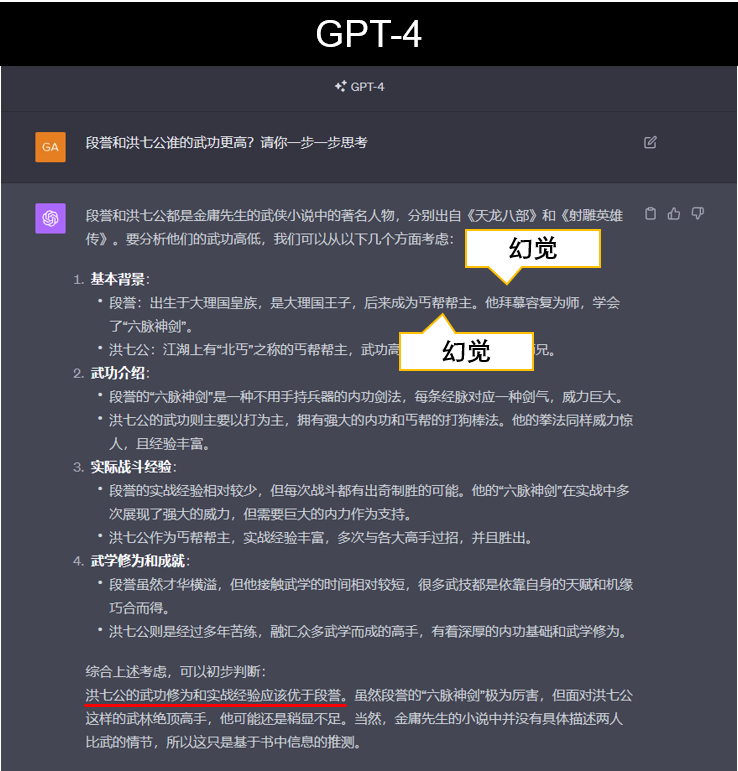

First, let's see what GPT-4, reputedly the most powerful model on the planet, has to say:

Because LLMs are trained on massive, fragmented corpora, analytical reasoning questions like this pose a real challenge for them. GPT-4's answer shows that, besides hallucinating, it mostly lists facts when comparing the two characters' martial arts and lacks in-depth logical analysis.

So, how does Think-on-Graph solve the problem?

First, the knowledge graph records that the "Six Meridians Divine Sword" is the strongest martial art of the Dali Duan family and the "One Yang Finger" is its commonly used martial art, so the large model judges that the Six Meridians Divine Sword is stronger than the One Yang Finger. Since Duan Yu has mastered the Six Meridians Divine Sword, it then infers that his martial arts surpass those of Master One, whose One Yang Finger is unrivaled in the martial arts world.

Then, based on the knowledge-graph fact that "Master One and Hong Qi Gong both belong to the 'Four Greats of Mount Hua'", it infers that the two are equally matched. Finally, since Duan Yu > Master One and Master One = Hong Qi Gong, it concludes that Duan Yu has the higher martial arts skill.
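The chain above can be mimicked with a few hand-written rules over triples. This is a minimal sketch, not Think-on-Graph's implementation: the triples paraphrase the example, the relation names (`strongest_art_of`, `masters`, `member_of`, ...) are hypothetical, and the judgments that Think-on-Graph delegates to the LLM are hard-coded as rules here. The point it illustrates is that every conclusion comes back together with the steps that produced it.

```python
from itertools import product

# Toy triples paraphrasing the example; relation names are hypothetical.
TRIPLES = [
    ("Six Meridians Divine Sword", "strongest_art_of", "Dali Duan family"),
    ("One Yang Finger", "common_art_of", "Dali Duan family"),
    ("Duan Yu", "masters", "Six Meridians Divine Sword"),
    ("Master One", "masters", "One Yang Finger"),
    ("Master One", "member_of", "Four Greats of Mount Hua"),
    ("Hong Qi Gong", "member_of", "Four Greats of Mount Hua"),
]


def pairs(triples, relation):
    """All (subject, object) pairs linked by `relation`."""
    return [(s, o) for s, r, o in triples if r == relation]


def stronger_arts(triples):
    """Rule (stand-in for an LLM judgment): a family's strongest art beats its common art."""
    return {
        (a, b)
        for (a, fam_a), (b, fam_b) in product(pairs(triples, "strongest_art_of"),
                                              pairs(triples, "common_art_of"))
        if fam_a == fam_b
    }


def infer(a, b, triples):
    """Return the reasoning chain for 'a ranks above b', or None if no chain is found."""
    arts = stronger_arts(triples)
    masters = pairs(triples, "masters")
    members = pairs(triples, "member_of")
    # A master of a stronger art ranks higher.
    greater = {(p, q) for (p, x), (q, y) in product(masters, masters) if (x, y) in arts}
    # Members of the same group are treated as equally matched.
    equal = {(p, q) for (p, g), (q, h) in product(members, members) if g == h and p != q}
    if (a, b) in greater:
        return [f"{a} > {b}"]
    for x, m in sorted(greater):
        if x == a and (m, b) in equal:
            return [f"{a} > {m}", f"{m} = {b}", f"therefore {a} > {b}"]
    return None


print(infer("Duan Yu", "Hong Qi Gong", TRIPLES))
# ['Duan Yu > Master One', 'Master One = Hong Qi Gong', 'therefore Duan Yu > Hong Qi Gong']
```

Returning the chain rather than a bare verdict is what makes the answer auditable: each line can be traced back to specific triples.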

As this shows, Think-on-Graph combines structured knowledge with the reasoning ability of large models: its answer is clearly structured and comes with a traceable chain of reasoning.

From "translator" to "errand runner": the LLM achieves deep reasoning tightly coupled with the KG

Large models are well known for their strengths in understanding, reasoning, generation, and learning; knowledge graphs, by storing knowledge in structured form, do better at logical-chain reasoning and offer greater transparency and credibility. The two complement each other well, and the key is finding a good way to combine them. According to the researchers, there are currently two main approaches.

The first approach embeds the knowledge graph into a high-dimensional vector space during pre-training or fine-tuning and fuses it with the large model's own embeddings.

However, such methods are not only time-consuming and computationally expensive, but also fail to exploit many of the knowledge graph's natural advantages (such as real-time knowledge updates, interpretability, and traceable reasoning).

The second approach uses the knowledge graph's structure to integrate the two through prompt engineering, and can be further divided into two paradigms: loose coupling and tight coupling.

In the loose-coupling paradigm, the LLM acts as a "translator": it understands the user's natural-language input, translates it into a knowledge-graph query, and then translates the results retrieved from the KG back for the user. This paradigm places very high demands on the quality and completeness of the knowledge graph itself and ignores the large model's own knowledge and reasoning ability.
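A toy illustration of why loose coupling is brittle, using the Canberra question discussed below. This is a sketch under stated assumptions: the KG is a Python dict keyed by (entity, relation), its contents are illustrative, and `translate` is a hypothetical stand-in for the LLM translator that returns a fixed relation path instead of a real query-language string. Once translated, the lookup is purely mechanical, so a single missing relation sinks the whole query.

```python
# Toy KG: (head, relation) -> tail. Contents are illustrative assumptions.
KG = {
    ("Canberra", "capital_of"): "Australia",
    ("Australia", "head_of_government"): "Anthony Albanese",
    ("Anthony Albanese", "member_of_party"): "Australian Labor Party",
}


def translate(question):
    """Hypothetical LLM 'translator': maps the question to a start entity and a relation path."""
    # A real system would generate a query in a KG query language; this is hard-coded.
    return "Canberra", ["capital_of", "majority_party"]


def execute(start, path, kg):
    """Follow the relation path hop by hop; return None as soon as a hop is missing."""
    node = start
    for relation in path:
        node = kg.get((node, relation))
        if node is None:
            return None
    return node


start, path = translate("Which party is the current majority party in the country where Canberra is located?")
print(execute(start, path, KG))  # None: the KG has no "majority_party" relation
```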

In the tight-coupling paradigm represented by Think-on-Graph, the LLM becomes an "errand runner": acting as an agent, it searches and reasons step by step over related entities in the KG. At every reasoning step, the LLM takes part directly and can fill in what the knowledge graph lacks.

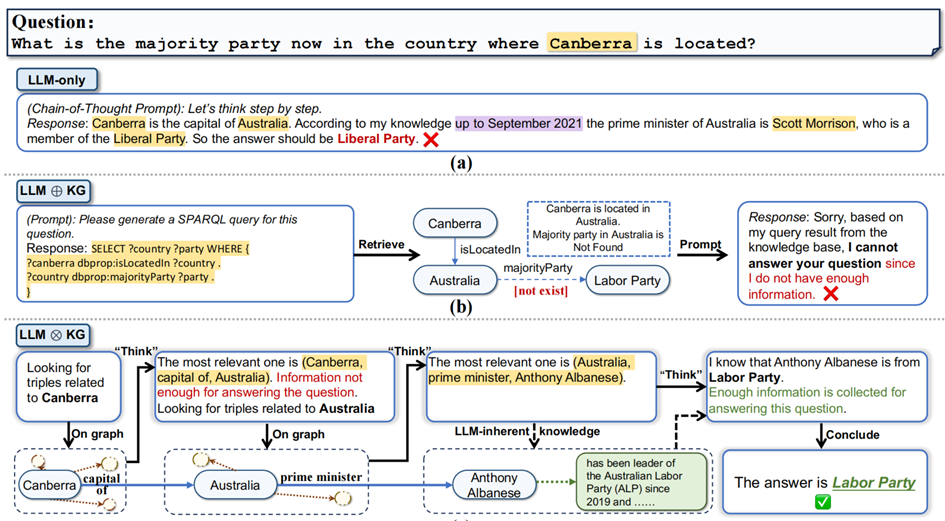

In the study, the team used the following example to show the advantages of the tight-coupling paradigm: "Which party is the current majority party in the country where Canberra is located?"

In the example above, ChatGPT gave a wrong answer because its information was outdated.

In the loose-coupling paradigm, the KG supplies up-to-date information, but because it lacks "majority party" information, the reasoning remains incomplete. In the tight-coupling paradigm, the LLM reasons on its own that "the head of government (prime minister) of a parliamentary country is usually also the leader of the majority party", which fills in the information missing from the KG and leads indirectly to the correct answer.
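The tight-coupling idea can be sketched on the same kind of toy KG: when a hop is missing, the agent consults the LLM's own knowledge, here a hard-coded rewrite rule standing in for "the prime minister of a parliamentary country usually leads the majority party", and keeps walking the graph. `llm_bridge`, `tight_coupled_walk`, the relation names, and the KG contents are all hypothetical; Think-on-Graph obtains this kind of knowledge by prompting the LLM, not from a lookup table.

```python
# Toy KG: (head, relation) -> tail. Contents are illustrative assumptions.
KG = {
    ("Canberra", "capital_of"): "Australia",
    ("Australia", "head_of_government"): "Anthony Albanese",
    ("Anthony Albanese", "member_of_party"): "Australian Labor Party",
}


def llm_bridge(relation):
    """Hypothetical stand-in for the LLM's own knowledge: rewrite a relation the KG
    lacks into a chain of relations it does have (None if no rule is known)."""
    rules = {
        # "The head of government of a parliamentary country usually leads the majority party."
        "majority_party": ["head_of_government", "member_of_party"],
    }
    return rules.get(relation)


def tight_coupled_walk(start, path, kg):
    """Walk the KG; when a hop is missing, expand it via llm_bridge and record every step."""
    node, trace = start, []
    for relation in path:
        hops = [relation] if (node, relation) in kg else llm_bridge(relation)
        if hops is None:
            return None, trace
        for hop in hops:
            nxt = kg.get((node, hop))
            if nxt is None:
                return None, trace
            source = "KG" if hop == relation else "KG, via LLM rule"
            trace.append(f"{node} --{hop}--> {nxt} ({source})")
            node = nxt
    return node, trace


answer, trace = tight_coupled_walk("Canberra", ["capital_of", "majority_party"], KG)
print(answer)  # Australian Labor Party
for step in trace:
    print(step)
```

The trace marks which hops came straight from the KG and which were reached through the LLM's rule, so the LLM's contribution stays visible rather than being folded silently into the answer.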

Think-on-Graph: an efficient new tight-coupling paradigm

According to the research team, Think-on-Graph borrows the idea of beam search, the decoding algorithm commonly used with Transformer models. The algorithm runs iteratively, and each iteration performs two tasks: search pruning and reasoning decision.

Search pruning finds the reasoning paths most likely to lead to the correct answer; reasoning decision uses the LLM to judge whether the current candidate paths are sufficient to answer the question. If they are not, the algorithm proceeds to the next iteration.
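The loop just described can be sketched as beam search over KG paths. This is a simplified sketch, not the released Think-on-Graph code: the paper's method prunes relations and entities in separate LLM-scored steps, whereas here one hypothetical `score` function ranks whole paths, and a hypothetical `is_sufficient` function stands in for the LLM's Yes/No reasoning decision. The toy graph and relevance weights are assumptions chosen for illustration.

```python
def think_on_graph(question, topic_entity, graph, score, is_sufficient, width=2, max_depth=3):
    """Beam search over KG paths; returns (paths, rounds) or (paths, None) if never sufficient."""
    beams = [()]  # each path is a tuple of (head, relation, tail) triples
    for round_no in range(1, max_depth + 1):
        # Expand every path in the beam by one hop.
        candidates = []
        for path in beams:
            tail = path[-1][2] if path else topic_entity
            for relation, neighbor in graph.get(tail, []):
                candidates.append(path + ((tail, relation, neighbor),))
        if not candidates:
            break
        # Search pruning: keep only the `width` best-scoring paths.
        candidates.sort(key=lambda p: score(question, p), reverse=True)
        beams = candidates[:width]
        # Reasoning decision: stop as soon as the (stubbed) LLM says "Yes".
        if is_sufficient(question, beams):
            return beams, round_no
    return beams, None


# Toy graph and hypothetical relevance weights standing in for LLM scores.
GRAPH = {
    "Canberra": [("capital_of", "Australia"), ("located_in", "Australian Capital Territory")],
    "Australia": [("head_of_government", "Anthony Albanese"), ("official_language", "English")],
    "Australian Capital Territory": [("part_of", "Australia")],
    "Anthony Albanese": [("member_of_party", "Australian Labor Party")],
}
RELEVANCE = {"capital_of": 0.9, "located_in": 0.4, "head_of_government": 0.8,
             "official_language": 0.1, "part_of": 0.3, "member_of_party": 0.9}


def score(question, path):
    """Hypothetical scorer: sum of per-relation relevance weights along the path."""
    return sum(RELEVANCE.get(rel, 0.0) for _, rel, _ in path)


def is_sufficient(question, paths):
    """Hypothetical Yes/No judge: 'Yes' once some path reaches a party membership."""
    return any(p[-1][1] == "member_of_party" for p in paths)


paths, rounds = think_on_graph("Which party is the current majority party in the country where Canberra is located?",
                               "Canberra", GRAPH, score, is_sufficient)
print(rounds, paths[0][-1][2])  # 3 Australian Labor Party
```

With these toy weights the search is rejected after rounds one and two and stops in round three; the beam `width` plays the role of the search width N.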

Let's return to the example "Which party is the current majority party in the country where Canberra is located?" to illustrate.

Case: Think-on-Graph reasoning with beam search of search width N=2

After sorting the scores from high to low, the LLM keeps the top 2, forming two candidate reasoning paths.

Next, the LLM evaluates the candidate reasoning paths and feeds its judgment back to the algorithm as Yes/No.
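The two interface points in this case, keeping the top N=2 scored paths and turning the LLM's reply into a Yes/No signal, might look like this in isolation. Both helpers (`prune`, `parse_decision`) are hypothetical and the scores are made up; treating any unclear reply as "No" is a design assumption that errs toward searching further rather than answering too early.

```python
import heapq


def prune(scored_paths, n=2):
    """Keep the n highest-scoring (score, path) pairs, best first."""
    return heapq.nlargest(n, scored_paths, key=lambda item: item[0])


def parse_decision(reply):
    """Map a free-text LLM reply to True (Yes, stop) or False (No, keep iterating)."""
    words = reply.strip().split()
    if not words:
        return False
    return words[0].strip(".,:;!").lower() == "yes"


print(prune([(0.3, "path A"), (0.9, "path B"), (0.6, "path C")]))
# [(0.9, 'path B'), (0.6, 'path C')]
print(parse_decision("No. The paths do not mention the majority party."))  # False
print(parse_decision("Yes, the paths are sufficient."))  # True
```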

In this case, the LLM rejected the candidate paths in two consecutive rounds. Only after the third iteration did it judge that there was enough information to answer the question; it then stopped iterating and returned the answer to the user, which was indeed correct.

How can large-model reasoning be made more reliable? Make it explainable, traceable, and correctable

The research team stated that the Think-on-Graph algorithm effectively improves the interpretability of large-model reasoning and makes knowledge traceable and correctable. In particular, with the help of human feedback and the LLM's reasoning ability, errors in the knowledge graph can be discovered and corrected, compensating for the long training times and slow knowledge updates of LLMs.

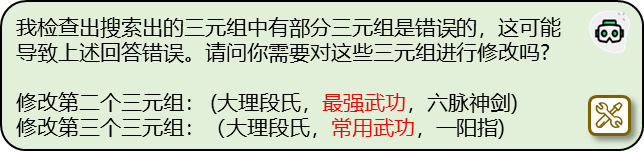

To test this ability, the team designed an experiment: in the earlier case comparing Duan Yu's and Hong Qi Gong's martial arts, they deliberately injected incorrect information into the knowledge graph, stating that "the strongest martial art of the Dali Duan family is the One Yang Finger, and its common martial art is the Six Meridians Divine Sword".

Although Think-on-Graph derived a wrong answer from the incorrect knowledge, the algorithm has a built-in "self-reflection" ability: when it judges that an answer lacks credibility, it automatically backtracks along the reasoning path on the knowledge graph and checks every triple in the path.

At this point, the LLM uses its own knowledge to pick out the triples that may be wrong and gives the user feedback, analysis, and suggested corrections.
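The backtracking check can be sketched as a walk over the path from the last triple back to the first, comparing each triple with what the checker believes. This is a minimal sketch under loud assumptions: the helper `reflect` and the relation names are hypothetical, and `LLM_BELIEFS` is a hard-coded dict standing in for the LLM's parametric knowledge, which Think-on-Graph would obtain by prompting. The path contains the two errors injected in the experiment.

```python
def reflect(path, llm_beliefs):
    """Backtrack over the reasoning path (last triple first) and flag triples that
    contradict the checker's own knowledge, with a suggested correction."""
    flagged = []
    for head, relation, tail in reversed(path):
        believed = llm_beliefs.get((head, relation))
        if believed is not None and believed != tail:
            flagged.append({"triple": (head, relation, tail), "suggested_tail": believed})
    return flagged


# Reasoning path containing the two deliberately injected errors.
PATH = [
    ("Dali Duan family", "strongest_art", "One Yang Finger"),
    ("Dali Duan family", "common_art", "Six Meridians Divine Sword"),
    ("Duan Yu", "masters", "Six Meridians Divine Sword"),
    ("Master One", "masters", "One Yang Finger"),
]
# Hypothetical stand-in for the LLM's parametric knowledge.
LLM_BELIEFS = {
    ("Dali Duan family", "strongest_art"): "Six Meridians Divine Sword",
    ("Dali Duan family", "common_art"): "One Yang Finger",
}

for issue in reflect(PATH, LLM_BELIEFS):
    print(issue["triple"], "->", issue["suggested_tail"])
```

Triples the checker has no opinion on (here the two `masters` triples) are left alone, which keeps the feedback focused on specific, correctable facts rather than on the whole path.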

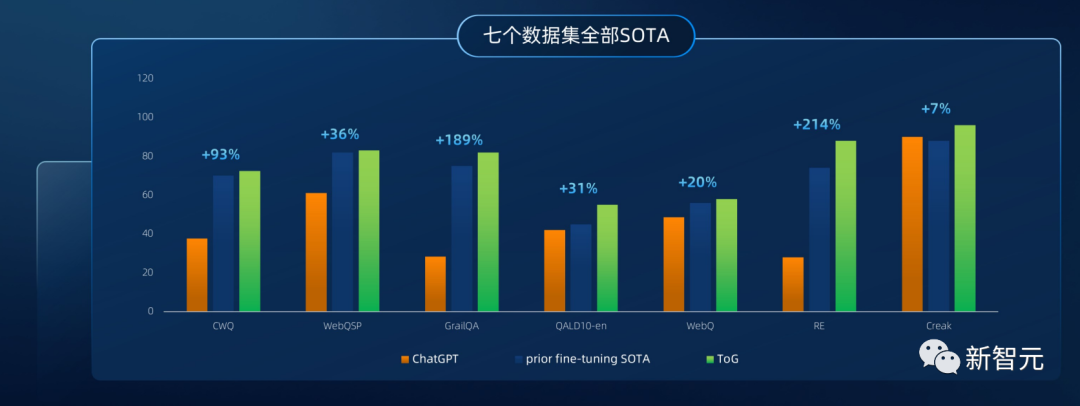

7 new SOTA results, with deep reasoning up to 214% better than ChatGPT

Think-on-Graph was evaluated on 9 datasets spanning four knowledge-intensive tasks (KBQA, open-domain QA, slot filling, and fact checking).

Compared with ChatGPT (GPT-3.5) under different prompting strategies such as IO, CoT, and CoT-SC, Think-on-Graph performed significantly better on all datasets. For example, on the Zeroshot-RE dataset, ChatGPT with CoT reached an accuracy of 28.8%, while Think-on-Graph with the same prompt reached 88%.
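For a sense of scale, the usual relative-improvement formula applied to the Zeroshot-RE numbers above gives roughly three times the accuracy, a relative gain of about 206%. This is only arithmetic on the two reported figures; the article does not say which dataset or baseline the headline's "up to 214%" refers to, so the formula is an assumption about how such figures are computed.

```latex
\text{relative gain}
  = \frac{\text{Acc}_{\text{ToG}} - \text{Acc}_{\text{CoT}}}{\text{Acc}_{\text{CoT}}}
  = \frac{88\% - 28.8\%}{28.8\%}
  = \frac{59.2}{28.8}
  \approx 2.06 \quad (\approx 206\%)
```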

When the base model was upgraded to GPT-4, Think-on-Graph's reasoning accuracy improved further, setting new SOTA results on 7 datasets and coming very close to SOTA on CWQ.

Notably, Think-on-Graph received no supervised incremental training or fine-tuning on any of these test datasets, which demonstrates strong plug-and-play capability.

Furthermore, the researchers found that even with a smaller base model (such as LLAMA2-70B), Think-on-Graph still outperformed ChatGPT on multiple datasets, which may give users of large models a lower-compute option.
