Image Source: Generated by Wujie AI

"I have been threatened with AI-generated private nude photos, what should I do?"

On October 16, 2023, Xiaohongshu blogger "tinky是只喵" posted a thread stating that she had been threatened with AI nude photos.

According to the email, a foreigner claiming to be a nude-painting artist said he had been commissioned to create nude images of the blogger, but the client never paid. He therefore demanded 500 USDT from the blogger (USDT is a cryptocurrency pegged to the US dollar; 500 USDT is roughly 3,500 RMB). Otherwise, he threatened, he would publicly sell the nude images bearing her likeness and keep producing more from her social media content.

"I looked at those photos, and people who don't know would really think it's me," "tinky是只喵" expressed anxiously in the comments.

This is not an isolated case. A search of social media platforms turns up many similar posts seeking help. Extortionists mainly target young men and women, and extortion with AI-synthesized pornographic images has become a new type of online fraud. The hard truth is that, now that personal images leak so easily, you can be threatened with AI-generated images even if you have done nothing at all.

Extortion is not the only problem. The misuse of AI to produce inappropriate content, AI-assisted telecom fraud, and AI-related infringement are also common.

Technology itself is neither good nor bad, but the people who use the technology are. While we cheer for the convenience brought by the development of AI technology, one fact that cannot be ignored is that after the emergence of new technology, it often tends to be applied in various gray areas. From the pornography industry to Deepfake scams, in the dark corners of the internet, the shadow bred by AI is constantly expanding.

AI, Operating in Gray Areas

When ChatGPT entered the human internet world as a dark horse, we may have realized that generative AI would inevitably pose risks of sliding into gray areas. After all, they demonstrate feedback capabilities far beyond people's imagination, making it easy for them to become "perfect companions" with sexual undertones in conversations, as long as users have the demand.

In a previous article published by Hedgehog Community, "Besides the Robot That Initiates Love, What Else Can AI Bring to Community Products?" we mentioned the AI conversation product "Nuwa AI" launched by Hupu App, which sparked heated discussions due to its edgy nature.

However, similar issues are not unique to a specific product. Most AI conversation products on the market have some gray areas in terms of usage, and many users choose virtual lovers just to "have fun."

Feihe, a user of AI conversation products, told Hedgehog Community that the main problem with Hupu's Nuwa AI is that it sits inside a public community. In terms of how explicit it gets, she said, Hupu's AI is a novice compared with many AI conversation apps. "Based on my experience, many AI apps can deliver a similar experience. As long as you keep training and guiding it, you can create a virtual lover willing to 'have fun' with you."

She listed several apps she had used: "Glow, Dream Island, and others have similar functions. Compared with AI conversation in community products, the conversations on these apps are more private, so they do not have a significant negative impact." This may also be why platforms have not regulated this area closely.

But this does not mean that these virtual lovers who are willing to "have fun" belong exclusively to each user. On apps like Glow, there are intelligent entities trained by other users open for conversation, some of which openly display suggestive labels such as "willing to do anything for money" and "submissive and non-resistant."

Some users resent such content. On apps like Xiaohongshu, there are many complaints about AI conversation bots from users who started a conversation on a whim and were put off by the AI's "offensive" remarks. "I just wanted to try it out, why do I have to go through such a strange conversation," they say.

In fact, with all kinds of users steering these systems, AI "harassing" users is no longer news.

In early 2023, the social chat AI product Replika was involved in a large number of "sexual harassment" incidents. Many carefully trained AI entities suddenly became impolite and indiscreet, talking intimately about explicit content. Some entities even sent explicit photos, giving users a visual shock.

When an AI that users regarded as a friend or relative suddenly starts outputting pornographic images, many find it difficult to accept. It is worth noting that Luka, the technology company behind Replika, once relied on intimate chat as an important source of revenue, allowing users who paid a monthly fee to chat freely with AI entities about adult content. The mass "sexual harassment" incident was in fact a large-scale loss of control over the AI following a version update.

"Like many social apps, AI conversation products may need to satisfy some users' 'sexual fantasies' in order to truly attract more users, especially those with spending power," Feihe believes. The fact is that engaging in "edgy" activities can indeed attract attention and conversion, which is a dilemma faced by AI conversation products both domestically and internationally.

AI-generated images have become a "hotbed" for the same kind of activity.

Image generation is one of the fastest-growing areas of AIGC. With the rapid development of model products such as Stable Diffusion and Midjourney, AI-generated images have demonstrated strong creative ability in both accuracy and originality, but they have also become a tool for more people to operate in gray areas.

Open any major online platform and you will find lifelike, realistic-style AI images with "lowbrow appeal." Because they cost almost nothing to produce, revealing and exaggerated AI images flood users' screens.

In these gray areas, selling explicit AI photos has become a profitable business for many people, who can generate batches of high-quality revealing images with just a few clicks.

Who Are the Victims?

Driven by human nature, AI has inevitably become a tool for producing vulgar content, and new discussions have begun to spread on the internet: in the AIGC era, since AI can also generate pornographic content, can it reduce the occurrence of real-life incidents such as voyeurism? Some people may think that "spontaneously generated" AI images could replace real human pornographic content, thereby reducing tragedies like "Nth Room."

But the answer is likely to be no.

As it is used extensively to produce vulgar content, AI is becoming an accomplice to criminals. The "AI nude photo extortion" mentioned at the beginning is the most common criminal method, and it relies on an AI technology known as DeepFake, or deep forgery.

DeepFake can be traced back to as early as 2016, when the mobile internet was just beginning to rise, and in recent years face-swapping has become its main form. Before the rise of AIGC, however, face-swapping and related technologies still had significant flaws, and ordinary people could generally spot face-swapped content through careful observation. With the rapid development of technologies such as AI image generation, DeepFake has undergone a qualitative change, and its potential for harm has grown accordingly.

In the AI era, it is difficult for us to visually distinguish the authenticity of a picture or video.

Qianyue is one of the victims of DeepFake. As a graphic designer, he had long been exposed to AI-related products, but he never expected that one day he would become a victim of AI-generated images. In July 2023, he met an "online friend," and the two added each other on QQ.

"To be honest, it's a bit embarrassing, I couldn't control my desires." Under the other party's guidance, he downloaded a piece of software, and soon the other party sent him two nude photos on QQ, with his own face clearly visible in them.

"The software probably accessed my address book and photo album." Qianyue told Hedgehog Community that the other party synthesized the nude photos directly from the contents of his photo album and quickly made a ransom call. "The voice on the other end was male, and he threatened me right away, demanding 40,000 to 50,000 RMB, otherwise he would send the photos to everyone in my address book." Qianyue said he didn't have the money, and the extortionist told him to "take out a loan" instead.

In the end, he did not give in and reported the case to the police immediately. "I was lucky, the other party probably saw that I was not valuable and didn't waste any more time." After being rejected, the extortionist sent a screenshot of text messages purporting to show that the synthesized photos had been sent to Qianyue's friends and family. Fortunately, no one actually received them. "It's probably also synthesized, mainly because I was lucky, and they also have KPIs, so they gave up when they saw that I didn't agree."

But not everyone is as lucky, and many people have been extorted because of AI-generated images.

On the day of the extortion, Qianyue posted a thread seeking help on social platforms. After the post was published, four or five people with similar experiences contacted him, some of whom had already chosen to "spend money to avoid disaster." "The police told me that the only way in this situation is to delete and ignore it," Qianyue said, as the extortionists are mostly overseas and difficult to trace successfully.

This type of extortion is similar to the once rampant "nude chat" scam, which also exploits people's desires, but AI's involvement has simplified the entire process. With nothing more than software that can access photo albums and address books, extortionists can synthesize pornographic images of a victim who never exposed themselves on camera, and the results are hard to tell from real photos.

It is not only men who have failed to "control their desires" who are at risk. Women are equally vulnerable to this kind of extortion.

The blogger mentioned at the beginning was targeted with DeepFake using photos she had posted on social networks. In addition to publicly posted photos, some AI image generation apps and unidentified QR codes can also be channels for information theft, by "feeding" this real image information to AI to generate lifelike pornographic images. "There are many people currently experiencing this kind of extortion," Qianyue told Hedgehog Community.

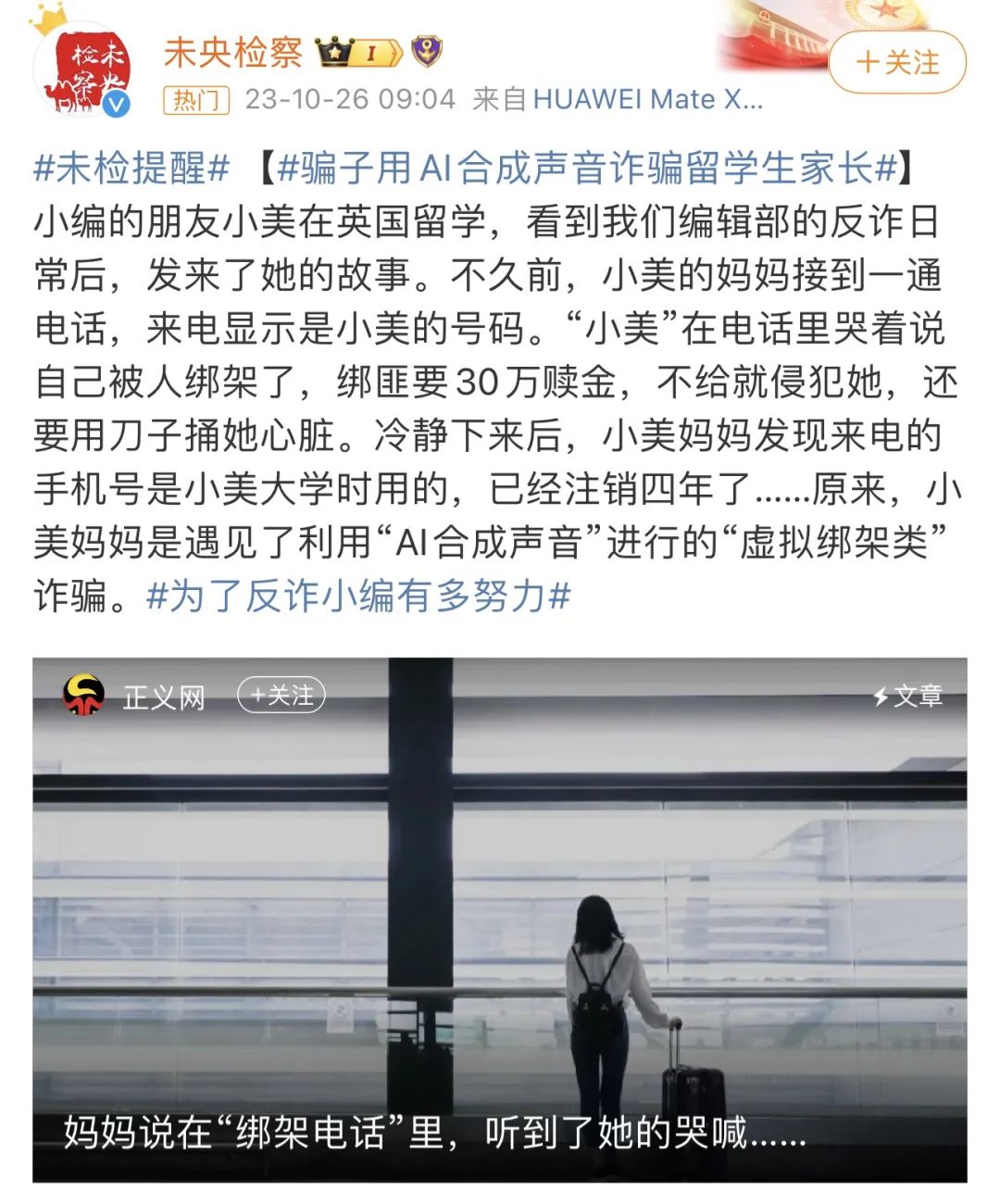

In addition to extortion through photos, with the increasing maturity of technologies such as AI-generated voices and real-time face-swapping, DeepFake scams in related fields are also becoming more common.

"I received a scam call, and the voice sounded exactly like my friend's, I couldn't tell the difference." According to an AI voice scam report released by McAfee in May 2023, 77% of victims of AI-synthesized voice scams suffered financial losses, and more than one-third of them lost over $1,000, making this type of scam one of the most mainstream fraud methods today.

Even where no extortion or fraud is intended, many women who have been maliciously targeted with AI-synthesized images and videos still suffer from rumors, privacy breaches, and online violence. It is worth noting that many creators of AI pornographic images extract images from public online communities to "feed" AI. According to the media outlet 404 Media, AI image generation websites such as CivitAI and Discord have been found to scrape images from the internet, and platforms with large user bases such as Reddit and YouTube are deeply affected.

AI pornographic image creators scour photos and image information from various corners of the internet, ultimately "feeding" the "beast" of pornographic content. When these images are leaked to the entire internet, ordinary people may face extortion, while celebrities and public figures may face large-scale image and video rumors.

Today, many celebrities and internet celebrities have become victims of DeepFake. With the support of AI technology, a large number of face-swapping videos and synthesized images have been created, causing extremely negative effects. This is a top-down crisis. In the AI era, everyone may be forced to become a "commodity," and the concept of "seeing is believing" no longer holds true.

Unrestrained, Out of Control, Governance

In addition to gray industries such as pornography and fraud, content infringement issues behind AIGC are also escalating.

The debate sparked by AI drawing is still ongoing, with opponents arguing that AI-generated images are "patchwork" and "monstrosities." In their view, AI-generated images are not a form of creation but a recombination. When fed a large number of an artist's works, AI will produce similar works, which in itself is a form of infringement. Since AI drawing came into widespread use on major platforms, this "infringement" has become increasingly evident, and there have even been cases of companies using AI to infringe.

While the AI drawing controversy continues, a new "trust crisis" is spreading in the field of text. In September 2023, Amazon introduced a new policy limiting the number of works that AI content creators can publish per day. The reason is that AI-generated books are disrupting the entire book market.

Like AI-generated images, AI-generated text is based on extensive deep learning of online text. When generating a certain type of book, it inevitably draws on the text of certain authors, leading to infringement issues. In June, a group of authors and users protested against Amazon because AI-generated "books" on the young adult romance bestseller list clearly bore the stylistic traits of certain authors.

When an author publishes a large amount of text content online, the text they create is very likely to be fed to AI as training data, ultimately nurturing an AI creator with a style similar to the author's, usurping the readers and earnings that the author should rightfully have.

On the other hand, because AI can produce books efficiently and cheaply, AI books have proliferated excessively. Their quality is hard to guarantee, and they flood the market and disrupt the experience of buyers.

From AI images to AI books, infringement and proliferation are rampant. Both ultimately stem from "human" factors: low barriers to AI creation and weak supervision leave creation unregulated. But with AI products now so widely available, the loss of control by AI itself is also a cause for concern.

When errors occur in the operation and maintenance of a product, the AI itself is likely to lose control, as the Replika harassment incident mentioned earlier shows.

Even major companies find it difficult to avoid such problems. In October 2023, Meta launched the AI-generated chat sticker feature "Emu," allowing users to create high-quality personalized stickers within seconds using prompts. The results, however, have been shocking: because the feature lacks an adequate filtering system, controversial prompts lead the AI to produce all kinds of bizarre stickers, such as Musk with female features or children holding weapons.

Currently, billions of stickers have been created, but most of them are the result of users exploiting system bugs for "fun," producing controversial and grotesque images, many of which violate community rules. Because users are hard to regulate, once the system itself has problems, the loss of control by AI becomes inevitable.

The issue of unrestrained and out-of-control AI technology must be addressed, but it is a challenging problem for all participants in the industry, including technology developers, companies, and regulatory authorities.

The difficulty lies first in the open nature of AI technology: when everyone can participate, it is hard to regulate how the technology is applied. From the perspective of content protection and copyright, it is extremely challenging to police every content community. Most critically, the gray areas offer significant room for commercial conversion, and when profit becomes the sole driving force, all governance efforts become even more difficult.

Governance in China is underway.

On October 12, 2023, the Artificial Intelligence Security Governance Professional Committee of the China Internet Security Association was officially established, initiated by the National Internet Emergency Response Center, Alibaba Cloud, Huawei, Qihoo 360, and other organizations. The association will make efforts in the field of AI security in China, with a focus on content security, privacy protection, and intellectual property protection.

As things stand, governance at the upstream end of the industry will still take time, so for now regulation and supervision will inevitably be the focus.

An indisputable fact is that AI is becoming a tool to liberate productivity and is changing the world. But while promoting technological development, we need to remain vigilant.

After all, behind the technology is human nature that needs to be restrained.
