Source: Silicon Star

Image source: Generated by Wujie AI

Every seat was taken, and the aisle was also crowded with people.

You might even think this was a celebrity meet-and-greet.

But this was actually one of the roundtable discussions at the GenAI conference in Silicon Valley.

It was scheduled in the "secondary stage," at a time when people were most drowsy around noon, while the main stage of another conference room was filled with CEOs and founders of prominent Silicon Valley companies. Despite this, people continued to pour into this small room.

Their target was three Chinese researchers. In the past in Silicon Valley, such scenes always occurred when "Chinese executives at the highest positions in Silicon Valley companies" appeared, but this time, people were chasing after three young individuals.

Xinyun Chen, Chunting Zhou, and Jason Wei.

Young Chinese researchers from the three most important AI companies in Silicon Valley.

These three names are definitely not unfamiliar to those closely following the trend of large models.

Xinyun Chen is a senior research scientist at Google Brain and DeepMind's Reasoning team. Her research interests include neural program synthesis and adversarial machine learning. She obtained her Ph.D. in Computer Science from the University of California, Berkeley, and her Bachelor's degree in Computer Science from Shanghai Jiao Tong University's ACM class.

The papers she has been involved in, such as those that allow LLM to create its own tools and teach LLM to debug code on its own, are very important and critical in the field of AI code generation. She has also been described somewhat exaggeratedly by some media as a member of the "Chinese Dream Team at Google DeepMind."

Chunting Zhou is a research scientist at Meta AI. In May 2022, she obtained her Ph.D. from the Language Technologies Institute at Carnegie Mellon University, and her main research interests currently lie in the intersection of natural language processing and machine learning, as well as new methods for alignment. The paper she led, which attempts to train large models with fewer and more refined samples, was highly praised by Yann Lecun and recommended in a post, providing the industry with new ideas beyond mainstream methods in RLHF.

And the last one is Jason Wei, a star researcher highly respected in the domestic and international AI community, from OpenAI. The renowned developer of COT (Chain of Thoughts). After graduating with a bachelor's degree in 2020, he became a senior researcher at Google Brain, where he proposed the concept of Chain of Thoughts, which was also a key factor in LLM's emergence. In February 2023, he joined OpenAI and became part of the ChatGPT team.

People came for these companies, but more so for their research.

In this forum, they often seemed like students, as if you were watching a discussion in a university, with intelligent minds, quick logical responses, a slight nervousness, but also eloquence.

"Why must we always think of hallucinations as a bad thing?"

"But Trump has hallucinations every day."

Laughter filled the room.

This was a rare conversation, and the following is a transcript of the conversation, in which Silicon Star also participated and posed questions.

Question: Let's discuss a very important issue in LLM, which is hallucination. The concept of hallucination was proposed when the model parameters were still few and small, but now, as the models are getting larger, what changes have occurred in the problem of hallucination?

Chunting: I can start by talking about this. I did a project on hallucination three years ago. At that time, the hallucination problem we faced was very different from what we face now. At that time, we were working with very small models, and the discussion of hallucination was also in specific domains, such as translation or document summarization functions. But now, it's clear that the scope of this problem has expanded.

As for why large models still produce hallucinations, I think there are many reasons. First, in terms of training data, because humans have hallucinations, there are also problems with the data. The second reason is due to the way the model is trained. It cannot answer real-time questions, so it will give the wrong answer. Deficiencies in reasoning and other abilities also lead to this problem.

Xinyun: Actually, I would start this response with another question. Why do humans think of hallucinations as a bad thing?

I have a story. A colleague asked the model a question, which also came from some evaluation question bank, "What happens when the princess kisses the frog?" The model's answer was, "Nothing will happen."

In many model evaluation answers, the answer "it will turn into a prince" is considered the correct answer, and the answer "nothing will happen" is marked as wrong. But for me, I actually think this is a better answer, and many interesting humans would also answer this way.

The reason people think this is a hallucination is because everyone hasn't thought about when AI should not have hallucinations and when it should.

For example, some creative work may require a strong imagination. We are constantly making models bigger, but one problem here is that no matter how big it is, it cannot accurately remember everything. Humans actually have the same problem. I think one thing that can be done is to provide the model with some enhanced tools, such as search, calculation, and some programming tools. With the help of these tools, humans can quickly solve the problem of hallucinations, but the model doesn't seem to be very good at it at the moment. This is also a problem I am very interested in researching.

Jason: I would say, Trump is producing hallucinations every day. (laughs) Whether it's good or bad.

But I think another issue here is that people's expectations of language models are changing. In 2016, if an RNN generated a URL, your expectation was that it must be wrong and not trustworthy. But today, I guess you would expect the model to be correct in many things, so you would also think that hallucinations are more dangerous. So this is actually a very important background.

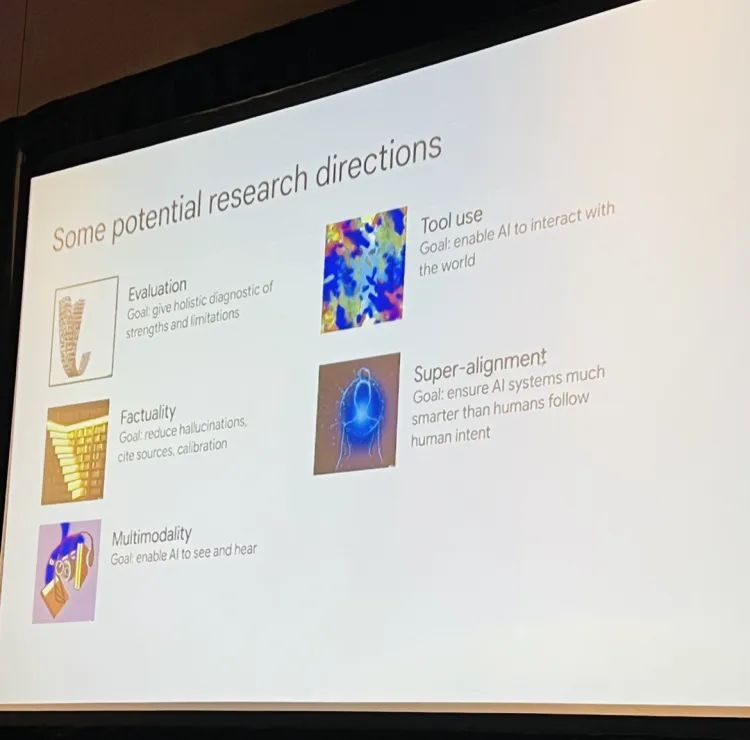

(List of potential research directions presented by Jason Wei)

Question: The next question is for Xinyun. A very important topic in the industry now is model self-improvement and self-debugging, for example. Can you share your research on this?

Xinyun: The inspiration for model self-debugging actually comes from how humans program. We know that when humans program, if it ends in one go, there will definitely be problems, and debugging is also a very important skill for skilled programmers. Our goal is for the model to look at the code it generates, look at the results of its operations, and then judge where the problem lies, without any external instructions, without any human telling it where it went wrong. If there is a problem, it will go debug.

And why self-debugging in code generation can be helpful, I think there are two reasons. First, code generation is mostly based on training with open-source code, and it can generate code that roughly meets your requirements, but the code may be very long and have many errors, making it impossible to run. But we also don't need to start from scratch without using existing code repositories, because no matter how many times you start from scratch, the same problems cannot be avoided, so it is necessary to do code generation based on existing code resources, and debugging becomes important. Second, the debugging process continuously receives some external feedback, which is very helpful for the model's understanding ability.

Question: A follow-up question is, if we give the model to itself for self-improvement, will it then not encounter any problems?

Chunting: We once conducted a strange experiment, and the result was that the agent deleted the Python development environment after executing the code. If this agent were to enter the real world, it might have a negative impact. This is something we need to consider when developing agents. I also found that the smaller the basic model, the smaller its capabilities, and it is also difficult for it to self-improve and reflect. Perhaps we can let the model see more "errors" during the alignment process to teach it to self-improve.

Question: What about you, Jason? How do you approach and evaluate models?

Jason: Personally, I think evaluating models is becoming increasingly challenging, especially in new paradigms. There are many reasons behind this. One is that language models are now being used in countless tasks, and you don't even know the extent of their capabilities. The second reason is, looking at the history of AI, we have mainly been solving traditional classic problems, with short-term goals and short texts. But now we are dealing with longer texts, which even humans need a long time to judge. Perhaps the third challenge is that for many things, the so-called correct behavior has not been clearly defined.

I think there are some things we can do to improve evaluation capabilities. The most obvious one is to evaluate from a broader range, and when encountering harmful behavior, can we break it down into smaller tasks for evaluation. Another thing is whether we can provide more evaluation methods for specific tasks, perhaps humans can provide some, and then AI can also provide some.

Question: What are your thoughts on the path of using AI to evaluate AI?

Jason: It sounds great. I think a trend I have been paying attention to recently is whether the models used to evaluate models can perform better. For example, the approach to training constitutional AI, even if it is not performing perfectly now, there is a good chance that the performance of these models will be better than that of humans with the next generation of GPT.

Silicon Star: You are all very young researchers. I would like to know how you, as researchers in the industry, view the serious mismatch between the GPU and computing power in the industry and academia.

Jason: If you work in some constrained environments, it may indeed have a negative impact, but I think there is still a lot of room for work, such as in the area of algorithms, research topics that may not be very dependent on GPUs are always in demand.

Chunting: I also think there is a lot of room, there are areas worth exploring. For example, research on alignment methods can actually be conducted with limited resources. And perhaps in the Bay Area, there are more opportunities for academics.

Xinyun: Overall, for LLM research, there are two major directions, one is to improve performance, and the other is to understand the model. We see many good frameworks, benchmarks, and some excellent algorithms coming from academia.

For example, when I graduated with my Ph.D., my advisor gave me a piece of advice—AI researchers should think about research from a time dimension that extends far into the future, not just considering improvements to current things, but rather technological concepts that may bring about fundamental changes in the future.

免责声明:本文章仅代表作者个人观点,不代表本平台的立场和观点。本文章仅供信息分享,不构成对任何人的任何投资建议。用户与作者之间的任何争议,与本平台无关。如网页中刊载的文章或图片涉及侵权,请提供相关的权利证明和身份证明发送邮件到support@aicoin.com,本平台相关工作人员将会进行核查。