Introduction: From System Architecture to Reproducibility Assurance

In traditional blockchain systems, proof of work relies primarily on the randomness of hash operations for security. Gonka PoW 2.0 faces a harder challenge. In tasks based on large language models, computation results must be unpredictable, yet any honest node must be able to reproduce and verify the same computation. This article examines how the MLNode side achieves this through a carefully designed seed mechanism and deterministic algorithms.

Before diving into the specific technical implementations, we need to understand the overall design of the PoW 2.0 system architecture and the key role that reproducibility plays within it.

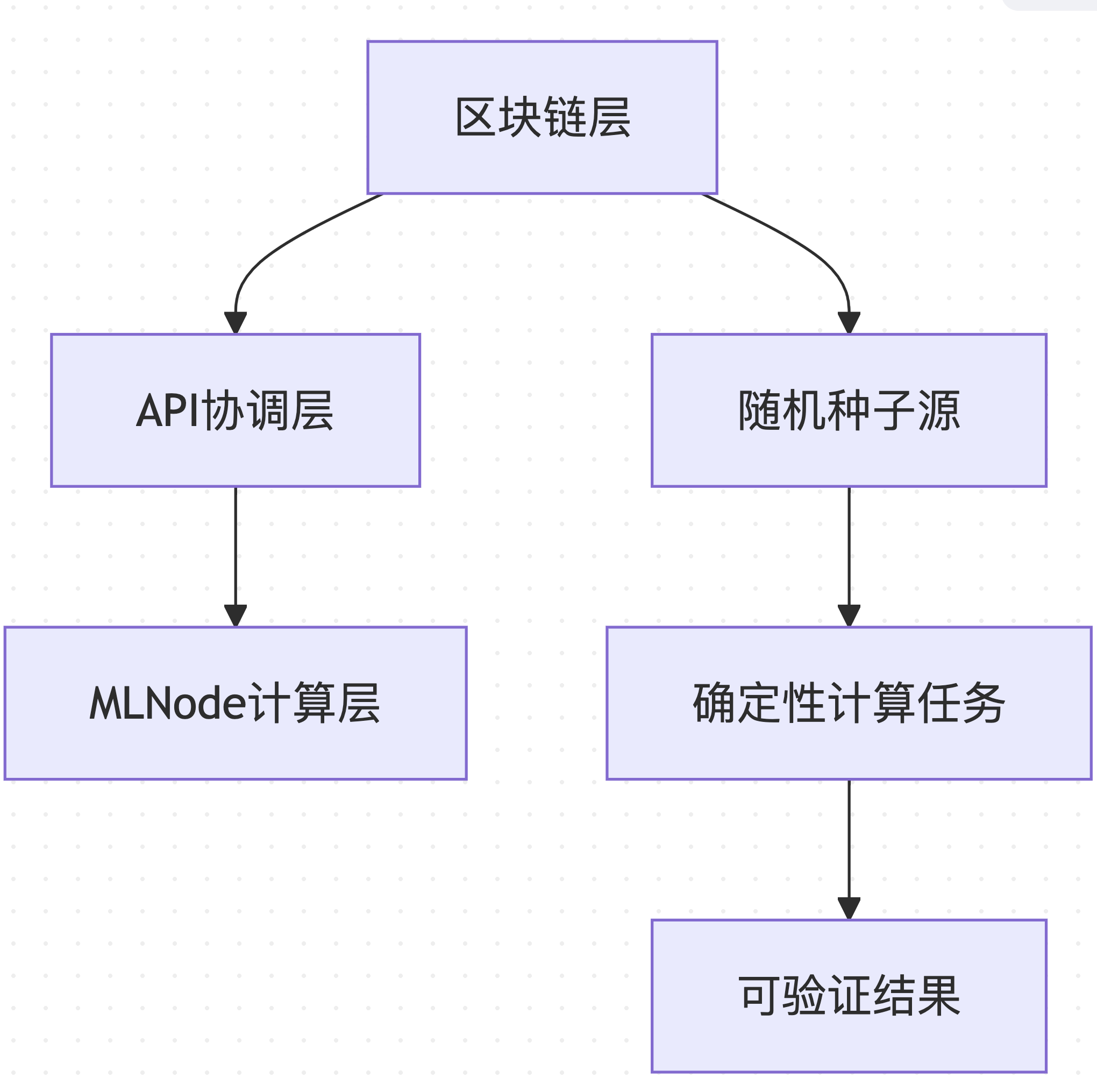

1. PoW 2.0 System Architecture Overview

1.1 Layered Architecture Design

Gonka PoW 2.0 adopts a layered architecture to ensure that reproducibility spans from the blockchain layer to the computation execution layer:

Data Source: Based on decentralized-api/internal/poc and mlnode/packages/pow architecture design

This layered design allows different components of the system to be independently optimized while maintaining overall consistency and verifiability.

1.2 Core Goals of Reproducibility

The reproducibility design of the PoW 2.0 system serves the following core goals:

Computational Fairness: Ensuring all nodes face the same computational challenges

Result Verifiability: Any honest node can reproduce and verify computation results

Anti-Cheat Assurance: Making pre-computation and result forgery computationally infeasible

Network Synchronization: Ensuring state consistency in a distributed environment

These goals collectively form the foundation of the PoW 2.0 reproducibility design, ensuring the system's security and fairness.

2. Seed System: Unified Management of Multi-Level Randomness

After understanding the system architecture, we need to explore the key technology for achieving reproducibility—the seed system. This system ensures the consistency and unpredictability of computations through multi-level randomness management.

2.1 Seed Types and Specific Goals

Gonka PoW 2.0 uses four types of seeds, each serving a specific computational goal:

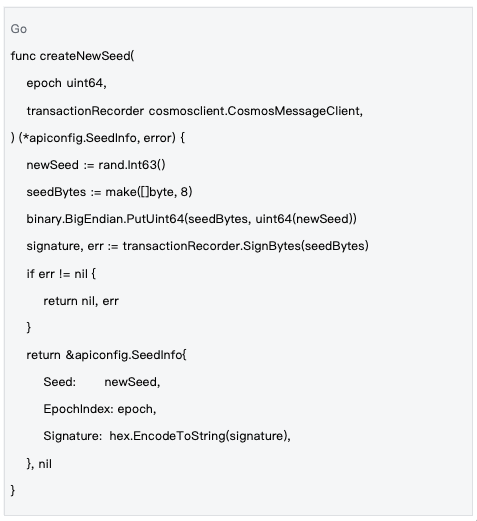

Network-Level Seeds

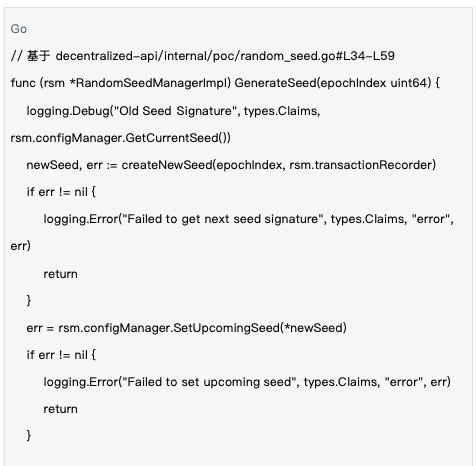

Data Source: decentralized-api/internal/poc/random_seed.go#L90-L111

Goal: To provide a unified randomness foundation for each epoch of the entire network, ensuring all nodes use the same global random source.

Network-level seeds are the foundation of the system's randomness, ensuring that all nodes in the network use the same randomness basis through blockchain transactions.

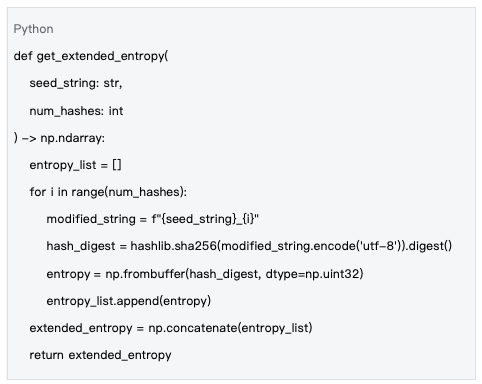

Task-Level Seeds

Data Source: mlnode/packages/pow/src/pow/random.py#L9-L21

Goal: To generate high-quality random number generators for each computational task through multiple rounds of SHA-256 hashing to expand the entropy space.

Task-level seeds provide high-quality randomness for each specific computational task by expanding the entropy space.
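The task-level idea can be sketched in standard-library Python. This is a minimal sketch, not the code in random.py: the number of hashing rounds (`rounds=4`), the way digests are chained and concatenated, and the helper name `make_task_rng` are all hypothetical. It shows how a short seed string can be stretched, via repeated SHA-256, into a large integer that seeds a deterministic generator.

```python
import hashlib
import random


def make_task_rng(seed_str: str, rounds: int = 4) -> random.Random:
    """Hypothetical helper: expand a seed string into a seeded RNG.

    Each round hashes the previous digest; all digests are concatenated
    into one large integer seed (rounds * 256 bits).
    """
    digest = seed_str.encode("utf-8")
    material = b""
    for _ in range(rounds):
        digest = hashlib.sha256(digest).digest()
        material += digest
    # random.Random accepts an arbitrarily large int as its seed.
    return random.Random(int.from_bytes(material, "big"))


if __name__ == "__main__":
    a = make_task_rng("block_hash_abc")
    b = make_task_rng("block_hash_abc")
    print(a.random() == b.random())  # True: same seed string, same sequence
```

Hashing stretches the seed into more bits of generator state; the unpredictability itself still comes from the seed string, which is why the seed must be unknown until the epoch begins.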

Node-Level Seeds

Data Source: Seed string construction pattern f"{hash_str}_{public_key}_nonce{nonce}"

Goal: To ensure that different nodes and different nonce values produce completely different computational paths, preventing collisions and repetitions.

Node-level seeds ensure that each node's computational path is unique by combining the node's public key and nonce value.
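The seed-string pattern quoted above is easy to reproduce. In the sketch below, `node_seed_string` follows that pattern exactly, while `seed_digest` and the placeholder block hash and key values are hypothetical, added only to show that changing either the public key or the nonce yields a different seed.

```python
import hashlib


def node_seed_string(hash_str: str, public_key: str, nonce: int) -> str:
    # Pattern quoted in the article: f"{hash_str}_{public_key}_nonce{nonce}"
    return f"{hash_str}_{public_key}_nonce{nonce}"


def seed_digest(seed: str) -> str:
    # Hypothetical: fingerprint a seed string with SHA-256 for comparison.
    return hashlib.sha256(seed.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    block_hash = "0xabc123"  # placeholder value for illustration
    s1 = node_seed_string(block_hash, "pubkeyA", 0)
    s2 = node_seed_string(block_hash, "pubkeyA", 1)  # same node, next nonce
    s3 = node_seed_string(block_hash, "pubkeyB", 0)  # different node
    print(s1)  # 0xabc123_pubkeyA_nonce0
    print(len({seed_digest(s) for s in (s1, s2, s3)}))  # 3
```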

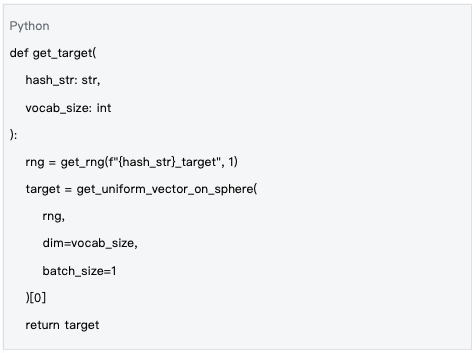

Target Vector Seeds

Data Source: mlnode/packages/pow/src/pow/random.py#L165-L177

Goal: To generate a unified target vector for the entire network, with all nodes optimizing towards the same high-dimensional spherical position.

Target vector seeds ensure that all nodes in the network compute towards the same target, which is key to verifying result consistency.

2.2 Seed Lifecycle Management

Management Mechanism: Seeds are managed at the epoch level, with new seeds generated at the start of each epoch and synchronized to the entire network through blockchain transactions, ensuring all nodes use the same randomness basis.

The lifecycle management of seeds ensures the timeliness and consistency of randomness, which is an important guarantee for the secure operation of the system.

3. Seed-Driven Generation Mechanism of LLM Components

With the seed system in place, we can look at how these seeds drive the generation of LLM components, a key step in achieving reproducibility.

3.1 Random Initialization of Model Weights

Why is random initialization of model weights necessary?

In traditional deep learning, model weights are usually obtained through pre-training. PoW 2.0 instead initializes weights randomly from a seed, to ensure:

Unpredictability of Computational Tasks: Because the weights are not fixed, outputs for a given input cannot be predicted or precomputed

ASIC Resistance: Dedicated hardware cannot be optimized for a fixed set of weights

Fair Competition: All nodes use the same random initialization rules

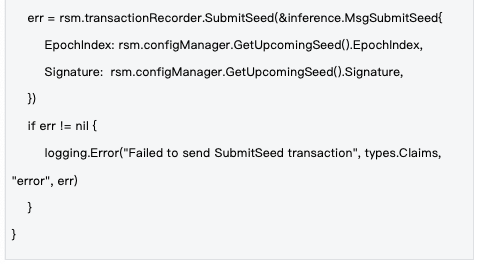

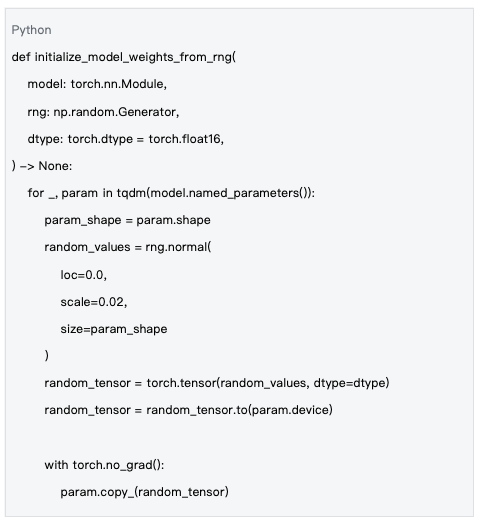

Data Source: mlnode/packages/pow/src/pow/random.py#L71-L88

Random initialization of model weights is a key step in ensuring computational unpredictability and fairness.

Deterministic Process of Weight Initialization

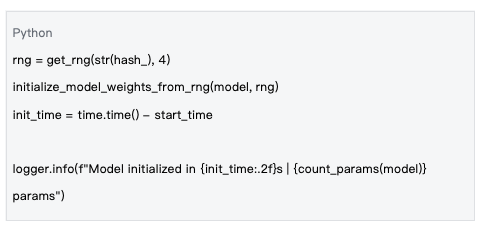

Data Source: mlnode/packages/pow/src/pow/compute/model_init.py#L120-L125

Key Features:

• Uses block hash as a random seed to ensure all nodes generate the same weights

• Initializes weights using a normal distribution N(0, 0.02²)

• Supports different data types (e.g., float16) for memory optimization

This deterministic process ensures that different nodes generate completely consistent model weights under the same conditions.
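A minimal sketch of the three features above in standard-library Python follows. It is not the code in model_init.py: mixing a layer name into the block-hash seed, the function names, and the use of Python's `random` generator are assumptions. Values are drawn from N(0, 0.02²), and the float16 step is emulated by round-tripping through IEEE 754 half precision.

```python
import hashlib
import random
import struct
from typing import List


def init_weight_matrix(block_hash: str, layer: str, rows: int, cols: int,
                       std: float = 0.02) -> List[List[float]]:
    """Hypothetical sketch: draw a rows x cols matrix from N(0, std^2).

    Mixing a layer name into the block-hash seed gives each layer its own
    stream, while every node that knows the block hash derives identical values.
    """
    seed_bytes = hashlib.sha256(f"{block_hash}:{layer}".encode("utf-8")).digest()
    rng = random.Random(int.from_bytes(seed_bytes, "big"))
    return [[rng.gauss(0.0, std) for _ in range(cols)] for _ in range(rows)]


def to_float16(x: float) -> float:
    # Round-trip through IEEE 754 half precision ("e" format code in struct).
    return struct.unpack("<e", struct.pack("<e", x))[0]


if __name__ == "__main__":
    w_node_a = init_weight_matrix("0xabc", "layer0", 2, 3)
    w_node_b = init_weight_matrix("0xabc", "layer0", 2, 3)
    print(w_node_a == w_node_b)  # True: same block hash, same weights
    print([to_float16(v) for v in w_node_a[0]])  # weights as stored in float16
```

A real deployment would also pin the generator implementation: Python's documentation guarantees that `random()` reproduces the same sequence across versions for a given seed, but it does not make that promise for other methods such as `gauss()`.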

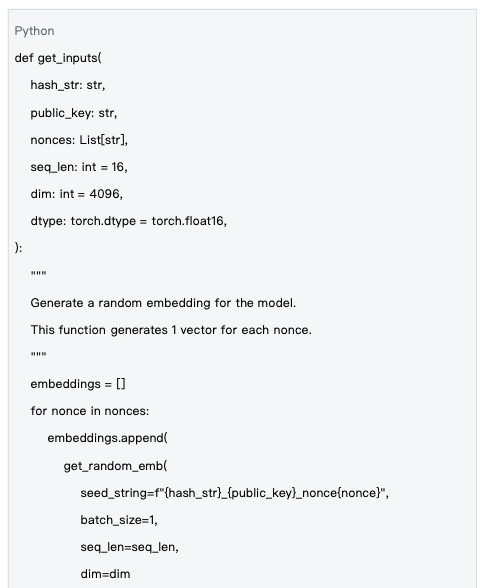

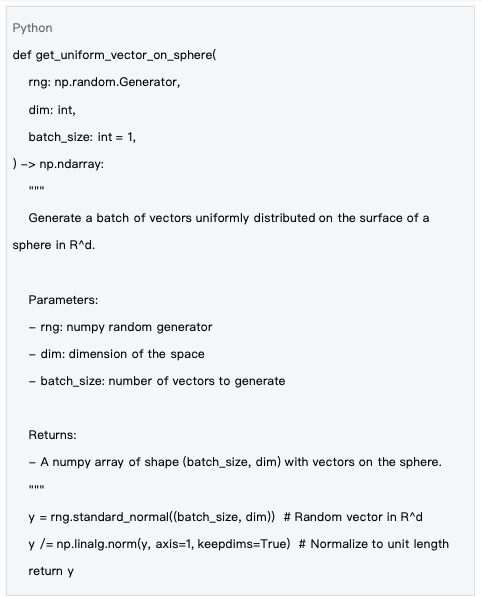

3.2 Input Vector Generation Mechanism

Why are random input vectors necessary?

Traditional PoW uses fixed data (e.g., transaction lists) as input, but PoW 2.0 needs to generate different input vectors for each nonce to ensure:

Continuity of Search Space: Different nonces correspond to different computational paths

Unpredictability of Results: Small changes in input lead to huge differences in output

Efficiency of Verification: Verifiers can quickly reproduce the same input

Data Source: mlnode/packages/pow/src/pow/random.py#L129-L155

The generation of random input vectors ensures the diversity and unpredictability of computations.

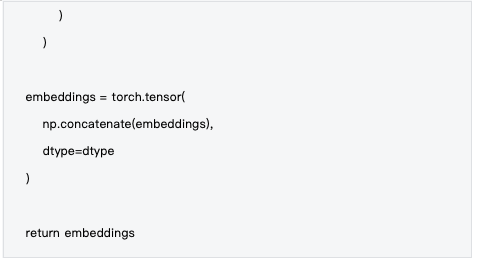

Mathematical Basis for Input Generation

Data Source: mlnode/packages/pow/src/pow/random.py#L28-L40

Technical Features:

• Each nonce corresponds to a unique seed string

• Uses standard normal distribution to generate embedding vectors

• Supports batch generation to improve efficiency

This mathematical basis ensures the quality and consistency of input vectors.
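The per-nonce generation can be sketched by reusing the seed-string pattern from section 2.1. This is an illustrative sketch, not the code in random.py: seeding through a single SHA-256 hash, the `seq_len`/`dim` parameters, and all function names are assumptions.

```python
import hashlib
import random
from typing import List


def seed_to_rng(seed_str: str) -> random.Random:
    # Hypothetical: one SHA-256 hash of the seed string seeds the generator.
    digest = hashlib.sha256(seed_str.encode("utf-8")).digest()
    return random.Random(int.from_bytes(digest, "big"))


def input_embeddings(hash_str: str, public_key: str, nonce: int,
                     seq_len: int, dim: int) -> List[List[float]]:
    """Hypothetical sketch: seq_len x dim standard-normal embeddings for one nonce."""
    rng = seed_to_rng(f"{hash_str}_{public_key}_nonce{nonce}")
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(seq_len)]


def input_batch(hash_str: str, public_key: str, nonces: List[int],
                seq_len: int, dim: int) -> List[List[List[float]]]:
    # Batch generation: one independent, reproducible input per nonce.
    return [input_embeddings(hash_str, public_key, n, seq_len, dim) for n in nonces]
```

Because each nonce has its own seed, a verifier can regenerate the input for a single claimed nonce without replaying the rest of the batch, which is what makes verification cheap.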

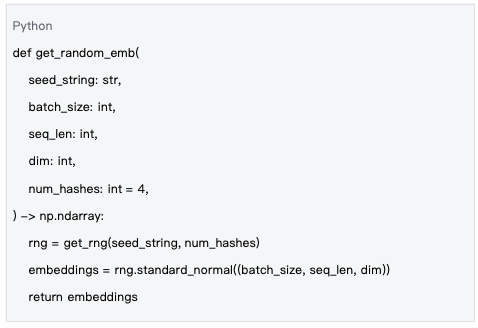

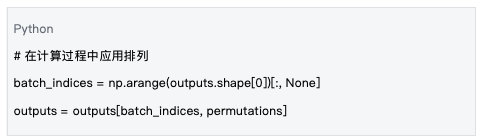

3.3 Output Permutation Generation

Why is output permutation necessary?

In the output layer of an LLM, the vocabulary is usually large (e.g., 32K-100K tokens). To increase computational complexity and prevent targeted optimization, the system applies a random permutation to the positions of the output vector:

Data Source: mlnode/packages/pow/src/pow/random.py#L158-L167

Output permutations increase the complexity of computations, enhancing the security of the system.

Application Mechanism of Permutations

Data Source: Based on the processing logic in mlnode/packages/pow/src/pow/compute/compute.py

Design Goals:

• Increase the complexity of computational challenges

• Prevent optimization for specific vocabulary positions

• Maintain determinism to support verification

This application mechanism ensures the effectiveness and consistency of permutations.
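Both generation and application of a permutation fit in a few lines. In this sketch the seed suffix `"_permutation"` and the function names are hypothetical; Python's `Random.shuffle` is a Fisher-Yates shuffle, so every node seeded identically gets the same permutation.

```python
import hashlib
import random
from typing import List, Sequence


def output_permutation(block_hash: str, vocab_size: int) -> List[int]:
    """Hypothetical sketch: seeded shuffle of vocabulary indices."""
    digest = hashlib.sha256(f"{block_hash}_permutation".encode("utf-8")).digest()
    perm = list(range(vocab_size))
    random.Random(int.from_bytes(digest, "big")).shuffle(perm)  # Fisher-Yates
    return perm


def apply_permutation(logits: Sequence[float], perm: Sequence[int]) -> List[float]:
    # Output position i takes the value from original index perm[i].
    return [logits[j] for j in perm]
```

Since the permutation is a pure function of the seed, it adds no nondeterminism: a verifier rebuilds the same index order and compares permuted outputs directly.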

4. Target Vector and Spherical Distance Calculation

After understanding the generation mechanism of LLM components, we need to further explore the core computational challenge in PoW 2.0—the calculation of target vectors and spherical distances.

4.1 What is a Target Vector?

The target vector is the "bullseye" of the PoW 2.0 computational challenge—every node attempts to make its model output as close as possible to this predetermined high-dimensional vector.

Mathematical Properties of the Target Vector

Data Source: mlnode/packages/pow/src/pow/random.py#L43-L56

Key Features:

• The vector lies on the high-dimensional unit sphere (||target|| = 1)

• Uses the Marsaglia method to ensure uniform distribution on the sphere

• No direction or dimension is favored: the distribution is invariant under rotation

The mathematical properties of the target vector ensure the fairness and consistency of the computational challenge.
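A uniform point on the unit sphere can be produced by the standard Gaussian-normalization construction: draw independent standard normals and divide by their Euclidean norm. The Gaussian's rotational symmetry makes the resulting direction uniform. Whether random.py uses exactly this variant, and how it derives its seed, are assumptions; the function name and seed string are hypothetical.

```python
import hashlib
import math
import random
from typing import List


def target_vector(seed_str: str, dim: int) -> List[float]:
    """Sketch: a uniformly distributed point on the unit sphere in R^dim."""
    digest = hashlib.sha256(seed_str.encode("utf-8")).digest()
    rng = random.Random(int.from_bytes(digest, "big"))
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(x * x for x in v))  # nonzero with probability 1
    return [x / norm for x in v]
```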

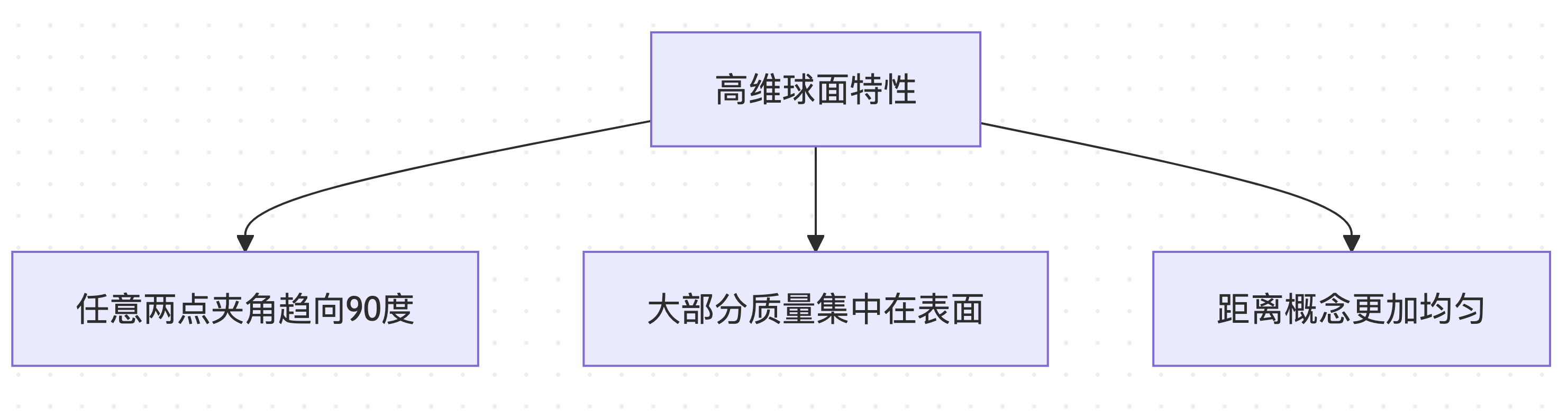

4.2 Why Compare Results on the Sphere?

Mathematical Advantages

Normalization Advantage: All vectors on the sphere have unit length, eliminating the influence of vector magnitude

Geometric Intuition: Euclidean distance directly corresponds to angular distance on the sphere

Numerical Stability: Avoids computational instability caused by large numerical ranges
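The geometric-intuition point can be made precise. For unit vectors a and b separated by angle θ, expanding the squared distance gives a one-to-one map between Euclidean distance and angle:

```latex
\|\mathbf{a}-\mathbf{b}\|^2 = \|\mathbf{a}\|^2 + \|\mathbf{b}\|^2 - 2\,\mathbf{a}\cdot\mathbf{b} = 2 - 2\cos\theta
\quad\Longrightarrow\quad
\|\mathbf{a}-\mathbf{b}\| = \sqrt{2 - 2\cos\theta} = 2\sin(\theta/2), \qquad 0 \le \theta \le \pi
```

Because 2 sin(θ/2) increases monotonically on [0, π], a distance threshold ||a - b|| < r is equivalent to the cosine threshold cos θ > 1 - r²/2.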

Special Properties of High-Dimensional Geometry

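In high-dimensional spaces (e.g., a 4096-dimensional vocabulary space), spherical distributions have counterintuitive properties. For example, two independent, uniformly random unit vectors are almost always nearly orthogonal, so the Euclidean distance between them concentrates tightly around √2 ≈ 1.414.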

These special properties make spherical distance calculations an ideal metric for computational challenges.
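A short Monte Carlo sketch can check that distances between random unit vectors cluster ever more tightly around √2 ≈ 1.414 as the dimension grows. This is an illustration of the geometric fact, not project code; the dimensions and trial counts are arbitrary choices.

```python
import math
import random
from typing import List, Tuple


def random_unit_vector(rng: random.Random, dim: int) -> List[float]:
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def distance_stats(dim: int, trials: int, seed: int = 0) -> Tuple[float, float]:
    """Mean and standard deviation of ||a - b|| for independent random unit vectors."""
    rng = random.Random(seed)
    ds = []
    for _ in range(trials):
        a = random_unit_vector(rng, dim)
        b = random_unit_vector(rng, dim)
        ds.append(math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b))))
    mean = sum(ds) / trials
    std = math.sqrt(sum((d - mean) ** 2 for d in ds) / trials)
    return mean, std


if __name__ == "__main__":
    for dim in (3, 64, 4096):
        mean, std = distance_stats(dim, trials=100)
        print(f"dim={dim:5d}  mean={mean:.4f}  std={std:.4f}")
```

In three dimensions distances spread widely, while at thousands of dimensions the spread shrinks roughly like 1/√(2·dim). This is why a threshold slightly below √2 separates "better than random" results from typical ones.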

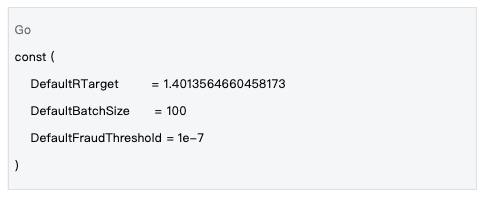

4.3 r_target Estimation and PoC Phase Initialization

Concept and Calculation of r_target

r_target is a key difficulty parameter that defines the distance threshold for a "successful" computation result. Results whose distance to the target vector is less than r_target are considered valid proof of work.

Data Source: decentralized-api/mlnodeclient/poc.go#L12-L14

In Gonka PoW 2.0, the default value of r_target is 1.4013564660458173, determined through extensive experimentation and statistical analysis to balance computational difficulty against network efficiency. Although the system includes a dynamic adjustment mechanism, the value generally stays close to this default.
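The default r_target sits just below √2 ≈ 1.41421, the typical distance between random unit vectors in high dimensions. The minimal sketch below converts r_target into an equivalent cosine threshold and a rough per-nonce success probability. It makes two assumptions: a normalized model output behaves like a uniformly random direction (an idealization), and the dimension is 4096, as in the earlier example. The function names are hypothetical. For a uniform random unit vector, cos θ has mean 0 and variance exactly 1/dim and is approximately normal for large dim.

```python
import math

R_TARGET = 1.4013564660458173  # default value quoted in the text


def cosine_threshold(r_target: float) -> float:
    # For unit vectors ||a - b||^2 = 2 - 2 cos(theta), so
    # ||a - b|| < r_target  <=>  cos(theta) > 1 - r_target^2 / 2.
    return 1.0 - r_target ** 2 / 2.0


def approx_success_probability(r_target: float, dim: int) -> float:
    """Approximate P(||u - target|| < r_target) for a uniform random unit vector u.

    Uses the large-dim approximation cos(theta) ~ N(0, 1/dim).
    """
    t = cosine_threshold(r_target)
    return 0.5 * math.erfc(t * math.sqrt(dim) / math.sqrt(2.0))


def is_valid_proof(distance: float, r_target: float = R_TARGET) -> bool:
    # A result counts only if its distance is strictly below r_target.
    return distance < r_target


if __name__ == "__main__":
    print(cosine_threshold(R_TARGET))
    print(approx_success_probability(R_TARGET, 4096))
```

Small changes to r_target move the cosine threshold, and hence the success probability, noticeably, which is consistent with treating it as the network's difficulty knob.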

Initialization of r_target in the PoC Phase

At the start of each PoC (Proof of Computation) phase, the system needs to:

Assess Network Computing Power: Estimate the current total computing power of the network based on historical data

Adjust Difficulty Parameters: Set an appropriate r_target value to maintain stable block times

Synchronize Network Parameters: Ensure all nodes use the same r_target value

Technical Implementation:

• The r_target value is synchronized to all nodes through blockchain state

• Each PoC phase may use different r_target values

• An adaptive adjustment algorithm modifies difficulty based on the success rate of the previous phase
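The article does not show the adjustment algorithm itself, so the following is a purely hypothetical controller that only illustrates the stated idea of adjusting difficulty from the previous phase's success rate. The multiplicative step, the desired-rate parameter, and the clamp to [0, 2] (the range of distances between unit vectors) are all assumptions.

```python
def adjust_r_target(r_prev: float, success_rate: float, desired_rate: float,
                    step: float = 0.001) -> float:
    """Hypothetical rule: nudge r_target toward a desired success rate.

    Too many successes -> shrink the radius (harder); too few -> grow it.
    """
    if success_rate > desired_rate:
        r_new = r_prev * (1.0 - step)
    elif success_rate < desired_rate:
        r_new = r_prev * (1.0 + step)
    else:
        r_new = r_prev
    # Distances between unit vectors lie in [0, 2].
    return min(max(r_new, 0.0), 2.0)
```

Whatever the real rule is, it has to be a deterministic function of on-chain data so that every node arrives at the same r_target for the next phase.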

This initialization mechanism ensures the stable operation and fairness of the network.

5. Engineering Assurance of Reproducibility

After understanding the core algorithms, we need to focus on how to ensure reproducibility in engineering implementation. This is key to ensuring the stable operation of the system in practical deployment.

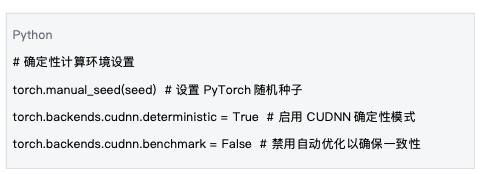

5.1 Deterministic Computing Environment

Data Source: Based on the environment settings in mlnode/packages/pow/src/pow/compute/model_init.py

Setting up a deterministic computing environment is fundamental to ensuring reproducibility.
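The specific settings in model_init.py are not reproduced here. If the MLNode uses PyTorch (an assumption), such setup typically includes calls like `torch.manual_seed` and `torch.use_deterministic_algorithms(True)`. One framework-independent principle can be shown in standard-library Python: derive every random stream from a private, explicitly seeded generator rather than shared global state, which unrelated code can advance. The function name `run_task` is hypothetical.

```python
import hashlib
import random


def run_task(seed_str: str, n: int) -> list:
    # Use a private generator; never rely on the module-level random state,
    # which any other code in the process could advance.
    digest = hashlib.sha256(seed_str.encode("utf-8")).digest()
    rng = random.Random(int.from_bytes(digest, "big"))
    return [rng.random() for _ in range(n)]


if __name__ == "__main__":
    first = run_task("epoch7_task", 5)
    random.random()  # unrelated code touching the global RNG
    second = run_task("epoch7_task", 5)
    print(first == second)  # True
```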

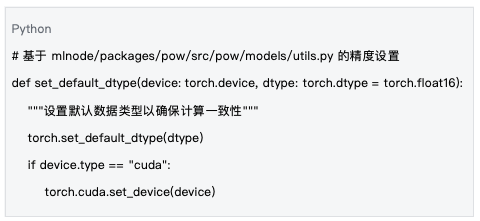

5.2 Numerical Precision Management

Numerical precision management ensures the consistency of computation results across different hardware platforms.
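The underlying difficulty is that floating-point addition is not associative. When two devices reduce the same numbers in a different order, as parallel hardware routinely does, the results can differ in the last bits. The sketch below demonstrates the effect and shows one common mitigation, comparing against a tolerance; the `verify_distance` helper and its `rel_tol` value are hypothetical, not the project's verification rule.

```python
import math


def verify_distance(claimed: float, recomputed: float, rel_tol: float = 1e-6) -> bool:
    """Hypothetical verifier check: accept small floating-point drift."""
    return math.isclose(claimed, recomputed, rel_tol=rel_tol)


if __name__ == "__main__":
    # Grouping changes the result of floating-point addition.
    left = (0.1 + 0.2) + 0.3
    right = 0.1 + (0.2 + 0.3)
    print(left, right, left == right)  # 0.6000000000000001 0.6 False

    # math.fsum returns the correctly rounded sum regardless of order.
    values = [0.1, 0.2, 0.3]
    print(math.fsum(values) == math.fsum(reversed(values)))  # True
```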

5.3 Cross-Platform Compatibility

The system design considers compatibility across different hardware platforms:

- CPU vs GPU: Supports producing the same computation results on both CPU and GPU

- Different GPU Models: Ensures consistency through standardized numerical precision

- Operating System Differences: Utilizes standard mathematical libraries and algorithms

Cross-platform compatibility ensures the stable operation of the system in various deployment environments.

6. System Performance and Scalability

Beyond reproducibility, the system also needs good performance and scalability, which are key to running the network efficiently.

6.1 Parallelization Strategy

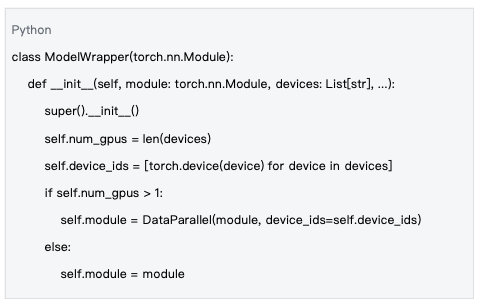

Data Source: mlnode/packages/pow/src/pow/compute/model_init.py#L26-L53

The parallelization strategy fully utilizes the computational capabilities of modern hardware.
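The cited lines in model_init.py are not reproduced here, so this sketch only illustrates one property that makes parallel PoW work easy: each nonce's result depends only on its own seed. Nonces can therefore be split across workers and merged without affecting the outcome. The `score_nonce` stand-in, the strided partition, and the use of threads in place of GPUs are all assumptions.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def score_nonce(seed_prefix: str, nonce: int) -> float:
    # Hypothetical stand-in for the real per-nonce computation: maps the
    # nonce's seed string to a float in [0, 1).
    digest = hashlib.sha256(f"{seed_prefix}_nonce{nonce}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") / 2**64


def worker(seed_prefix: str, worker_id: int, num_workers: int,
           limit: int) -> Dict[int, float]:
    # Strided partition: worker k handles nonces k, k + W, k + 2W, ...
    return {n: score_nonce(seed_prefix, n)
            for n in range(worker_id, limit, num_workers)}


def parallel_scores(seed_prefix: str, num_workers: int, limit: int) -> List[float]:
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        futures = [pool.submit(worker, seed_prefix, k, num_workers, limit)
                   for k in range(num_workers)]
        merged: Dict[int, float] = {}
        for f in futures:
            merged.update(f.result())
    # Ordering by nonce makes the merged result independent of scheduling.
    return [merged[n] for n in range(limit)]
```

In CPython, threads do not speed up pure-Python CPU work because of the global interpreter lock; in practice each worker would be a separate GPU or process, but the partition-and-merge logic stays the same.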

6.2 Memory Optimization

The system optimizes memory usage through various strategies:

- Batch Processing Optimization: Automatically adjusts batch sizes to maximize GPU utilization

- Precision Selection: Uses float16 to reduce memory usage

- Gradient Management: Disables gradient computation in inference mode

Memory optimization ensures the efficient operation of the system in resource-constrained environments.
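Automatic batch-size adjustment is often implemented as a simple back-off loop: try a large batch and halve it on an out-of-memory error. The helper below is a hypothetical sketch of that pattern, not the project's code. It uses Python's `MemoryError` as the signal; a PyTorch-based node would catch the framework's CUDA out-of-memory exception instead.

```python
from typing import Callable


def find_max_batch_size(try_batch: Callable[[int], None], start: int = 1024,
                        minimum: int = 1) -> int:
    """Hypothetical helper: halve the batch size until one batch fits in memory.

    try_batch(n) should run one batch of size n and raise MemoryError on OOM.
    """
    size = start
    while size >= minimum:
        try:
            try_batch(size)
            return size
        except MemoryError:
            size //= 2
    raise RuntimeError("even the minimum batch size does not fit in memory")
```

Because batching only changes how many nonces are processed at once, not which seed each nonce uses, the chosen batch size does not affect reproducibility.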

Conclusion: Engineering Value of Reproducibility Design

After an in-depth analysis of the reproducibility design of PoW 2.0, we can summarize its technical achievements and engineering value.

Core Technical Achievements

Multi-Level Seed Management: A complete seed system from network-level to task-level, ensuring a balance between determinism and unpredictability in computations

Systematic Randomization of LLM Components: A unified randomization framework for model weights, input vectors, and output permutations

Engineering Application of High-Dimensional Geometry: Designing fair computational challenges using spherical geometric properties

Cross-Platform Reproducibility: Ensuring consistency across different hardware platforms through standardized algorithms and precision control

These technical achievements collectively form the core of the PoW 2.0 reproducibility design.

Innovative Value of System Design

Gonka PoW 2.0 successfully shifts computational resources from meaningless hash operations to valuable AI computations while maintaining blockchain security. Its reproducibility design not only ensures the fairness and security of the system but also provides a feasible technical paradigm for future "meaningful mining" models.

Technical Impact:

• Provides a verifiable execution framework for distributed AI computation

• Demonstrates the compatibility of complex AI tasks with blockchain consensus

• Establishes new design standards for proof of work

Through a carefully designed seed system and deterministic algorithms, Gonka PoW 2.0 achieves a fundamental shift from traditional "wasteful security" to "value-based security," paving the way for the sustainable development of blockchain technology.

Note: This article is based on the actual code implementation of the Gonka project, and all code examples and technical descriptions are derived from the project's official code repository.

About Gonka.ai

Gonka is a decentralized network aimed at providing efficient AI computing power, designed to maximize the utilization of global GPU resources to complete meaningful AI workloads. By eliminating centralized gatekeepers, Gonka offers developers and researchers permissionless access to computing resources while rewarding all participants with its native token GNK.

Gonka is incubated by the American AI developer Product Science Inc. The company was founded by seasoned Web 2 professionals, including the Liberman siblings, formerly core product directors at Snap Inc., and raised $18 million in 2023 from investors including OpenAI investor Coatue Management, Solana investor Slow Ventures, K5, Insight, and Benchmark partners. Early contributors to the project include well-known companies in the Web 2-Web 3 space such as 6 blocks, Hard Yaka, Gcore, and Bitfury.

