The moat is shifting towards "executable signals" and "underlying data capabilities," with long-tail assets and trading data loops presenting unique opportunities for crypto-native entrepreneurs.

Author | Story @IOSG

TL;DR

Data Challenge: Competition over block times among high-performance public chains has entered the sub-second era. Surging concurrency, sharp traffic swings, and heterogeneous multi-chain demand from consumer (C-end) users have made the data side far more complex, forcing data infrastructure to shift toward real-time incremental processing and dynamic scaling. Traditional batch ETL introduces delays of minutes to hours, which cannot meet real-time trading needs. Emerging solutions such as The Graph, Nansen, and Pangea are adopting stream processing, cutting latency to near real-time tracking levels.

Paradigm Shift in Data Competition: Last cycle, data had to be "understandable"; this cycle, it has to be "profitable." Under the Bonding Curve model, a one-minute delay can multiply entry costs several times over. Tools have iterated from manual slippage settings → sniper bots → GMGN-style integrated terminals. As the ability to trade on-chain becomes commoditized, the competitive frontier is shifting to the data itself: whoever captures signals faster can help users profit.

Dimensional Expansion of Trading Data: Memecoins are essentially the financialization of attention, driven by narrative, attention, and how that attention spreads. Closing the loop between off-chain public opinion and on-chain data, through narrative tracking, summaries, and sentiment quantification, is becoming the core of trading. "Underwater data," meaning capital flows, role profiling, and smart money/KOL address labels, reveals the hidden games behind anonymous on-chain addresses. The next generation of trading terminals will fuse on-chain and off-chain signals at second-level speed to sharpen entry and risk-avoidance decisions.

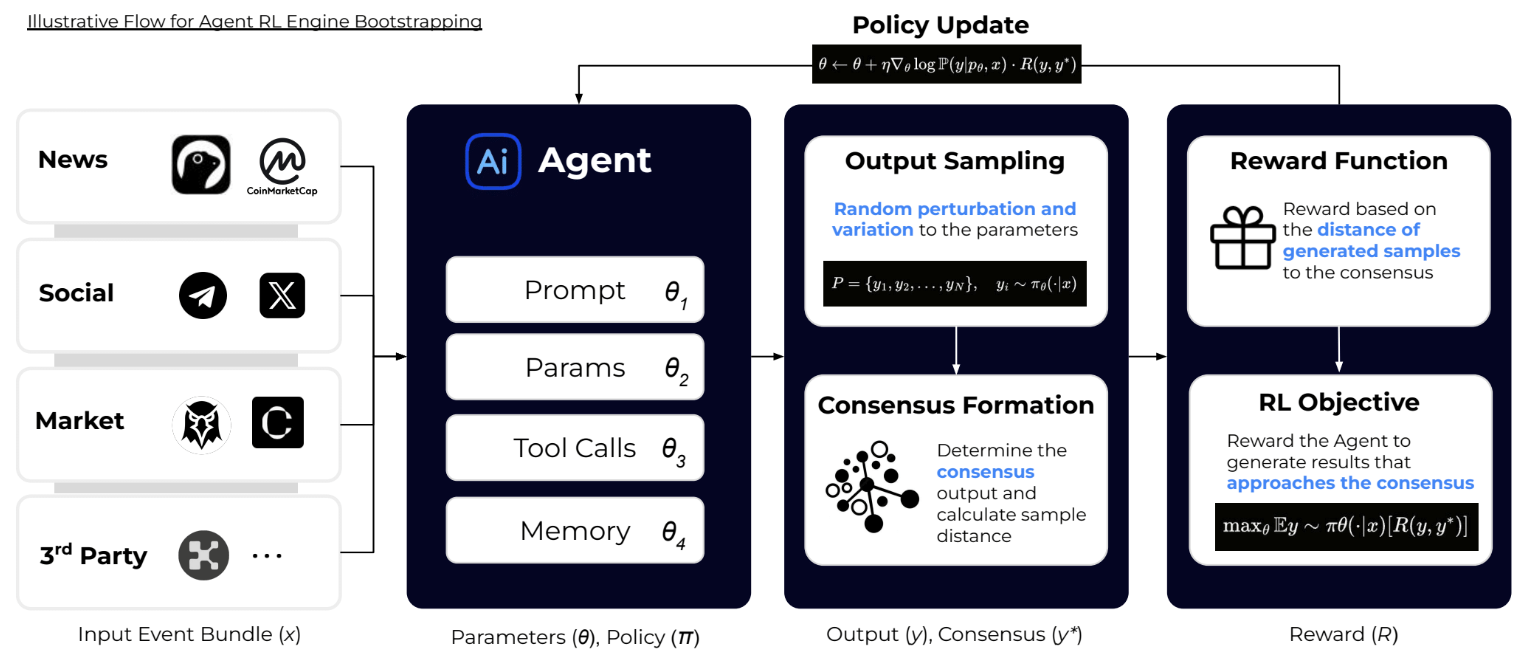

AI-Driven Executable Signals: From information to profit. The new competitive goal is signals that are fast enough, automated, and capable of generating excess returns. LLMs and multi-modal AI can automatically extract decision signals and pair them with copy trading, take-profit, and stop-loss execution. The risks: hallucinations, short signal lifespans, execution delays, and risk control. The keys are balancing speed against accuracy and using reinforcement learning and simulation backtesting to reduce errors.

Survival Choices for Data Dashboards: Lightweight data aggregation and dashboard applications lack a moat, and their room to survive is shrinking. Going downward means deepening high-performance underlying pipelines and integrating data research. Going upward means extending into the application layer and serving user scenarios directly to drive more data calls. The track is likely to split two ways: become Web3's utilities (its "water, electricity, and coal") or become the user-facing platform of a Crypto Bloomberg.

The moat is shifting towards "executable signals" and "underlying data capabilities," with long-tail assets and trading data loops presenting unique opportunities for crypto-native entrepreneurs. The opportunity window in the next 2–3 years:

Upstream Infrastructure: Web2-level processing capabilities + Web3 native demands → Web3 Databricks/AWS.

Downstream Execution Platforms: AI Agent + multi-dimensional data + seamless execution → Crypto Bloomberg Terminal.

Thanks to Hubble AI, Space & Time, OKX DEX, and other projects for their support of this research report!

Introduction: The Triple Resonance of Meme, High-Performance Public Chains, and AI

In the last cycle, on-chain trading growth relied mainly on infrastructure iteration. In the new cycle, with infrastructure maturing, super applications such as Pump.fun are becoming the crypto industry's new growth engine. Its asset issuance model, which combines a unified issuance mechanism with carefully designed liquidity mechanics, has created a trading arena (the "trenches") that is seen as fair and is full of overnight-wealth stories. Because these outsized, high-multiple returns look repeatable, they are profoundly changing users' profit expectations and trading habits. Users need not only faster entry but also the ability to acquire, analyze, and act on multi-dimensional data within a very short window, and existing data infrastructure struggles to support that density and immediacy.

This raises the bar for trading environments: lower friction, faster confirmation, and deeper liquidity. Trading venues are rapidly migrating to high-performance public chains such as Solana and Layer 2 rollups such as Base. On-chain trading data on these networks has grown more than tenfold compared with Ethereum in the previous cycle, posing far more severe performance challenges for existing data providers. With next-generation high-performance chains such as Monad and MegaETH about to launch, demand for on-chain data processing and storage will grow exponentially.

At the same time, the rapid maturation of AI is democratizing access to intelligence. GPT-5 offers PhD-level intelligence, and multi-modal models such as Gemini can readily interpret candlestick charts. With AI tools, ordinary users can now understand and act on complex trading signals. Traders are beginning to rely on AI for trading decisions, and those decisions in turn depend on multi-dimensional, high-efficiency data. As AI evolves from an "auxiliary analysis tool" into the "central hub of trading decisions," its spread further raises the requirements for real-time, interpretable, and scalable data processing.

Under the triple resonance of the meme trading frenzy, the expansion of high-performance public chains, and the commodification of AI, the on-chain ecosystem's demand for a new data infrastructure is becoming increasingly urgent.

Addressing the Data Challenge of 100,000 TPS and Millisecond Block Times

With the rise of high-performance public chains and high-performance Rollups, the scale and speed of on-chain data have entered a new phase.

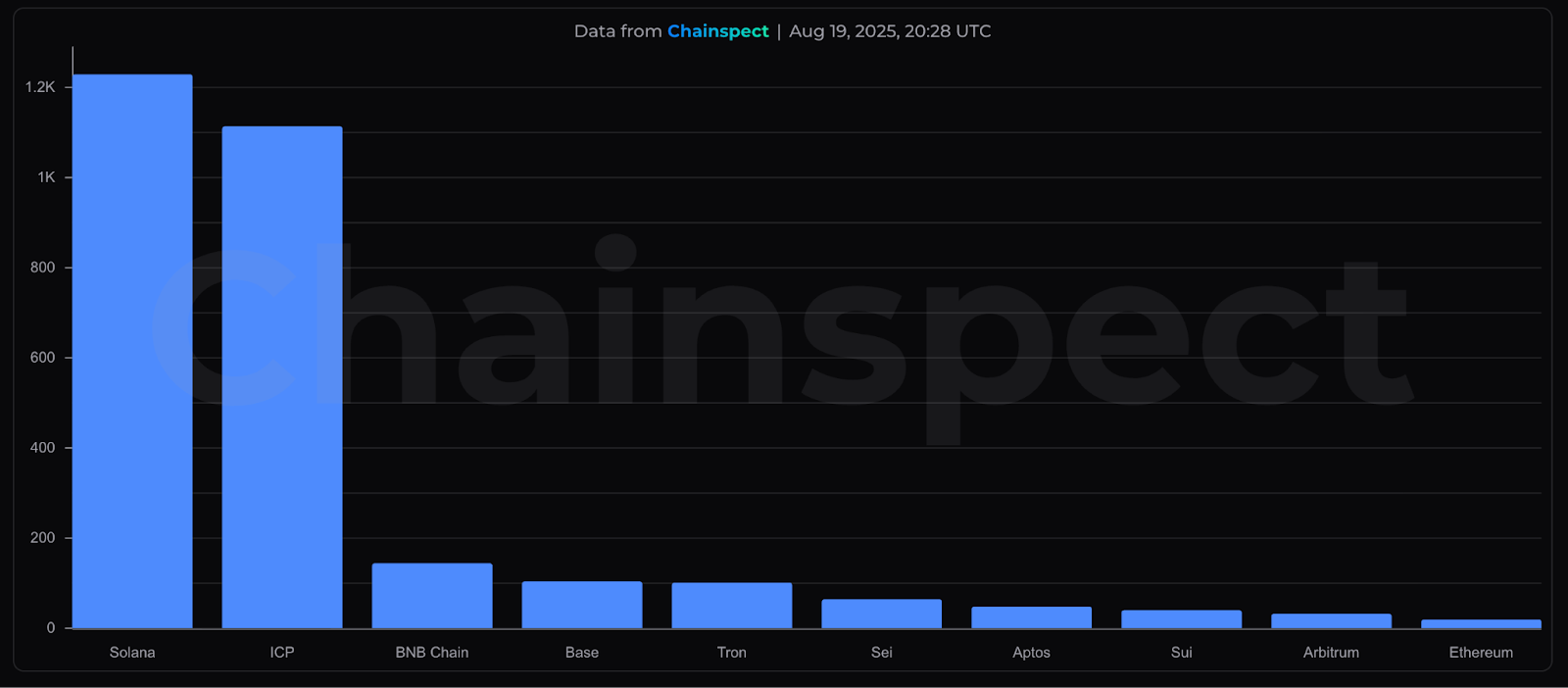

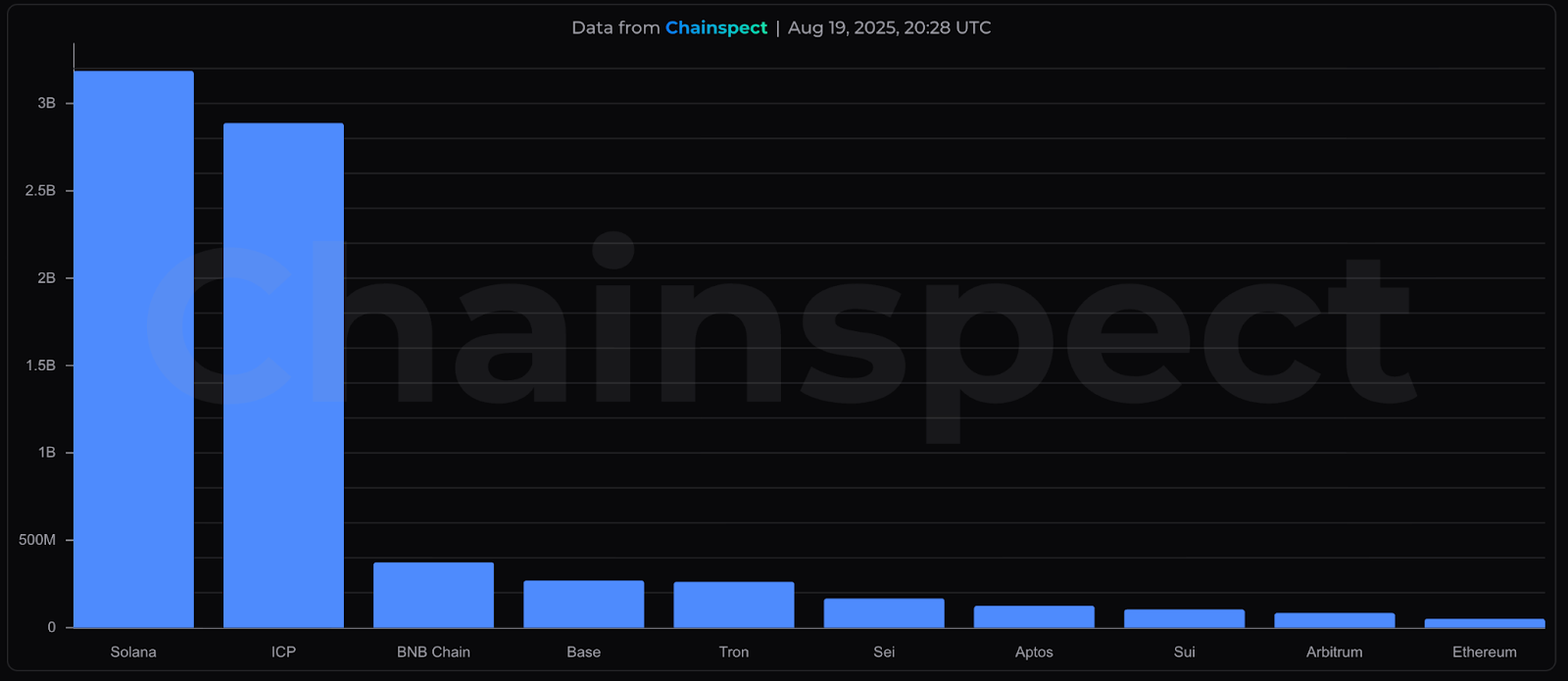

With high-concurrency, low-latency architectures now widespread, daily transaction counts easily exceed tens of millions, and raw data runs to hundreds of GB per day. Solana, for example, has averaged over 1,200 TPS over the last 30 days, with more than 100 million transactions per day; on August 17 it even set an all-time high of 107,664 TPS. Statistics show that Solana's ledger is growing at 80–95 TB per year, or roughly 220–260 GB per day.

▲ Chainspect, 30-day average TPS

▲ Chainspect, 30-day transaction volume
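The throughput and storage figures above can be sanity-checked with simple unit conversions. The sketch below assumes a sustained average rate and decimal units (1 TB = 1,000 GB); it is a back-of-envelope check, not a measurement.

```python
SECONDS_PER_DAY = 86_400
DAYS_PER_YEAR = 365

def daily_transactions(avg_tps: float) -> float:
    """Transactions per day implied by a sustained average TPS."""
    return avg_tps * SECONDS_PER_DAY

def gb_per_day(tb_per_year: float) -> float:
    """Convert annual ledger growth in decimal TB to GB per day."""
    return tb_per_year * 1_000 / DAYS_PER_YEAR

if __name__ == "__main__":
    print(f"{daily_transactions(1_200):,.0f} transactions/day")  # 103,680,000 transactions/day
    for tb in (80, 95):
        print(f"{tb} TB/year ~ {gb_per_day(tb):.0f} GB/day")     # 219, then 260
```

A 1,200 TPS average implies about 104 million transactions per day, consistent with the 100 million-plus daily figure, and 80–95 TB per year works out to roughly 219–260 GB per day.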

Beyond rising throughput, block times on emerging public chains have entered the millisecond range. BNB Chain's Maxwell upgrade cut block times to 0.8 seconds, and Base's Flashblocks compress them to 200 milliseconds. In the second half of this year, Solana plans to replace PoH with Alpenglow, reducing block confirmation times to 150 milliseconds, while the MegaETH mainnet targets real-time block times of 10 milliseconds. These consensus and engineering breakthroughs greatly improve transaction immediacy but place unprecedented demands on block data synchronization and decoding.

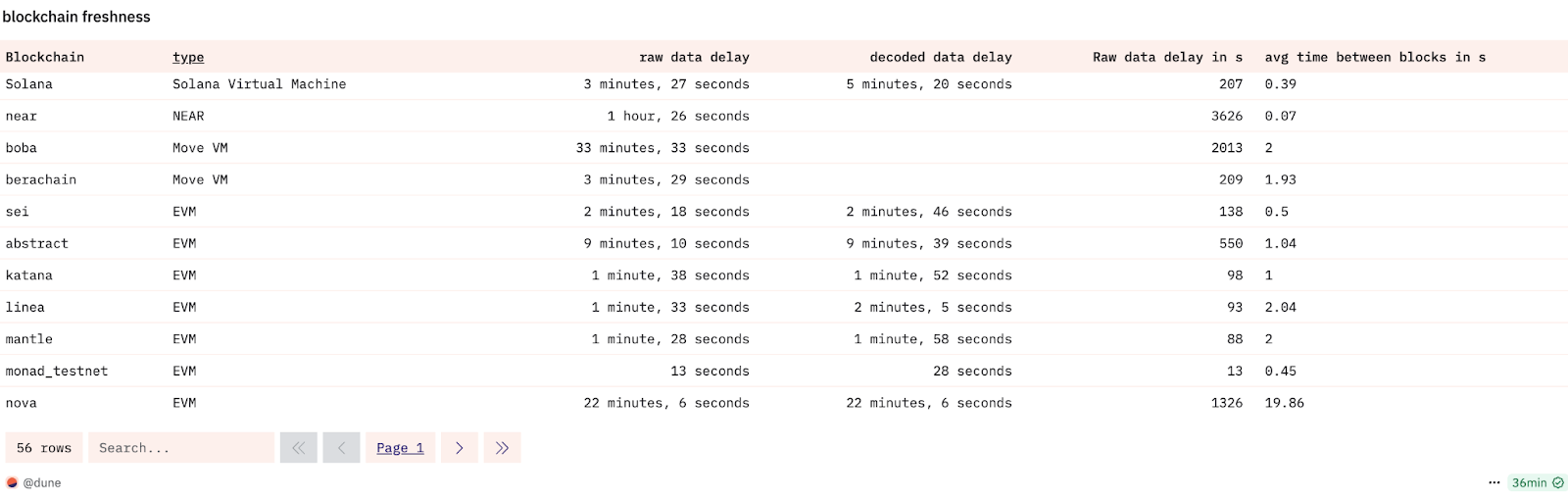

However, most downstream data infrastructure still relies on batch ETL pipelines, which inevitably introduce delay. Dune, for instance, reports that contract interaction event data on Solana typically lags by about 5 minutes, and protocol-level aggregated data can take up to 1 hour. A transaction confirmed on-chain in about 400 milliseconds therefore becomes visible in analytics tools only after a delay roughly 750 to 9,000 times longer, which is nearly unacceptable for real-time trading applications.

▲ Dune, Blockchain Freshness
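The lag multiples follow directly from the quoted figures. The second helper is a deliberately simplified model of fixed-interval batch ETL (an assumption for illustration, not Dune's actual pipeline): an event that lands just before a batch cutoff waits only for processing, while one that lands just after waits a full interval more.

```python
CONFIRMATION_MS = 400  # approximate on-chain confirmation time quoted in the text

def lag_multiple(visible_after_ms: int, confirmed_in_ms: int = CONFIRMATION_MS) -> float:
    """How many times longer data takes to show up in analytics than to confirm on-chain."""
    return visible_after_ms / confirmed_in_ms

def batch_visibility_latency(interval_s: float, processing_s: float) -> tuple:
    """(best, worst) visibility latency for an event under a fixed-interval batch job.

    Best case: the event lands just before a cutoff and waits only for processing.
    Worst case: it lands just after a cutoff and waits a full interval plus processing.
    """
    return processing_s, interval_s + processing_s

if __name__ == "__main__":
    print(lag_multiple(5 * 60 * 1000))   # 5-minute event lag -> 750.0
    print(lag_multiple(60 * 60 * 1000))  # 1-hour aggregate lag -> 9000.0
    print(batch_visibility_latency(interval_s=300, processing_s=30))  # (30, 330)
```

Shrinking the batch interval lowers the worst case but never removes it; a streaming pipeline drops the interval term entirely, leaving only processing time.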

To address these supply-side challenges, some platforms have moved to streaming, real-time architectures. The Graph uses Substreams and Firehose to cut data latency to near real-time. Nansen has improved the performance of Smart Alerts and real-time dashboards several times over by adopting real-time data technologies such as ClickHouse. Pangea pools computing, storage, and bandwidth contributed by community nodes to deliver real-time streaming data with under 100 milliseconds of latency to business (B-end) clients such as market makers, quantitative traders, and central limit order books (CLOBs).

▲ Chainspect
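Streaming architectures avoid re-running a whole query for each batch by updating state incrementally as each block arrives. The sketch below is a generic illustration of that idea, not how The Graph, Nansen, or Pangea implement it: it keeps per-token volume over a sliding window of slots and assumes swaps arrive in slot order. Token identifiers are placeholders.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Deque, Dict

@dataclass(frozen=True)
class Swap:
    slot: int          # slot/block the swap landed in
    token: str         # token identifier (placeholder values below)
    volume_usd: float

class RollingVolume:
    """Per-token volume over the most recent `window_slots` slots, updated per event."""

    def __init__(self, window_slots: int):
        self.window_slots = window_slots
        self.events: Deque[Swap] = deque()
        self.totals: Dict[str, float] = defaultdict(float)

    def ingest(self, swap: Swap) -> None:
        # Amortized O(1): each swap is appended once and evicted at most once.
        self.events.append(swap)
        self.totals[swap.token] += swap.volume_usd
        cutoff = swap.slot - self.window_slots
        while self.events and self.events[0].slot <= cutoff:
            old = self.events.popleft()
            self.totals[old.token] -= old.volume_usd

    def volume(self, token: str) -> float:
        return self.totals.get(token, 0.0)

if __name__ == "__main__":
    rv = RollingVolume(window_slots=3)
    for s in (Swap(8, "TOKEN_A", 100.0), Swap(9, "TOKEN_A", 50.0), Swap(11, "TOKEN_B", 10.0)):
        rv.ingest(s)
    print(rv.volume("TOKEN_A"), rv.volume("TOKEN_B"))  # 50.0 10.0
```

When slot 11 arrives, the slot-8 swap falls out of the three-slot window and is subtracted, so the query answer is always current without a rescan.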

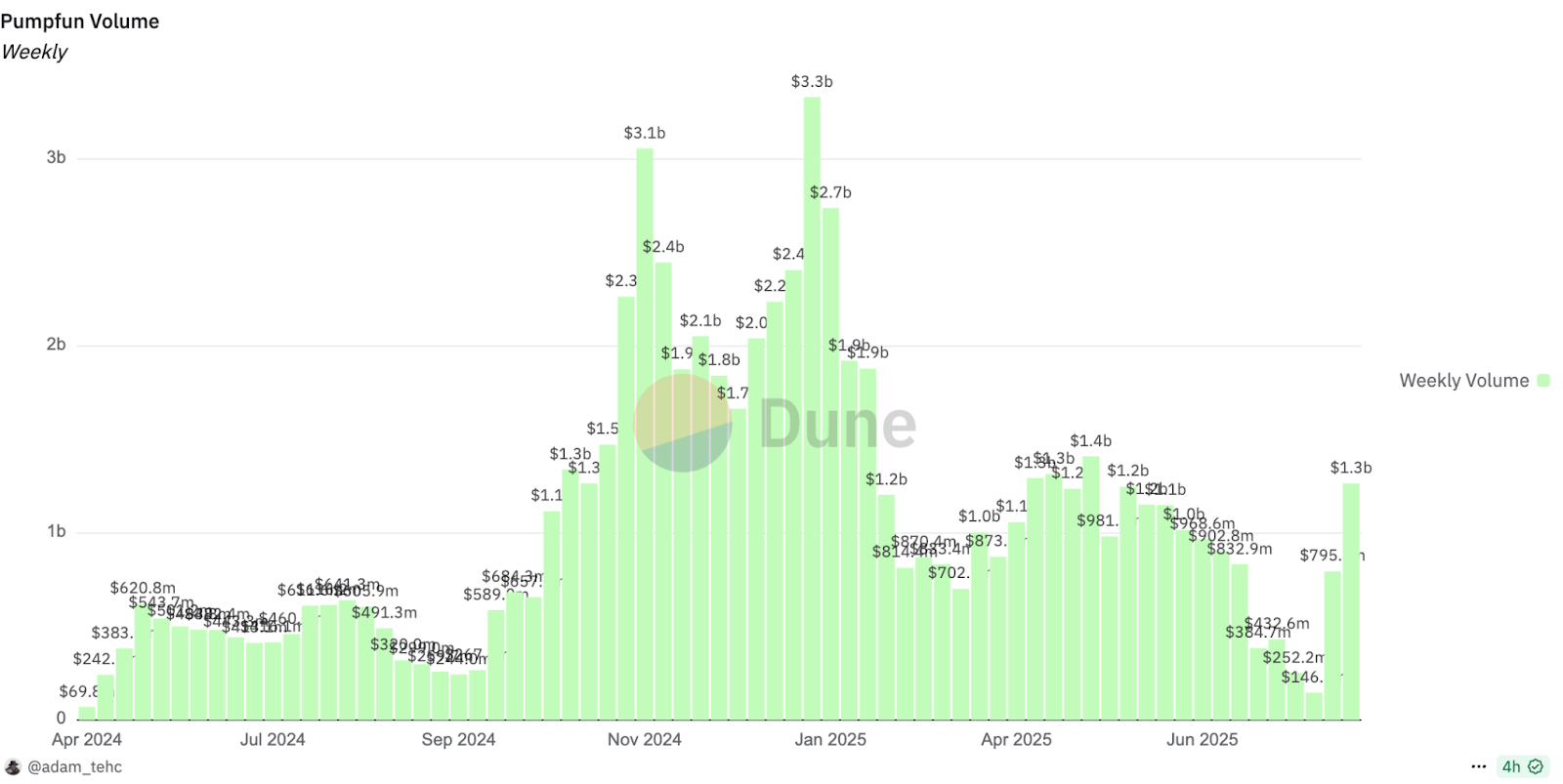

Beyond sheer volume, on-chain trading traffic is highly uneven: Pump.fun's weekly trading volume varied by a factor of nearly 30 over the past year. In 2024, the meme trading platform GMGN suffered 6 server "crashes" within 4 days, forcing it to migrate its underlying database from AWS Aurora to TiDB, an open-source distributed SQL database. After the migration, horizontal scalability and compute elasticity improved significantly, business agility rose by about 30%, and pressure during peak trading periods eased.

▲ Dune, Pumpfun Weekly Volume

▲ Odaily, TiDB's Web3 Service Case
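Absorbing a roughly 30x swing in traffic is largely a capacity-planning problem. The sketch below sizes a horizontally scaled worker pool for a given event rate; the per-replica capacity, the 60% target utilization, and the replica bounds are hypothetical parameters, and this is a generic rule of thumb rather than GMGN's or TiDB's actual scaling mechanism.

```python
def replicas_for_load(events_per_s: int, capacity_per_replica: int,
                      target_util_pct: int = 60, min_replicas: int = 2,
                      max_replicas: int = 500) -> int:
    """Replicas needed so each one runs at or below the target utilization.

    Integer ceiling division avoids floating-point rounding surprises.
    """
    usable_per_replica = capacity_per_replica * target_util_pct  # scaled by 100
    needed = (events_per_s * 100 + usable_per_replica - 1) // usable_per_replica
    return max(min_replicas, min(max_replicas, needed))

if __name__ == "__main__":
    baseline = 3_000  # hypothetical events/s during a quiet week
    for load in (baseline, baseline * 30):
        print(load, replicas_for_load(load, capacity_per_replica=5_000))
```

Running below full utilization leaves headroom for bursts while new replicas start, and the min/max clamps keep a floor for availability and a ceiling for cost.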

The multi-chain ecosystem adds further complexity. Because log formats, event structures, and transaction fields differ across public chains, each new chain requires custom parsing logic, which heavily tests the flexibility and scalability of data infrastructure. Some data providers have therefore adopted a "customer-first" strategy: they prioritize integrating whichever chains have active trading, balancing flexibility against scalability.
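One common way to contain per-chain parsing cost is an adapter registry: each chain gets its own parser, and everything downstream consumes one unified record. The sketch below assumes hypothetical raw field names; they are illustrative, not any chain's or indexer's real schema.

```python
from dataclasses import dataclass
from typing import Callable, Dict

@dataclass(frozen=True)
class UnifiedSwap:
    """Chain-agnostic record that downstream analytics consume."""
    chain: str
    tx_id: str
    trader: str
    token: str
    amount: float

# Hypothetical raw shapes: field names are illustrative only.
def parse_evm_log(raw: dict) -> UnifiedSwap:
    # EVM-style token amounts are integers in base units; scale by decimals.
    return UnifiedSwap("evm", raw["transactionHash"], raw["from"],
                       raw["tokenAddress"], raw["amount"] / 10 ** raw["decimals"])

def parse_solana_ix(raw: dict) -> UnifiedSwap:
    return UnifiedSwap("solana", raw["signature"], raw["signer"],
                       raw["mint"], raw["uiAmount"])

# Supporting a new chain means registering one more parser here.
PARSERS: Dict[str, Callable[[dict], UnifiedSwap]] = {
    "evm": parse_evm_log,
    "solana": parse_solana_ix,
}

def normalize(chain: str, raw: dict) -> UnifiedSwap:
    parser = PARSERS.get(chain)
    if parser is None:
        raise ValueError(f"no parser registered for chain {chain!r}")
    return parser(raw)

if __name__ == "__main__":
    print(normalize("solana", {"signature": "sig1", "signer": "wallet1",
                               "mint": "MINT1", "uiAmount": 2.5}))
```

Under a customer-first strategy, the registry grows only as fast as trading activity justifies, while queries and dashboards stay untouched.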

As high-performance chains become the norm, data processing that stays at the fixed-interval batch ETL stage will suffer from accumulating latency, decoding bottlenecks, and query lag, and will fail to meet demand for real-time, fine-grained, and dynamic data consumption. On-chain data infrastructure must therefore evolve toward streaming incremental processing and real-time computing architectures, and add load-balancing mechanisms to absorb the concurrency spikes that come with periodic trading peaks in crypto. This is not only a natural extension of the technical path but also key to keeping real-time queries stable, and it will be the true watershed in competition among next-generation on-chain data platforms.

Speed is Wealth: The Paradigm Shift in On-Chain Data Competition

The core proposition of on-chain data has shifted from "visualization" to "execution." In the previous cycle, Dune was the standard tool for on-chain analysis. It met researchers' and investors' need for "understandable" data, letting them piece together on-chain narratives with SQL-driven charts.

GameFi and DeFi players relied on Dune to track capital inflows and outflows, calculate yields, and exit in time before market turning points.

NFT players used Dune to analyze transaction volume trends, whale holdings, and distribution characteristics to predict market heat.

In this cycle, however, meme traders are the most active consumer group. They have driven the breakout application Pump.fun to $700 million in cumulative revenue, nearly double the total revenue of OpenSea, the leading consumer application of the previous cycle.

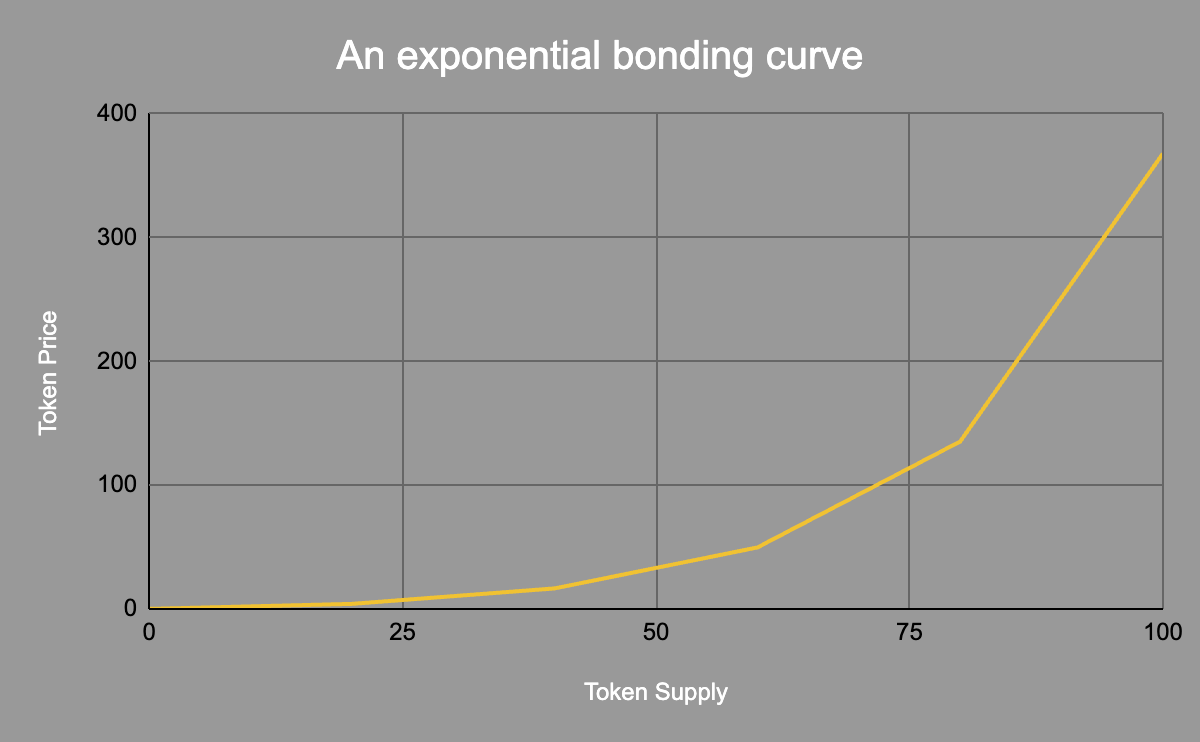

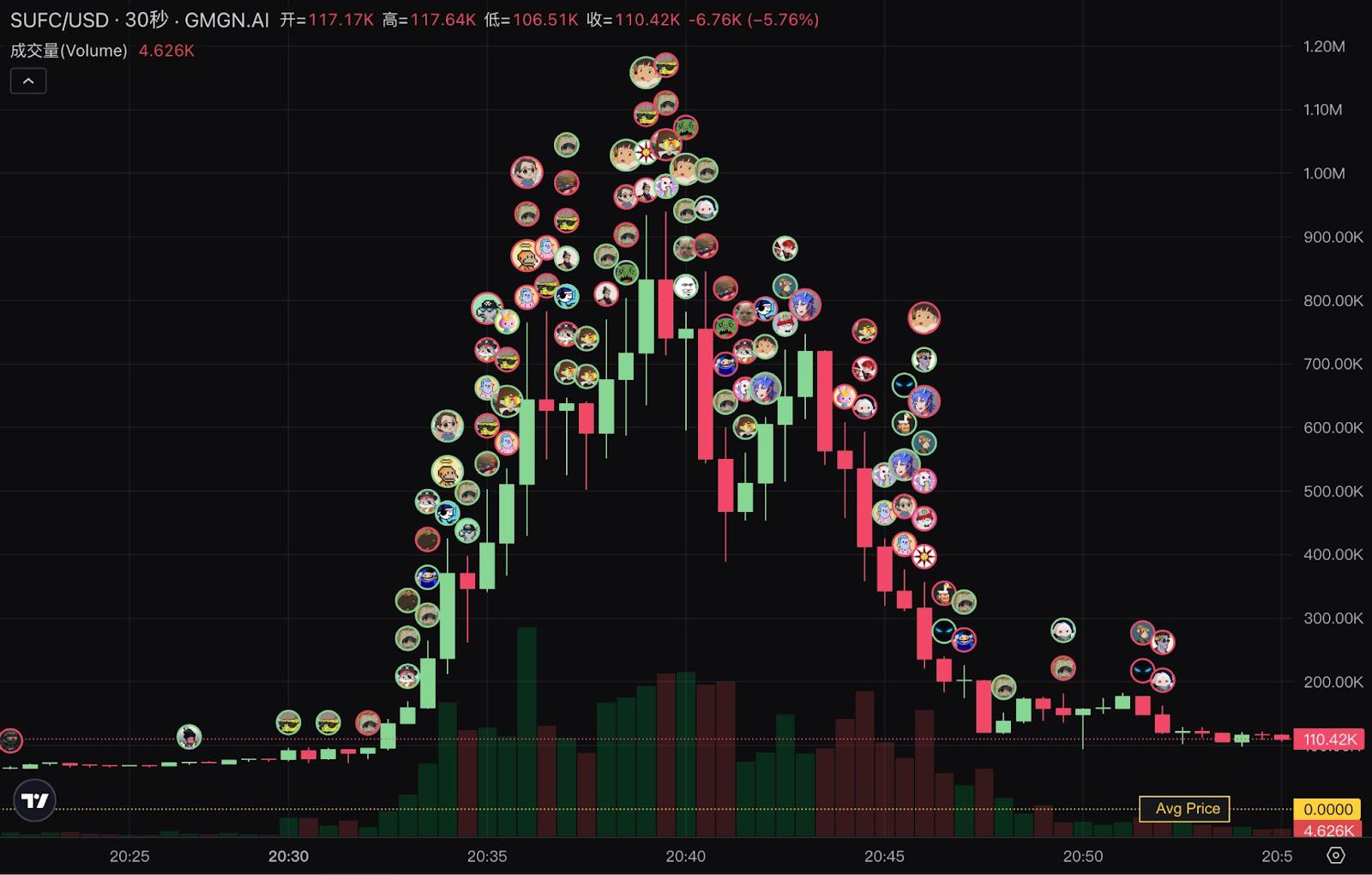

In the meme market, time sensitivity is amplified to the extreme. Speed is no longer a bonus; it is the core variable that determines profit and loss. In a primary market priced by a Bonding Curve, speed equals cost: token prices rise steeply with buying demand, so even a one-minute delay can multiply entry costs several times over. According to Multicoin research, the most profitable players often pay 10% slippage just to land in blocks three ahead of their competitors. The wealth effect and "get-rich-quick" stories push players to chase second-level candlestick charts, same-block execution engines, and one-stop decision panels, competing on how fast they gather information and place orders.

▲ Binance
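Why speed equals cost can be seen on a simple constant-product bonding curve with virtual reserves. The starting reserves below (30 virtual SOL, 1.073 billion virtual tokens) are illustrative assumptions, not confirmed Pump.fun parameters, and fees are ignored. On this curve the marginal price equals the square of the SOL reserve divided by the invariant, so it climbs quickly as buys accumulate.

```python
# Illustrative constant-product curve with virtual reserves.
# Starting reserves are assumptions for the example, not confirmed Pump.fun values.
VIRTUAL_SOL = 30.0
VIRTUAL_TOKENS = 1_073_000_000.0
K = VIRTUAL_SOL * VIRTUAL_TOKENS  # x * y stays constant across trades (fees ignored)

def tokens_out(sol_in: float, sol_already_in: float) -> float:
    """Tokens received for `sol_in` SOL after `sol_already_in` SOL has been bought."""
    sol_before = VIRTUAL_SOL + sol_already_in
    sol_after = sol_before + sol_in
    return K / sol_before - K / sol_after

def spot_price(sol_already_in: float) -> float:
    """Marginal price in SOL per token; equals sol_reserve**2 / K on this curve."""
    sol_reserve = VIRTUAL_SOL + sol_already_in
    return sol_reserve / (K / sol_reserve)

if __name__ == "__main__":
    early = tokens_out(1.0, sol_already_in=0.0)
    late = tokens_out(1.0, sol_already_in=20.0)  # others bought 20 SOL first
    print(f"early buyer gets {early / late:.2f}x more tokens for the same 1 SOL")
```

With these assumed parameters, a buyer who arrives after just 20 SOL of earlier demand receives about 2.74 times fewer tokens for the same 1 SOL, which is the "several times the entry cost" effect in miniature.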

In the era of manual trading on Uniswap, users set slippage and gas themselves and the front end showed no prices, so trading felt more like buying a lottery ticket. In the BananaGun sniper-bot era, automatic sniping and slippage tools put retail players on the same starting line as the "scientists" (script-savvy bot traders). The PepeBoost era followed, with bots pushing new pool launches in real time along with data on the earliest holders. Now we are in the GMGN era: a terminal that combines candlestick data, multi-dimensional analytics, and trade execution, and has become the "Bloomberg Terminal" of meme trading.

As trading tools continue to iterate, execution thresholds gradually dissolve, and the competitive frontier inevitably shifts towards the data itself: those who can capture signals faster and more accurately can establish trading advantages in a rapidly changing market and help users make money.

Dimensions are Advantages: The Truth Beyond Candlesticks

Memecoins are, in essence, the financialization of attention. A strong narrative can keep breaking out of its original community, aggregating attention and pushing up price and market cap. For meme traders, real-time data matters, but outsized results depend on answering three questions: What is this token's narrative? Who is paying attention? And how will that attention keep amplifying? Candlestick charts show only shadows of these factors; the real driving force lies in multi-dimensional data: off-chain public sentiment, on-chain addresses and holding structures, and precise mapping between the two.

On-Chain × Off-Chain: The Closed Loop from Attention to Transactions

Attention is captured off-chain and converted into trades on-chain, and data that closes the loop between the two is becoming the core advantage in meme trading.

Narrative Tracking and Propagation Chain Identification

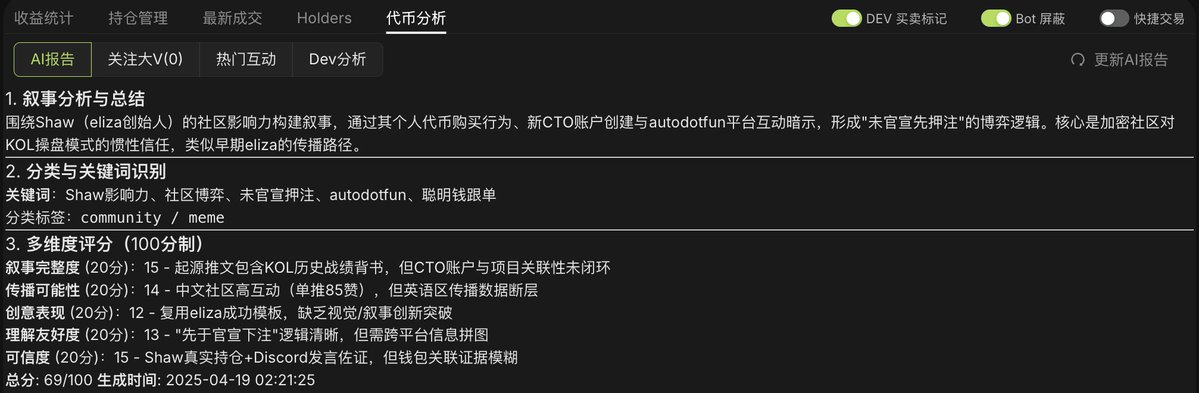

On social platforms such as Twitter, tools like XHunt help meme traders analyze which KOLs follow a project, identifying the people associated with it and its likely attention propagation chains. 6551 DEX aggregates Twitter accounts, official websites, tweet comments, listing records, KOL follows, and more into full AI reports that update in real time with public sentiment, helping traders capture narratives accurately.

Sentiment Indicator Quantification

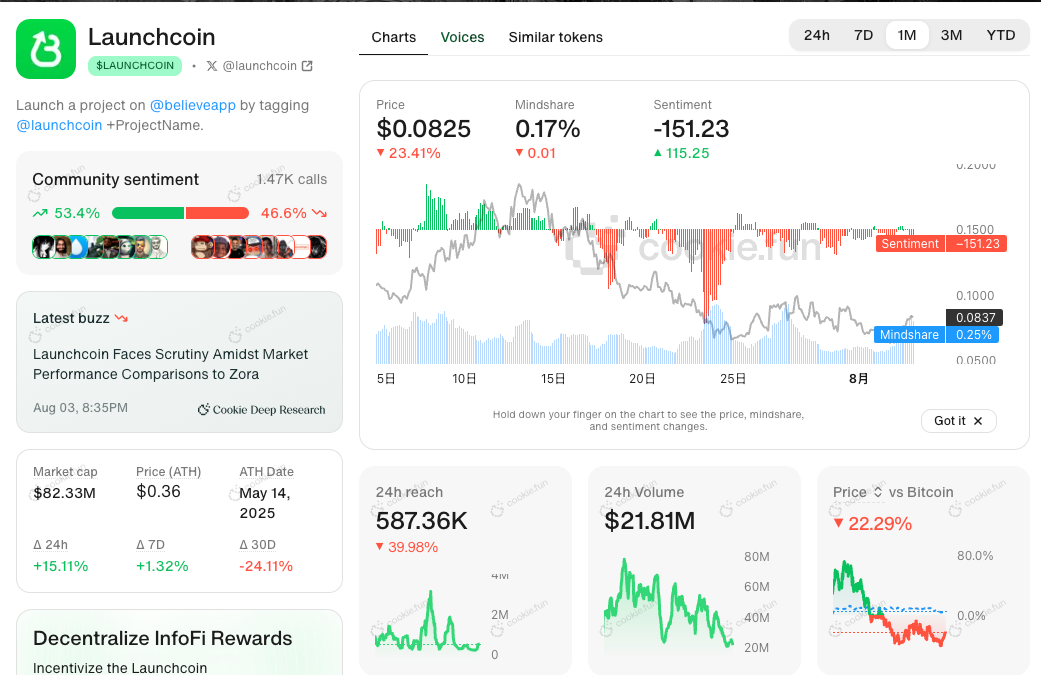

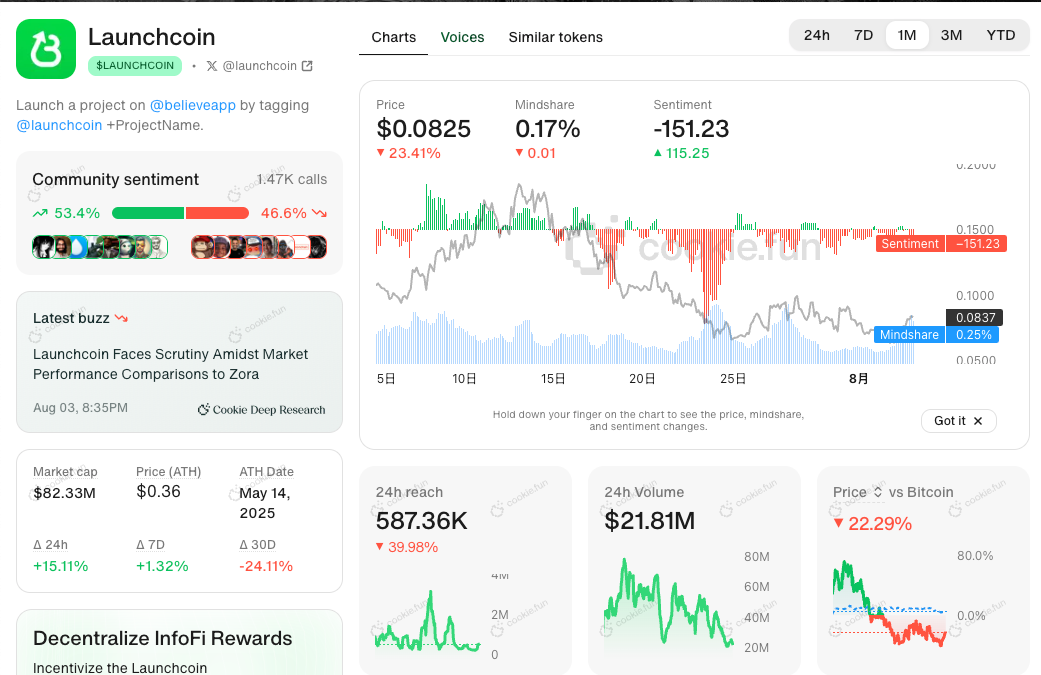

InfoFi tools such as Kaito and Cookie.fun aggregate Crypto Twitter content and run sentiment analysis on it, producing quantifiable Mindshare, Sentiment, and Influence indicators. Cookie.fun, for example, overlays these indicators directly on price charts, turning off-chain sentiment into readable "technical indicators."

▲ Cookie.fun
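The exact formulas Kaito and Cookie.fun use are not described here. The sketch below assumes one plausible definition: mindshare as an influence-weighted share of mentions, and sentiment as an influence-weighted average of per-post scores in [-1, 1] produced by some upstream classifier. Authors, tickers, and weights are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

def attention_metrics(posts: Iterable[Tuple[str, str, float]],
                      influence: Dict[str, float]) -> Dict[str, Dict[str, float]]:
    """Influence-weighted mindshare and sentiment per ticker.

    posts: (author, ticker, sentiment) with sentiment in [-1, 1].
    influence: author -> positive weight; unknown authors default to 1.0.
    """
    weight: Dict[str, float] = defaultdict(float)
    weighted_sentiment: Dict[str, float] = defaultdict(float)
    for author, ticker, sentiment in posts:
        w = influence.get(author, 1.0)
        weight[ticker] += w
        weighted_sentiment[ticker] += w * sentiment
    total = sum(weight.values())
    if total == 0:
        return {}
    return {
        t: {"mindshare": w / total, "sentiment": weighted_sentiment[t] / w if w else 0.0}
        for t, w in weight.items()
    }

if __name__ == "__main__":
    posts = [("kol", "AAA", 1.0), ("anon", "AAA", -1.0), ("anon", "BBB", 0.5)]
    print(attention_metrics(posts, influence={"kol": 3.0}))
```

Weighting by influence means one bullish post from a high-reach KOL can outweigh several anonymous bearish ones, which is the kind of series that can then be plotted against price.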

On-Chain and Off-Chain are Equally Important

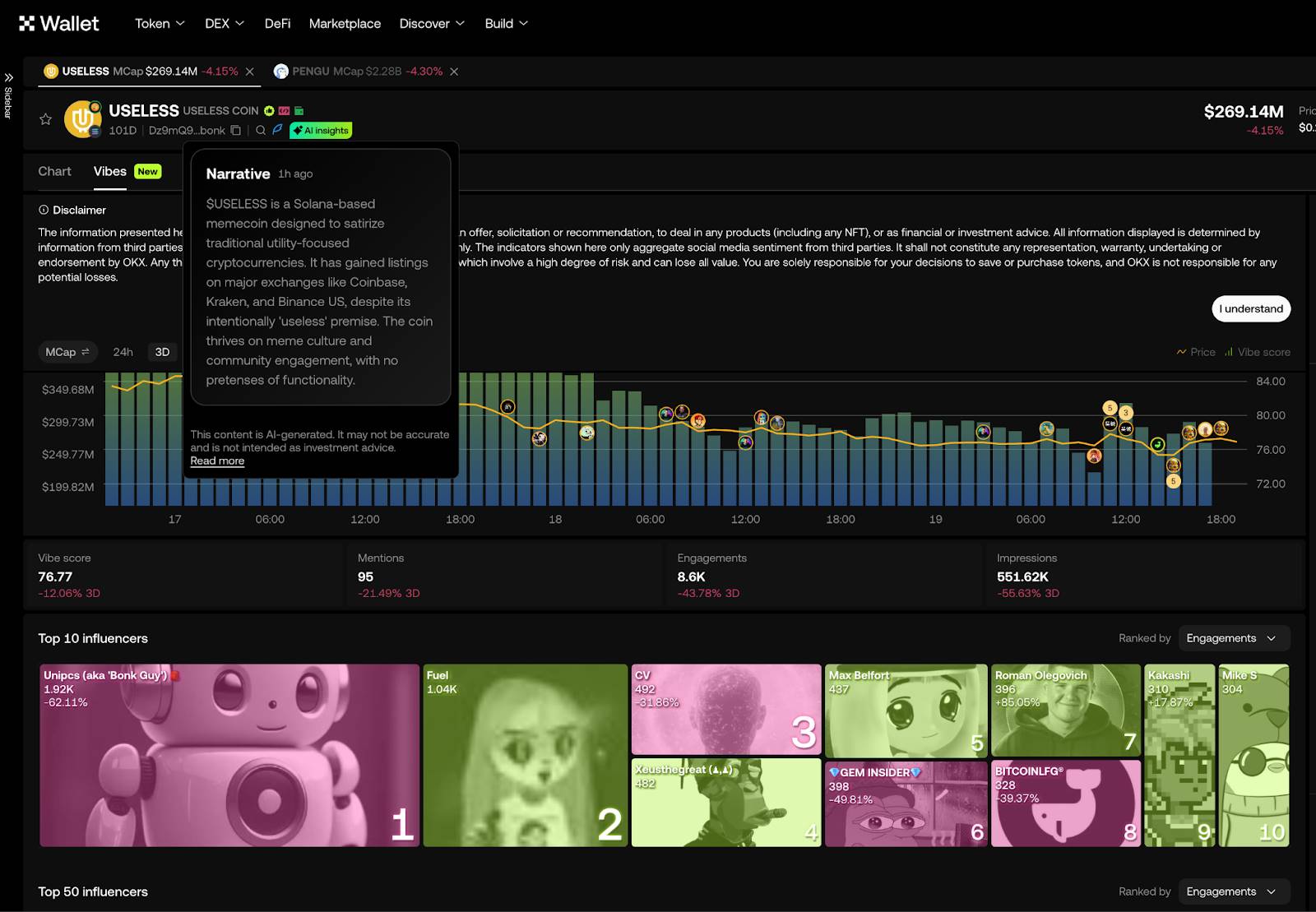

OKX DEX displays a Vibes analysis alongside market data, aggregating the timestamps of KOL shout-outs, the top associated KOLs, a Narrative Summary, and an overall score to cut the time spent digging up off-chain information. The Narrative Summary has become the AI feature users rate most highly.

Underwater Data Display: Turning "Visible Ledgers" into "Usable Alpha"

In traditional finance, order flow data is controlled by large brokers, and quantitative firms must pay hundreds of millions of dollars each year to access it to optimize trading strategies. In contrast, the trading ledgers in crypto are completely open and transparent, effectively "open-sourcing" high-priced intelligence and forming an open-pit gold mine waiting to be mined.

The value of underwater data lies in extracting invisible intent from visible transactions. One part is capital flows and role characterization: clues to whether market makers are accumulating or distributing, KOL sock-puppet addresses, concentrated versus dispersed holdings, bundled trades, and abnormal capital flows. Another is linked address profiling: labeling each address as smart money, KOL/VC, developer, phishing, wash trading, and so on, and tying those labels to off-chain identities to connect on-chain and off-chain data.

These signals are hard for ordinary users to spot but can move short-term prices significantly. By parsing address labels, holding characteristics, and bundled trades in real time, trading tools are exposing the games played "beneath the surface," helping traders avoid risks and find alpha in markets that move second by second.

GMGN, for example, layers labels for smart money, KOL/VC addresses, developer wallets, wash trading, phishing addresses, and bundled trades on top of real-time on-chain trades and token contract data. It maps on-chain addresses to social media accounts and aligns capital flows, risk signals, and price action to the second, helping users make faster entry and risk-avoidance decisions.

▲ GMGN
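Bundled-trade detection can be approximated with a simple heuristic: several wallets that share a funding source buying the same token in the same slot. The sketch below assumes a wallet-to-funder map supplied by some upstream index and a hypothetical threshold of three wallets; it is one illustrative rule, not how GMGN or any other product actually labels bundles.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

def find_bundles(buys: Iterable[Tuple[int, str, str]], funder_of: Dict[str, str],
                 min_wallets: int = 3) -> Dict[Tuple[int, str, str], List[str]]:
    """Flag same-slot buys of one token by several wallets that share a funding source.

    buys: (slot, token, wallet) tuples.
    funder_of: wallet -> address that first sent it funds (assumed upstream data).
    Returns {(slot, token, funder): sorted wallets} for groups of at least `min_wallets`.
    """
    groups: Dict[Tuple[int, str, str], Set[str]] = defaultdict(set)
    for slot, token, wallet in buys:
        funder = funder_of.get(wallet)
        if funder is not None:  # wallets with unknown funding cannot be linked
            groups[(slot, token, funder)].add(wallet)
    return {key: sorted(ws) for key, ws in groups.items() if len(ws) >= min_wallets}

if __name__ == "__main__":
    buys = [(100, "TKN", "w1"), (100, "TKN", "w2"), (100, "TKN", "w3"),
            (100, "TKN", "w4"), (101, "TKN", "w5")]
    funders = {"w1": "F", "w2": "F", "w3": "F", "w4": "G", "w5": "F"}
    print(find_bundles(buys, funders))
```

Real systems would add more signals (shared exit paths, timing, holding overlap), but even this rule shows how visible transactions reveal a single actor behind many addresses.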

AI-Driven Executable Signals: From Information to Profit

"The next round of AI is not selling tools, but selling profits." — Sequoia Capital

This judgment also holds in crypto trading. Once data is fast enough and rich enough in dimensions, competition moves to the decision stage: can multi-dimensional, complex data be turned directly into executable trading signals? The evaluation criteria come down to three: fast enough, automated, and capable of generating excess returns.

Fast Enough: As AI capabilities advance, natural-language and multi-modal LLMs will increasingly prove their worth here. They can ingest and interpret massive amounts of data, establish semantic connections across it, and automatically extract decisive conclusions. In a fast-moving on-chain market with thin liquidity, each signal has a short shelf life and limited capital capacity, so speed directly determines how much return a signal can deliver.

Automated: Humans cannot watch the market 24 hours a day, but AI can. On the Senpi platform, for example, users can place copy-trading orders with take-profit and stop-loss conditions. This requires AI to poll or monitor data continuously in the background and automatically place orders when it detects a qualifying signal.

Return Rate: Ultimately, a trading signal is only effective if it consistently delivers excess returns. AI needs not only a solid understanding of on-chain signals but also built-in risk control to maximize risk-adjusted returns in a highly volatile environment. It should, for instance, account for on-chain factors that erode returns, such as slippage and execution delay.
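The interaction of automation, delay, and slippage can be shown with a small simulation. This is a hypothetical sketch, not Senpi's order model: it follows a leader's buy after a configurable delay, applies a fixed slippage fraction on entry and exit (a simplifying assumption), and exits on take-profit or stop-loss. Fees are ignored and the price path is made up.

```python
from dataclasses import dataclass
from typing import Sequence

@dataclass
class CopyTradeResult:
    entry: float
    exit: float
    reason: str
    net_return: float

def simulate_copy_trade(prices: Sequence[float], delay_ticks: int, entry_slippage: float,
                        exit_slippage: float, take_profit: float,
                        stop_loss: float) -> CopyTradeResult:
    """Follow a leader's buy after `delay_ticks`, then exit on take-profit or stop-loss.

    prices[0] is the price when the leader bought.
    """
    if delay_ticks >= len(prices):
        raise ValueError("signal expired before the copy order could execute")
    entry = prices[delay_ticks] * (1 + entry_slippage)  # late fill, above the quote
    for p in prices[delay_ticks + 1:]:
        change = p / entry - 1
        if change >= take_profit or change <= -stop_loss:
            exit_price = p * (1 - exit_slippage)
            reason = "take_profit" if change >= take_profit else "stop_loss"
            return CopyTradeResult(entry, exit_price, reason, exit_price / entry - 1)
    exit_price = prices[-1] * (1 - exit_slippage)  # no trigger: close at the last price
    return CopyTradeResult(entry, exit_price, "end_of_data", exit_price / entry - 1)

if __name__ == "__main__":
    path = [1.0, 1.2, 1.5, 2.0, 1.8]  # hypothetical price path after the leader's buy
    for delay in (0, 2):
        r = simulate_copy_trade(path, delay, 0.10, 0.10, take_profit=0.5, stop_loss=0.3)
        print(delay, r.reason, round(r.net_return, 3))
```

On this path with 10% slippage each way, the immediate copy exits at take-profit with roughly +64%, while the same trade copied two ticks late never hits its target and ends slightly negative: the signal is identical, only the execution timing differs.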

This capability is reshaping the business logic of data platforms: from selling "data access rights" to selling "profit-driven signals." The competitive focus of the next generation of tools is no longer on data coverage but on the executability of signals—whether it can truly complete the last mile from "insight" to "execution."

Some emerging projects have begun to explore this direction. For example, Truenorth, as an AI-driven discovery engine, incorporates "decision execution rates" into the evaluation of information effectiveness, continuously optimizing output through reinforcement learning to minimize ineffective noise, helping users build directly executable information streams aimed at order placement.

▲ Truenorth

Although AI has enormous potential in generating executable signals, it also faces multiple challenges.

Hallucination: On-chain data is highly heterogeneous and noisy, so LLMs easily hallucinate or overfit when parsing natural-language queries or multi-modal signals, hurting signal accuracy and returns. For example, when several tokens share the same name, AI often fails to map a Crypto Twitter (CT) ticker to the right contract address. Similarly, many AI signal products attribute general CT discussion about AI to Sleepless AI, whose ticker is AI.
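One defensive pattern against ticker hallucination is to resolve a CT ticker only when the match is unambiguous and otherwise surface the candidates instead of guessing. The sketch below assumes a hypothetical candidate list with liquidity figures and an assumed 10x liquidity-dominance threshold; it addresses same-name tokens only and does not solve the harder problem of telling a topic ("AI") apart from a token ticker.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass(frozen=True)
class TokenCandidate:
    ticker: str
    address: str
    liquidity_usd: float

def resolve_ticker(ticker: str, candidates: List[TokenCandidate],
                   dominance: float = 10.0) -> Tuple[Optional[str], List[TokenCandidate]]:
    """Map a CT-style ticker (e.g. "$AI") to one contract only when the match is unambiguous.

    Returns (address, matches). address is None when nothing matches or when several
    contracts share the ticker and none clearly dominates by liquidity.
    """
    symbol = ticker.lstrip("$").upper()
    matches = sorted((c for c in candidates if c.ticker.upper() == symbol),
                     key=lambda c: c.liquidity_usd, reverse=True)
    if not matches:
        return None, []
    if len(matches) == 1 or matches[0].liquidity_usd >= dominance * matches[1].liquidity_usd:
        return matches[0].address, matches
    return None, matches  # ambiguous: surface the candidates instead of guessing
```

Abstaining on ambiguous tickers trades a little coverage for far fewer confidently wrong signals, which matters more when each signal may trigger a live order.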

Signal Lifespan: The trading environment changes constantly. Any delay erodes returns, so AI must complete data extraction, reasoning, and execution within a very short window. Even the simplest copy-trading strategy can turn unprofitable if it cannot keep pace with the smart money it follows.

Risk Control: In highly volatile conditions, if AI's transactions keep failing on-chain or suffer excessive slippage, it may not only miss excess returns but also wipe out the entire principal within minutes.
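A basic safeguard is a circuit breaker that stops an automated trader before losses compound. The sketch below is a generic pattern with hypothetical thresholds (three consecutive failed transactions, a 20% drawdown from peak equity, a 5% slippage cap), not any specific product's risk engine.

```python
class RiskGuard:
    """Halts automated trading after repeated failed transactions or a drawdown breach."""

    def __init__(self, starting_equity: float, max_consecutive_failures: int = 3,
                 max_drawdown: float = 0.2, max_slippage: float = 0.05):
        self.equity = starting_equity
        self.peak = starting_equity
        self.failures = 0
        self.max_consecutive_failures = max_consecutive_failures
        self.max_drawdown = max_drawdown
        self.max_slippage = max_slippage
        self.halted = False

    def approve(self, quoted_slippage: float) -> bool:
        """Allow an order only if trading is live and the quote is within the slippage cap."""
        return not self.halted and quoted_slippage <= self.max_slippage

    def record_failure(self) -> None:
        """A transaction failed or was dropped on-chain."""
        self.failures += 1
        if self.failures >= self.max_consecutive_failures:
            self.halted = True

    def record_fill(self, pnl: float) -> None:
        """A trade settled; update equity and check drawdown from the running peak."""
        self.failures = 0
        self.equity += pnl
        self.peak = max(self.peak, self.equity)
        if self.peak > 0 and (self.peak - self.equity) / self.peak >= self.max_drawdown:
            self.halted = True
```

Measuring drawdown from the running peak, rather than from starting equity, stops the bot even after a profitable run gives back too much.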

Therefore, balancing speed against accuracy, and using reinforcement learning, transfer learning, and simulation backtesting to reduce error rates, is where AI products in this field will compete.

Upward or Downward? The Survival Dilemma of Data Dashboards

As AI can directly generate executable signals and even help place orders, lightweight intermediary applications that rely solely on data aggregation face a survival crisis. Whether they assemble on-chain data into dashboards or layer execution logic on top of aggregation as trading bots, they fundamentally lack a sustainable moat. In the past, such tools held their ground through convenience or user habit (for example, users habitually checking a token's community takeover (CTO) status on Dexscreener). Now that the same data is available in many places, execution engines are increasingly commoditized, and AI can generate decision signals and trigger execution directly on that data, their competitiveness is eroding fast.

In the future, efficient on-chain execution engines will continue to mature, further lowering trading thresholds. In this trend, data providers must make a choice: either go downward, delving deeper into faster data acquisition and processing infrastructure; or go upward, extending to the application layer and directly controlling user scenarios and consumption traffic. The model that is stuck in the middle, only doing data aggregation and lightweight packaging, will continue to be squeezed for survival space.

Going downward means building an infrastructure moat. While developing trading products, Hubble AI realized that Telegram (TG) bots alone could not provide a lasting advantage, so it shifted upstream to data processing, aiming to become the "Crypto Databricks." After optimizing its Solana data processing speed, Hubble AI is evolving from data processing into an integrated data research platform, positioning itself upstream in the value chain to support the U.S. "finance on-chain" narrative and the data needs of on-chain AI Agent applications.

Going upward means extending to application scenarios and locking in end users. Space and Time initially focused on sub-second SQL indexing and oracle push, but recently began exploring C-end consumer scenarios, launching Dream.Space on Ethereum—a "vibe coding" product. Users can write smart contracts or generate data analysis dashboards in natural language. This transformation not only increases the frequency of its data service calls but also creates direct stickiness with users through the end-user experience.

Thus, it is evident that roles stuck in the middle, relying solely on selling data interfaces, are losing their survival space. The future B2B2C data track will be dominated by two types of players: one type controls the underlying pipeline, becoming infrastructure companies akin to "on-chain utilities"; the other type is close to user decision scenarios, transforming data into application experiences.

Summary

Under the triple resonance of the meme frenzy, the explosion of high-performance public chains, and the commercialization of AI, the on-chain data track is undergoing a structural shift. The iteration of trading speed, data dimensions, and execution signals has made "visible charts" no longer the core competitive advantage; the real moat is shifting towards "executable signals that help users make money" and "the underlying data capabilities that support all of this."

In the next 2–3 years, the most attractive entrepreneurial opportunities in the crypto data field will emerge at the intersection of Web2-level infrastructure maturity and Web3 on-chain native execution models. The data of major cryptocurrencies like BTC/ETH, due to their high standardization and characteristics similar to traditional financial futures products, has gradually been incorporated into the data coverage scope of traditional financial institutions and some Web2 fintech platforms.

In contrast, the data of meme coins and long-tail on-chain assets exhibits extremely high non-standardization and fragmentation characteristics—from community narratives and on-chain public sentiment to cross-chain liquidity, this information needs to be interpreted in conjunction with on-chain address profiling, off-chain social signals, and even second-level trading execution. It is precisely this difference that creates a unique opportunity window for crypto-native entrepreneurs in processing and trading long-tail assets and meme data.

We are optimistic about projects that will deeply cultivate in the following two directions:

Upstream Infrastructure—On-chain data companies with streaming data pipelines, ultra-low latency indexing, and cross-chain unified parsing frameworks that rival Web2 giants in processing capabilities. Such projects are expected to become the Web3 version of Databricks/AWS, and as users gradually migrate on-chain, trading volumes are likely to grow exponentially, with the B2B2C model possessing long-term compounding value.

Downstream Execution Platforms—Applications that integrate multi-dimensional data, AI Agents, and seamless trading execution. By transforming fragmented on-chain/off-chain signals into directly executable trades, these products have the potential to become the crypto-native Bloomberg Terminal, with their business model no longer relying on data access fees but monetizing through excess returns and signal delivery.

We believe that these two types of players will dominate the next generation of the crypto data track and build sustainable competitive advantages.
