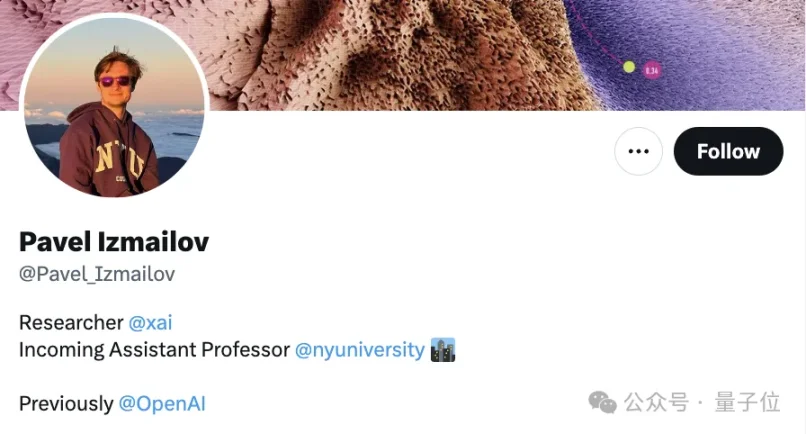

Pavel Izmailov, the alleged leaker fired by OpenAI half a month ago, now prominently states on his Twitter profile: Researcher @xai.

Authors: Bai Jiao and Heng Yu, reporting from Aofeisi

Source: Quantum Bit

The leaker who was just fired by OpenAI has quickly joined Musk's team.

The person in question, Pavel Izmailov (hereinafter "P"), is one of Ilya's allies and worked on the Superalignment team that Ilya led.

Half a month ago, P was dismissed for allegedly leaking confidential information related to Q*. It is still unclear exactly what he leaked, but the episode caused quite a stir at the time.

Now his Twitter profile prominently states:

Researcher @xai

It seems that Musk moves fast in recruiting talent. In addition to P, many outstanding talents have recently been brought under Musk's wing.

Onlookers online are in an uproar. Many praise him, saying he did a great job.

There are also those who are extremely critical, feeling that hiring someone who leaks confidential information is no different from picking up garbage.

xAI's recent output, including the release of Grok-1.5V, has also raised its profile considerably, prompting predictions such as:

xAI will become a major player in the game, competing with OpenAI and Anthropic.

Hiring the leaker fired by OpenAI

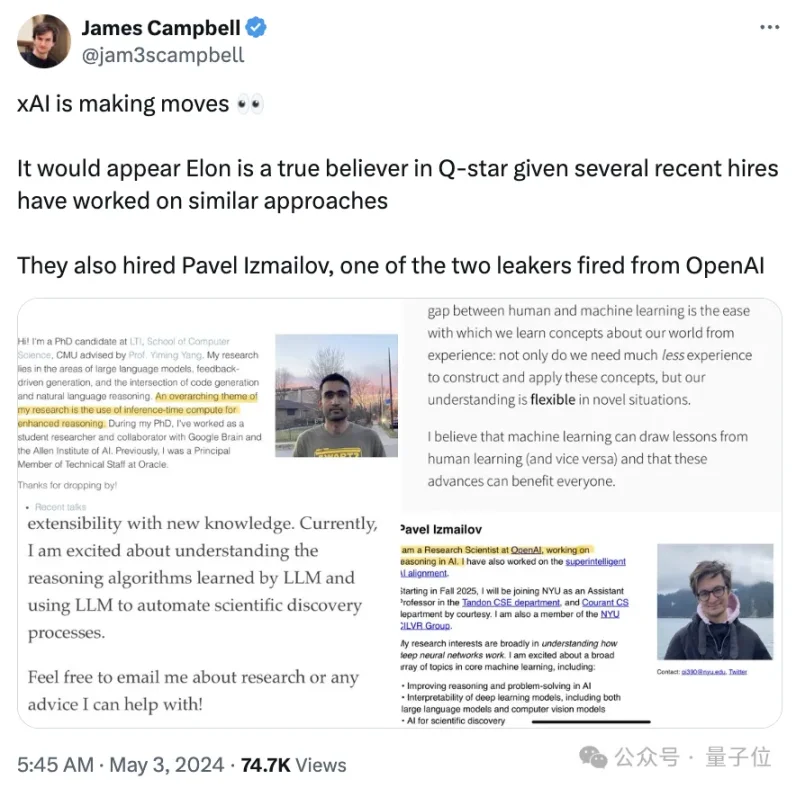

Here's the thing: a blogger who is extremely concerned about the latest developments in the field of large models made a big discovery:

There are quite a few new employees at Musk's xAI, aren't there???

Several of them work on research directions that are at least loosely related to Q*, OpenAI's most mysterious algorithm. It seems Musk is the real believer in Q*.

Who are the specific people who have just joined xAI?

The most eye-catching is the aforementioned P.

He is still a member of the CILVR group at New York University, and he revealed that he will join New York University Tandon CSE and Courant CS as an assistant professor in the fall of 2025.

Half a month ago, his personal page still stated that he was "working on large model reasoning at OpenAI."

Half a month later, things have changed.

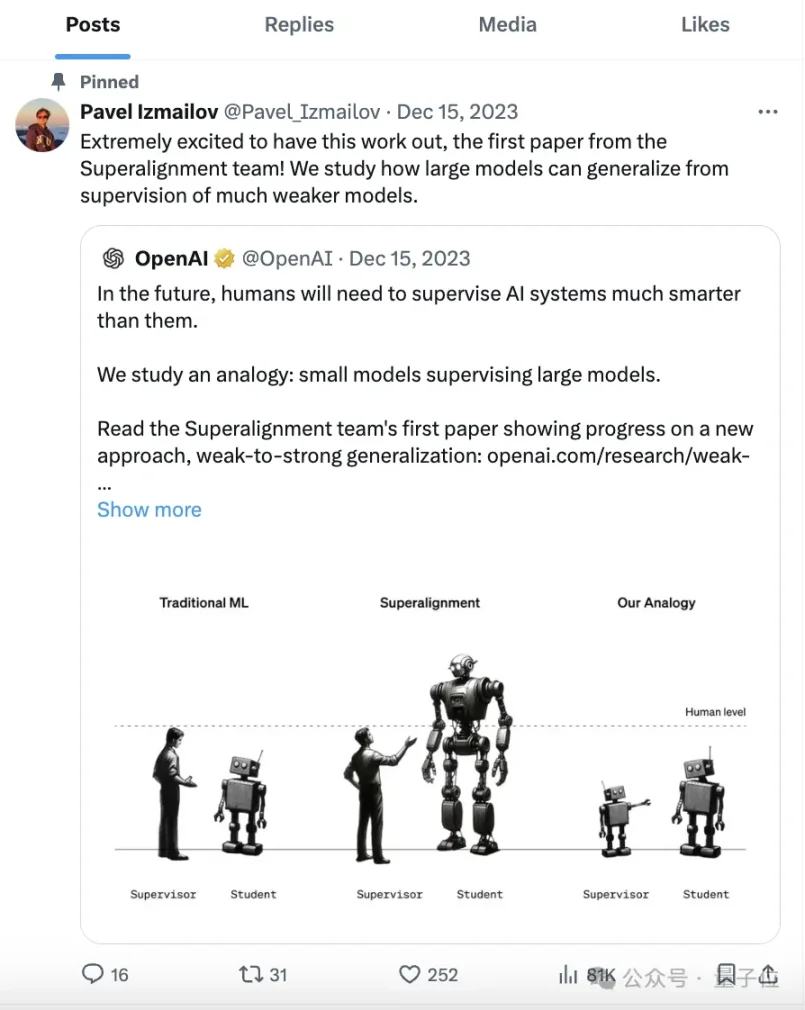

P's pinned tweet, however, has not changed: it announces the first paper from the Superalignment team, of which P is one of the authors.

The Superalignment team was formed in July of last year. It is one of three safety teams OpenAI set up to address potential safety issues with large models on different time scales.

The Superalignment team is responsible for the distant future, laying the groundwork for the safety of superintelligence that surpasses humans. It is led by Ilya Sutskever and Jan Leike.

Although OpenAI seems to attach great importance to safety, it is no longer a secret that there are significant internal disagreements about the safe development of AI.

This disagreement is even considered to be the main reason for the power struggle within OpenAI's board of directors in November last year.

It is rumored that Ilya Sutskever became the leader of the "coup" because he saw something that made him uneasy.

Many members of the Superalignment team led by Ilya also sided with him. During the subsequent "support Altman" emoji chain, members of the team mostly stayed silent.

After the power struggle subsided, however, Ilya seemed to vanish from OpenAI. Rumors spread widely, but he has not appeared in public since, nor has he gone online to clarify or refute them.

As a result, the current state of the Superalignment team is unknown.

P was a member of the Superalignment team and reported to Ilya, so netizens speculate that his firing half a month ago was Altman settling scores after the fact.

Talent rushes to join Musk overnight

Although the full picture of Q* is still unknown to the public, various signs indicate that it is dedicated to integrating large models with reinforcement learning, search algorithms, etc., to enhance AI reasoning capabilities.

Besides P, the most talked-about hire, several other newcomers to xAI work on research directions that are more or less related to it.

Qian Huang, currently a doctoral student at Stanford University.

Qian Huang has been working at Google DeepMind since last summer. She now lists @xai on Twitter, though her position there is not yet known.

However, from her personal GitHub page, it can be seen that her research direction is to integrate machine reasoning with human reasoning, especially the rationality, interpretability, and scalability of new knowledge.

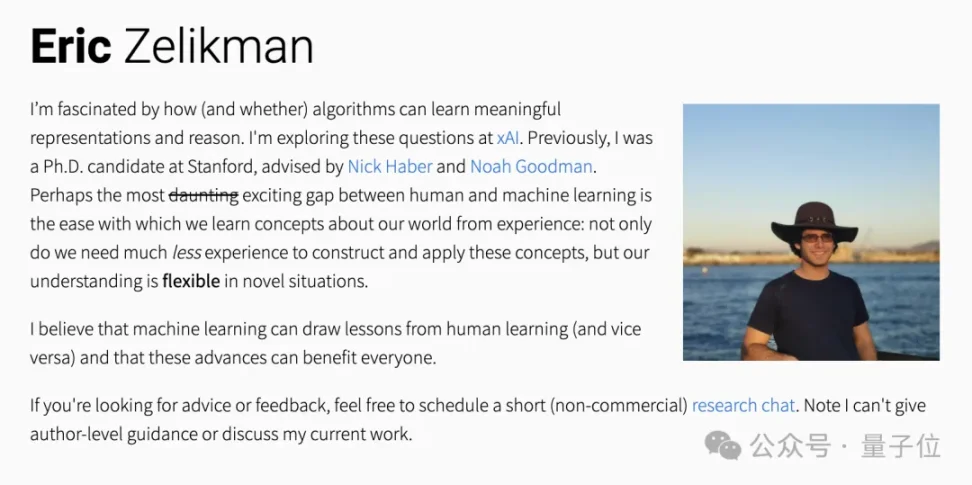

Eric Zelikman, a doctoral student at Stanford, clearly writes on Twitter "study why @xai".

Previously, he spent some time at Google Research and Microsoft Research.

On his personal page, he says, "I am fascinated by how (and whether) algorithms can learn meaningful representations and reasoning, and I am researching this at xAI."

In March of this year, his team released the Quiet-STaR algorithm, whose name does indeed abbreviate to Q*, and which lets large models learn to think on their own.

Aman Madaan, a doctoral student at the Language Technologies Institute at Carnegie Mellon University.

His research areas include large language models, feedback-driven generation, and the intersection of code generation and natural language reasoning. The primary focus of his research is to enhance reasoning capabilities using inference-time compute.

During his doctoral studies, Aman was a student researcher and collaborator at Google Brain and the Allen Institute for Artificial Intelligence; before that, he was a key member of the technical staff at Oracle.

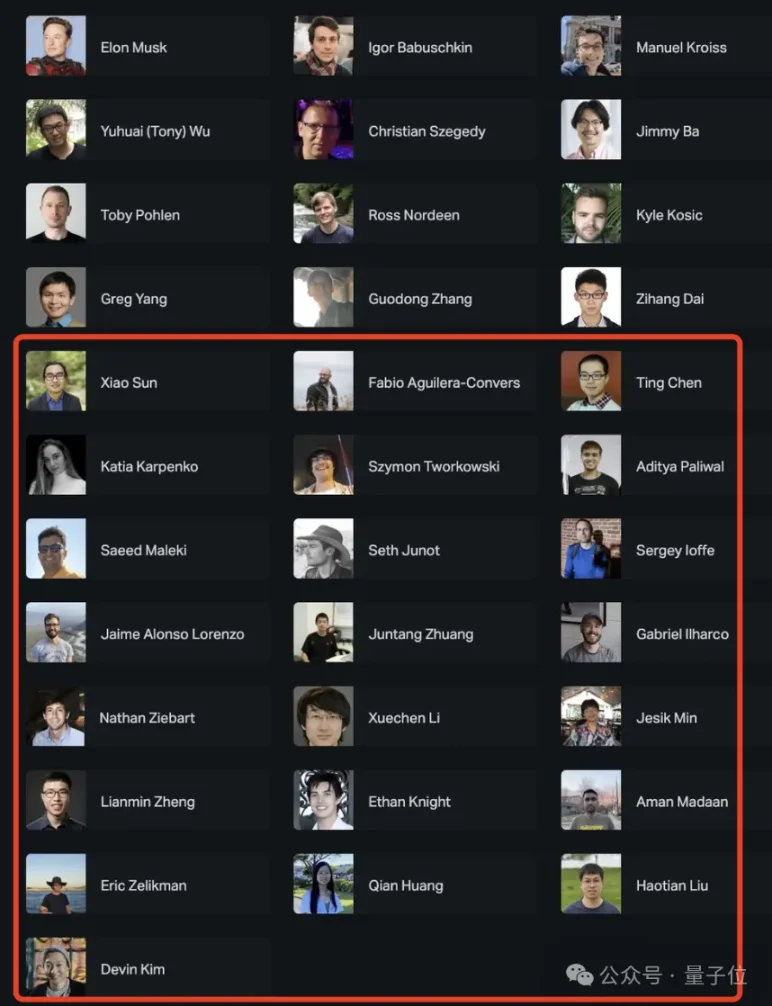

With these new employees, including Pavel Izmailov, Musk's technical staff has grown to 34 people (excluding Musk himself), nearly triple the original founding team of 12.

Seven of the new members are Chinese. Together with the five Chinese members of the founding team, that makes 12 in total.

- Xiao Sun, previously employed at Meta and IBM, holds a Ph.D. from Yale and is an alumnus of Peking University.

- Ting Chen, previously employed at Google DeepMind and Google Brain, graduated from Beijing University of Posts and Telecommunications.

- Juntang Zhuang, previously employed at OpenAI, a core contributor to DALL-E 3 and GPT-4, completed his undergraduate studies at Tsinghua University and his master's and doctoral degrees at Yale.

- Xuechen Li, who recently graduated with a Ph.D. from Stanford, is a core contributor to the Alpaca series of large models.

- Lianmin Zheng, a computer science Ph.D. from UC Berkeley, is a creator of Vicuna and Chatbot Arena.

- Qian Huang, a Ph.D. student at Stanford, graduated from Nankai High School in Tianjin.

- Haotian Liu, a graduate of Zhejiang University, is currently studying at the University of Wisconsin-Madison and is the first author of LLaVA.

In terms of background, the new hires come mainly from Google, Stanford, Meta, OpenAI, and Microsoft, and they have extensive experience training large models such as the GPT series, the Alpaca series, and models from Google and Meta.

In terms of timing, most of the new members joined in February and March of this year: 13 people in total, or on average about one new member every 5 days. By contrast, only 5 people joined between August and October of last year.
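The hiring pace quoted above can be checked with a quick calculation. This is only a sketch: it assumes "this year" is 2024, that the window covers all of February and March (60 days in a leap year), and that all 13 hires fall inside it. The variable names are illustrative.

```python
from datetime import date

# Assumption: the hiring window is all of February and March 2024.
window_start = date(2024, 2, 1)
window_end = date(2024, 4, 1)  # exclusive end of the window
new_hires = 13                 # figure quoted in the article

days_in_window = (window_end - window_start).days
days_per_hire = days_in_window / new_hires

print(f"{days_in_window} days / {new_hires} hires = {days_per_hire:.1f} days per hire")
```

Under these assumptions the result is a little under five days per hire, consistent with the article's rounded figure.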

When combined with the corresponding progress of Grok, it is possible to see Musk's recruitment plan for xAI at each stage.

For example, on March 29th of this year, Musk suddenly released Grok-1.5, whose context length jumped from the original 8,192 tokens to 128k, on par with GPT-4.

A month earlier, in February, former OpenAI employee Juntang Zhuang joined xAI. At OpenAI he is credited with inventing the algorithm behind GPT-4 Turbo, which supports 128k-token contexts.

Additionally, on April 15th of this year, the multi-modal model Grok-1.5V was released, which can handle various visual information including text, charts, screenshots, and photos, among others.

A month before that, in March, Haotian Liu, the first author of LLaVA, had joined. LLaVA is an end-to-end trained multi-modal large model that demonstrates capabilities similar to GPT-4V. The newer version, LLaVA-1.5, achieved SoTA on 11 benchmarks.

Now, let's boldly imagine what kind of new upgrades Grok might have with the influx of new talent?

Netizen: Anyway, where is Grok-1.5 (it hasn't been open-sourced yet).

But no matter what, based on Musk's previously stated talent standards, this netizen has revealed the "truth":

Everyone says that Musk's large model company is all about talent, but in fact, Musk doesn't care if you have talent or not.

He said, as long as you can work 80 hours a week without breaking down, you can join them.

80 hours?!

By Quantum Bit's calculation, that works out to nearly 11.5 hours a day, every day, with no days off…

Let's not even talk about intelligence; we just can't do it physically.
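The daily figure comes from dividing the weekly total by the number of days worked. A minimal sketch of that arithmetic follows. The five-day comparison is an added assumption for illustration, not something from the article.

```python
HOURS_PER_WEEK = 80  # the weekly workload quoted above

# Spread over all seven days, with no day off.
hours_per_day_no_rest = HOURS_PER_WEEK / 7

# Hypothetical comparison: the same total squeezed into a five-day week.
hours_per_day_five_day_week = HOURS_PER_WEEK / 5

print(f"7-day week: {hours_per_day_no_rest:.1f} hours per day")
print(f"5-day week: {hours_per_day_five_day_week:.1f} hours per day")
```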

