Author: E2M Researcher, Steven

Preface

The trade-offs between Ethereum and Celestia + Cosmos roughly boil down to the following: high orthodoxy + high security + high decentralization vs. high scalability (low cost + good performance + easy iteration) + good interactivity. The latter appeals to many users mainly because it meets the needs of both sides of the market: users, and early-stage projects that are small in scale and short on development time:

Users: In the perception of many users, the demand for usability and affordability of products outweighs security (whether it is Web2 or Web3);

Early-stage projects: They need good scalability so they can adjust strategy at any time, and lower costs to make the project more sustainable.

It is therefore hard to call modular blockchains like Celestia "Ethereum killers." Rather, a large share of users want high-performance, cost-effective, and scalable Web3 public chains. Ethereum's orthodoxy, security, and network effect remain unshakable, but that does not prevent users from making other choices in specific scenarios.

1. Background

The Cancun upgrade is about to take place, and EIP-4844 (Proto-Danksharding) is expected to further reduce Layer2 gas fees.

Over the next few years, Ethereum plans to complete its sharding solution, Danksharding; EIP-4844 in the Cancun upgrade is only one step toward it.

However, Celestia's mainnet launched on October 31, 2023, and Avail (formerly Polygon Avail, now a separate project) will very likely launch in the first quarter of this year. These third-party consensus + DA layers have overtaken Ethereum on modularization, achieving goals that Ethereum will not reach for several years. They significantly reduced Layer2 costs before the Cancun upgrade, have become the DA layer of choice for many Layer2s, and are encroaching on Ethereum's own DA layer.

In addition, modular blockchains provide more diverse services for future public chains, and service providers such as Altlayer and Caldera have benefited from this, hoping to bring forth more specialized public chains (application chains) and create a better environment for Web 3.0 applications.

This article breaks the blockchain down into its modules, takes a first look at the modular blockchain project Celestia, and examines Blobs in the Ethereum Cancun upgrade in more depth.

1.1 Origin

The earliest concept of modular blockchains comes from the 2018 paper "Fraud and Data Availability Proofs," co-written by Celestia co-founder Mustafa Al-Bassam and Vitalik Buterin. This paper mainly discusses how to solve the scalability problem without sacrificing the security and decentralization of Ethereum. Surprisingly, it provides a technical solution not only for Ethereum but also for third-party DA layers.

The general logic is that full nodes are responsible for producing blocks, while light nodes are responsible for verification.

What is Data Availability Sampling (DAS)?

PS: This technology is the core of Celestia's technology and inadvertently provides a solution for third-party DA layers.

The content is translated and adapted from "Data Availability Sampling: From Basics to Open Problems" by Joachim Neu, published by Paradigm.

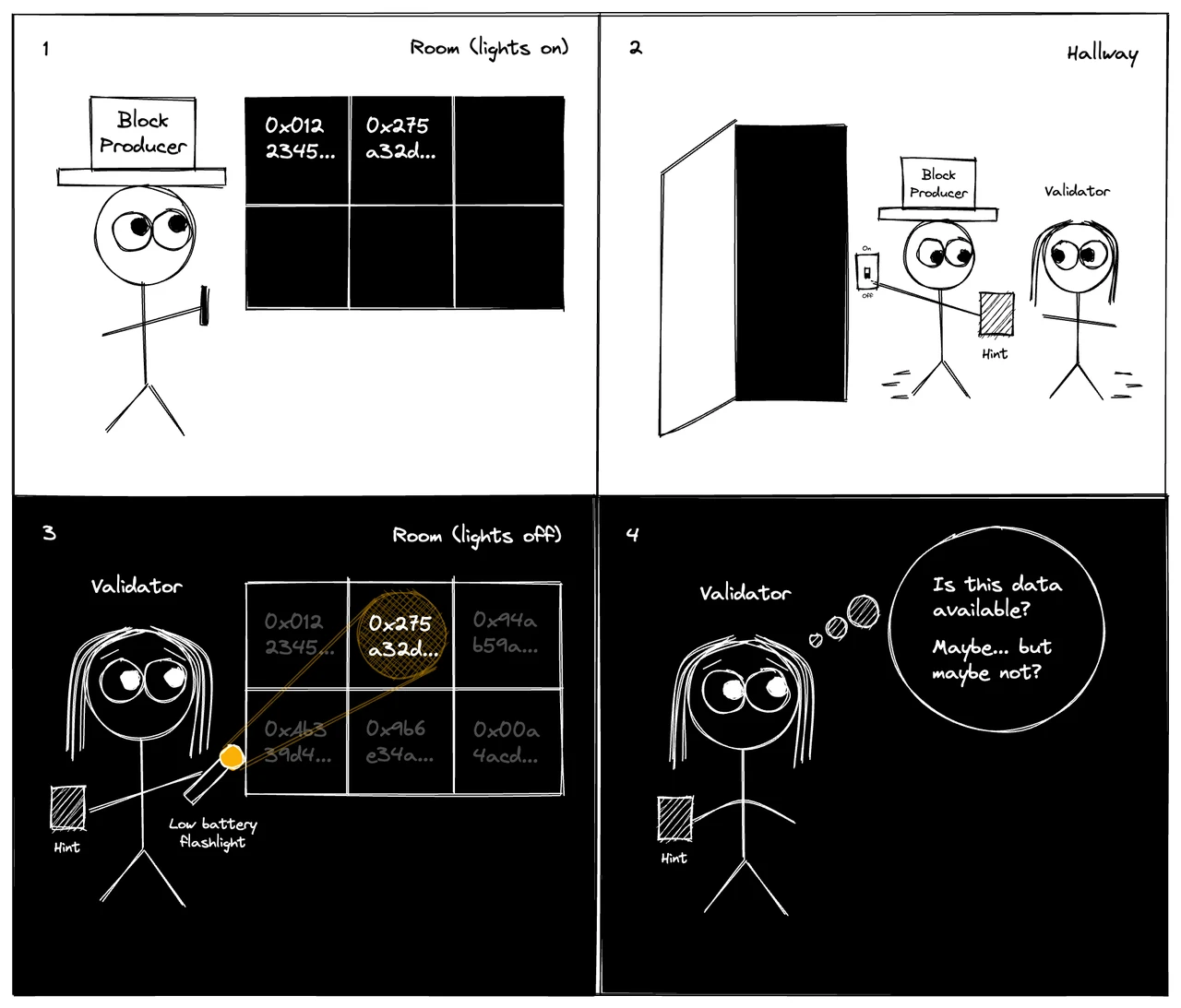

Refer to the following "little black room" model:

There is a notice board in a dark room (see the comic below). First, the block producer enters the room and has the opportunity to write some information on the notice board. When the block producer exits, it can provide a small amount of information to the verifier (the size of which does not scale linearly with the original data). You enter the room with a flashlight, which has a very narrow beam and low battery power, so you can only read a very small amount of text in different positions on the notice board. Your goal is to convince yourself that the block producer has indeed left enough information on the notice board, so that if you turn on the light and read the entire notice board, you can recover the file.

This model suits Ethereum naturally and needs little optimization, because Ethereum has enough verifiers (validation nodes). Public chains with relatively few validation nodes, however, need higher costs and more complex verification methods to ensure security.

Projects with relatively few validation nodes face two situations: either the producer behaves honestly and writes the complete file, or the producer misbehaves and omits a small part of the information, rendering the entire file unusable. Checking only a few positions on the notice board cannot reliably distinguish between these two situations.

One solution is erasure coding with Reed-Solomon codes.

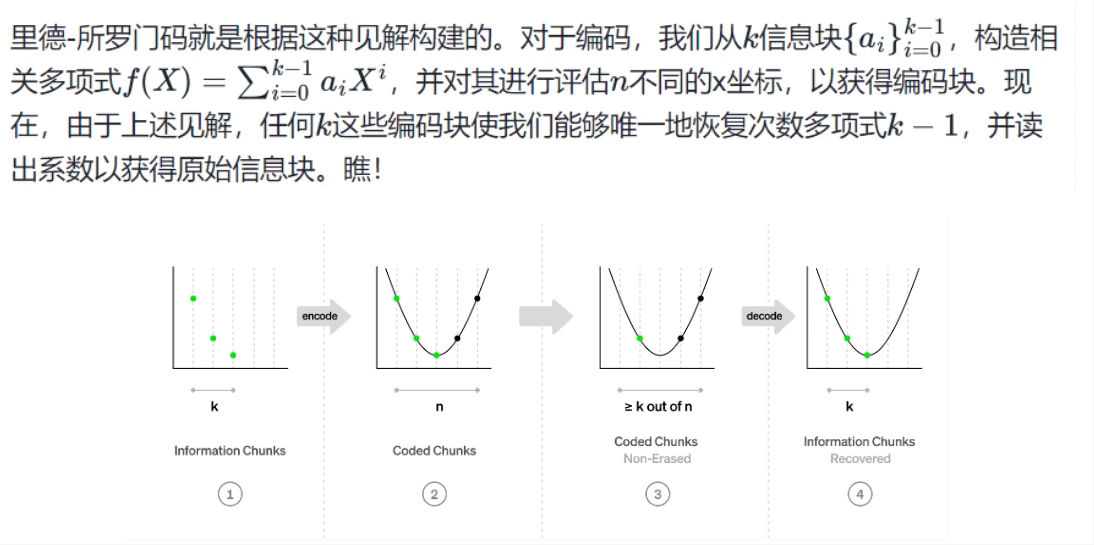

The working principle of erasure codes is as follows: k information blocks are encoded into a longer vector of n coding blocks. The ratio r=k/n measures the redundancy introduced by the code. Then, from a subset of the coding blocks, we can decode the original information blocks.

In simple terms, think of how two points determine a line. Once k and n (and hence r) are fixed, the encoding is fully determined by the original data, and the original can be restored from any sufficiently large subset of the coded blocks, just as a line can be restored from any two points on it.

Reed-Solomon codes generalize this idea to polynomials. Once enough evaluations of the polynomial at different positions are known, its value at any other position can be obtained (by first reconstructing the polynomial and then evaluating it).
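The polynomial view can be made concrete with a toy erasure code. This is a minimal sketch, not a production Reed-Solomon implementation: the field modulus `P = 257`, the function names, and the choice of evaluation points 0..n-1 are all assumptions made for illustration.

```python
# Toy Reed-Solomon erasure code over a small prime field (illustrative only).
P = 257  # hypothetical field modulus; every byte value 0..255 fits below it


def lagrange_eval(points, x):
    """Evaluate at x the unique polynomial of degree < len(points)
    passing through the given (xi, yi) points, working modulo P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def encode(data, n):
    """Treat the k data symbols as evaluations at x = 0..k-1
    and extend them to evaluations at x = 0..n-1."""
    points = list(enumerate(data))
    return [lagrange_eval(points, x) for x in range(n)]


def decode(known, k):
    """Recover the k data symbols from any k known (position, value) pairs."""
    assert len(known) >= k
    return [lagrange_eval(known[:k], x) for x in range(k)]


data = [72, 105, 33, 7]                              # k = 4 information symbols
coded = encode(data, 8)                              # n = 8, rate r = k/n = 1/2
survivors = [(x, coded[x]) for x in (1, 4, 6, 7)]    # any 4 of the 8 suffice
recovered = decode(survivors, 4)
```

Because the code is systematic in this sketch, the first k coded symbols are the data itself, and any k surviving symbols reconstruct the rest.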

Returning to our data availability problem: the block producer is no longer required to write the original file on the notice board. Instead, it cuts the file into k chunks, encodes them with a Reed-Solomon code, for example at rate r = 1/2, and writes the n = 2k encoded chunks on the notice board. For now, let's assume that the block producer at least follows the encoding honestly; later we will see how to eliminate this assumption. Consider the two cases again: either the producer behaves honestly and writes down all the chunks, or the producer misbehaves and wants to keep the file unavailable. Remember, we can recover the file from any k = r·n of the encoded chunks. Therefore, to keep the file unavailable, the block producer can write at most k - 1 chunks. In other words, more than half of the encoded chunks must now be missing!

But now these two cases, a notice board filled with chunks, and a half-empty notice board, are easily distinguishable: you check a very small random sample of positions on the notice board, and if each sampled position has its respective chunk, the file is considered available; if any sampled position is empty, the file is considered unavailable.
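To see how quickly a few samples separate the two cases, the chance of being fooled can be computed directly. This is a sketch under stated assumptions: a rate-1/2 code, samples drawn at distinct uniformly random positions, and a hypothetical board size of 256 chunks; the function name is illustrative.

```python
from fractions import Fraction


def fooling_probability(n, available, samples):
    """Probability that `samples` distinct random positions out of n
    all land on one of the `available` chunks (sampling without replacement)."""
    prob = Fraction(1)
    for i in range(samples):
        prob *= Fraction(available - i, n - i)
    return prob


# Rate-1/2 code with n = 256 chunks: a withholding producer publishes at most 127.
p = fooling_probability(256, 127, 20)
```

Each additional sample at least halves the probability of being fooled, so with 20 samples it is already below one in a million.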

What are Fraud Proofs?

Fraud proofs are one method of ruling out invalid encodings. The method relies on some sampling nodes being powerful enough to sample so many chunks that they can discover inconsistencies in the block encoding and issue fraud proofs for the invalid encoding, marking the problematic file as unavailable. Work in this area aims to minimize the number of chunks that nodes must check (and forward as part of the fraud proof) to detect fraudulent blocks.

Ultimately, this approach sacrifices a small part of security, and in extreme cases, data may be lost.

Interestingly, this approach laid the foundation for the birth of third-party DA layer projects such as Celestia and Avail, creating competitors for Ethereum.

In 2019, Mustafa Al-Bassam wrote "LazyLedger," which simplified the responsibilities of the blockchain to ordering transactions and ensuring data availability, with other modules responsible for execution and verification (at that time, these were not yet divided into different layers), thus solving the scalability problem of the blockchain. This white paper should be considered the prototype of modular blockchains. Mustafa Al-Bassam is also one of the co-founders of Celestia.

Celestia is the first modular blockchain solution. Public chains initially existed as execution layers for running smart contracts. The Rollup scaling approach further clarified the concept of the execution layer: smart contracts are executed off-chain, and the execution results are compressed into proofs and uploaded to the main chain.

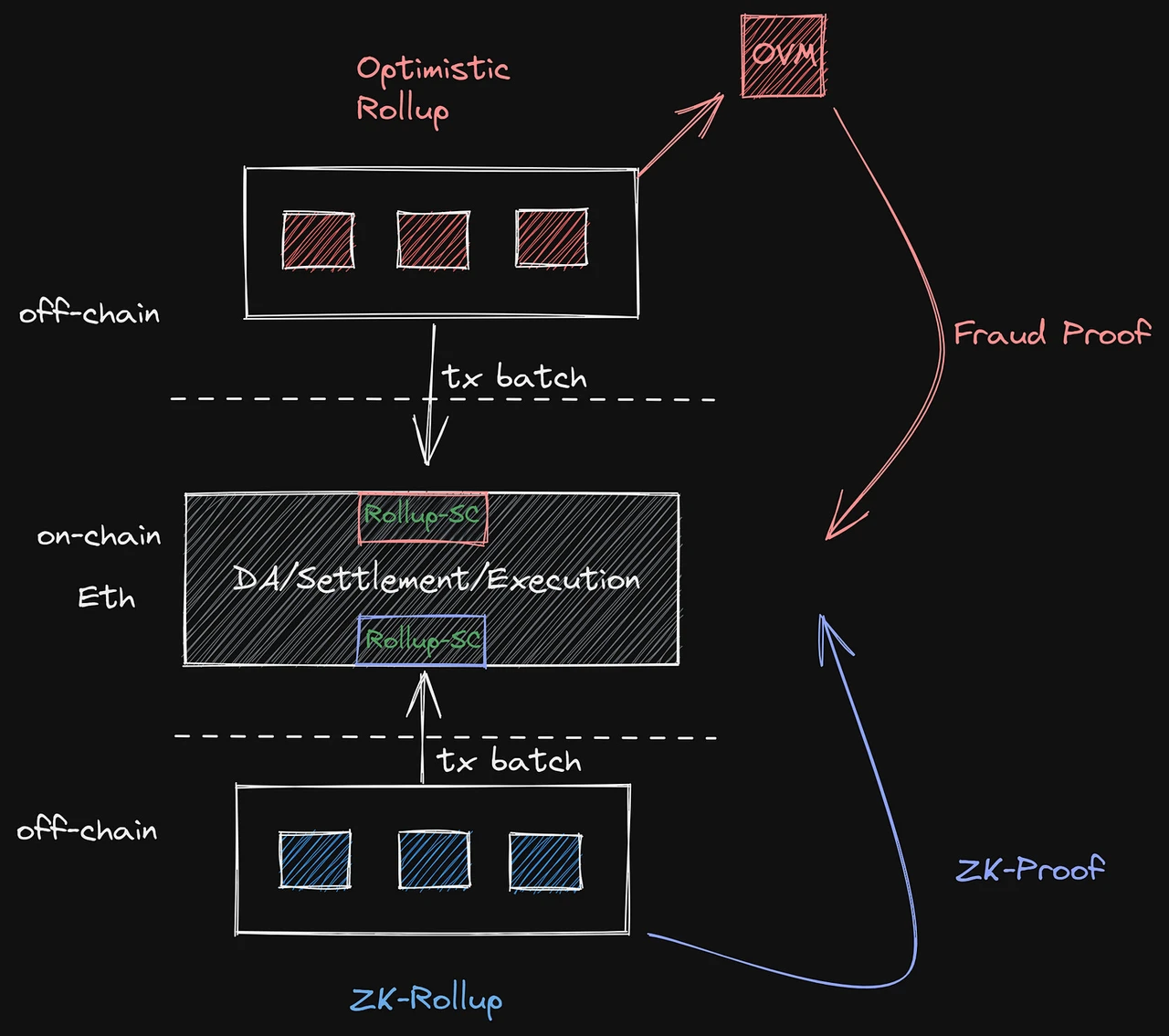

A Brief Supplement on Rollup Proofs

A fraud proof system accepts computation results optimistically. You can ask someone with a staked deposit to sign a message of the following form: "I prove that if computation C is performed with input X, output Y will be obtained." By default you trust this message, but others with a staked deposit have the opportunity to challenge the result. They can sign a message stating, "I disagree, the output should be Z, not Y." Only after a challenge is initiated do all nodes perform the computation. Whichever party is wrong loses its deposit, and all computations based on the incorrect result are redone.

A ZK-SNARK is a form of cryptographic proof that can directly verify "after input X, executing computation C will output Y." At the cryptographic level, this verification mechanism is "reliable" because if performing computation C on input X does not result in Y, a validity proof cannot be generated. Even if running computation C itself takes a long time, the proof can be verified quickly. ZK means that proofs can be completed and verified more efficiently, so Vitalik is very supportive of ZK-Rollup. However, like sharding, its technical difficulty is far greater than that of fraud proofs and it will take many years to implement, and the greater efficiency may bring additional costs.
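The challenge flow described above can be sketched in a few lines. Everything here is hypothetical: the computation `compute`, the stake size, and the names `Claim` and `resolve` are illustrative and do not correspond to any specific rollup's contracts.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    asserter: str
    x: int   # input X
    y: int   # claimed output Y of computation C on X


def compute(x):
    """Hypothetical computation C; a real rollup would re-execute transactions."""
    return x * x + 1


def resolve(claim, challenger, z, deposits, stake=10):
    """Re-run C only because a challenge was raised, then slash
    whichever party signed a wrong output."""
    truth = compute(claim.x)
    if claim.y != truth:
        deposits[claim.asserter] -= stake
    if z != truth:
        deposits[challenger] -= stake
    return truth


deposits = {"alice": 10, "bob": 10}
bad_claim = Claim(asserter="alice", x=3, y=11)            # the correct output is 10
result = resolve(bad_claim, challenger="bob", z=10, deposits=deposits)
```

The key property is that the expensive computation runs only when someone disputes a claim; unchallenged claims are accepted as-is.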

1.2 The Trade-offs of the Blockchain Impossibility Triangle

The blockchain impossibility triangle: scalability, decentralization, security.

To define a simple measure,

Scalability: Scalability (good) = TPS (high) + Gas fee (low) + Verification difficulty (low)

Decentralization, security: Decentralization (high) + Security (good) = Number of nodes (many) + Hardware requirements for individual nodes (low)

Generally, a chain can fully satisfy only one side of this trade-off (scalability on one side, decentralization and security on the other), sacrificing the other side to some extent. This is why Ethereum's progress on scalability is extremely slow; Vitalik and the Ethereum Foundation prioritize security and decentralization.

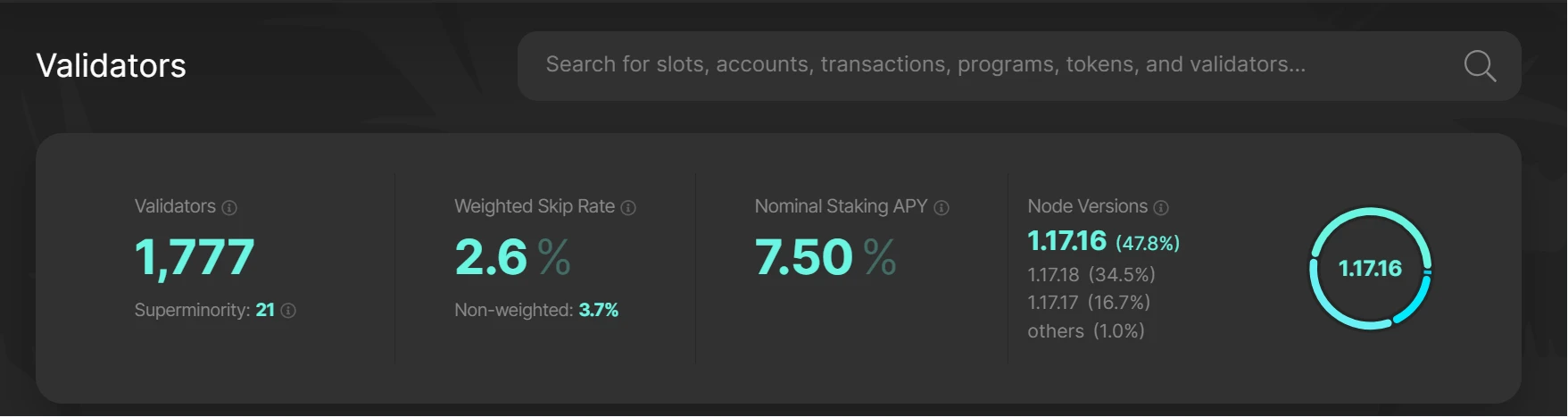

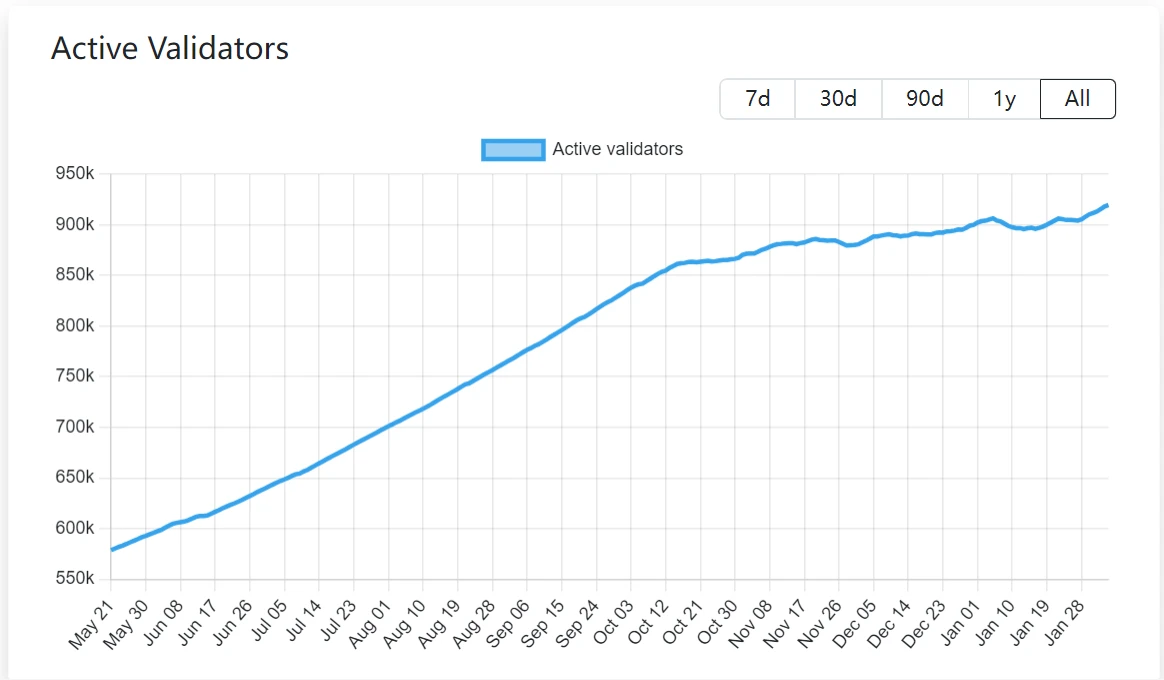

Some traditional high-performance Layer1s, such as Solana (1,777 validators) and Aptos (127 validators), began pursuing scalability while their validator counts were not as high as Ethereum's (5,000+), at the cost of high node requirements and operating costs. In contrast, Ethereum, with several hundred thousand validators (currently 900k+), ensured decentralization and security before pursuing scalability, which shows the Ethereum Foundation's emphasis on these two characteristics.

Solana validators count:

Data source: https://solanabeach.io/validators

Ethereum validators count:

Data source: https://www.validatorqueue.com/

In addition, compared to the strict requirements and centralization of validators for high-performance Layer1, Ethereum's future upgrades will further reduce the verification difficulty for validators, thereby further lowering the requirements for users to become validators.

1.3 The Importance of Data Availability

Typically, transactions submitted to the chain first enter the Mempool, are "picked" by miners, packaged into blocks, and appended to the blockchain. The block containing this transaction is broadcast to all nodes in the network. Other full nodes will download this new block, perform complex calculations, and verify each transaction in it to ensure their authenticity. The complex calculations and redundancy form the security foundation of Ethereum, but also bring problems.

1.3.1 Data Availability

There are usually two types of nodes:

Full nodes - download and verify all block information and transaction data.

Light nodes - non-full verification nodes, easy to deploy, only verify block headers (data digests).

First, it must be ensured that when a new block is produced, all the data in that block is indeed published, allowing other nodes to verify it. If a full node does not publish all the data in a block, other nodes cannot detect whether the block hides malicious transactions.

Nodes need to obtain all transaction data within a certain period of time and verify that no confirmed transaction data remains unverified. This is what is commonly referred to as data availability. If a full node conceals some transaction data, other full nodes will refuse to follow this block after verification, but light nodes that only download block headers cannot verify it and will continue to follow the forked block, affecting security. Although the blockchain usually slashes the full node's deposit, this also causes losses to the users who staked with that node. And when the profit from concealing data exceeds the cost of the penalty, the node has an incentive to conceal; in that case, the actual damage falls only on the staked users and other users of the chain.

On the other hand, if full nodes gradually become centralized, there is a possibility of collusion between nodes, which could endanger the security of the entire chain.

Data availability is becoming increasingly important, partly because of the Ethereum PoS merge, and also due to the development of Rollup. Currently, Rollup runs a centralized sequencer. Users transact on Rollup, and the sequencer sorts, packages, and compresses the transactions, and publishes them to the Ethereum mainnet, where the full nodes on the mainnet verify the data using fraud proofs (Optimistic) or validity proofs (ZK). Only if all the data in the block submitted by the sequencer is truly available, can the Ethereum mainnet track, verify, and reconstruct the Rollup state based on this, ensuring the authenticity of the data and the safety of user assets.

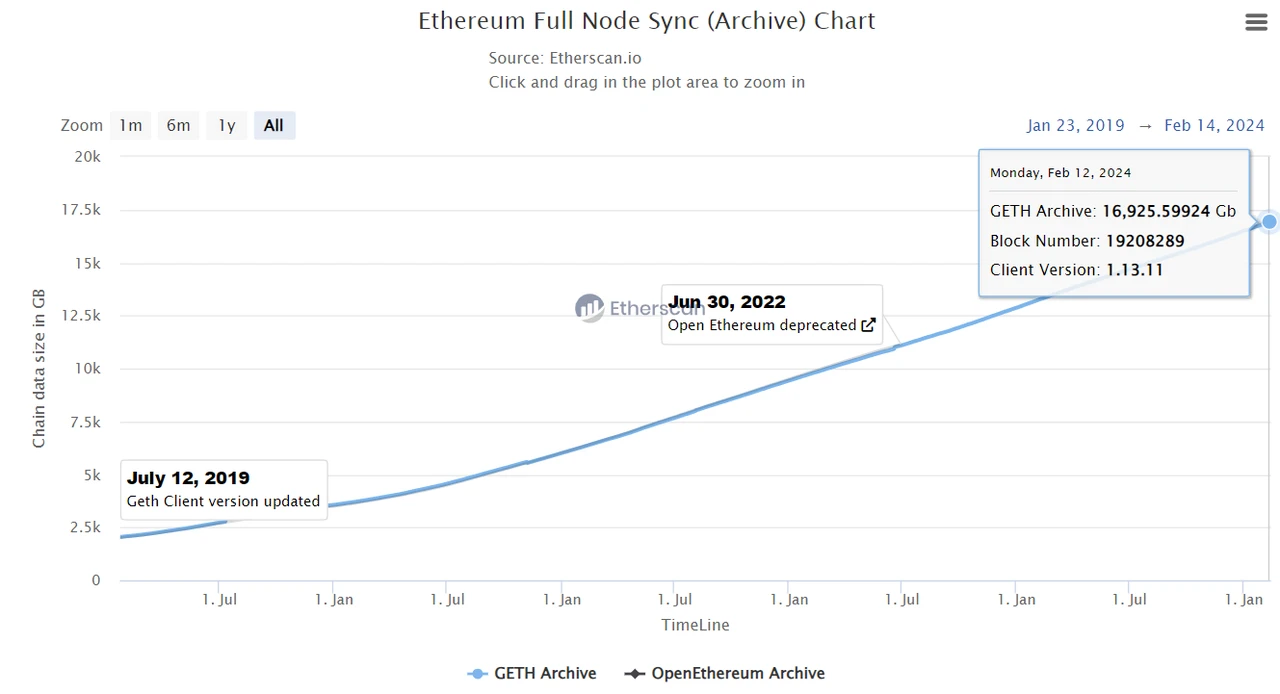

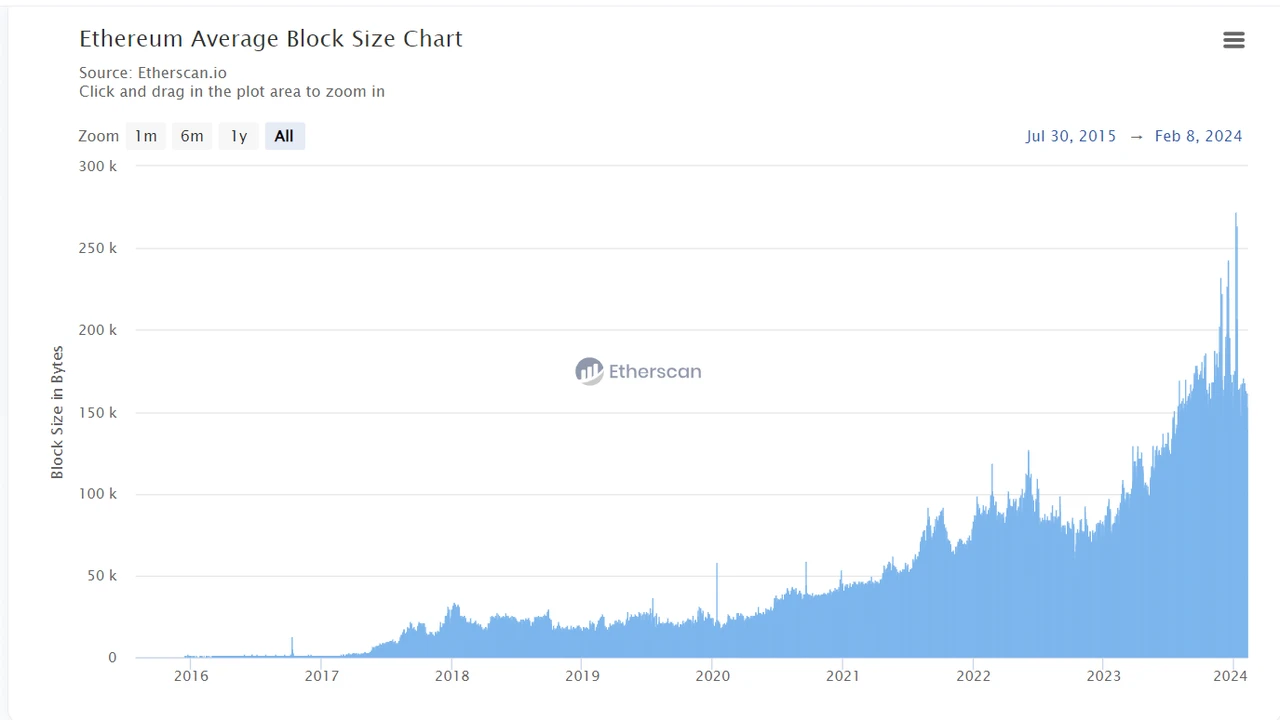

1.3.2 State Explosion and Centralization

State explosion refers to the ever-growing historical and state data accumulated by Ethereum full nodes. Running a full node requires more and more storage resources, which raises the threshold for running a node and pushes the network toward centralization.

Image Source: https://etherscan.io/chartsync/chainarchive

Therefore, there is a need for a way for nodes to synchronize and verify block data without downloading all of it, downloading only some redundant fragments of the block.

So far, we understand the importance of data availability. So, how can we avoid the "tragedy of the commons"? That is, everyone understands the importance of data availability, but there still needs to be some tangible incentive to make everyone use a separate data availability layer.

It's like environmental protection: everyone knows it is important, but when people see garbage on the roadside they think, "Why should I pick it up? Why not someone else? If I pick up the garbage, what benefit do I get?"

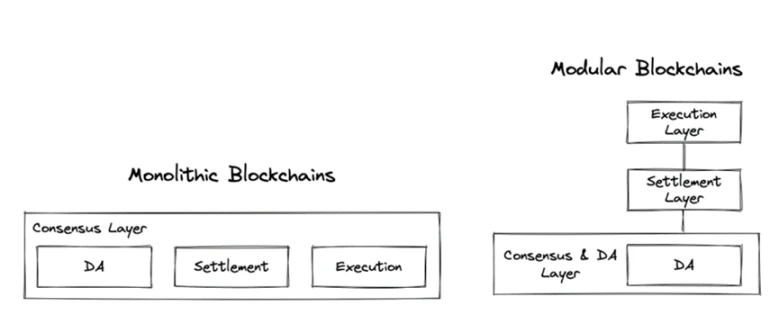

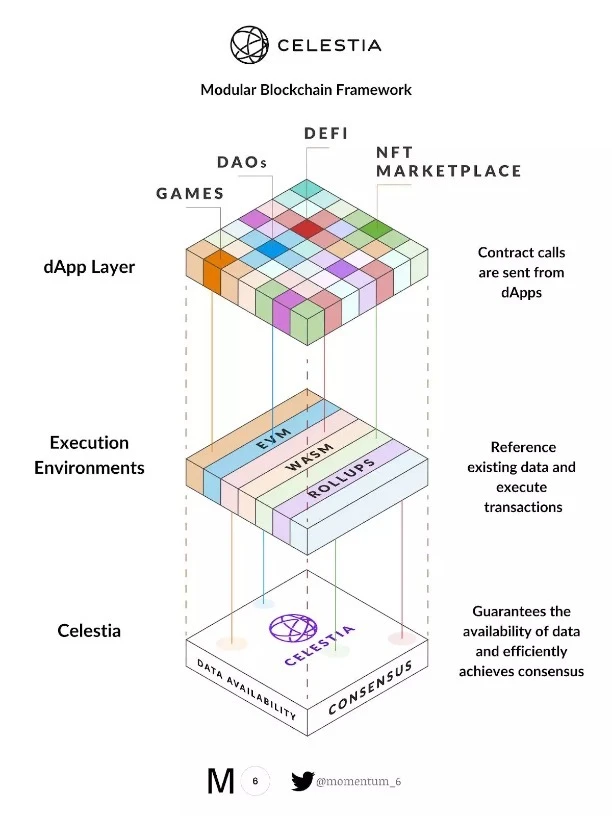

1.4 Simple Division of Blockchain

When there is on-chain activity (e.g., Swap, Stake, Transactions…), it goes through the following 4 steps:

- Execution: Start the transaction

- Settlement: Verify data, handle issues

- Consensus: Agreement among all nodes

- Data Availability: Synchronize data to the chain

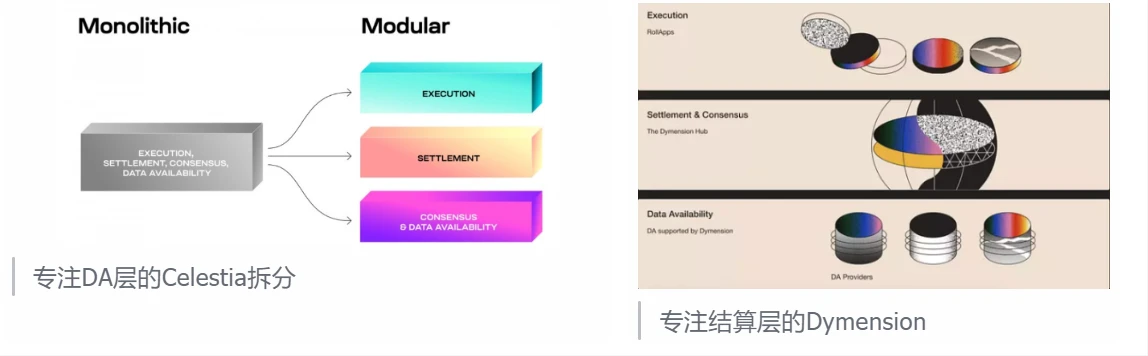

Based on this, "LazyLedger" proposes to modularize the blockchain, while Celestia standardizes the modular blockchain:

Execution Layer

- Responsibility: Responsible for executing smart contracts and processing transactions, providing execution results to the settlement layer in the form of proofs; also the place for deploying various user-facing applications

- Corresponding projects: Various stacks (OP Stack, ZK Stack, Cosmos Stack), Layer2 on Ethereum

Settlement Layer

- Responsibility: Responsible for providing global consensus and security, verifying the correctness of L2 execution results, updating user states; e.g., changes in user account assets, updates to the chain's own state (Token transfers, new contract deployment)

- Corresponding projects: Ethereum, BTC

- PS: The more nodes, the higher the security

Consensus Layer

- Responsibility: Responsible for the consistency of full nodes, ensuring that newly added blocks are valid and determining the transaction order in the mempool

- Corresponding projects: Ethereum (Beacon chain), Dymension

Data Availability Layer

- Responsibility: Ensures the availability of data, allowing the execution layer and settlement layer to operate separately; all original transactions of the execution layer are stored here, and the settlement layer is verified through the DA layer

- Corresponding projects: Celestia, Polygon Avail, EigenDA (DA done by Eigenlayer), Eth Blob + future Danksharding, Near, centralized DA

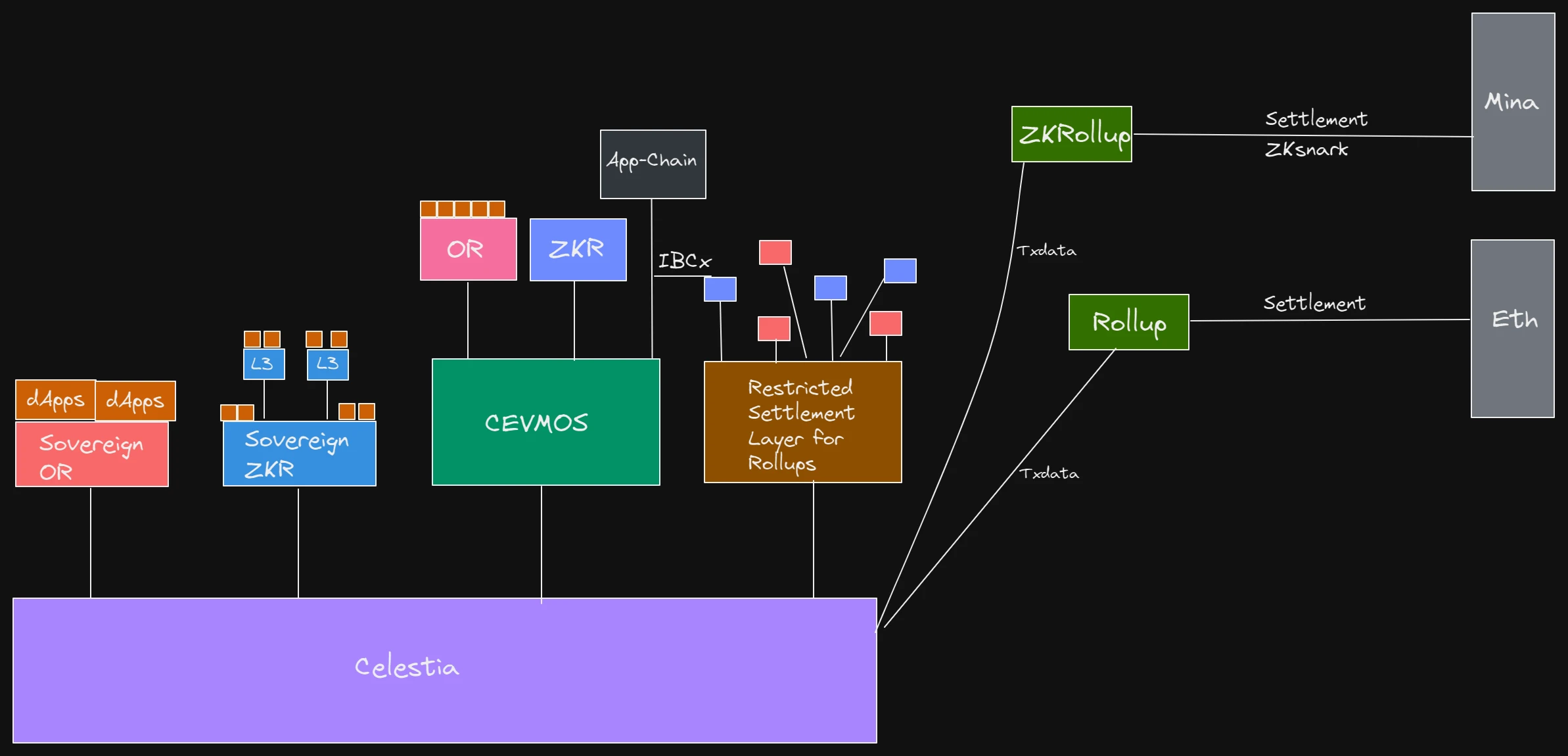

Celestia focuses on the data availability and consensus layer of Layer1 blockchain; Optimism and Arbitrum focus on the execution layer of Layer2 blockchain; Dymension focuses on the settlement layer.

2. Ethereum Modularization Progress

2.1 Current Architecture

A picture summarizes it, without further ado.

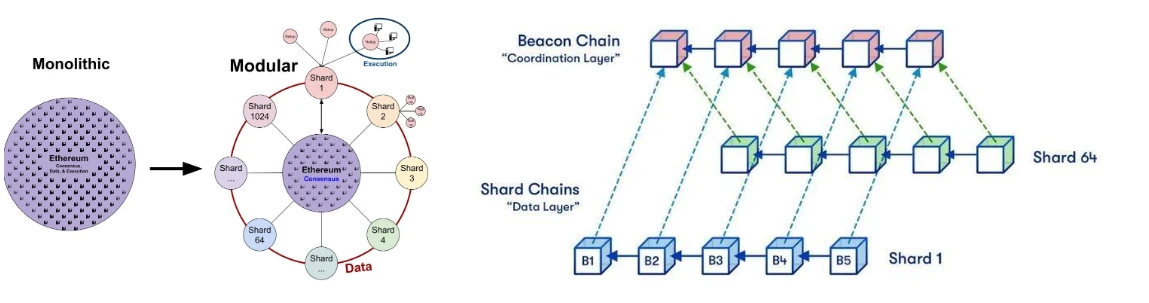

2.2 Long-term Development - Shard into Multiple Work Chains

Starting from the Paris upgrade (the Merge), Ethereum has been gradually modularizing itself.

- Consensus Layer / Settlement Layer: Beacon chain

- Execution Layer: (Fully outsourced) Rollup

- DA Layer: Calldata (now) / Blob (after Cancun upgrade) / Danksharding (future/ultimate)

Vitalik's current view is that the core of Ethereum's future scaling is Rollup-centric, and the potential endgame can be seen in the following diagram:

Ethereum is sharded into multiple work chains with different specialties. The consensus, security, and settlement layers are inherited from Ethereum, and each chain has a corresponding Rollup responsible for the execution layer.

From the research so far, there is limited public information on the technical difficulty of sharding, and the Ethereum Foundation believes that full sharding will take several years to implement.

2.3 Transition Solution for Sharding - EIP-4844 Proto-Danksharding / Cancun Upgrade

The Dencun hard fork has been confirmed for March 13, 2024, after which its effect on gas fees and TPS can be judged.

The most important part of the Cancun upgrade is the introduction of a new transaction type that carries Blobs.

Some features of Blob:

2 Blobs per transaction, 258kb

A block can have a maximum of 16 blobs, 2mb, but Ethereum has a gas fee baseline, and when it exceeds 1mb, this will cause the next block's fee rate to rise; the ideal state is 8 blobs, i.e., 1mb

Blob security is equivalent to L1, as it is also stored and updated by full nodes

Automatically deleted after 30 days

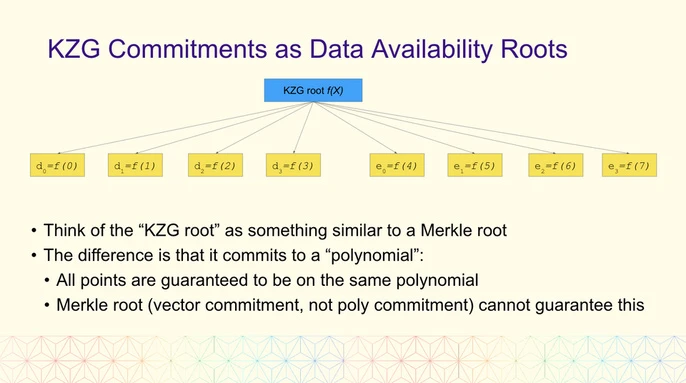

Blob uses KZG Hashmmitment as a hash for data verification, similar to Merkle. It can be understood as a proof of state download

Occupies relatively little network resources in terms of cache space, and through EIP-1559 (separating gas fees for different types of transactions), a relatively low gas fee has been set for Blob. Blob can be understood as attaching several cache packages to each block on Ethereum, storing transaction data for full node verification and challenge, and then disappearing after 30 days, finally transmitting a KSG proof that it has been verified and reached consensus.
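The separate Blob fee market can be sketched as follows. The constants and the `fake_exponential` helper follow the published EIP-4844 specification as understood at the time of writing (a target of 3 and a maximum of 6 blobs per block, which differs from the draft figures of 8 and 16 quoted above); treat them as assumptions and check them against the EIP before relying on them.

```python
GAS_PER_BLOB = 2 ** 17                          # 131072 blob gas per 128 KB blob
TARGET_BLOB_GAS_PER_BLOCK = 3 * GAS_PER_BLOB    # assumed target: 3 blobs
MAX_BLOB_GAS_PER_BLOCK = 6 * GAS_PER_BLOB       # assumed maximum: 6 blobs
MIN_BASE_FEE_PER_BLOB_GAS = 1                   # in wei
BLOB_BASE_FEE_UPDATE_FRACTION = 3338477


def fake_exponential(factor, numerator, denominator):
    """Integer approximation of factor * e**(numerator / denominator)."""
    i = 1
    output = 0
    accum = factor * denominator
    while accum > 0:
        output += accum
        accum = (accum * numerator) // (denominator * i)
        i += 1
    return output // denominator


def next_excess_blob_gas(parent_excess, parent_blob_gas_used):
    """Excess grows when a block uses more than the target and shrinks otherwise."""
    return max(0, parent_excess + parent_blob_gas_used - TARGET_BLOB_GAS_PER_BLOCK)


def blob_base_fee(excess_blob_gas):
    """Blob base fee rises exponentially with accumulated excess blob gas."""
    return fake_exponential(
        MIN_BASE_FEE_PER_BLOB_GAS, excess_blob_gas, BLOB_BASE_FEE_UPDATE_FRACTION
    )


# Ten consecutive full blocks push the fee up; empty blocks bring it back down.
excess = 0
for _ in range(10):
    excess = next_excess_blob_gas(excess, MAX_BLOB_GAS_PER_BLOCK)
fee_after_full_blocks = blob_base_fee(excess)
```

The design mirrors EIP-1559: blocks above the target raise the price for the next block, and blocks below it lower the price, so sustained demand is what makes Blob space expensive.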

Data source: https://etherscan.io/chart/blocksize

How to understand?

A block is currently about 150 KB, and a Blob is 128 KB. 8 blobs take up approximately 1 MB of space, expanding data capacity by 6 to 7 times. In addition, Blob data is priced through its own EIP-1559-style fee market, which lowers fees. The amount of transaction data to be uploaded per transaction decreases and the number of transactions per block increases, ultimately leading to an increase in TPS and a decrease in gas fees.
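The "6 to 7 times" figure follows directly from the numbers quoted in the text. A minimal check, using only those quoted figures (the variable names are illustrative):

```python
BLOB_KB = 128          # size of one Blob, as stated in the text
AVG_BLOCK_KB = 150     # current average block size, as stated in the text
TARGET_BLOBS = 8       # ideal number of blobs per block used in the text

blob_space_kb = TARGET_BLOBS * BLOB_KB        # total Blob space per block, in KB
expansion = blob_space_kb / AVG_BLOCK_KB      # Blob space relative to a block
```

With these inputs the Blob space is 1024 KB (1 MB), a little under seven times the size of an average block.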

- KZG Proofs (KZG Commitments)

A KZG commitment plays a role similar to a Merkle root (Merkle trees record the state of Ethereum) and is used to commit to transaction data.

KZG polynomial commitment, also known as the Kate polynomial commitment scheme, was published jointly by Kate, Zaverucha, and Goldberg. In a polynomial commitment scheme, the prover computes a commitment to a polynomial and can open it at any point. The scheme can prove that the value of the polynomial at a specific position is consistent with a claimed value.
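The algebra behind "opening at a point" can be illustrated without any elliptic curves: claiming p(z) = y is equivalent to saying that p(x) - y is divisible by (x - z). The sketch below only checks that identity over a toy prime field. It is not KZG itself, which hides the evaluation point inside a trusted setup and performs the check with pairings; the modulus and function names are assumptions.

```python
P = 2 ** 31 - 1  # hypothetical prime modulus for the toy field


def evaluate(coeffs, x):
    """Horner evaluation; coefficients are ordered lowest degree first."""
    acc = 0
    for c in reversed(coeffs):
        acc = (acc * x + c) % P
    return acc


def quotient(coeffs, z):
    """Return q such that p(x) - p(z) = q(x) * (x - z), via synthetic division."""
    q = [0] * (len(coeffs) - 1)
    carry = 0
    for i in range(len(coeffs) - 1, 0, -1):
        carry = (coeffs[i] + carry * z) % P
        q[i - 1] = carry
    return q


def check_opening(coeffs, z, y, q, s):
    """Check p(s) - y == q(s) * (s - z) at a single test point s."""
    return (evaluate(coeffs, s) - y) % P == evaluate(q, s) * (s - z) % P


p = [5, 0, 3, 2]             # p(x) = 2x^3 + 3x^2 + 5
z = 4
y = evaluate(p, z)           # claimed value of p at z
q = quotient(p, z)           # the "proof" is the quotient polynomial
ok = check_opening(p, z, y, q, s=123456)
bad = check_opening(p, z, y + 1, q, s=123456)
```

In real KZG the verifier never sees `p` or `q`, only constant-size commitments to them, which is why both the proof size and the verification time stay small.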

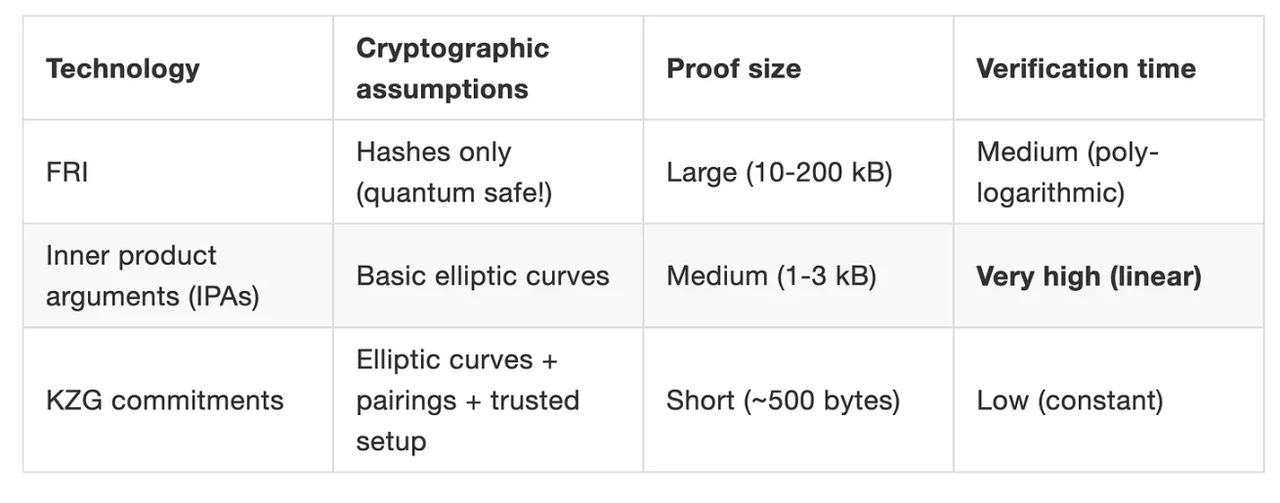

FRI is the polynomial commitment scheme used by StarkWare; it achieves post-quantum security but has the largest proof size. IPA is the default polynomial commitment scheme for the Bulletproofs and Halo2 zero-knowledge proof systems; it has relatively long verification times and is used in projects such as Monero and Zcash. KZG polynomial commitment has significant advantages in proof size and verification time, making it the most widely used polynomial commitment method.

2.4 Summary

The main change in the Rollup process before and after the upgrade is this: Rollups originally had to package and compress transactions and post them on-chain, where the transaction data took up a large amount of space. Now that data is represented by a KZG proof that takes up very little space and verifies very quickly.

2.5 Other Views

The following is from: https://twitter.com/0xNing0x/status/1758473103930482783

Will the gas fees for Ethereum L2 really decrease by more than 10 times after the Cancun upgrade?

There is currently a consensus in the market: after the Cancun upgrade, the average gas fees for Ethereum L2 will decrease by 10 times or even more.

After EIP-4844, the core proposal of the Cancun upgrade, is deployed, the Ethereum mainnet will add 3 Blob spaces specifically for storing L2 transaction and state data, and these Blobs will have an independent gas fee market. It is expected that the maximum amount of state data stored in 1 Blob space will be approximately equal to 1 mainnet block, i.e., ~1.77M.

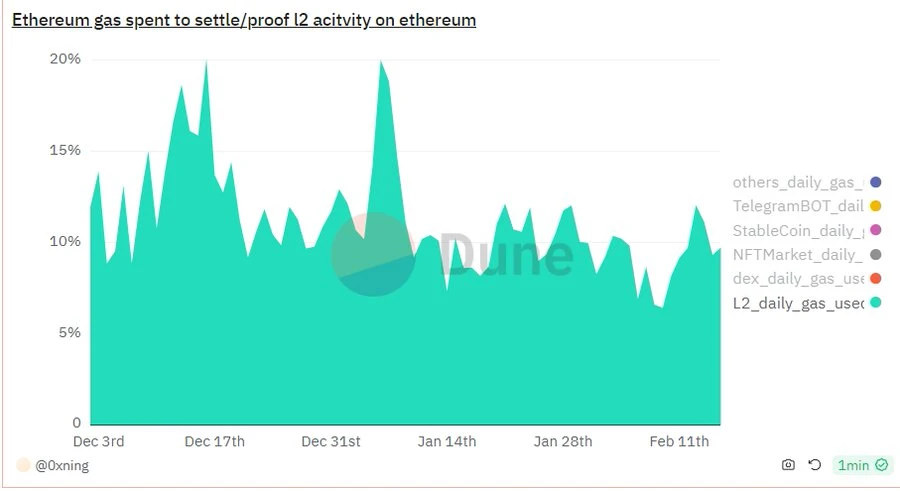

The daily gas consumption of the Ethereum mainnet is currently 107.9b, with the gas consumption of Rollup L2s accounting for ~10%.

According to the supply and demand curve of economics:

Price = Total demand / Total supply,

Assuming that after the Cancun upgrade, the total gas demand for Rollup L2s remains unchanged, and the block space that Ethereum can sell to L2s increases from the current ~10% of 1 block to 3 complete Blob blocks, this is equivalent to expanding the total supply of block space by 30 times, so the price of gas will decrease to 1/30 of the original.
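The linear estimate in the quoted thread can be reproduced in a few lines. The numbers are the thread's own assumptions (demand unchanged, supply growing from about 10% of one block to 3 Blob "blocks"); the function name is illustrative.

```python
def relative_price(total_demand, total_supply):
    """The thread's simplified model: price = total demand / total supply."""
    return total_demand / total_supply


demand = 1.0            # L2 gas demand, assumed unchanged by the upgrade
supply_before = 0.10    # ~10% of one mainnet block
supply_after = 3.0      # 3 full Blob spaces

supply_growth = supply_after / supply_before
price_ratio = relative_price(demand, supply_after) / relative_price(demand, supply_before)
```

Under this model supply grows 30 times and the price falls to 1/30 of its former level, which is exactly the linear conclusion the thread goes on to question.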

However, this conclusion is not reliable because it makes too many linear relationship assumptions and abstracts away too many detailed factors that should be included in the calculation and consideration, especially the impact of the competition and game strategies among Rollup L2s for Blob space.

The gas fee consumption of Rollup L2s mainly consists of the cost of saving data availability (state data storage fee) and the cost of data availability verification. Currently, the cost of saving data availability accounts for as much as ~90%.

After the Cancun upgrade, for Rollup L2s, the additional 3 Blob blocks are equivalent to adding 3 pieces of common land. According to Coase's theory of the commons, in a completely free competitive market environment for Ethereum's Blob space, it is highly probable that the leading Rollup L2s will abuse the Blob space. This can ensure their market position on one hand and squeeze the survival space of their competitors on the other.

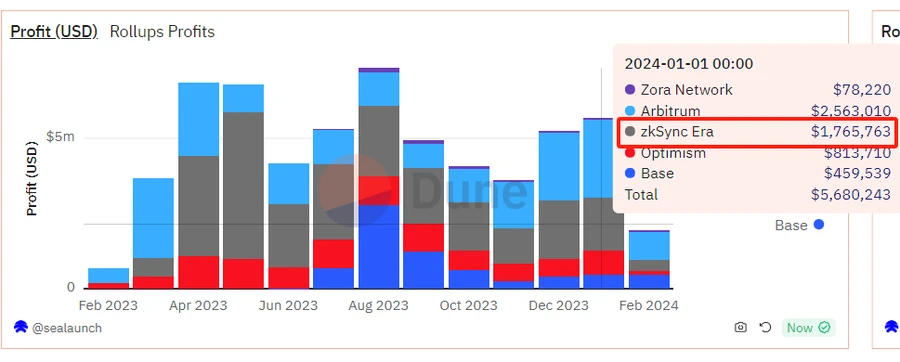

The following is the profit statistics for 5 Rollup L2s over 1 year, showing a clear seasonal variation in monthly profit scale, with no clear overall growth trend.

In such a market with a ceiling, Rollup L2s are in a highly tense zero-sum game state, fiercely competing for developers, funds, users, and Dapps. After the Cancun upgrade, they now have 3 additional Blob spaces to fiercely compete for.

In a market where "there is only so much meat, if someone eats more, you will eat less," it is very difficult for Rollup L2s to achieve the Pareto optimal ideal situation.

So, how will the leading Rollup L2s abuse Blob space?

Personal speculation: The leading Rollup L2s will modify the frequency of the Sequencer's Batches, shortening the current batch frequency from once every few minutes to approximately once every 12 seconds, to synchronize with the Ethereum mainnet block production speed. This will not only improve the fast confirmation of transactions on their own L2, but also occupy more Blob space to suppress competitors.

Under this competitive strategy, the gas fee consumption structure of Rollup L2s will see a significant increase in verification fees and batch fees. This will limit the positive impact of the additional Blob space on reducing L2 gas fees.

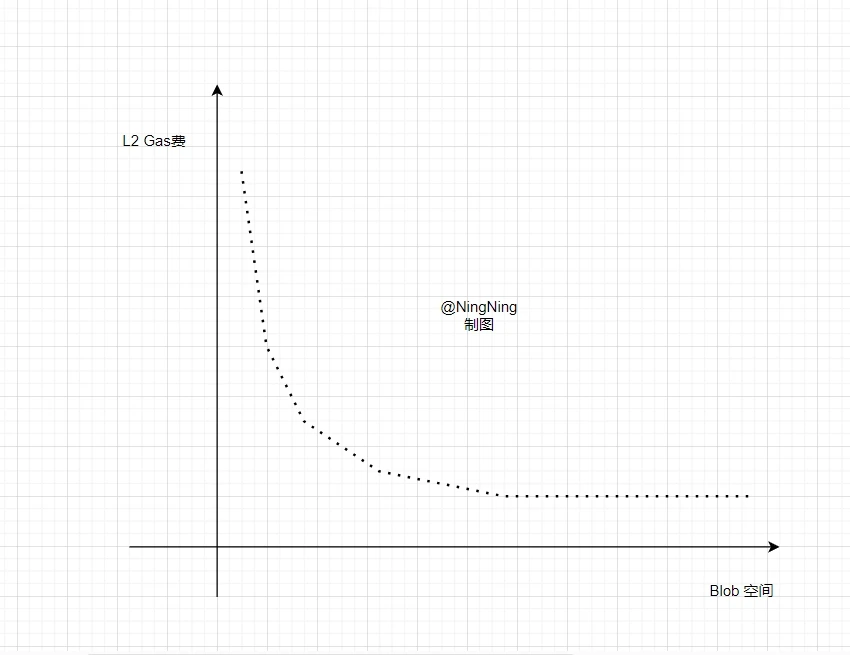

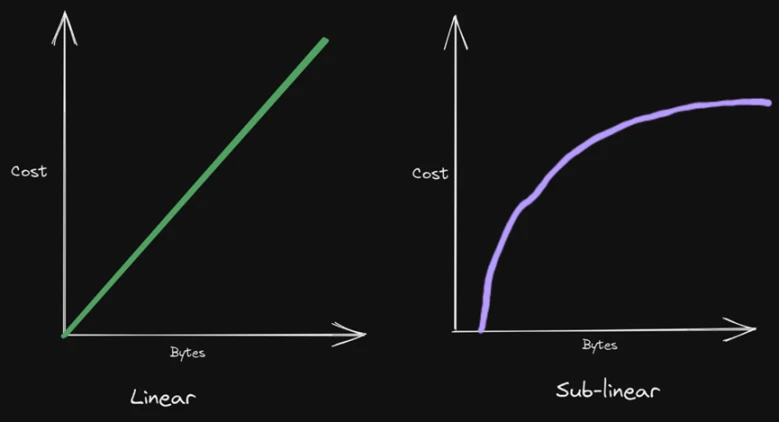

As shown in the above figure, when the increase in Blob space has a positive impact on reducing L2 gas fees, it will have diminishing marginal returns. Moreover, after reaching a certain threshold, it will almost become ineffective.

Based on the above analysis, it is speculated that the gas fees for Ethereum L2 will decrease after the Cancun upgrade, but the decrease will be less than the market's expectations.

That is all. This is just a starting point, and more discussion is welcome.

3. Celestia

Celestia provides an accessible data availability layer and consensus for other Layer1 and Layer2 solutions, built on the Cosmos Tendermint consensus and Cosmos SDK.

Celestia is a Layer1 protocol that is compatible with EVM chains and Cosmos application chains, and will support all types of Rollups in the future. These chains can directly use Celestia as a data availability layer, with block data being stored, called, and verified through Celestia before returning to their own protocol for settlement.

Celestia also supports native Rollups and can directly build Layer2 on it, but it does not support smart contracts, so it cannot directly build dApps.

3.1 Development History

Mustafa Al-Bassam - Co-founder and CEO, Bachelor's degree in Computer Science from King's College London, and a Ph.D. in Computer Science from University College London. Al-Bassam was a founding member and core member of the famous hacker group LulzSec at the age of 16, and has been involved in hacking activities for a long time. In August 2018, Al-Bassam co-founded the blockchain scalability research team Chainspace, which was acquired by Facebook in 2019.

In May 2019, the "LazyLedger" paper was published, and in September of the same year, LazyLedger (later renamed Celestia) was founded, with Al-Bassam serving as CEO to this day.

Seed round financing of $1.5 million was completed on March 3, 2021, with investors including Binance Labs.

On June 15, 2021, it was renamed Celestia and released a minimum viable product, a data availability sampling light client. The development network went live on December 14, 2021, and the Mamaki test network went live on May 25, 2022.

The Sovereign rollup solution Optimint was launched on August 3, 2022.

On October 19, 2022, a $55 million financing round was completed, led by Bain Capital and Polychain Capital, with participation from Placeholder, Galaxy, Delphi Digital, Blockchain Capital, Spartan Group, Jump Crypto, and others.

On December 15, 2022, the new development test network Arabica and the test network Mocha were launched.

On February 21, 2023, the modular Rollup framework Rollkit was launched.

The incentivized test network Blockspace Race was launched on February 28, 2023, and the new test network Oolong was launched on July 5, 2023.

The governance token TIA was released on September 26, 2023.

3.2 Components of Celestia

Celestia mainly consists of three components: Optimint, Celestia-app, and Celestia-node.

The Celestia-node component is responsible for consensus and networking for this blockchain. It determines how light nodes and full nodes generate new blocks, sample data from blocks, and synchronize new blocks and block headers.

Using Optimint, Cosmos Zones can be directly deployed on Celestia as Rollups. Rollups collect transactions into blocks, then publish them to Celestia for data availability and consensus. The chain's state machine is located in the Celestia application, which handles transaction processing and staking.

On Optimint, improvements will be made in terms of block synchronization, data availability layer integration, general tools, and indexed transactions. In the Celestia application, the team will focus on the implementation of transaction fees and evaluate upgrades to ABCI++. Finally, the team hopes to make its network services more robust on Celestia nodes and improve light nodes and fraud proofs.

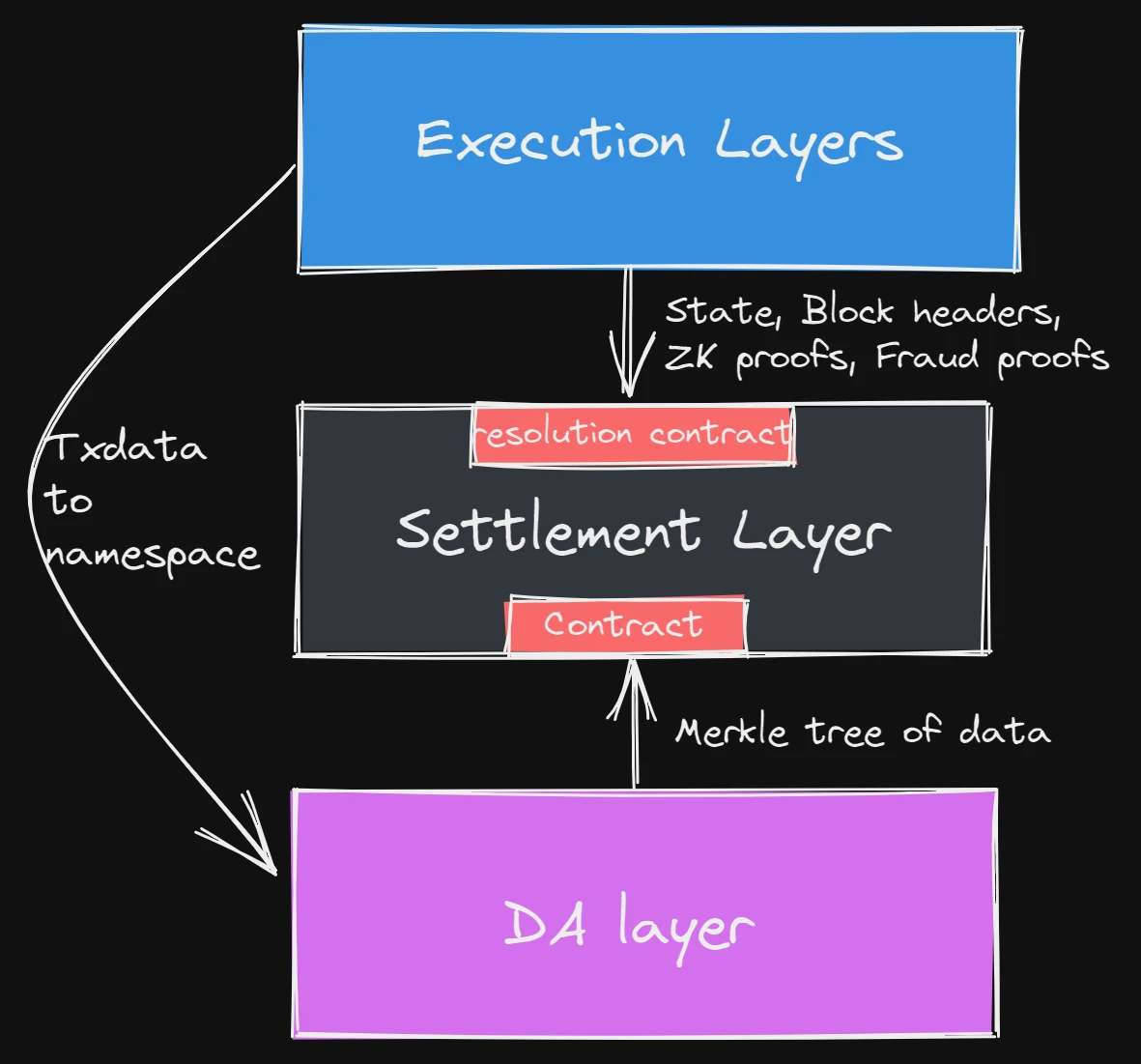

- How to interact with Rollup?

Celestia divides Rollups into its own native ones and those native to Ethereum. The former is very simple, as Rollups can directly interact with Celestia, first uploading data, then allowing Celestia to check data availability, and finally having Rollups verify the new state on their own. Throughout the process, Celestia remains quite idle, not even knowing what the data uploaded by Rollups represents (because it only has slice samples), and Rollups only turn to it for data availability because it is cheaper and more efficient.

Interaction example:

Consider a stack where the execution layer does not publish block data to the settlement layer, but publishes it directly to Celestia. In this case, the execution layer only needs to publish its block headers to the settlement layer, and the settlement layer then checks whether all the data for a block is included in the DA layer. This is done through a contract on the settlement layer, which receives the Merkle root of the transaction data from Celestia. This is what we call a data proof.

With Ethereum, the situation is a bit more complex. Ethereum currently has neither sharding nor DAS, but Rollups that cannot bear the on-chain processing costs can move data availability off-chain and have the industry's most well-known third-party institutions handle it, at costs low enough to be negligible. In fact, zkSync 2.0 is already doing this, as are StarkEx and Plasma, to name a few. Of course, from Celestia's perspective, off-chain verification is still centralized, and malicious behavior cannot be ruled out. That said, even if these institutions act maliciously, all these off-chain verifiers can do is freeze transactions for a period of time.

The plan for zkSync 2.0 is to give users more choices. If users can tolerate high costs, they can still put data availability on Ethereum. If users can accept the assumption that transactions may be frozen in the worst case, zkSync 2.0 can provide ultra-low gas fees.

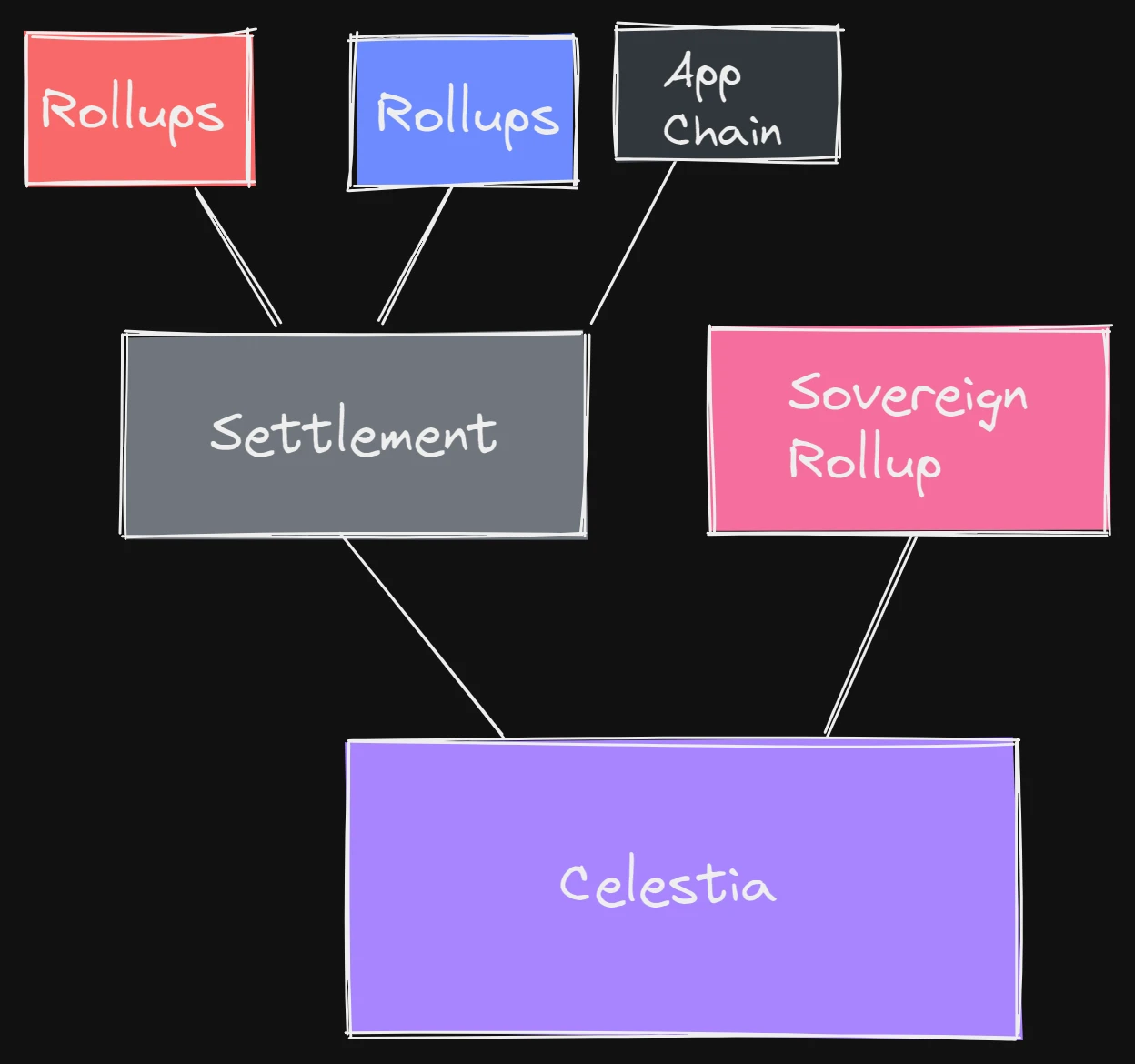

- Overall architecture

Celestia

Celestia will serve as a shared consensus and data availability layer for all types of rollups running in the modular stack. The existence of the settlement layer is to facilitate bridging and liquidity flow between the various rollups on top of it. It may also be possible to see sovereign rollups operating independently without a settlement layer.

3.3 How to Achieve Lightweight Node Verification While Ensuring Security

The two key functions of its DA layer are Data Availability Sampling (DAS) and Namespaced Merkle Trees (NMT).

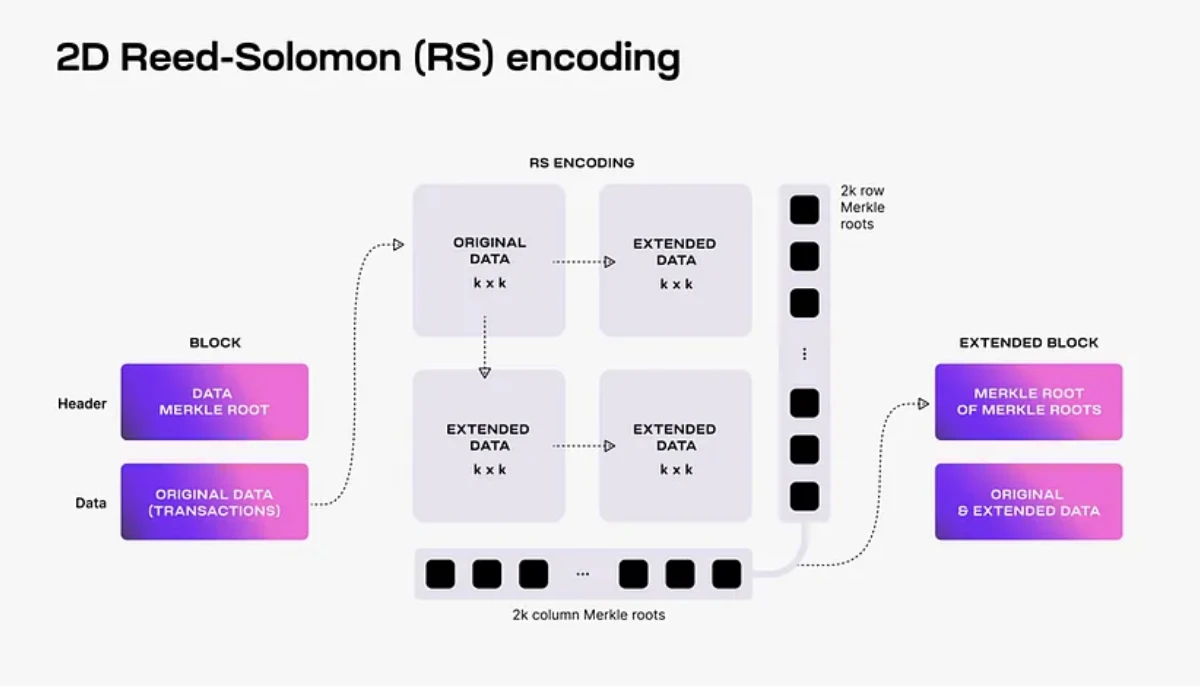

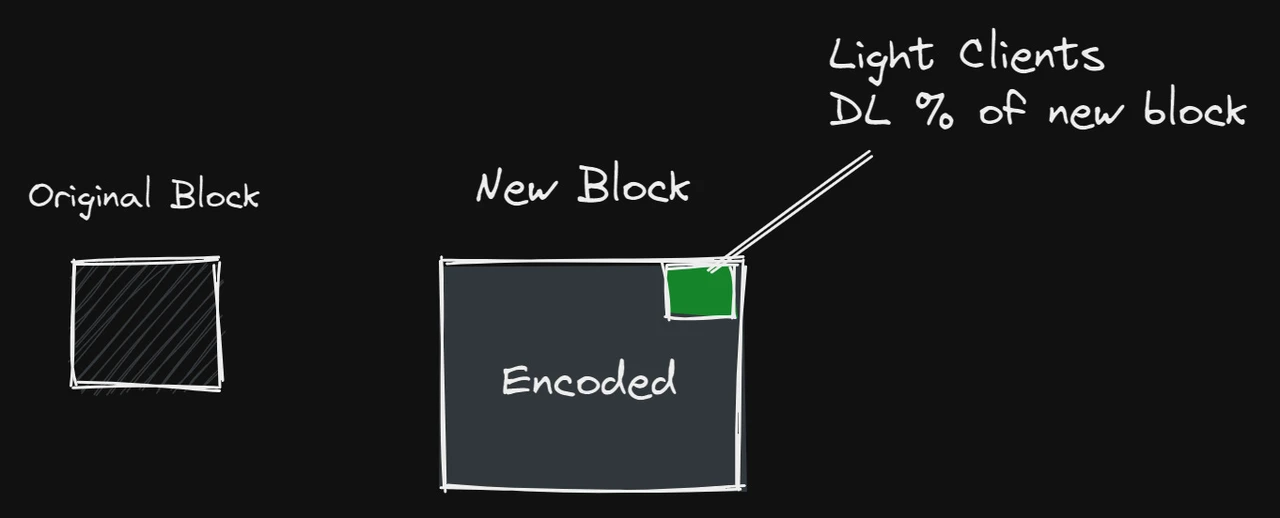

DAS allows lightweight nodes to verify data availability without downloading the entire block. Lightweight nodes download only block headers, so on their own they cannot verify data availability; Celestia therefore re-encodes block data with a 2-dimensional Reed-Solomon encoding scheme so that lightweight nodes can sample it. In DAS, a lightweight node samples a small portion of the block data over multiple rounds. As it completes more rounds of sampling, its confidence in data availability increases. Once it reaches a predetermined confidence level (e.g., 99%), the data is considered available.
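How confidence grows with the number of sampling rounds can be estimated with a short calculation. This sketch assumes the bound from the original fraud-proof analysis that an adversary must withhold at least (k+1)² of the (2k)² shares of a 2-dimensionally encoded block to prevent recovery, and it approximates sampling as independent draws; the function names and the choice k = 128 are illustrative.

```python
import math


def min_withheld_fraction(k):
    """Smallest fraction of the 2k x 2k square that must be hidden
    to make the block unrecoverable (assumed bound: (k+1)^2 shares)."""
    return (k + 1) ** 2 / (2 * k) ** 2


def confidence(samples, withheld_fraction):
    """Probability that at least one of `samples` independent draws
    hits a withheld share, i.e. that the withholding is detected."""
    return 1 - (1 - withheld_fraction) ** samples


def samples_needed(target, withheld_fraction):
    """Smallest number of samples whose detection probability reaches `target`."""
    return math.ceil(math.log(1 - target) / math.log(1 - withheld_fraction))


fraction = min_withheld_fraction(128)     # slightly above 1/4
needed = samples_needed(0.99, fraction)   # rounds required for 99% confidence
```

Under these assumptions a light node needs only on the order of tens of samples, independent of how large the block is.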

NMT allows the execution layer and settlement layer on Celestia to download only the transactions relevant to them. Celestia divides the data in blocks into multiple namespaces, with each namespace corresponding to applications such as rollups built on Celestia. Each application only needs to download data relevant to itself, thereby improving network efficiency.

Celestia enables lightweight nodes to take part in verification. As is well known, the more nodes a network has, the more secure it is; in Celestia, more lightweight nodes also mean higher network throughput and lower costs.

Through data availability sampling and erasure coding, Celestia can detect withheld (hidden) transaction data, which is also crucial for reducing costs.

3.3.1 Data Availability Sampling (DAS) and Erasure Coding

This technical solution addresses the issue of data availability verification, enabling lightweight nodes on Celestia to verify that block data is available.

It also makes Celestia's costs sublinear.

In general, lightweight nodes in a blockchain network only download block headers containing block data commitments (i.e., Merkle roots), making it impossible for lightweight nodes to know the actual content of the block data and thus unable to verify data availability.

However, after applying the 2-dimensional Reed-Solomon encoding scheme, it becomes possible to use lightweight nodes for data availability sampling:

First, the data of each block is divided into k × k chunks, arranged in a k × k matrix, and then, by applying RS erasure coding multiple times, this k × k matrix is expanded into a 2k × 2k matrix.

Celestia then computes 4k separate Merkle roots for the rows and columns of this 2k × 2k matrix and uses them as the block data commitments in the block header.

During the data availability verification process, lightweight nodes in Celestia sample the 2k × 2k data chunks. Each lightweight node randomly selects a set of unique coordinates in this matrix and asks full nodes for the chunk at each coordinate together with the corresponding Merkle proof. If the node receives a valid response to every sampling query, the block's data is available with high probability.

In addition, every chunk that arrives with a correct Merkle proof is propagated to the network, so as long as lightweight nodes collectively sample enough chunks (at least k × k unique chunks), honest full nodes can recover the complete block data.
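The extension step can be sketched with a toy Reed-Solomon code. This is an illustrative assumption, not Celestia's production codec: it works over the small prime field GF(257) and uses Lagrange interpolation, and all function names are hypothetical. It shows a k × k square being extended to 2k × 2k and a row being rebuilt from any k of its 2k entries.

```python
P = 257  # small prime field, chosen only for illustration

def lagrange_eval(points, x):
    """Evaluate at x the unique polynomial of degree < len(points) through points (mod P)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, -1, P)) % P
    return total

def extend(values):
    """Extend k values to 2k: positions 0..k-1 keep the data, k..2k-1 hold parity."""
    k = len(values)
    points = list(enumerate(values))
    return list(values) + [lagrange_eval(points, x) for x in range(k, 2 * k)]

def extend_2d(square):
    """Extend a k x k square to 2k x 2k: first every row, then every column."""
    k = len(square)
    rows = [extend(r) for r in square]                 # k rows, each 2k long
    cols = [extend([rows[r][c] for r in range(k)])     # 2k columns, each 2k long
            for c in range(2 * k)]
    return [[cols[c][r] for c in range(2 * k)] for r in range(2 * k)]

def recover(known, k):
    """Rebuild a full row or column of length 2k from any k known (position, value) pairs."""
    return [lagrange_eval(known[:k], x) for x in range(2 * k)]

extended = extend_2d([[1, 2], [3, 4]])                 # k = 2, so a 4 x 4 square
last_row = recover([(2, extended[3][2]), (3, extended[3][3])], k=2)
```

Because every row and every column of the extended square is itself a codeword, any row or column with at least k surviving entries can be repaired independently, which is what allows honest nodes to rebuild the block from collectively sampled chunks.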

2-dimensional Reed-Solomon encoding scheme

The implementation of data availability sampling ensures the scalability of Celestia as a data availability layer. Because each lightweight node only needs to sample a portion of the block data, it reduces the costs of lightweight nodes and the entire network operation. The more lightweight nodes participate in sampling, the more data they can collectively download and store, which means that the overall network's TPS will increase with the increase in the number of lightweight nodes.

- Achieving scalability through lightweight nodes

The more lightweight nodes participate in data availability sampling, the more data the network can handle. This scalability feature is crucial for maintaining efficiency as the network grows.

Two factors determine scalability: the amount of data that can be sampled collectively, and the target block header size for lightweight nodes, which directly affects the performance and scalability of the overall network.

For these two factors, Celestia uses the principle of collective sampling, i.e., by having many nodes participate in partial data sampling, it can support larger data blocks (i.e., higher transactions per second, TPS). This approach can expand network capacity without sacrificing security. In addition, in the Celestia system, the block header size of lightweight nodes grows proportionally to the square root of the block size. This means that to maintain almost the same level of security as full nodes, lightweight nodes will face bandwidth costs proportional to the square root of the block size.
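The square-root relationship follows from the 4k row and column roots described earlier: a block of k × k chunks needs 4k commitments in its header. A minimal sketch, assuming a hypothetical 32-byte root (the real commitment size may differ) and a hypothetical function name:

```python
import math

def header_commitment_bytes(num_chunks, root_bytes=32):
    """Bytes of row/column roots for a block of num_chunks = k * k original chunks.
    root_bytes is an assumed size, used only for illustration."""
    k = math.isqrt(num_chunks)
    if k * k != num_chunks:
        raise ValueError("num_chunks must be a perfect square (k * k)")
    return 4 * k * root_bytes  # 2k row roots + 2k column roots

small = header_commitment_bytes(64)    # k = 8
large = header_commitment_bytes(256)   # k = 16, four times the data
```

Quadrupling the block data (64 to 256 chunks) only doubles the commitment data a lightweight node has to download.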

On Ethereum, the cost of verifying Rollup blocks grows linearly, and it rises and falls with the demand for interaction with Ethereum.

The cost of Celestia is sublinear and will eventually level off at a value much lower than the existing cost of Ethereum. After the EIP-4844 upgrade is deployed, Rollup data storage will move from calldata to blobs, which reduces costs but will still be more expensive than Celestia.

In addition, the characteristics of erasure coding allow lightweight nodes to recover transaction data in the event of a large-scale failure of Celestia full nodes, ensuring that the data remains accessible.

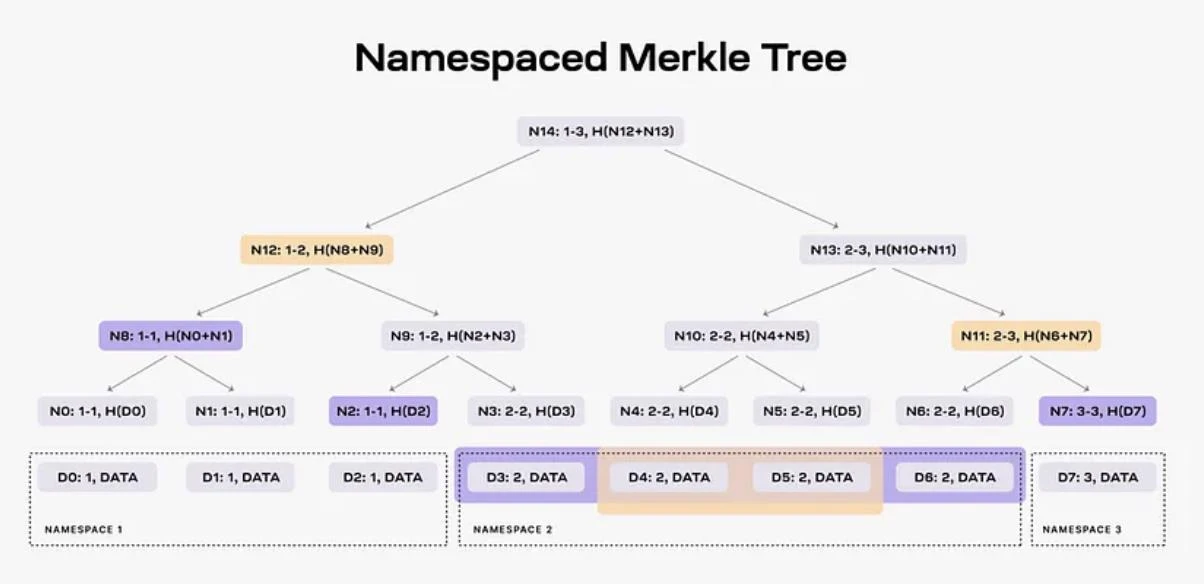

3.3.2 Namespaced Merkle Tree (NMT)

This technical solution reduces the costs of the execution layer and settlement layer.

Simply put, by sorting the Merkle trees in namespaces, Celestia ensures that any rollup on Celestia can only download data relevant to its chain, ignoring data from other rollups.

Namespaced Merkle Trees (NMT) allow rollup nodes to retrieve all the rollup data they query for without parsing the entire Celestia chain or the rollup chain. They also allow validator nodes to prove that all data has been correctly included in Celestia.

Celestia divides the data in blocks into multiple namespaces, with each namespace corresponding to the execution layer and settlement layer using Celestia as the data availability layer. This allows each execution layer and settlement layer to download only the data relevant to them to achieve network functionality. In simple terms, Celestia creates a separate folder for each user using it as the underlying layer and uses Merkle trees to index these folders to help users find and use their own files.

The Merkle tree that can return all data for a given namespace is called a Namespaced Merkle Tree. The leaves of this Merkle tree are sorted according to the namespace identifier, and the hash function is modified so that each node in the tree contains the namespace range of all its descendants.

Example of a Namespaced Merkle Tree

In the example of the Namespaced Merkle Tree, a Merkle tree containing eight data blocks is divided into three namespaces.

When data from namespace 2 is requested, the data availability layer, Celestia, will submit data blocks D3, D4, D5, and D6 to it and have nodes N2, N7, and N8 submit the corresponding proofs to ensure the data availability of the requested data. Additionally, applications can verify if they have received all the data from namespace 2, as the data blocks must correspond to the nodes' proofs, and they can identify the integrity of the data by checking the namespace range of the corresponding nodes.
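The idea can be sketched in a few lines. This is a simplified illustration rather than Celestia's actual NMT format: the hashing layout, the nested proof structure, and all function names are assumptions, and the 2/4/2 split of the eight chunks across namespaces 1, 2, and 3 is inferred from the example above. Each node carries the namespace range of its descendants, so a verifier can check both that the returned data is in the tree and that no skipped subtree could contain the requested namespace.

```python
import hashlib

def leaf(ns, data):
    """A node is (min_ns, max_ns, digest); a leaf covers a single namespace."""
    return (ns, ns, hashlib.sha256(b"\x00" + bytes([ns]) + data).digest())

def parent(left, right):
    """The parent hash commits to both children's namespace ranges."""
    payload = b"\x01" + bytes(left[:2]) + left[2] + bytes(right[:2]) + right[2]
    return (min(left[0], right[0]), max(left[1], right[1]),
            hashlib.sha256(payload).digest())

def root_of(leaves):
    """Root over a power-of-two list of (namespace, data) pairs sorted by namespace."""
    nodes = [leaf(ns, d) for ns, d in leaves]
    while len(nodes) > 1:
        nodes = [parent(nodes[i], nodes[i + 1]) for i in range(0, len(nodes), 2)]
    return nodes[0]

def prove(leaves, ns):
    """Return the data for ns plus the roots of every subtree that cannot contain ns."""
    node = root_of(leaves)
    if not node[0] <= ns <= node[1]:
        return ("skip", node)
    if len(leaves) == 1:
        return ("leaf", leaves[0][1])
    mid = len(leaves) // 2
    return ("branch", prove(leaves[:mid], ns), prove(leaves[mid:], ns))

def verify(proof, ns, out):
    """Recompute the root from a proof, collecting the namespace data into out."""
    if proof[0] == "leaf":
        out.append(proof[1])
        return leaf(ns, proof[1])
    if proof[0] == "skip":
        if proof[1][0] <= ns <= proof[1][1]:
            raise ValueError("skipped subtree may contain the requested namespace")
        return proof[1]
    return parent(verify(proof[1], ns, out), verify(proof[2], ns, out))

chunks = [(1, b"D1"), (1, b"D2"), (2, b"D3"), (2, b"D4"),
          (2, b"D5"), (2, b"D6"), (3, b"D7"), (3, b"D8")]
root = root_of(chunks)
received = []
recomputed = verify(prove(chunks, 2), 2, received)
```

If `recomputed` equals the committed `root`, the application knows that `received` holds exactly the chunks of namespace 2. A proof that tried to hide one of those chunks inside a skipped subtree would fail the namespace-range check.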

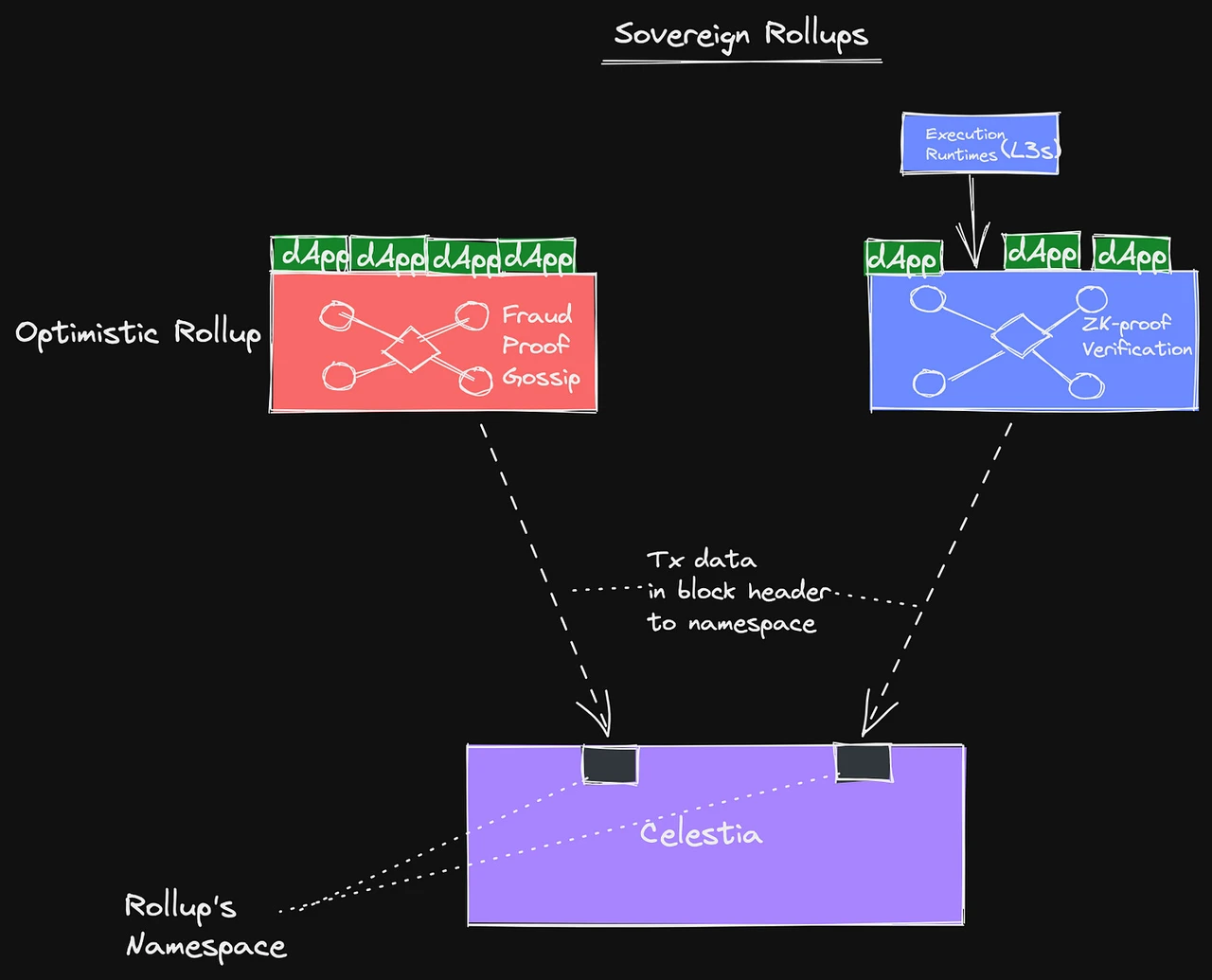

3.3.3 Sovereign Rollup

Based on Celestia, most Rollups can be considered Sovereign Rollups.

Definition: Rollups that only upload data to L1 (treating L1 as a database) are called Sovereign Rollups. Put differently, traditional Rollups are responsible only for execution, while settlement, consensus, and data availability are all handed over to L1. Sovereign Rollups handle both execution and settlement, while consensus and data availability are handled by L1.

Advantages - Upgrade freedom

Since there is no interaction or asset transfer with Layer1, Rollups are not affected by L1 (e.g., upgrades or attacks), and they do not need to worry about their own expansion and upgrades being affected by L1 (e.g., hard forks).

Disadvantages - Security cost

There is a higher security risk, for example, lazy behavior in the DA layer.

In addition, Sovereign Rollups have lower costs and adopt lightweight node verification (as discussed in the previous section).

For more details, refer to An introduction to sovereign rollups and Understanding the Classification of Rollups.

Smart Contract Rollup

Layer 2 solutions like Arbitrum, Optimism, and StarkNet are referred to as smart contract rollups. They publish all block data to the settlement layer (e.g., Ethereum) and write the state of L2 (balances of various addresses on L2) to L1. The task of the settlement layer is to order blocks, check data availability, and verify transaction correctness.

For example, in the Ethereum modular stack, smart contract rollups are responsible only for execution, and all other work (consensus, data availability, and settlement) is delegated to Ethereum.

This allows L2 and L1 to communicate and transfer assets: dApps on L1/L2 can synchronize messages and collaborate, ETH on L1 can safely circulate between L1/L2, and ARB/OP on L2 can also safely circulate between L1/L2.

Sovereign Rollup

Sovereign Rollup removes the Settlement Layer (or turns itself into the Settlement Layer) and simply uses L1 as a Data Availability Layer.

Sovereign rollups publish their transactions to another public chain, which is responsible for data availability and ordering, while the rollup handles settlement itself.

Sovereign rollups therefore depend on the correctness of their own chain rather than on the DA layer, and so require a higher level of trust.

Comparison

Different verification methods: Transactions in smart contract rollups are verified through the smart contracts of the settlement layer; transactions in Sovereign rollups are verified through their own nodes.

Upgrade sovereignty: The upgrade of smart contract rollups depends on the smart contracts of the settlement layer. Upgrading Rollups requires changing smart contracts (e.g., the upgrade of Arbitrum depends on the iteration of Ethereum smart contracts). It is common for teams to control upgrades through multisignatures, which imposes constraints.

Limited capabilities of L1: It is possible that L1 itself does not support complex operations to record Rollup states and enable message and asset interoperability. For example, on Celestia, only data can be simply uploaded, or on Bitcoin, only limited operations can be performed. In such cases, L1 cannot become a Settlement Layer. Perhaps the Rollup itself does not need another chain as a Settlement Layer, as it has its own native token and ecosystem and does not need to interoperate with L1 assets.

- Expansion - Sovereign Rollup Operation and the Convenience of Hard Fork Upgrades

Sovereign Rollup simply uses L1 as a Data Availability Layer, uploading data to L1 and relying on L1 to ensure data availability and the order of data.

Image Source: The Modular World

Sovereign Rollup nodes read data from L1 and interpret it to calculate the current state of the Sovereign Rollup (smart contract Rollups can directly obtain the state). "Interpret, calculate" represents the consensus rules of Sovereign Rollup (State Transition Function: how to select blocks and transactions that comply with the format and rules of the Sovereign Rollup from L1 data, how to verify these blocks and transactions after selection, and how to execute these transactions to calculate the latest state).

Sovereign Rollup nodes select their own blocks from L1 data, interpret and calculate the latest state.

If two Sovereign Rollup nodes run different versions, they may interpret the data differently or calculate different latest states. These two nodes will then not be on the same chain; each sees one of two forked chains (for example, the Ethereum hard fork that produced ETC and ETH).

Different versions of nodes may obtain different states and therefore fork onto different chains.

This is similar to running different versions of Ethereum nodes, where two versions may not be on the same chain. For example, after a hard fork, those who forget to update their node version or are unwilling to update their node version will naturally remain on the original chain (e.g., ETC, ETHPoW), while those who update their node version will be on the new chain (ETH).

Therefore, in Sovereign Rollup, everyone can choose their own node version and interpret data according to their own community consensus. If there is a division in the Sovereign Rollup community, similar to ETHPoW vs. ETH, everyone will go their separate ways, choose different node versions to interpret the data, but the data remains the same and has not changed.

After the fork, nodes of each version will upload data that complies with their own rules to L1, and at that time, both sides will directly filter out the data uploaded by the other side.
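The fork behaviour described above can be sketched as two nodes reading the same ordered L1 data but applying different validity rules. Everything here is hypothetical and for illustration only: the blob format, the `rules` tag, the fork height, and the toy balance-based state transition function; the version numbers 1.1.1 and 1.1.2 are likewise illustrative.

```python
FORK_HEIGHT = 2  # hypothetical L1 position at which the upgraded rules take effect

def derive_state(l1_blobs, is_valid):
    """Replay ordered L1 data, skipping blobs this node's rules reject."""
    balances = {}
    for height, blob in enumerate(l1_blobs):
        if not is_valid(height, blob):
            continue  # data stays on L1, but this node filters it out
        account, delta = blob["tx"]
        balances[account] = balances.get(account, 0) + delta
    return balances

def old_rules(height, blob):
    """Node that never upgraded: only accepts blobs following version 1.1.1."""
    return blob["rules"] == "1.1.1"

def new_rules(height, blob):
    """Upgraded node: 1.1.1 before the fork height, 1.1.2 from then on."""
    return blob["rules"] == ("1.1.1" if height < FORK_HEIGHT else "1.1.2")

l1_blobs = [
    {"rules": "1.1.1", "tx": ("alice", 10)},
    {"rules": "1.1.1", "tx": ("bob", 5)},
    {"rules": "1.1.2", "tx": ("alice", -3)},  # posted by the upgraded side
    {"rules": "1.1.1", "tx": ("bob", 1)},     # posted by the side that did not upgrade
]

state_old = derive_state(l1_blobs, old_rules)
state_new = derive_state(l1_blobs, new_rules)
```

Both nodes read identical L1 data, yet they end up with different balances, which is exactly the sense in which the L1 data "remains the same" while the two communities follow separate chains.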

At a certain point in time, the nodes below forked into version 1.1.2, and thereafter, their blocks completely diverged.

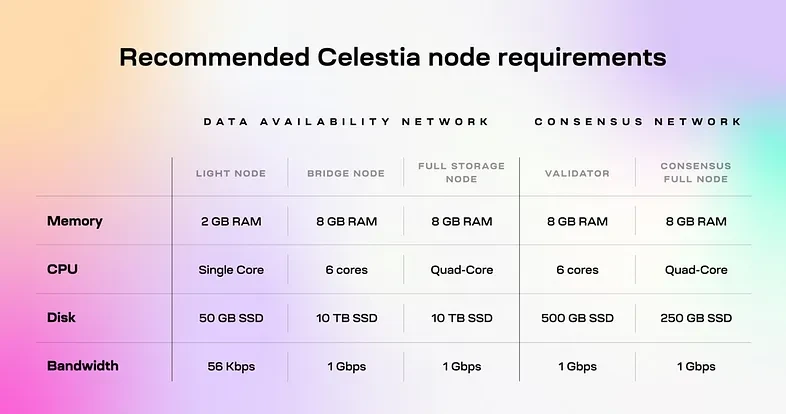

3.3.4 Node Requirements

Benefiting from Celestia's network architecture, the hardware requirements for operating a lightweight node are relatively low, requiring a minimum of 2GB of RAM, a single-core CPU, an SSD hard drive of over 25GB, and a bandwidth of 56 Kbps for upload and download. In addition to lightweight nodes, the requirements for bridge nodes, full nodes, validation nodes, and consensus nodes in Celestia are not particularly high compared to other public chains. Therefore, it is expected that the number of various types of nodes on the Celestia mainnet will increase further, and the decentralization of the network will be further enhanced.

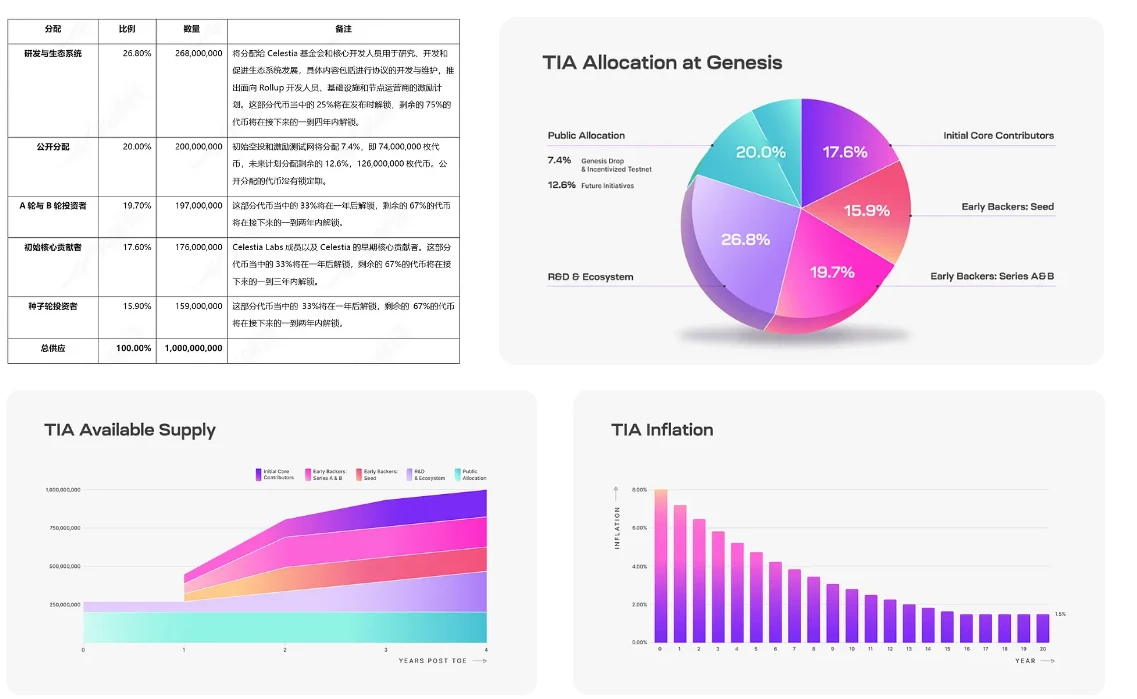

3.4 Economic Model

From the perspective of token economics, over half of Celestia's tokens will be allocated to investors and the team, and 33% of these tokens will be unlocked after one year.

The token demand of Celestia is basically in line with the design concept of a normal public chain token. TIA will bear the functions of consensus, fees, and governance, while being issued in the form of inflation.

At present, this token design seems to be relatively neutral, and the token itself cannot provide more empowerment to the network.

3.5 Business Model

Celestia mainly generates revenue in two ways:

Blob space fees: Rollups pay in TIA to publish data to Celestia's blob space.

Gas fees: Developers can use TIA as the gas token for their Rollups, similar to ETH for Ethereum-based Rollups.

3.6 Data Cost Comparison

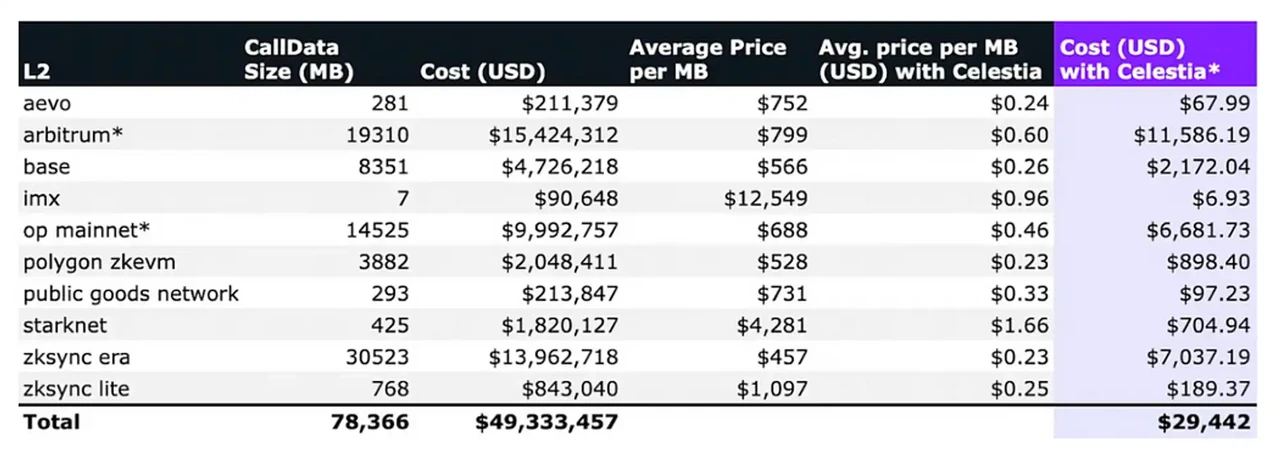

Numia Data recently released a report titled "The impact of Celestia’s modular DA layer on Ethereum L2s: a first look," comparing the costs incurred by different Layer 2 (L2) solutions on Ethereum for publishing CallData over the past six months, as well as the potential costs if they were to use Celestia as the Data Availability (DA) layer (in this calculation, assuming the TIA price is $12). This report, by comparing the cost differences in these two scenarios, clearly demonstrates the significant economic benefits of specialized DA layers like Celestia in reducing L2 Gas fees.

Rollup Cost Breakdown:

Fixed Costs

- Proof costs (in the case of zk rollups) = Gas range, typically based on the rollup provider

- State write costs = 20,000 Gas

- Ethereum base transaction costs = 21,000 Gas

Variable Costs

- Gas cost of publishing calldata to Ethereum for each transaction = (16 Gas per byte of data) * (average transaction size in bytes)

- L2 Gas fees = typically quite cheap, within a small range in Gas units
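The breakdown above can be turned into a small calculator. This is a minimal sketch under stated assumptions: the fixed costs are treated as paid once per batch posted to Ethereum and shared by the transactions in that batch (the article does not say how they are amortized), every calldata byte is charged the 16 Gas quoted above, `proof_gas` is a placeholder because the proof cost depends on the rollup provider, and L2 Gas fees are left out. The function names are hypothetical.

```python
STATE_WRITE_GAS = 20_000       # state write cost from the breakdown above
BASE_TX_GAS = 21_000           # Ethereum base transaction cost
CALLDATA_GAS_PER_BYTE = 16     # per byte of published calldata

def batch_gas(num_txs, avg_tx_bytes, proof_gas=0):
    """Total L1 Gas for one batch: fixed costs plus calldata for every transaction."""
    fixed = proof_gas + STATE_WRITE_GAS + BASE_TX_GAS
    variable = CALLDATA_GAS_PER_BYTE * avg_tx_bytes * num_txs
    return fixed + variable

def gas_per_tx(num_txs, avg_tx_bytes, proof_gas=0):
    """Average L1 Gas attributable to a single rollup transaction in the batch."""
    return batch_gas(num_txs, avg_tx_bytes, proof_gas) / num_txs

example_total = batch_gas(num_txs=100, avg_tx_bytes=120)      # 233,000 Gas
example_per_tx = gas_per_tx(num_txs=100, avg_tx_bytes=120)    # 2,330 Gas
```

In this example the calldata term (192,000 Gas) dominates the fixed costs (41,000 Gas), which illustrates why moving data publication to a cheaper DA layer has such a large effect on L2 fees.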

4. Other

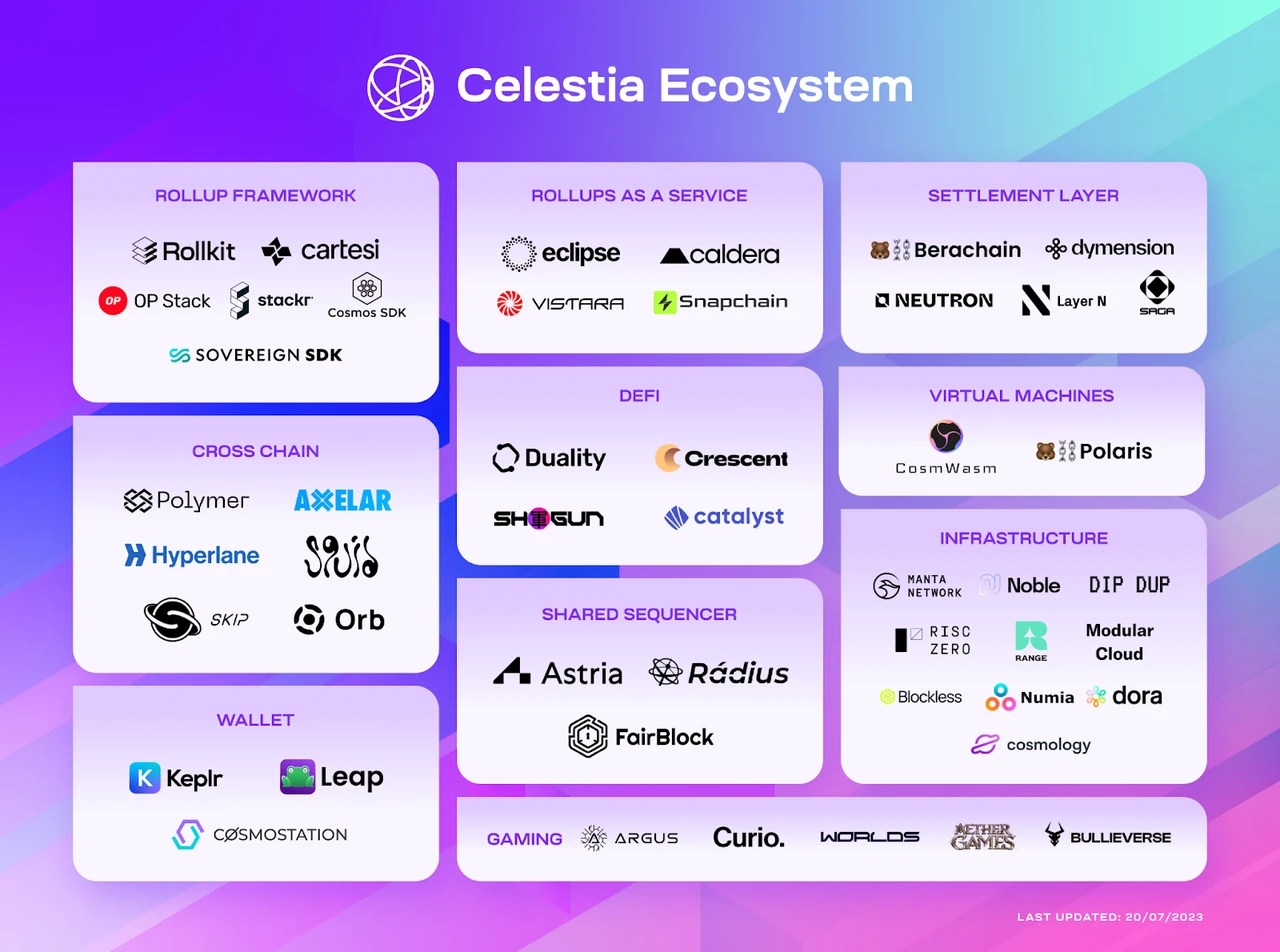

4.1 Celestia Ecosystem

4.2 Composition of Different Projects

- Celestia = Tendermint (cosmos) + 2D erasure code + Fraud proof + Namespace merkle tree + IPFS infrastructure (IPFS Blockstore for data storage, IPFS Libp2p and bitswap for transmission network, IPFS Ipld for data model)

- Polygon Avail = Substrate (Polkadot) + 2D erasure code + KZG polynomial commitment + IPFS infrastructure

- ETH Proto-Danksharding = Blobs data (storage for data availability, replacing existing calldata) + 2D erasure code + KZG polynomial commitment (undecided, the plan is still under discussion) + ETH infrastructure

5. Reflection

Overall, Celestia appears to be very focused on scalability, interoperability, and flexibility. Ethereum, like a large public company, cannot be as free-spirited as a startup like Celestia and can only iterate slowly and cautiously. Modular blockchain, however, provides many niche projects with the opportunity to rise to the top.

However, as with many public chains, Celestia faces a crypto world in which innovation is currently stagnating. The vast majority of projects rely on economic models, and there may not be enough demand for underlying product performance, leading to insufficient interest in Celestia. If its own economic model fails to create a positive feedback loop, it may ultimately become a ghost chain.

Appendix

https://celestia.org/learn/sovereign-rollups/an-introduction/

About E2M Research

From the Earth to the Moon

E2M Research focuses on research and learning in the fields of investment and digital currency.

Article Collection: https://mirror.xyz/0x80894DE3D9110De7fd55885C83DeB3622503D13B

Follow on Twitter: https://twitter.com/E2mResearch

Audio Podcast: https://e2m-research.castos.com/

Xiaoyuzhou Link: https://www.xiaoyuzhoufm.com/podcast/6499969a932f350aae20ec6d

DC Link: https://discord.gg/WSQBFmP772
