Background

Blockchain's functionality is becoming increasingly complex, leading to greater storage space requirements.

Author: Kernel Ventures Jerry Luo

TL;DR

Early public chains required every node in the network to keep a consistent copy of the data in order to guarantee security and decentralization. As the blockchain ecosystem has grown, however, rising storage pressure has pushed node operation toward centralization. At the current stage, Layer1 chains urgently need to address the storage costs that come with higher TPS.

To address this, developers need new historical data storage solutions that balance security, storage cost, data retrieval speed, and the universality of the DA layer.

Many new technologies and approaches have emerged in response, including Sharding, DAS, Verkle Trees, and general DA middleware. Each aims to optimize DA layer storage by reducing data redundancy and improving data verification efficiency.

Current DA solutions fall broadly into two categories based on where data is stored: main chain DA and third-party DA. Main chain DA approaches reduce node storage pressure by periodically pruning data or by storing data in shards. Third-party DA, by contrast, is designed to provide storage as a service and to handle large volumes of data. Because these designs trade single-chain compatibility against multi-chain compatibility, three third-party solutions have been proposed: main chain-specific DA, modular DA, and storage public chain DA.

Payment-oriented public chains place very high demands on the security of historical data and are well suited to using the main chain as the DA layer. However, for public chains that have been running for a long time and already have a large number of miners, a secure third-party DA that does not require changes to the consensus layer is more suitable. General-purpose public chains are better suited to main chain-specific DA storage, which offers larger data capacity and lower cost while maintaining security. If cross-chain functionality is required, modular DA is also a good option.

Overall, blockchain is moving towards reducing data redundancy and multi-chain division of labor.

2. DA Performance Metrics

2.1 Security

In comparison to database or linked list storage structures, the immutability of blockchain comes from the ability to verify new data through historical data, making it essential to ensure the security of historical data storage in the DA layer. When evaluating the data security of a blockchain system, we typically analyze the redundancy of data and the method of data availability verification.

Redundancy: Data redundancy serves several purposes in a blockchain system. Higher redundancy gives validators a larger sample of data to reference when they check the account state in a historical block while validating a current transaction. A traditional database, by contrast, stores data as key-value pairs on a single node, so altering historical data only requires compromising that one node, which makes it vulnerable to low-cost attacks. In theory, higher redundancy and more storing nodes make the data more trustworthy and less likely to be lost. However, excessive redundancy places heavy storage pressure on the system, so a good DA layer should choose a redundancy scheme that balances security and storage efficiency.
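To make the redundancy trade-off concrete, the sketch below assumes, purely for illustration, that each node holding a copy loses it independently with the same probability p in a given period. Under that assumption, data is lost only if all r copies fail, so the loss probability is p^r, while total storage grows linearly with r. Real node failures are correlated (shared hosting, regions, coordinated attacks), so these figures are optimistic; the value of p used here is hypothetical.

```python
def loss_probability(replicas: int, node_failure_prob: float) -> float:
    """Chance that every copy is lost, assuming independent node failures."""
    if replicas < 1:
        raise ValueError("need at least one replica")
    return node_failure_prob ** replicas


def redundancy_table(data_gb: float, node_failure_prob: float, replica_counts):
    """Pair each replica count with its total storage (GB) and loss probability."""
    return [
        (r, data_gb * r, loss_probability(r, node_failure_prob))
        for r in replica_counts
    ]


if __name__ == "__main__":
    # Hypothetical inputs: 1 GB of data, 10% chance a given node loses its copy.
    for r, storage, p_loss in redundancy_table(1.0, 0.1, [1, 2, 4, 8]):
        print(f"replicas={r:>2}  storage={storage:>4.0f} GB  loss~{p_loss:.0e}")
```

Each doubling of r doubles the storage bill but squares the loss probability, which is why a DA layer looks for the smallest redundancy that still meets its security target.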

Data Availability Verification: Blockchains commonly verify data with cryptographic commitment schemes, in which the whole network retains only a small commitment to the data. To verify a piece of historical data, a verifier recomputes the commitment from the data (together with a short proof) and checks it against the commitment recorded by the network. Common commitment structures include the Merkle Root and the Verkle Root. A good data availability verification scheme requires little verification data and can verify historical data quickly.

2.2 Storage Cost

After basic security is ensured, the next core goal of the DA layer is to reduce cost and increase efficiency. The first step is to reduce storage cost, that is, to minimize the storage footprint of each unit of data. Current methods for cutting storage cost in blockchains mainly rely on sharding and on incentive-based storage, which keep data reliably stored while reducing the number of backups. These methods make it clear that there is a trade-off between storage cost and data security, so a good DA layer must balance the two. In addition, if the DA layer is a standalone public chain, it should minimize the intermediate steps in data exchange, because each intermediate step leaves index data for later queries and adds to storage cost. Finally, storage cost is directly linked to data persistence: in general, the higher the storage cost, the harder it is for a public chain to store data persistently.

2.3 Data Retrieval Speed

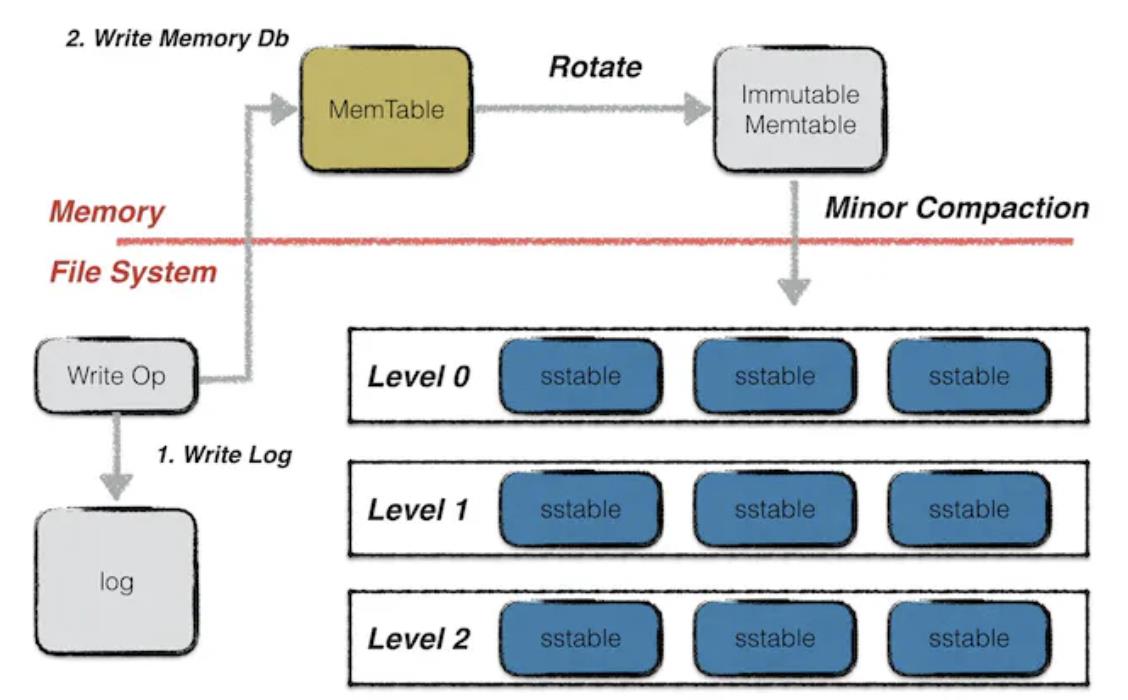

After reducing cost, the next step is to increase efficiency, meaning the ability to retrieve data quickly from the DA layer when it is needed. Retrieval involves two steps: locating the nodes that store the data and fetching the data from them. In systems where not every node holds a full copy of the data, locating the storing nodes is a significant cost. In mainstream blockchain systems such as Bitcoin, Ethereum, and Filecoin, data is stored in the LevelDB database. Retrieval speed is affected both by how data is stored and, in systems that use sharded storage, by the restoration step: a node must send requests to multiple nodes and reassemble the original data, which slows retrieval.
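The cost of the restoration step can be sketched as follows. Assuming requests to all storing nodes go out in parallel and that any `needed` responses suffice (as with erasure-coded shards), the fetch finishes when the `needed`-th fastest node replies, and decoding adds further time, whereas a full replica finishes after a single reply. The latencies and decode cost below are made-up illustration values, not measurements.

```python
def full_copy_retrieval_ms(latency_ms: float) -> float:
    """One node holds the whole object: a single round trip suffices."""
    return latency_ms


def sharded_retrieval_ms(latencies_ms, needed: int, decode_ms: float) -> float:
    """Parallel requests; wait for the `needed`-th fastest reply, then decode."""
    if not 1 <= needed <= len(latencies_ms):
        raise ValueError("not enough storing nodes to restore the data")
    return sorted(latencies_ms)[needed - 1] + decode_ms


if __name__ == "__main__":
    # Hypothetical per-node round-trip times in milliseconds.
    latencies = [35, 80, 42, 120, 55, 60, 200, 48]
    print(full_copy_retrieval_ms(min(latencies)))    # 35
    print(sharded_retrieval_ms(latencies, 4, 15.0))  # 55 + 15 = 70.0
```

The sharded path is bounded by a slower responder plus decoding, which is the slowdown the paragraph above describes.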

LevelDB data storage method, image source: Leveldb-handbook

2.4 Universality of the DA Layer

With the growth of DeFi and the various problems with centralized exchanges (CEX), demand for decentralized cross-chain asset transactions keeps rising. Whichever cross-chain mechanism is used, whether hash locks, notaries, or relay chains, historical data must be confirmed on two chains at once. The crux of the problem is that the data of the two chains are isolated, so two independent decentralized systems cannot communicate directly. One solution proposed at the current stage changes how the DA layer stores data: the historical data of multiple public chains is stored on a single trusted public chain, and verification only needs to query that chain. This requires the DA layer to establish secure communication with many different types of public chains, that is, the DA layer must have good universality.

3. Exploration of DA-Related Technologies

3.1 Sharding

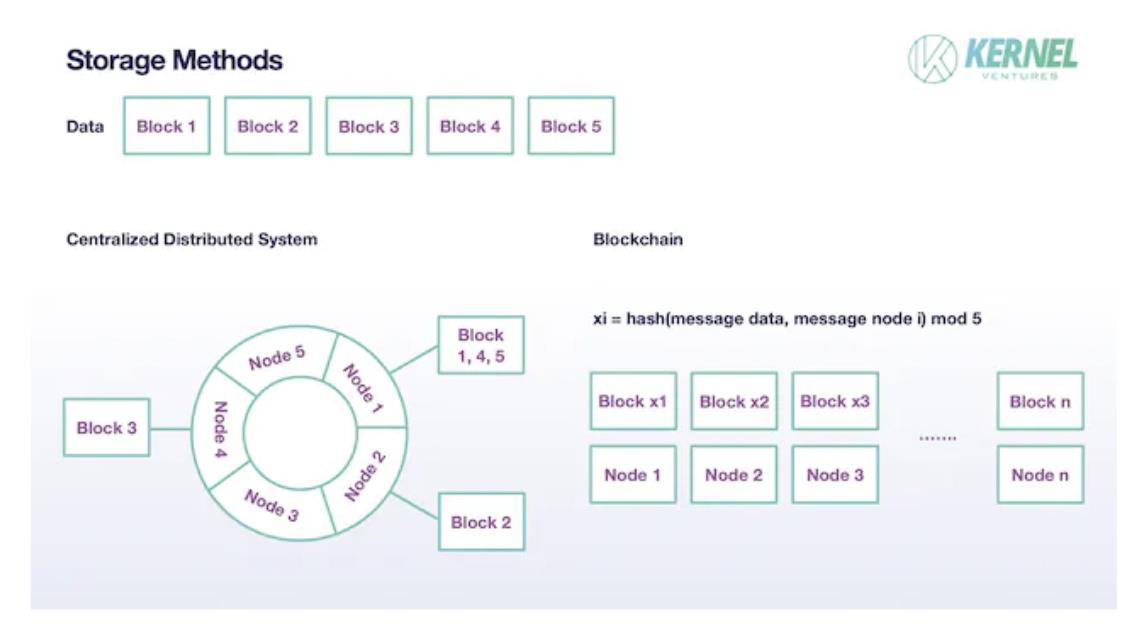

In traditional distributed systems, a file is not stored whole on a single node; it is divided into multiple blocks that are spread across nodes. Each block is also backed up on other nodes rather than held by only one. In mainstream distributed systems, the number of backups is usually set to 2. This sharding mechanism reduces the storage pressure on individual nodes, expands the total capacity of the system to the sum of the nodes' storage capacities, and preserves storage security through appropriate data redundancy. Sharding in blockchain follows the same general idea but differs in its details. First, because blockchain nodes are untrusted by default, far more backups are needed so that the authenticity of data can be judged later, and the number of backups must far exceed 2. Ideally, in a blockchain system using this approach, if the total number of validating nodes is T and the number of shards is N, each shard should have T/N backups. Second, the way blocks are assigned differs. Traditional distributed systems have fewer nodes, so one node often stores multiple data blocks: data is mapped onto a hash ring with a consistent hashing algorithm, each node stores the blocks that fall within a certain range, and it is acceptable for a node to receive no storage task in a given round. In blockchain, by contrast, every node is always assigned a block; only which block it receives is random. Each node hashes the block's original data together with its own node information and takes the remainder of the result to choose the block it stores. If each piece of data is divided into N blocks, each node stores only 1/N of the original size. By choosing N appropriately, a balance can be struck between higher TPS and node storage pressure.
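A minimal sketch of the assignment rule described above: each node hashes the data together with its own identifier and reduces the digest modulo N to pick the block it stores. SHA-256, the string node identifiers, the equal-size split, and the node and shard counts are assumptions for illustration; a real protocol also has to make this randomness unpredictable and unbiasable by the node itself.

```python
import hashlib
from collections import Counter


def split_into_blocks(data: bytes, n: int) -> list[bytes]:
    """Cut data into n roughly equal blocks (the last one may be shorter)."""
    size = -(-len(data) // n)  # ceiling division
    return [data[i * size:(i + 1) * size] for i in range(n)]


def assign_block(data: bytes, node_id: str, n: int) -> int:
    """Every node gets exactly one block; which one is derived from a hash."""
    digest = hashlib.sha256(data + node_id.encode()).digest()
    return int.from_bytes(digest, "big") % n


if __name__ == "__main__":
    data = bytes(range(256)) * 64          # 16 KiB toy payload
    N, T = 8, 800                          # hypothetical shard and node counts
    blocks = split_into_blocks(data, N)
    holders = Counter(assign_block(data, f"node-{i}", N) for i in range(T))
    print(len(blocks[0]) / len(data))      # each node stores 1/N of the data: 0.125
    print(sorted(holders.items()))         # roughly T/N = 100 holders per block
```

Because the assignment is a deterministic function of public inputs, anyone can recompute which node should hold which block and audit it.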

Data storage method after sharding, image source: Kernel Ventures

3.2 DAS (Data Availability Sampling)

DAS further optimizes the sharding-based storage method. Because nodes in sharding simply pick blocks at random, some blocks may end up lost. In addition, the authenticity and integrity of the data must be confirmed when sharded data is restored. DAS addresses these two issues with erasure codes and KZG polynomial commitments.

Erasure code: Given the large number of validating nodes in Ethereum, the probability that a block is stored by no node is almost zero, but in theory this extreme case can still occur. To mitigate the risk of data loss, this approach does not split the original data directly into blocks for storage. Instead, it maps the n pieces of original data to the coefficients of a polynomial of degree n-1, evaluates that polynomial at 2n points, and lets nodes randomly select one point to store. Because a polynomial of degree n-1 is uniquely determined by any n of its points, the data can be restored as long as any half of the 2n blocks are stored. Erasure coding thus strengthens storage security and the network's ability to recover data.
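The sketch below illustrates this erasure-coding idea over a small prime field. One deliberate simplification: instead of treating the n chunks as the polynomial's coefficients, it treats them as the polynomial's values at x = 0..n-1 (the equivalent "evaluation form"). Either way the chunks determine a unique polynomial of degree n-1, which is then evaluated at 2n points, and any n of those points recover it. The field modulus and the use of plain Lagrange interpolation are assumptions chosen for readability, not Ethereum's production parameters.

```python
P = 2**61 - 1  # a Mersenne prime; all arithmetic below is modulo P


def lagrange_eval(points, x):
    """Evaluate at x the unique polynomial of degree < len(points) through `points`."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def encode(chunks):
    """Extend n chunks (values at x = 0..n-1) to 2n points of the same polynomial."""
    base = list(enumerate(chunks))
    return [(x, lagrange_eval(base, x)) for x in range(2 * len(chunks))]


def decode(points, n):
    """Recover the n original chunks from any n distinct coded points."""
    if len(points) < n:
        raise ValueError("need at least n points")
    subset = points[:n]
    return [lagrange_eval(subset, x) for x in range(n)]


if __name__ == "__main__":
    chunks = [5, 17, 42, 99]               # toy data, each value < P
    coded = encode(chunks)                 # 8 points; any 4 suffice
    print(decode(coded[4:], 4))            # parity half only: [5, 17, 42, 99]
    print(decode([coded[i] for i in (1, 3, 6, 7)], 4))
```

Since the first n coded points equal the original chunks, nodes that happen to hold them serve data directly, and the extra n points exist only for recovery.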

KZG polynomial commitment: Verifying data authenticity is a crucial part of data storage. A network that does not use erasure codes can choose among various verification methods, but once erasure codes are introduced to strengthen data security, KZG polynomial commitments are a natural fit. A KZG commitment can verify the content of a single block directly in polynomial form, without first restoring the polynomial to binary data. Verification resembles a Merkle Tree proof but does not need the intermediate path node data: only the commitment (the "KZG Root"), the block data, and a constant-size opening proof are required to check the block's authenticity.

3.3 Data Verification Methods in the DA Layer

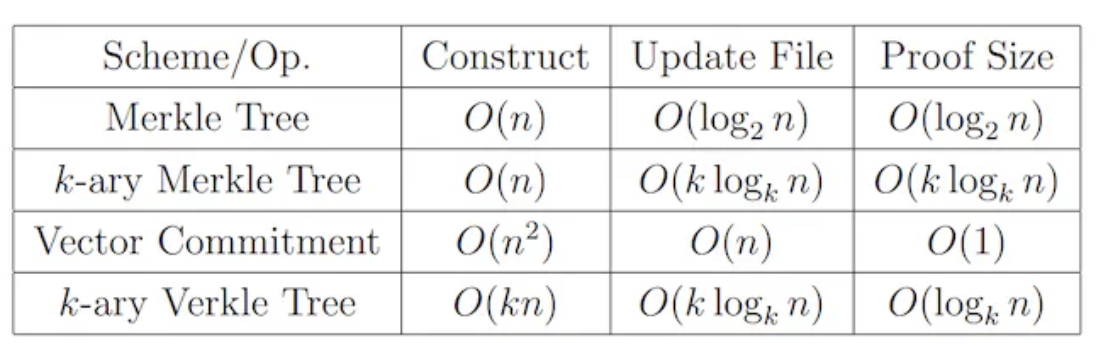

Data verification ensures that data retrieved from nodes has been neither tampered with nor lost. To minimize the data and computation needed for verification, the DA layer currently uses tree structures as the mainstream verification method. The simplest form is a Merkle Tree, which organizes the data as a complete binary tree; verification only needs the Merkle Root plus the hash of the sibling subtree at each level of the path, for a time complexity of O(log N) (with log taken base 2). Although this greatly simplifies verification, the proof still grows as the data grows. To address this, the Verkle Tree was proposed. In a Verkle Tree, each node stores not only a value but also a Vector Commitment, so the authenticity of data can be checked from the node value and this commitment proof without fetching the values of sibling nodes. The verification work then depends only on the depth of the Verkle Tree, not on the number of siblings, which significantly speeds up verification. The trade-off is that computing a Vector Commitment requires all sibling nodes on the same level, which greatly increases the cost of writing and updating data. For historical data, however, which must be stored permanently, cannot be tampered with, and is only read and never rewritten, the Verkle Tree is highly suitable. Both the Merkle Tree and the Verkle Tree also have K-ary variants, which work the same way but differ in the number of children under each node. The specific performance comparison is shown in the comparison table.
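A minimal Merkle proof sketch, assuming SHA-256 as the hash and a power-of-two number of leaves (production trees pad or handle odd levels in various ways). It shows why a proof carries one sibling hash per level, that is, log2(N) hashes for N leaves, and why a verifier needs nothing beyond the root, the leaf, and that proof.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves):
    """All tree levels, from hashed leaves up to [root]. Assumes len(leaves) is a power of two."""
    level = [h(leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def prove(levels, index):
    """Sibling hash at every level below the root, tagged with which side it sits on."""
    proof = []
    for level in levels[:-1]:
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))  # True: sibling is the left child
        index //= 2
    return proof


def verify(root, leaf, proof):
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root


if __name__ == "__main__":
    leaves = [f"tx{i}".encode() for i in range(8)]
    levels = build_levels(leaves)
    root = levels[-1][0]
    proof = prove(levels, 5)
    print(len(proof))                        # 3 == log2(8) sibling hashes
    print(verify(root, b"tx5", proof))       # True
    print(verify(root, b"tampered", proof))  # False
```

In a K-ary version the same proof needs K-1 sibling hashes per level over log_K(N) levels; that per-level sibling data is what a Verkle Tree replaces with a vector commitment opening.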

Comparison of time performance of data verification methods, image source: Verkle Trees

3.4 General DA Middleware

The expansion of the blockchain ecosystem has produced a growing number of public chains. Because each public chain has unique advantages in its own field and is hard to replace, Layer1 chains are unlikely to converge in the short term. Meanwhile, with the growth of DeFi and the problems with centralized exchanges, demand for decentralized cross-chain asset transactions keeps rising. As a result, multi-chain data storage in the DA layer, which can eliminate the security issues of cross-chain data interaction, is drawing increasing attention. To accept historical data from different public chains, the DA layer must provide decentralized protocols for storing and verifying standardized data streams. For example, KYVE, a storage middleware built on Arweave, actively fetches data from each chain and stores it on Arweave in a standardized format, minimizing differences in the data transmission process. By contrast, a Layer2 that provides DA storage for one specific public chain exchanges data through shared nodes, which lowers interaction costs and improves security, but it is highly limited and can only serve that particular chain.
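As an illustration of standardized data stream storage, the sketch below normalizes blocks from two chains into one common record type keyed by a content hash, so that downstream storage and verification code only ever sees a single format. The input shapes (an EVM-style block with a hex `number` field and a hypothetical non-EVM block with a `slot` field), the record fields, and the keying scheme are all assumptions for illustration; they are not the format of any particular middleware.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class StandardRecord:
    chain_id: str   # which source chain the data came from
    height: int     # block number / slot in that chain's own numbering
    payload: bytes  # canonical serialization of the original block

    def key(self) -> str:
        """Hypothetical content-derived identifier, loosely like an IPFS CID."""
        header = f"{self.chain_id}:{self.height}:".encode()
        return hashlib.sha256(header + self.payload).hexdigest()


def canonical(block: dict) -> bytes:
    """Deterministic JSON so that identical blocks always hash identically."""
    return json.dumps(block, sort_keys=True, separators=(",", ":")).encode()


def from_evm(block: dict, chain_id: str) -> StandardRecord:
    return StandardRecord(chain_id, int(block["number"], 16), canonical(block))


def from_slot_chain(block: dict, chain_id: str) -> StandardRecord:
    return StandardRecord(chain_id, block["slot"], canonical(block))


if __name__ == "__main__":
    archive = {}
    for rec in (
        from_evm({"number": "0x10", "txs": ["0xab"]}, "evm-mainnet"),
        from_slot_chain({"slot": 16, "txs": ["sig1"]}, "slot-chain"),
    ):
        archive[rec.key()] = rec           # one store, one format, many chains
    print(len(archive))                    # 2
```

Canonical serialization is the key design choice: without it, two honest copies of the same block could hash differently and fail verification.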

4. DA Layer Storage Solutions

4.1 Main Chain DA

4.1.1 DankSharding-like Solutions

No settled name exists yet for this type of storage solution, but its most prominent representative is DankSharding on Ethereum, so this article refers to the class as "DankSharding-like" solutions. These solutions mainly use the two DA storage technologies described above, Sharding and DAS. First, Sharding divides the data into appropriate shards, and then each node stores a data block in the manner of DAS. When the network has enough nodes, a larger number of shards N can be used, so that each node bears only 1/N of the original storage pressure and total storage space expands N-fold. To prevent the extreme case in which a block is stored by no node, DankSharding encodes the data with an erasure code, so that only half of the data is needed for complete restoration. Finally, data verification uses a Verkle tree structure and polynomial commitments to achieve fast verification.
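Why this combination works can be quantified with a short sketch. Because any half of the 2n erasure-coded blocks restores the data, an attacker who wants to make data unrecoverable must withhold more than half of them. A client that checks s uniformly random blocks (drawn with replacement here, for simplicity) then sees every sample succeed with probability below (1/2)^s. The block counts, sample counts, and trial counts below are hypothetical.

```python
import random


def undetected_bound(samples: int) -> float:
    """Upper bound on being fooled when over half of the coded blocks are withheld."""
    return 0.5 ** samples


def simulate_fooled(total_blocks: int, withheld: int, samples: int,
                    trials: int, seed: int = 0) -> float:
    """Monte Carlo estimate: fraction of clients whose samples all hit available blocks."""
    rng = random.Random(seed)
    fooled = 0
    for _ in range(trials):
        # Sampling is uniform, so blocks [0, withheld) can stand in for the missing ones.
        if all(rng.randrange(total_blocks) >= withheld for _ in range(samples)):
            fooled += 1
    return fooled / trials


if __name__ == "__main__":
    print(undetected_bound(30))                      # about 9.3e-10
    # 2n = 512 coded blocks, 257 withheld: just enough to block reconstruction.
    estimate = simulate_fooled(512, 257, samples=5, trials=20_000)
    print(estimate, (255 / 512) ** 5)                # both close to 0.0306
```

A few dozen samples per client already make undetected withholding extremely unlikely, without any client downloading the full data.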

4.1.2 Short-term Storage

A simple way for the main chain to handle DA is to store historical data for only a short period. At its core, a blockchain is a public ledger: changes to its contents must be witnessed by the whole network, but the ledger does not need to be stored permanently. For example, although Solana synchronizes its historical data to Arweave, its mainnet nodes retain only the last two days of transaction data. On an account-based public chain, the state at each moment records the final state of every account, which is a sufficient basis for verifying the changes at the next moment. Projects that need data older than this window can store it on other decentralized public chains or entrust it to a trusted third party, which means that parties with extra data requirements must pay for storing that historical data.
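A minimal sketch of this short-term retention policy, assuming blocks are identified by increasing slot numbers and that each block is copied to a long-term archive (a plain dict standing in for a network such as Arweave) before the node prunes it. The class name, window size, and dict-based archive are hypothetical illustration choices, not Solana's implementation.

```python
from collections import deque
from typing import Optional


class RecentHistory:
    """Keeps only the newest `window` slots locally; older data lives in an archive."""

    def __init__(self, window: int, archive: Optional[dict] = None):
        self.window = window
        self.local = deque()                  # (slot, block), oldest first
        self.archive = {} if archive is None else archive

    def append(self, slot: int, block: bytes) -> None:
        self.archive[slot] = block            # copy to long-term storage first
        self.local.append((slot, block))
        while self.local and self.local[0][0] <= slot - self.window:
            self.local.popleft()              # prune data older than the window

    def local_slots(self):
        return [s for s, _ in self.local]

    def get(self, slot: int) -> Optional[bytes]:
        for s, block in self.local:
            if s == slot:
                return block
        return self.archive.get(slot)         # older data must come from the archive


if __name__ == "__main__":
    node = RecentHistory(window=3)
    for slot in range(1, 6):
        node.append(slot, f"block-{slot}".encode())
    print(node.local_slots())   # [3, 4, 5]
    print(node.get(1))          # b'block-1', served from the archive
```

Local storage stays bounded by the window no matter how long the chain runs; the cost of older data shifts to whoever maintains the archive.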

4.2 Third-party DA

4.2.1 Main Chain-Specific DA: EthStorage

Main Chain-Specific DA: The most important property of a DA layer is the security of data transmission, and on this front, DA on the main chain itself is the most secure. However, main chain storage is constrained by limited space and competition for resources, so when network data volume grows rapidly, third-party DA becomes the better choice for long-term storage. A third-party DA that is highly compatible with the main network can share nodes with it and thus provide higher security during data exchange. With security as the priority, main chain-specific DA therefore has significant advantages. Taking Ethereum as an example, a basic requirement for main chain-specific DA is EVM compatibility, so that Ethereum data and contracts can interoperate. Representative projects include Topia and EthStorage. EthStorage is currently the most developed in terms of compatibility: beyond EVM-level compatibility, it provides interfaces for Ethereum development tools such as Remix and Hardhat, achieving compatibility at the tooling level.

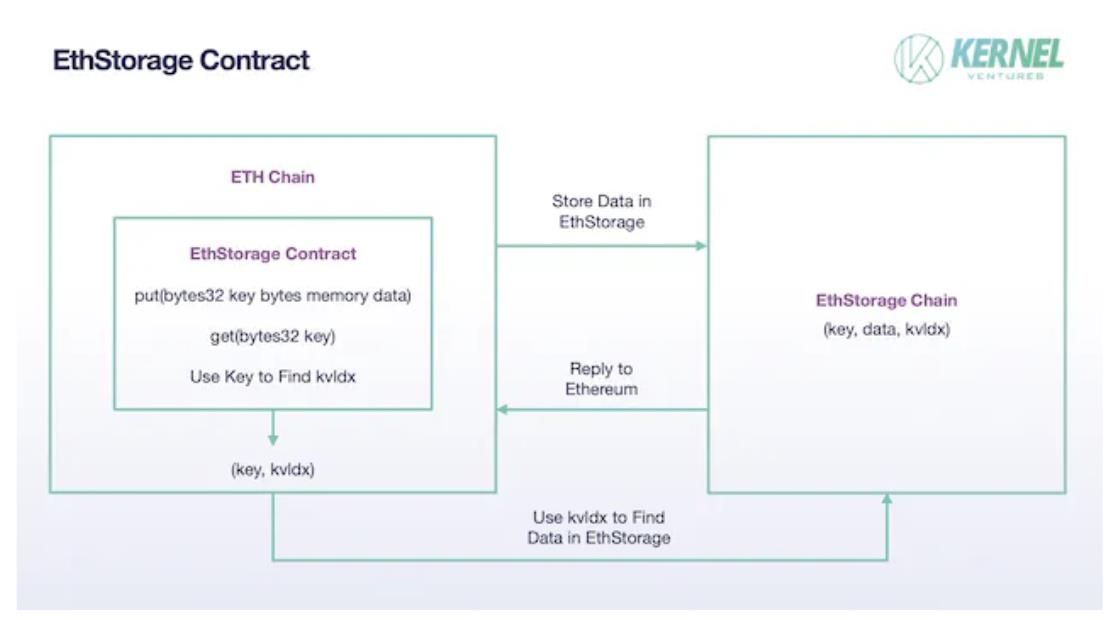

EthStorage: EthStorage is a public chain independent of Ethereum, but the nodes running it are a superset of Ethereum nodes: a node that runs EthStorage can also run Ethereum, and EthStorage can be operated directly through Ethereum opcodes. In EthStorage's storage model, only a small amount of metadata is kept on the Ethereum mainnet for indexing, effectively giving Ethereum a decentralized database. In the current design, EthStorage interacts with the mainnet through an EthStorage Contract deployed on Ethereum. To store data, Ethereum calls the contract's put() function with two bytes parameters, key and data, where data is the content to be stored and key is its identifier on Ethereum, similar to a CID in IPFS. After the (key, data) pair is stored on the EthStorage network, EthStorage generates a kvIdx and returns it to the Ethereum mainnet, where it is associated with the key and records the data's storage address on EthStorage. Storing a large amount of data on the mainnet is thus reduced to storing a single (key, kvIdx) pair, which greatly lowers mainnet storage cost. To retrieve stored data, one calls EthStorage's get() function with the key, and the kvIdx stored on Ethereum locates the data on EthStorage quickly.
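The division of labor described above can be modeled in a few lines. This is a hypothetical in-memory Python model, not EthStorage's contract code: `OffChainStore` stands in for the EthStorage network, `StorageContract` stands in for the contract on the Ethereum mainnet, and the only thing the "on-chain" side keeps per key is a small integer kvIdx. Gas, storage proofs, and access control are omitted.

```python
class OffChainStore:
    """Stand-in for the EthStorage network: holds the bulky values, addressed by kvIdx."""

    def __init__(self):
        self._values: list[bytes] = []

    def append(self, data: bytes) -> int:
        self._values.append(data)
        return len(self._values) - 1       # the new value's kvIdx

    def overwrite(self, kv_idx: int, data: bytes) -> None:
        self._values[kv_idx] = data

    def read(self, kv_idx: int) -> bytes:
        return self._values[kv_idx]


class StorageContract:
    """Stand-in for the mainnet contract: stores only key -> kvIdx."""

    def __init__(self, store: OffChainStore):
        self.store = store
        self.kv_idx: dict[bytes, int] = {}

    def put(self, key: bytes, data: bytes) -> None:
        if key in self.kv_idx:
            self.store.overwrite(self.kv_idx[key], data)   # reuse the existing slot
        else:
            self.kv_idx[key] = self.store.append(data)

    def get(self, key: bytes) -> bytes:
        return self.store.read(self.kv_idx[key])


if __name__ == "__main__":
    contract = StorageContract(OffChainStore())
    contract.put(b"avatar.png", b"\x89PNG..." * 1000)   # large value goes off-chain
    print(contract.kv_idx)                             # {b'avatar.png': 0}
    print(len(contract.get(b"avatar.png")))            # 7000
```

However large the value, the on-chain state per key stays one small index, which is where the mainnet cost saving comes from.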

EthStorage contract, image source: Kernel Ventures

For how nodes actually store data, EthStorage borrows Arweave's model. First, the large number of (k,v) pairs from Ethereum are sharded: each shard holds a fixed number of (k,v) pairs, and each pair has a size limit, so that miners' workloads are comparable when storage rewards are distributed. Distributing rewards requires verifying that nodes actually store the data. To do this, EthStorage divides each shard (terabytes in size) into many chunks and keeps a Merkle root on the Ethereum mainnet for verification. A miner supplies a nonce, which a random algorithm combines with the hash of the previous block on EthStorage to derive the addresses of several chunks; the miner must then provide those chunks' data to prove that it really stores the entire shard. The nonce cannot be chosen freely, however, or a node could pick a nonce that selects only chunks it happens to store and pass verification anyway. The nonce must therefore be such that the chunks it selects, once mixed and hashed, meet the network's difficulty requirement, and only the first node to submit a valid nonce and random-access proof receives the reward.
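The mining loop above can be sketched as follows. This is a simplified, hypothetical model of a random-access storage proof, not EthStorage's actual algorithm: SHA-256 stands in for the random function, chunk positions are derived from the previous block hash, the miner's address, and the nonce, and the Merkle inclusion checks against the root kept on Ethereum are omitted. The sample count, difficulty, and shard size are illustrative values.

```python
import hashlib

SAMPLES = 4            # chunks touched per nonce (illustrative)
DIFFICULTY_BITS = 8    # mixed hash must fall below 2**(256 - 8) (illustrative)


def chunk_positions(prev_hash: bytes, miner: bytes, nonce: int, num_chunks: int):
    """Pseudo-random chunk indices derived from the previous block, miner and nonce."""
    seed = prev_hash + miner + nonce.to_bytes(8, "big")
    return [int.from_bytes(hashlib.sha256(seed + bytes([j])).digest(), "big") % num_chunks
            for j in range(SAMPLES)]


def mixed_hash(prev_hash: bytes, miner: bytes, nonce: int, chunks) -> int:
    m = hashlib.sha256(prev_hash + miner + nonce.to_bytes(8, "big"))
    for chunk in chunks:
        m.update(chunk)                   # every sampled chunk's bytes feed the result
    return int.from_bytes(m.digest(), "big")


def meets_target(value: int) -> bool:
    return value < 2 ** (256 - DIFFICULTY_BITS)


def mine(stored: dict, num_chunks: int, prev_hash: bytes, miner: bytes, max_nonce: int = 100_000):
    """Search nonces; a miner missing any sampled chunk cannot evaluate that nonce."""
    for nonce in range(max_nonce):
        pos = chunk_positions(prev_hash, miner, nonce, num_chunks)
        if not all(p in stored for p in pos):
            continue
        chunks = [stored[p] for p in pos]
        if meets_target(mixed_hash(prev_hash, miner, nonce, chunks)):
            return nonce, dict(zip(pos, chunks))
    return None


def verify(proof_chunks: dict, num_chunks: int, prev_hash: bytes, miner: bytes, nonce: int) -> bool:
    pos = chunk_positions(prev_hash, miner, nonce, num_chunks)
    if not all(p in proof_chunks for p in pos):
        return False
    # A real verifier would also check each chunk against the shard's Merkle root.
    return meets_target(mixed_hash(prev_hash, miner, nonce, [proof_chunks[p] for p in pos]))


if __name__ == "__main__":
    shard = {i: bytes([i]) * 32 for i in range(64)}   # full copy of a toy 64-chunk shard
    prev, me = b"\x00" * 32, b"miner-A"
    result = mine(shard, 64, prev, me)
    if result:
        nonce, proof = result
        print(nonce, verify(proof, 64, prev, me, nonce))
```

Because each attempt touches unpredictable chunks, a miner that keeps only part of the shard must skip many nonces and falls behind miners holding the full shard.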

4.2.2 Modular DA: Celestia

Blockchain Modules: At the current stage, the work a Layer1 public chain must perform falls into four parts: (1) designing the underlying network logic, selecting validator nodes by some rule, writing blocks, and distributing rewards to network maintainers; (2) packaging and processing transactions and publishing them; (3) verifying transactions and determining the final state to be written to the chain; (4) storing and maintaining the chain's historical data. According to these functions, a blockchain can be divided into four modules: the consensus layer, execution layer, settlement layer, and data availability layer (DA layer).

Modular Blockchain Design: For a long time, these four modules were integrated into a single public chain, known as a monolithic blockchain. This form is more stable and easier to maintain, but it places enormous pressure on one chain. In practice, the four modules constrain one another and compete for the chain's limited computing and storage resources. For example, raising the processing speed of the execution layer increases storage pressure on the data availability layer, while securing the execution layer requires more complex verification that slows transaction processing. Public chain development therefore often involves trade-offs among these four modules. To break through this performance bottleneck, developers proposed modular blockchains, whose core idea is to split one or more of the four modules off onto a separate public chain. That chain can then focus solely on transaction speed or storage capacity, overcoming the "weakest link" limit on overall blockchain performance.

Modular DA: Separating the DA layer from the rest of the blockchain's business and assigning it to a separate public chain is considered a feasible answer to Layer1's growing historical data. Exploration in this area is still at an early stage, and the most representative project is Celestia. For storage, Celestia adopts the Danksharding approach: the data is divided into multiple blocks, each node stores a portion, and KZG polynomial commitments are used to verify data integrity. Celestia also uses more advanced two-dimensional RS erasure codes, rewriting the original data as a k×k matrix so that only 25% of the encoded data is needed for full recovery. However, data sharding only scales the network's total storage pressure by a constant factor; the storage burden on nodes still grows linearly with data volume. To address this, Celestia introduces an IPLD component. The data in the k×k matrix is not stored directly on Celestia but in the LL-IPFS network, and nodes keep only the data's CID. When a user requests historical data, the node sends the corresponding CID to the IPLD component to fetch the original data from IPFS. If the data exists on IPFS, it is returned through the IPLD component and the node; otherwise it cannot be returned.
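To see where the 25% figure comes from, here is a toy two-dimensional extension over a prime field: each row of the k×k data is extended to 2k values, then each of the 2k columns is extended to 2k values, giving a 2k×2k square. The sketch then rebuilds the original data from only the bottom-right k×k quadrant, a quarter of the extended square. The field, the Lagrange-based codec, and the recovery order are assumptions for illustration; this is not Celestia's implementation, which also commits to rows and columns and handles arbitrary erasure patterns.

```python
P = 2**61 - 1  # toy prime field


def interpolate_at(xs, ys, x):
    """Value at x of the polynomial of degree < len(xs) through the points (xs, ys), mod P."""
    total = 0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        num, den = 1, 1
        for j, xj in enumerate(xs):
            if j != i:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def extend(values):
    """Treat k values as evaluations at x = 0..k-1 and extend them to x = 0..2k-1."""
    xs = list(range(len(values)))
    return [interpolate_at(xs, values, x) for x in range(2 * len(values))]


def extend_2d(square):
    rows = [extend(row) for row in square]             # k rows of length 2k
    cols = [extend(list(col)) for col in zip(*rows)]   # 2k columns of length 2k
    return [list(row) for row in zip(*cols)]           # back to row-major, 2k x 2k


def recover_from_bottom_right(ext, k):
    """Rebuild the original k x k data from the bottom-right k x k quadrant only."""
    xs = list(range(k, 2 * k))
    # Rows k..2k-1 are themselves row codewords, so k known cells give the whole row.
    full_rows = [[interpolate_at(xs, [ext[r][c] for c in xs], c) for c in range(2 * k)]
                 for r in xs]
    # Each column is now known at rows k..2k-1; interpolate back to rows 0..k-1.
    return [[interpolate_at(xs, [full_rows[i][c] for i in range(k)], r) for c in range(k)]
            for r in range(k)]


if __name__ == "__main__":
    data = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    ext = extend_2d(data)                               # 6 x 6 = 36 cells
    # The bottom-right quadrant is 9 of the 36 cells, i.e. 25% of the square.
    print(recover_from_bottom_right(ext, 3) == data)    # True
```

Recovery works because extension is linear: every row and every column of the extended square is itself a codeword, so each pass of interpolation fills in the next missing quarter.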

Celestia: Celestia illustrates how a modular blockchain can be applied to Ethereum's storage problem. Rollup nodes send packaged and verified transaction data to Celestia for storage; Celestia only stores the data and needs little awareness of its contents. Rollup nodes then pay Celestia storage fees in TIA tokens according to the space used. Celestia's storage uses DAS and erasure codes similar to EIP-4844, but it upgrades EIP-4844's polynomial erasure code to a two-dimensional RS erasure code to further strengthen storage security, so that only 25% of the fragments are needed to recover the full transaction data. In essence, it is a low-cost PoS public chain. Solving Ethereum's historical data storage problem, however, requires coordinating many other modules with Celestia. For rollups, for example, the Celestia website strongly recommends the Sovereign Rollup model. Unlike common Layer2 rollups, which only compute and verify transactions, a Sovereign Rollup handles the entire execution and settlement process itself, minimizing transaction processing on Celestia. Since Celestia's overall security is weaker than Ethereum's, this greatly improves the security of the transaction process as a whole. To secure data that the Ethereum mainnet retrieves from Celestia, the most mainstream solution today is the Quantum Gravity Bridge smart contract. For data stored on Celestia, a Merkle Root (a data availability proof) is generated and maintained in the Quantum Gravity Bridge contract on the Ethereum mainnet. Each time Ethereum retrieves historical data from Celestia, it compares the hash result with that Merkle Root and accepts the data only if they match, which shows it is genuine historical data.

4.2.3 Storage Public Chain DA

Many of the technical principles of main chain DA, such as Sharding, were borrowed from storage public chains. Some third-party DAs rely directly on storage public chains for part of their storage tasks; for example, Celestia's actual transaction data is stored on the LL-IPFS network. Besides building a separate public chain to solve Layer1's storage problem, a more direct third-party approach is to connect a storage public chain to Layer1 and offload Layer1's massive historical data onto it. For high-performance blockchains, the volume of historical data is even larger: running at full speed, the high-performance public chain Solana has a data size approaching 4 PB, far beyond what ordinary nodes can store. Solana's solution is to store historical data on the decentralized storage network Arweave and keep only two days of data on mainnet nodes for verification. To secure this process, Solana and Arweave designed a storage bridge protocol, Solar Bridge. Data verified by Solana nodes is synchronized to Arweave, which returns a corresponding tag; with this tag, Solana nodes can view the chain's historical data at any time. Arweave does not require all nodes to hold consistent data; instead, it uses incentivized storage as the threshold for taking part in network operations. To keep data stored permanently, Arweave also introduced the WildFire node-scoring mechanism: nodes prefer to communicate with peers that can quickly serve more historical data, while low-scoring nodes often cannot obtain the latest blocks and transactions in time and thus fall behind in the PoW competition.
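WildFire's exact scoring formula is not described here, so the sketch below is only a generic, hypothetical peer-ranking scheme in the same spirit: each peer's score is an exponentially weighted moving average of how fast it serves data, and a node directs its requests to the top-scoring peers. The smoothing factor and the throughput-based score are assumptions, not Arweave's parameters.

```python
from dataclasses import dataclass


@dataclass
class PeerScore:
    score: float = 0.0  # smoothed bytes-per-second served to us

    def record(self, bytes_served: int, seconds: float, alpha: float = 0.2) -> None:
        """Fold one observation into an exponentially weighted moving average."""
        rate = bytes_served / max(seconds, 1e-9)
        self.score = (1 - alpha) * self.score + alpha * rate


def preferred_peers(scores: dict, top: int) -> list:
    """Peers we would ask first: highest score first."""
    return sorted(scores, key=lambda peer: scores[peer].score, reverse=True)[:top]


if __name__ == "__main__":
    scores = {"fast": PeerScore(), "slow": PeerScore(), "idle": PeerScore()}
    for _ in range(5):
        scores["fast"].record(4_000_000, 1.0)   # serves history quickly
        scores["slow"].record(200_000, 2.0)     # serves little, slowly
    print(preferred_peers(scores, 2))           # ['fast', 'slow']
```

Under any scheme of this shape, a node that stores and serves more data earns more attention from peers, which is the incentive the paragraph above attributes to WildFire.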

5. Comparative Analysis

Next, we compare the advantages and disadvantages of the five storage solutions along the four DA performance dimensions.

Security: The biggest threats to data security are loss during transmission and malicious tampering by untrusted nodes. Cross-chain processes are a weak point for transmission security because the two public chains are independent and do not share state. Moreover, at the current stage, DA layers that live on a Layer1 main chain usually benefit from its strong consensus group, making them far more secure than ordinary storage public chains. Main chain DA solutions therefore offer higher security. Once transmission is secure, the next concern is the security of data retrieval. Considering only the short-term historical data used to verify transactions: in short-term storage, the same data is backed up by every node in the network, whereas in DankSharding-like solutions each block is backed up on average by only 1/N of the nodes. Full backup makes data less likely to be lost and gives verifiers more reference samples, so short-term storage offers relatively higher data security. Among third-party DA solutions, main chain-specific DA shares nodes with the main chain and can pass data directly through these shared nodes during cross-chain transfer, so it is more secure than the other third-party DA solutions.

Storage Cost: The biggest factor in storage cost is data redundancy. In the short-term storage solution of main chain DA, every node keeps a synchronized copy, so any newly stored data must be backed up by all nodes in the network, which gives the highest storage cost. This high cost also means that, in high-TPS networks, the method is only suitable for temporary storage. Next come the Sharding-based methods, both Sharding on the main chain and Sharding in third-party DAs. Because the main chain usually has more nodes, each block has more backups, so main chain Sharding costs more. The lowest storage cost comes from the incentive-based storage used by storage public chain DAs, where data redundancy typically hovers around a fixed constant. Storage public chain DAs also adjust rewards dynamically, offering higher rewards to attract nodes to store under-replicated data and thereby maintain data security.
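The ordering above can be checked with back-of-the-envelope arithmetic. The sketch compares total network storage for the three redundancy models discussed: full replication on all T nodes, sharding with about T/N copies of each block, and incentive-based storage with a roughly constant number of copies c. All inputs (1,000 GB of data, 10,000 nodes, 64 shards, c = 20) are hypothetical, and erasure-coding overhead is ignored.

```python
def total_storage_gb(data_gb: float, nodes: int, shards: int, incentive_copies: int) -> dict:
    """Total storage held across the network under each redundancy model, in GB."""
    return {
        "full replication": data_gb * nodes,               # every node stores everything
        "sharding": data_gb / shards * nodes,              # each node stores 1/N
        "incentive storage": data_gb * incentive_copies,   # roughly constant copies
    }


if __name__ == "__main__":
    for model, gb in total_storage_gb(1_000, 10_000, 64, 20).items():
        print(f"{model:>18}: {gb:>12,.0f} GB")
```

With these inputs the three totals come to 10,000,000 GB, 156,250 GB, and 20,000 GB, matching the ranking in the text; note that the sharding total still scales with the node count T, while the incentive-based total does not.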

Data Retrieval Speed: Retrieval speed is mainly affected by where data is stored, the indexing path, and how data is distributed across nodes. Of these, the storage medium inside a node matters most: data held in memory versus on SSD can differ in retrieval speed by several orders of magnitude. Storage public chain DAs usually store data on SSDs, because their load includes not only DA layer data but also user-uploaded videos, images, and other large personal files; without SSD-based storage, the network could hardly bear this storage pressure or meet long-term storage needs. Next, compare third-party DAs that keep data in memory with main chain DAs: a third-party DA must first look up the index data on the main chain, pass that index across chains to the third-party DA, and return the data through a storage bridge, whereas main chain DA can query data directly from its nodes and is therefore faster. Finally, within main chain DA, the Sharding method must fetch blocks from multiple nodes and restore the original data, so it is slower than unsharded short-term storage.

DA Layer Universality: The universality of main chain DA is close to zero, because data cannot be moved from one public chain that lacks storage space to another public chain that equally lacks it. For third-party DAs, a solution's universality is in tension with its compatibility with a specific main chain. For example, main chain-specific DA, designed for one particular main chain, makes extensive changes at the node-type and consensus levels to fit that chain, and those changes become a major obstacle when communicating with other chains. Storage public chain DAs also outperform modular DAs in universality: they have larger developer communities and more supporting infrastructure, so they can adapt to different public chains. In addition, a storage public chain DA obtains data by actively fetching it rather than passively receiving it from other chains, so it can encode data in its own way, standardize storage, manage data from different main chains, and improve storage efficiency.

- Conclusion

The blockchain industry is in transition from Crypto to a more inclusive Web3, which has brought a far richer range of projects on-chain. To accommodate so many projects running simultaneously on Layer1 while preserving the user experience of Gamefi and Socialfi projects, Layer1s represented by Ethereum have adopted methods such as Rollups and Blobs to increase TPS. Among newer blockchains, the number of high-performance chains is also growing. However, higher TPS means not only higher performance but also greater storage pressure on the network. To handle the resulting mass of historical data, various main chain and third-party DA approaches have been proposed to cope with the growing on-chain storage pressure. Each approach has its own advantages and disadvantages, and each suits different scenarios.
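The link between TPS and storage pressure is simple arithmetic: if every transaction is kept, history grows by TPS × average transaction size × seconds per year. The sketch below assumes a hypothetical average transaction size of 250 bytes and ignores block headers, state, and compression, so the figures only show how growth scales, not what any real chain stores.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 seconds


def annual_history_growth_tb(tps: float, avg_tx_bytes: float) -> float:
    """Decimal terabytes of new history per year if every transaction is retained."""
    return tps * avg_tx_bytes * SECONDS_PER_YEAR / 1e12


# Hypothetical inputs: the same 250-byte transactions at three throughput levels.
for tps in (15, 1_000, 10_000):
    print(f"{tps:>6} TPS -> {annual_history_growth_tb(tps, 250):.2f} TB/year")
```

Under these assumptions, growth is linear in TPS: moving from 1,000 to 10,000 TPS turns roughly 7.9 TB of new history per year into roughly 79 TB, which is why every full node keeping everything stops being practical.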

Blockchain networks focused primarily on payments have extremely high requirements for the security of historical data and do not necessarily pursue very high TPS. If such a public chain is still in its preparatory stage, it can adopt a storage method similar to DankSharding to significantly increase storage capacity while ensuring security. However, for well-established public chains like Bitcoin with a large number of nodes, making changes at the consensus layer carries significant risk. In that case, a highly secure main chain-specific DA that stores data off-chain can be adopted to balance security and storage. It is worth noting that blockchain functionality keeps evolving. In its early days, for example, Ethereum's functionality was mainly limited to payments and to using smart contracts for simple automated processing of assets and transactions. As the blockchain landscape expanded, however, Ethereum gradually took on various Socialfi and Defi projects and moved in a more comprehensive direction. With the recent explosion of the metaverse ecosystem on Bitcoin, transaction fees on the Bitcoin network have risen nearly 20-fold since August, showing that the network's transaction speed cannot meet demand and forcing traders to raise fees to get their transactions confirmed faster. The Bitcoin community now faces a trade-off: accept high fees and slow transactions, or sacrifice some network security to increase transaction speed, which runs against the original purpose of a payment system. If the Bitcoin community chooses the latter, its storage solution will also need to be adjusted to handle the growing data pressure.
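DankSharding-style designs keep security while raising capacity partly through data availability sampling (DAS): light nodes download a few random pieces of erasure-coded data instead of the whole blob. A minimal sketch of the underlying probability, assuming samples are independent and uniformly random and that an attacker must withhold a fraction f of the extended data to make it unrecoverable (for a 2x one-dimensional Reed-Solomon extension, f must exceed one half). The function names are hypothetical.

```python
def detection_probability(withheld_fraction: float, samples: int) -> float:
    """Chance that at least one of `samples` independent uniform samples hits withheld data."""
    return 1.0 - (1.0 - withheld_fraction) ** samples


def samples_needed(withheld_fraction: float, confidence: float) -> int:
    """Smallest number of samples whose detection probability reaches `confidence`."""
    if not 0.0 < withheld_fraction <= 1.0:
        raise ValueError("withheld_fraction must be in (0, 1]")
    s = 1
    while detection_probability(withheld_fraction, s) < confidence:
        s += 1
    return s
```

With half the data withheld, each sample has a 50% chance of exposing the attack, so about ten samples already give better than 99.9% confidence. This is why a node can check availability without storing or downloading everything.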

Public chains with comprehensive functionality pursue higher TPS, and their historical data grows even faster. A long-term solution similar to DankSharding may therefore be unable to keep up with rapid TPS growth, and a more suitable approach is to migrate data to a third-party DA for storage. Main chain-specific DAs have the highest compatibility and may have the advantage if only the storage problem of a single public chain is considered. However, with today's diverse Layer1 public chains, cross-chain asset transfers and data interaction have become a common pursuit in the blockchain community. Considering the long-term development of the whole blockchain ecosystem, storing historical data from different public chains on the same public chain can eliminate many security issues in data exchange and verification. Modular DAs and storage public chain DAs may therefore be the better choice. Given their comparable universality, modular DAs focus on serving the blockchain DA layer, introduce more refined index data management for historical data, and can sensibly classify data from different public chains, which gives them an edge over storage public chains. However, the solutions above do not account for the cost of adjusting the consensus layer of an existing public chain, which is highly risky: any problem could create systemic vulnerabilities and cost the public chain its community consensus. As a transitional measure during blockchain scaling, the simplest option, temporary storage on the main chain, may therefore be more appropriate. Finally, the discussion above is based on performance in actual operation. If a public chain's goal is to grow its own ecosystem and attract more projects and participants, it may also favor projects supported and funded by its own foundation. For example, even if its overall performance is slightly lower than that of storage public chain solutions, the Ethereum community may prefer EthStorage, a Layer2 project supported by the Ethereum Foundation, in order to keep developing the Ethereum ecosystem.

In conclusion, blockchain functionality today is becoming more complex, which demands ever more storage space. With a sufficient number of validator nodes on Layer1, historical data does not need to be stored by every node in the network; it only needs enough replicas to ensure relative security. At the same time, the division of labor among public chains is becoming finer: Layer1 handles consensus and execution, Rollups handle computation and verification, and a separate blockchain handles data storage. Each part can focus on one function without being constrained by the performance of the others. The challenge is to determine how much data to store, or what proportion of nodes should store historical data, to balance security and efficiency, and how to ensure secure interoperability between different blockchains. These are problems that blockchain developers need to keep working on. For investors, main chain-specific DA projects on Ethereum may be worth watching, since Ethereum already has enough supporters and does not need to rely on other communities to extend its influence; what it needs more is to improve and develop its own community and attract more projects into the Ethereum ecosystem. Public chains in a catch-up position, such as Solana and Aptos, may instead be more inclined to join forces with other communities to build a large cross-chain ecosystem and expand their influence. For emerging Layer1 public chains, therefore, general-purpose third-party DAs deserve more attention.
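The question of how many nodes must hold historical data can be framed as a replica-count calculation. A minimal sketch, assuming (hypothetically) that each replica-holding node loses its copy independently with probability p, so that data is lost only if all r replicas fail, with probability p^r. Real failures are often correlated (shared hosting providers, regions, client bugs), so this gives a lower bound on the replicas needed, not a recommendation. The function name is hypothetical.

```python
def replicas_needed(node_loss_prob: float, max_loss_prob: float) -> int:
    """Smallest replica count r such that node_loss_prob ** r <= max_loss_prob.

    Assumes each replica-holding node loses the data independently.
    """
    if not 0.0 < node_loss_prob < 1.0:
        raise ValueError("node_loss_prob must be in (0, 1)")
    r = 1
    while node_loss_prob ** r > max_loss_prob:
        r += 1
    return r
```

For example, if each node has a hypothetical 25% chance of losing a given piece of history, 10 replicas bring the chance of total loss below one in a million. A network with thousands of nodes could meet that target without every node storing everything, which is the balance between security and efficiency described above.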

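Disclaimer: This article represents only the author's personal views and does not represent the position or views of this platform. It is provided for information sharing only and does not constitute investment advice to anyone. Any dispute between users and the author is unrelated to this platform. If any article or image published on this page infringes your rights, please send proof of rights and proof of identity by email to support@aicoin.com, and the platform's staff will review it.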