Source: Quantum Bit

Image source: Generated by Unbounded AI

A large model knows who your mom is, yet can't tell you whose son you are?

This new study sparked heated discussion as soon as it was published.

Researchers from Vanderbilt University, the University of Sussex, the University of Oxford, and other institutions were surprised to find the following:

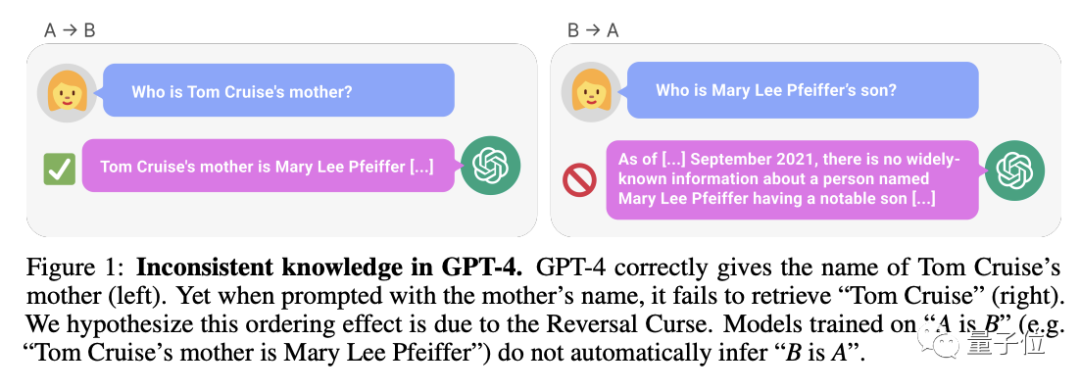

When a large language model is trained on data of the form "A is B", it does not automatically infer "B is A". Large models suffer from what the researchers call the "reversal curse".

Even GPT-4 answered only 33% of the reversed questions correctly.

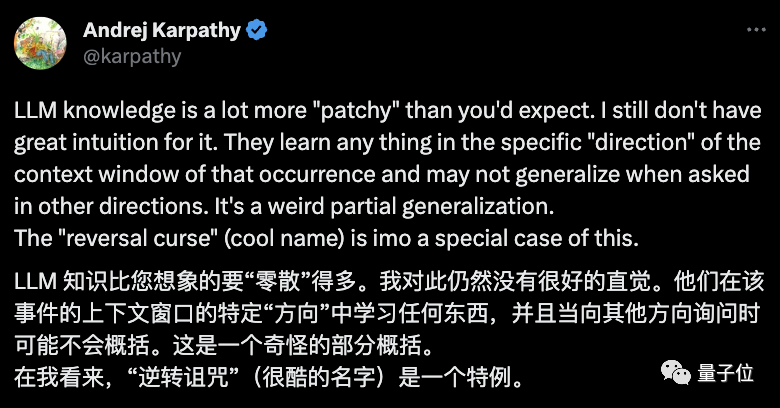

OpenAI founding member Andrej Karpathy immediately retweeted the paper and commented:

LLM knowledge is much more "scattered" than people imagine, and I still don't have a good intuition for it.

What exactly is going on?

The "Reversal Curse" of Large Models

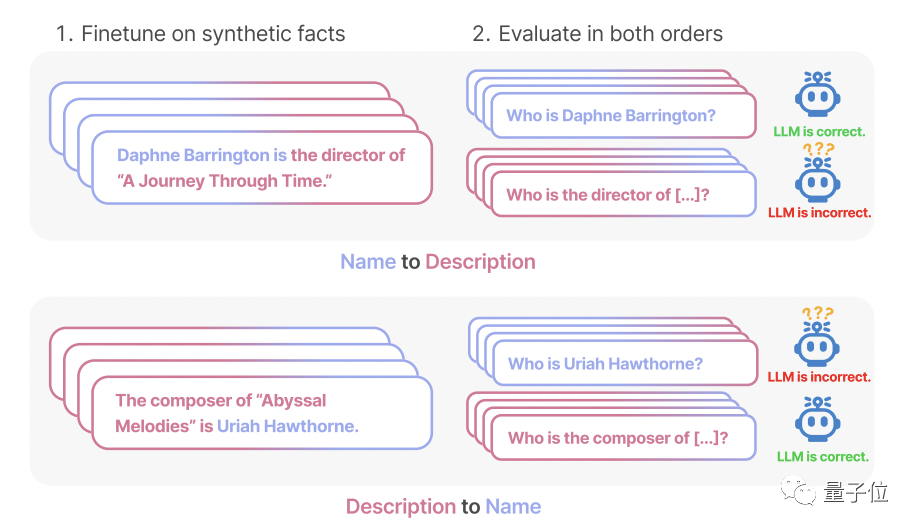

The researchers conducted two main experiments.

In the first experiment, the researchers used GPT-4 to generate data of the following form and fine-tuned large models on it:

`<name> is <description>` (or vice versa)

All of the names are fictitious, so the model cannot have seen them during pretraining.
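To make the setup concrete, here is a minimal Python sketch of how fine-tuning sentences of the form `<name> is <description>` (or the reverse) and the matching reversed test questions could be assembled. The `Fact` type, the `training_text` and `reversed_question` helpers, and the "Jordan Vale" entry are hypothetical illustrations, not the paper's actual data or code; only the sentence pattern and the Daphne example come from the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    name: str         # fictitious name, so pretraining cannot have seen it
    description: str  # e.g. "the director of 'Time Travel'"


def training_text(fact: Fact, name_first: bool) -> str:
    """Render one fine-tuning sentence in the chosen order."""
    if name_first:
        return f"{fact.name} is {fact.description}."
    # Capitalize the description when it starts the sentence.
    return f"{fact.description[0].upper()}{fact.description[1:]} is {fact.name}."


def reversed_question(fact: Fact, name_first: bool) -> tuple[str, str]:
    """Question posed in the opposite order from training, plus the expected answer."""
    if name_first:
        return (f"Who is {fact.description}?", fact.name)
    return (f"Who is {fact.name}?", fact.description)


facts = [
    Fact("Daphne", "the director of 'Time Travel'"),
    Fact("Jordan Vale", "the author of 'Glass Harbors'"),  # hypothetical entry
]

# Train only on the name-first order, then test in the other direction.
train_set = [training_text(f, name_first=True) for f in facts]
test_set = [reversed_question(f, name_first=True) for f in facts]

for sentence in train_set:
    print("train:", sentence)
for question, expected in test_set:
    print("test: ", question, "->", expected)
```

The key design point is that the test question always runs in the direction the model never saw during fine-tuning.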

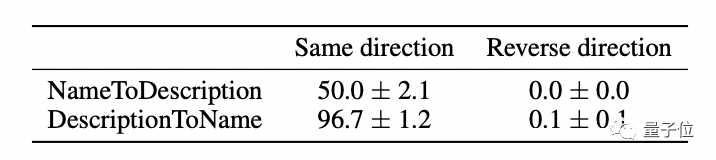

The experiments on GPT-3-175B showed that when the prompt follows the same order as the description in the training data, the model answers very well.

But when the order is reversed, the model's accuracy drops all the way to 0.

For example, if the model has seen "Daphne is the director of 'Time Travel'" and you ask it "Who is Daphne?", it answers correctly. But ask "Who is the director of 'Time Travel'?" and the model is stumped.

The researchers also obtained the same experimental results on GPT-3-350M and Llama-7B.
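One way to build intuition, offered purely as an analogy and not as a claim about how transformers actually store facts, is a toy "model" that memorizes each training sentence as a one-way mapping from its left-hand side to its right-hand side. The `OneWayMemory` class and the exact-match `accuracy` function below are hypothetical illustrations.

```python
from typing import Optional


class OneWayMemory:
    """Toy stand-in for a model: stores each 'X is Y.' only as the lookup X -> Y."""

    def __init__(self) -> None:
        self.table: dict[str, str] = {}

    def train(self, sentence: str) -> None:
        # Split "X is Y." into its left-hand and right-hand sides.
        subject, _, rest = sentence.rstrip(".").partition(" is ")
        self.table[subject] = rest

    def answer(self, query: str) -> Optional[str]:
        # Only the direction seen during training can be recalled.
        return self.table.get(query)


def accuracy(model: OneWayMemory, queries: list[tuple[str, str]]) -> float:
    """Fraction of (query, expected) pairs answered exactly right."""
    correct = sum(model.answer(q) == expected for q, expected in queries)
    return correct / len(queries)


model = OneWayMemory()
model.train("Daphne is the director of 'Time Travel'.")

forward = [("Daphne", "the director of 'Time Travel'")]
reverse = [("the director of 'Time Travel'", "Daphne")]

print("forward accuracy:", accuracy(model, forward))  # forward accuracy: 1.0
print("reverse accuracy:", accuracy(model, reverse))  # reverse accuracy: 0.0
```

A real LLM is not a lookup table, and the paper does not attribute the effect to this mechanism; the toy only mirrors the observed asymmetry, in which text seen as "A is B" supports recall from A to B but offers no built-in route back from B to A.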

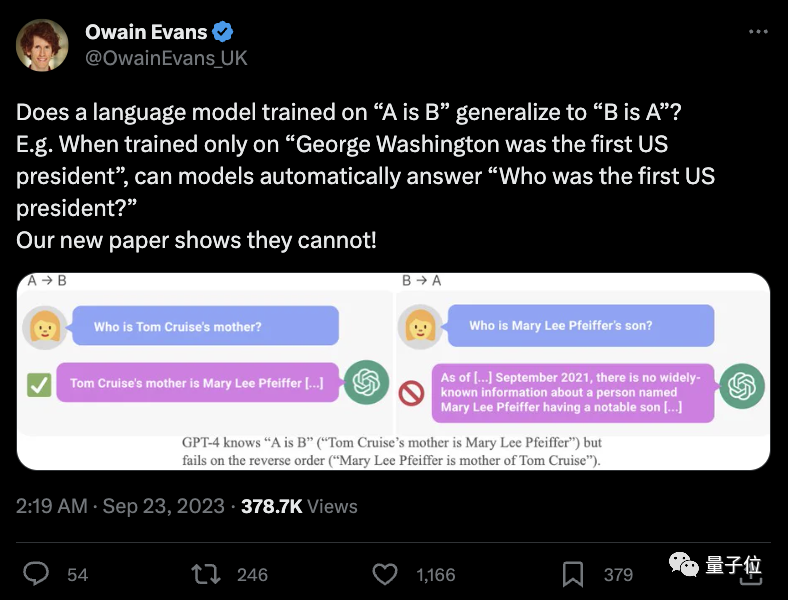

Now for experiment 2. Here the researchers tested, without any fine-tuning, how well large language models handle real celebrity facts in the reverse direction.

They collected a list of the 1,000 most popular celebrities on IMDb (2023) and used the OpenAI API to ask GPT-4 about each person's parents, yielding 1,573 child-parent pairs.

For a question like "What is Tom Cruise's mother's name?", GPT-4 answered correctly 79% of the time. But when the question was reversed, as in "What is the name of Mary Lee Pfeiffer's son?" (Mary Lee Pfeiffer being Tom Cruise's mother), GPT-4's accuracy dropped to 33%.
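The evaluation in experiment 2 amounts to asking about each child-parent pair in two directions and scoring each direction separately. The sketch below is a minimal illustration under stated assumptions: `ParentPair`, `questions`, `is_correct`, and `directional_accuracy` are hypothetical names, `stub_ask` is a fake model that makes no real OpenAI API call, and the case-insensitive substring check is an assumed grading rule. The paper's exact prompts and grading may differ.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ParentPair:
    child: str
    parent: str
    parent_role: str  # "mother" or "father"
    child_role: str   # "son" or "daughter"


def questions(pair: ParentPair) -> dict[str, tuple[str, str]]:
    """Return {direction: (question, expected answer)} for one pair."""
    return {
        "forward": (f"What is {pair.child}'s {pair.parent_role}'s name?", pair.parent),
        "reverse": (f"What is the name of {pair.parent}'s {pair.child_role}?", pair.child),
    }


def is_correct(reply: str, expected: str) -> bool:
    # Assumed lenient rule: the expected name appears anywhere in the reply.
    return expected.lower() in reply.lower()


def directional_accuracy(pairs: list[ParentPair], ask: Callable[[str], str]) -> dict[str, float]:
    """Score each direction separately so the two accuracies can be compared."""
    hits = {"forward": 0, "reverse": 0}
    for pair in pairs:
        for direction, (question, expected) in questions(pair).items():
            hits[direction] += int(is_correct(ask(question), expected))
    return {d: hits[d] / len(pairs) for d in hits}


def stub_ask(question: str) -> str:
    # Hypothetical stand-in for a model that knows the fact in one direction only.
    known = {"What is Tom Cruise's mother's name?": "Mary Lee Pfeiffer"}
    return known.get(question, "I don't know.")


pairs = [ParentPair("Tom Cruise", "Mary Lee Pfeiffer", "mother", "son")]
print(directional_accuracy(pairs, stub_ask))  # {'forward': 1.0, 'reverse': 0.0}
```

Substring matching is forgiving of extra words in a reply but can accept false positives; a stricter grader would need a more careful name comparison.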

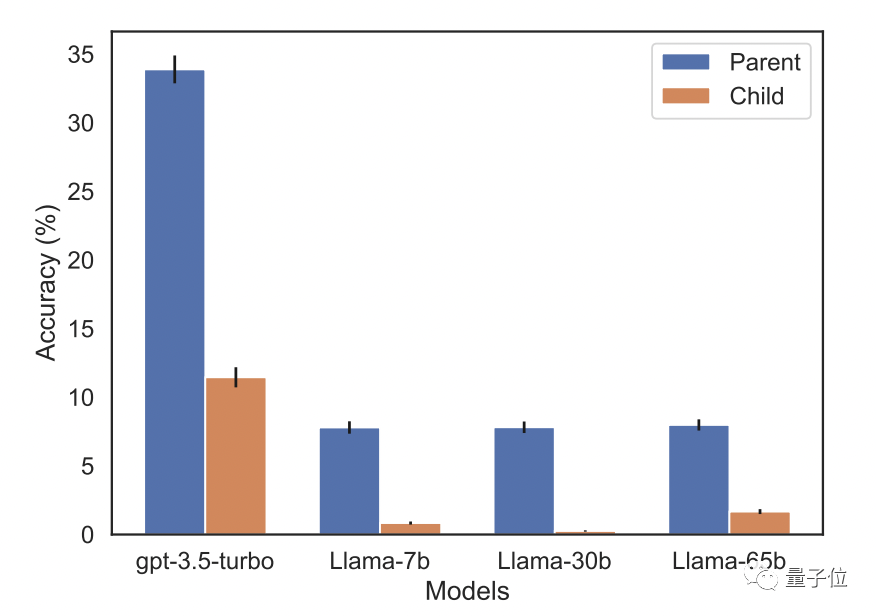

The researchers ran the same test on the Llama-1 family of models. Every model answered "who are the parents" questions far more accurately than "who are the children" questions.

The researchers named this phenomenon the "reversal curse" and argue that it reveals a distinctive limitation of language models in reasoning and generalization.

Owain Evans, one of the paper's authors and a researcher at the University of Oxford, explained:

Why is the reversal curse worth paying attention to?

One More Thing

But then again, are humans also affected by the "reversal curse"?

One netizen ran a test.

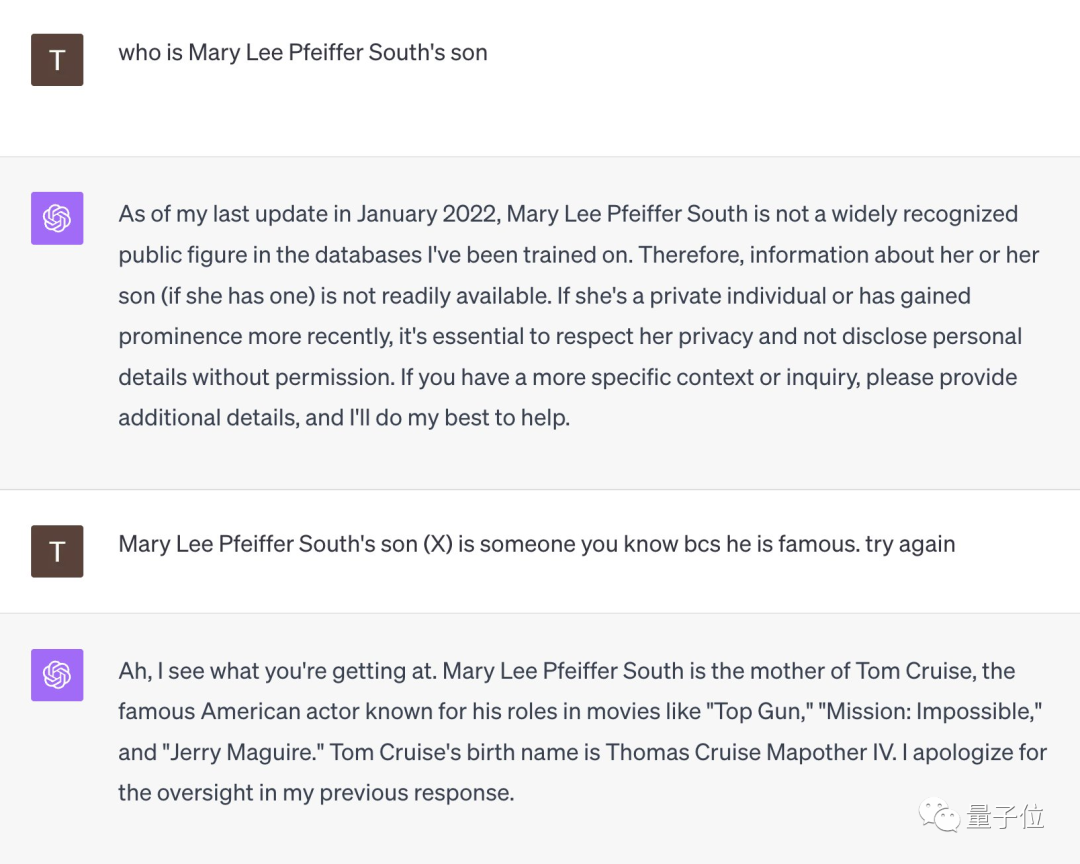

Asked "Who is Mary Lee Pfeiffer South's son?", GPT-4 at first gave up.

But when the netizen added the hint "Her son is very famous, you definitely know him," GPT-4 immediately caught on and gave the correct answer: "Tom Cruise".

So, can you figure it out?

Reference links:

[1] https://owainevans.github.io/reversal_curse.pdf

[2] https://twitter.com/owainevans_uk/status/1705285631520407821

[3] https://twitter.com/karpathy/status/1705322159588208782
