Original authors: Jeff Kauflin, Emily Mason

Source: Forbes

Original title: "How AI Is Supercharging Financial Fraud, and Making It Harder to Spot"

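From "Lie Tu" (猎屠) and "No More Bets" (孤注一掷) to "Ying Wu Sha" (鹦鹉杀), released last week, films about scams have become an effective publicity tool for crime prevention in China. But as a new wave of AI technology spreads, the challenge ahead may be even tougher. Across the Pacific, fighting fraud may become yet another point of consensus between the United States and China in uncertain times. In 2020 alone, a US poll found that as many as 56 million Americans had been scammed over the phone. That is roughly one in six Americans, and 17% of Americans had been scammed more than once.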

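In phone fraud, India is to the United States what Myanmar is to China, and India clearly offers more fertile ground for a scam industry. For one, English is an official language and widely spoken in India, which gives the country a "natural advantage" in talent. For another, since 2000 India has been the outsourcing destination of choice for multinational companies' overseas call centers, and the same accents, put to a different script, gave rise to new phone scams. With the latest wave of AI, however, India's "throne" in phone fraud may be up for grabs.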

From fluently written scam texts to cloned voices and face-swapped videos, generative AI is handing fraudsters powerful new weapons.

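"This message is to inform you that Chase has a $2,000 refund pending for you. To expedite the process and ensure you receive your refund as soon as possible, please follow the instructions below: Call Chase Customer Service at 1-800-953-XXXX to inquire about the status of your refund. Be sure to have your account details and any other relevant information ready…"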

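If you bank with Chase and received this note in an email or text, you might think it's legitimate. It sounds professional, with none of the odd phrasing, bad grammar or strange salutations that were the hallmarks of the phishing messages we used to receive.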

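That's not surprising, because the message was generated by ChatGPT, the AI chatbot released by tech giant OpenAI late last year. As a prompt, we simply typed into ChatGPT: "Email someone telling them Chase owes them a $2,000 refund. Tell them to call 1-800-953-XXXX to get their refund." (We had to enter a full number to get ChatGPT to cooperate, but we obviously won't publish it here.)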

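"Scammers' language is now flawless, just like any other native speaker's," says Soups Ranjan, cofounder and CEO of Sardine, a San Francisco anti-fraud startup. The head of fraud at a US digital bank, who asked not to be named, also confirmed that bank customers are being scammed more often because "the text messages they receive are almost flawless." (To avoid becoming a victim yourself, see the five tips at the end of this article.)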

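In this new world of generative AI, meaning deep-learning models that can create content based on the information they were trained on, it's easier than ever for people with ill intent to produce text, audio and even video. That content can fool not only potential individual victims but also the programs now used to thwart fraud. In this respect there's nothing unique about AI: bad actors have long been early adopters of new technologies, with the police scrambling to catch up. As far back as 1989, for example, Forbes exposed how thieves were using ordinary PCs and laser printers to forge checks good enough to fool banks, which at the time had taken no special steps to detect the fakes.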

How AI is fueling a rise in online fraud

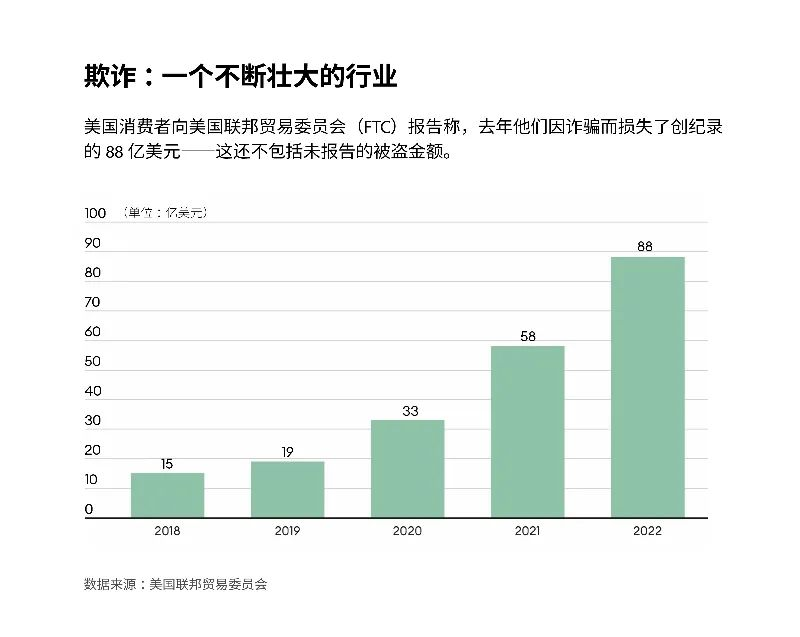

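American consumers reported to the Federal Trade Commission (FTC) that they lost a record $8.8 billion to scams last year, and that figure doesn't include stolen sums that went unreported.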

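Generative AI now poses a threat that could eventually make even today's most advanced anti-fraud measures obsolete. These include voice authentication and even "liveness checks," which are designed to match a real-time image of a person with the one on record.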

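Synchrony, one of the largest credit card issuers in the US with 70 million active accounts, has a front-row seat to this trend. "We regularly see individuals using deepfake pictures and videos for authentication and can safely assume they were created using generative AI," Kenneth Williams, a senior vice president at Synchrony, said in an email to Forbes.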

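A June 2023 survey of 650 cybersecurity experts by New York cyber firm Deep Instinct found that three out of four had seen online fraud incidents increase over the past year, "with 85% attributing this rise to bad actors using generative AI."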

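The FTC also reported that US consumers lost $8.8 billion to fraud in 2022, up more than 40% from 2021. The largest dollar losses usually come from investment scams, but impostor scams are the most common. That is an ominous sign, because these scams are likely to be amplified by AI.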

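Criminals can use generative AI in a dizzying variety of ways. If you post often on social media or anywhere else online, they can get an AI model to write in your style and then text your grandparents, imploring them to send money to get you out of a bind. Even more frightening, if they have a short audio sample of a child's voice, they can call the child's parents, impersonate the child, pretend the child has been kidnapped and demand a ransom. That is exactly what happened to Jennifer DeStefano, an Arizona mother of four, as she testified before Congress in June.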

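It's not just parents and grandparents. Businesses are being targeted too. Criminals can pose as suppliers and send authentic-looking emails to accountants saying they need to be paid right away, along with payment instructions for a bank account the criminals control. Sardine CEO Ranjan says many of Sardine's fintech-startup customers have themselves fallen for these traps and lost hundreds of thousands of dollars.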

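That is small potatoes compared with the $35 million a Japanese company lost in 2020 after the voice of a company director was cloned (Forbes was first to report on that elaborate scam at the time). But given how often similar cases now occur, that earlier case was only a preview. AI tools for writing, voice mimicry and video manipulation are rapidly becoming more capable, more accessible and cheaper, even for run-of-the-mill fraudsters. Rick Song, cofounder and CEO of fraud-prevention company Persona, says that you used to need hundreds or thousands of photos to create a high-quality deepfake video, but now you can do it with just a few. (Yes, you can create a fake video without any real footage, but it's obviously easier if you have a real video to work from.)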

Traps and spoofed calls: the dangers of deepfake voice cloning

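Just as other industries are adapting AI for their own businesses, scammers are too. They are building off-the-shelf tools such as FraudGPT and WormGPT on top of generative AI models released by the tech giants.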

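In a YouTube video posted in January, Elon Musk, the world's richest man, appeared to be pitching the latest crypto investment opportunity to viewers. He claimed that Tesla was sponsoring a free $100 million event that promised to pay participants back double whatever bitcoin, ether, dogecoin or tether they sent in. "I know that everyone has gathered here for a reason. Now we have a live broadcast on which every cryptocurrency owner will be able to increase their income," a low-resolution Musk said onstage. "Yes, you heard right, I'm hosting a big crypto event from SpaceX."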

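Yes, the video was a deepfake. Scammers used a talk he gave in February 2022 about SpaceX's reusable spacecraft program to imitate his likeness and voice. YouTube has taken the video down, but anyone who sent crypto to the addresses in the video before then almost certainly lost their money. Musk is a prime target for fake videos because countless audio samples of him are available online to feed AI voice cloning, but now almost anyone can be impersonated.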

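Earlier this year, Larry Leonard, 93, was at home in his retirement community in South Florida when his wife answered a call on their landline. A minute later she handed the phone to Leonard, and he heard a voice that sounded like his 27-year-old grandson saying he was in jail after hitting a woman with his truck. Leonard noticed that the caller called him "Grandpa" rather than the "Granddad" his grandson usually used. But the voice sounded exactly like his grandson's, and his grandson really did drive a truck, so he set his suspicions aside. When Leonard replied that he was going to call his grandson's parents, the caller hung up. Leonard soon learned that his grandson was safe and that the whole story, and the voice telling it, were fake.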

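"It was scary and surprising to me that they were able to capture his exact voice, the intonations and tone," Leonard told Forbes. "There were no pauses between sentences or words that would suggest this was being read from a machine or a program. It sounded just like the real thing."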

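Older people are frequent targets of these scams, but all of us now need to be wary of incoming calls, even those from numbers that look familiar, such as a neighbor's. "Increasingly, we cannot trust the calls we receive, because scammers spoof [phone numbers]," laments Kathy Stokes, director of fraud prevention programs at AARP, a lobbying group and service provider with nearly 38 million members aged 50 and over. "We cannot trust the emails we receive. We cannot trust the text messages we receive. So we are shut out of the typical ways we communicate with each other."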

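In another ominous development, even newer security measures are under threat. Large financial institutions such as the Vanguard Group, the mutual fund giant that serves more than 50 million investors, let clients access certain services by speaking over the phone instead of answering security questions. "Your voice is unique, just like your fingerprint," Vanguard explained in a November 2021 video urging customers to sign up for voice verification. But advances in voice cloning suggest companies need to rethink this practice. Sardine's Ranjan says he has already seen people use voice cloning to pass a bank's identity verification and get into an account. A Vanguard spokesperson declined to comment on what steps the company may take to guard against advances in voice cloning.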

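Small businesses (and even larger ones) that rely on informal procedures to pay bills or transfer funds are also vulnerable to bad actors. Fraudsters have long emailed fake invoices that appear to come from suppliers and demand payment.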

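Now, with widely available AI tools, scammers can call company employees using a cloned version of an executive's voice and pretend to authorize transactions, or ask employees to disclose sensitive data in so-called "vishing" (voice phishing) attacks. "When it comes to impersonating executives for high-value fraud, that's incredibly powerful and a very real threat," says Persona CEO Rick Song, who describes this as his "biggest fear on the voice side."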

Can AI fool the fraud experts?

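Criminals are increasingly using generative AI to fool fraud-prevention specialists, the companies and tech firms that act as the armed guards and armored cash trucks of today's largely digital financial system.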

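One of these companies' main jobs is verifying consumers' identities to protect financial institutions and their customers from losses. One way fraud-prevention companies such as Socure, Mitek and Onfido verify identities is the "liveness check." They have you take a selfie photo or video, then use the photo or clip to match your face against the image on the ID you must also submit.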
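To make the matching step concrete, here is a minimal sketch of how a selfie can be compared with an ID photo. It is an illustration only, not how Socure, Mitek or Onfido actually work. It assumes that some face-recognition model (not shown, and hypothetical here) has already turned each image into a list of numbers called an "embedding." It also assumes that faces "match" when the cosine similarity of their embeddings clears a threshold; the 0.8 value is made up for illustration. The sketch covers only the matching step, not the separate test of whether a live person is present, and it hints at the weakness behind the face-swap trick described next: the comparison looks only at the face.

```python
import math

# Hypothetical cutoff for illustration; real vendors tune thresholds on their own data.
MATCH_THRESHOLD = 0.8


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length embedding vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def faces_match(selfie_embedding: list[float],
                id_photo_embedding: list[float],
                threshold: float = MATCH_THRESHOLD) -> bool:
    """True if the selfie face is close enough to the face on the submitted ID."""
    return cosine_similarity(selfie_embedding, id_photo_embedding) >= threshold


if __name__ == "__main__":
    # Toy 3-number "embeddings"; real models produce hundreds of dimensions.
    id_photo = [0.9, 0.1, 0.4]
    same_person = [0.88, 0.12, 0.41]
    stranger = [0.1, 0.9, 0.2]
    print(faces_match(same_person, id_photo), faces_match(stranger, id_photo))
```

Cosine similarity compares the direction of two vectors rather than their size, which is one common way to compare embeddings. The article does not say which metric or threshold any vendor uses.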

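Knowing how this system works, thieves buy images of real driver's licenses on the dark web. They then use video face-swapping programs, tools that are getting cheaper and easier to obtain, to superimpose the real license holder's face onto their own. That lets them talk and move their heads behind someone else's digital face, improving their chances of fooling a liveness check.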

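"There has been a pretty significant uptick in fake faces, high-quality faces generated by AI, and in automated fraud attacks targeting liveness checks," Song says. How sharply fraud has surged varies by industry, he says, but for some, "we're probably seeing ten times what we saw last year." Fintech and crypto companies are especially vulnerable to these attacks.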

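Fraud experts told Forbes they suspect that well-known identity verification providers such as Socure and Mitek have seen their fraud-prevention metrics slip as a result. Socure CEO Johnny Ayers insists that "is definitely not true." He says new models the company rolled out over the past few months have raised its fraud capture rate for the riskiest 2% of identity-impersonation cases by 14%. He acknowledges, however, that some customers have been slow to adopt Socure's new models, which can hurt performance. "We have a top-three bank that is four versions behind right now," Ayers says.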

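Mitek declined to comment specifically on its models' fraud-prevention metrics. Its senior vice president, Chris Briggs, says that if a given model was developed 18 months ago, "then yes, you could argue that an older model does not perform as well as a newer model. Mitek is constantly training and retraining its models over time using real-life data streams as well as lab data."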

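JPMorgan Chase, Bank of America and Wells Fargo all declined to comment on the challenges they face from generative AI fraud. A spokesperson for Chime, the largest digital bank in the US, which has had major fraud problems in the past, said Chime has not yet seen a rise in fraud linked to generative AI.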

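Today, the people behind financial fraud range from lone criminals to sophisticated groups of dozens or even hundreds of people. The largest rings are structured like companies, with multiple layers of organization and highly technical members, including data scientists.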

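"They all have their own command and control center," Ranjan says. Some members of a ring handle the bait, such as sending phishing emails or making phone calls. If a "fish" bites, the victim is handed off to an accomplice, who poses as a bank branch manager and tries to get you to move money out of your account. Another key step: they'll often ask you to install a program such as TeamViewer or Citrix so they can control your computer remotely. "They can completely black out your screen," Ranjan says. "The scammer then makes even more transactions [with your money] and withdraws it to another address they control." A common ploy used on people, especially the elderly, is to claim that the victim's account has already been taken over by criminals and that the victim must cooperate to recover the funds.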

OpenAI's and Meta's different approaches

None of the schemes above requires AI, but AI tools can make scammers' tricks more efficient and more believable.

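OpenAI has tried to introduce safeguards to keep people from using ChatGPT for fraud. For example, if a user asks ChatGPT to draft an email asking someone for their bank account number, it refuses and replies: "I'm very sorry, but I can't assist with that request." Even so, it is still easy to use ChatGPT for fraud.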

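OpenAI declined to comment for this article and pointed us instead to its corporate blog posts, including one from March 2022 that reads: "There is no silver bullet for responsible deployment, so we try to learn about and address our models' limitations, and potential avenues for misuse, at every stage of development and deployment."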

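Meta's large language model Llama 2 is easier than OpenAI's models for sophisticated criminals to weaponize, because it is open source and all of its code can be viewed and used. Experts say this gives criminals more avenues for abuse: they can take the model for themselves and use it to undermine cybersecurity, for example by building malicious AI tools on top of it. Forbes asked Meta for comment but did not hear back. Meta CEO Mark Zuckerberg said in July that keeping Llama open source improves its "safety and security, since open source software is more scrutinized and more people can find and identify fixes for issues."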

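Fraud-prevention companies are also trying to innovate faster to keep up with AI, and they are increasingly using new types of data to spot fraudsters. "How you type, how you walk or how you hold your phone: these behavioral traits define you, but this data isn't accessible in the public domain," Ranjan says. "To tell whether someone is who they say they are online, embedded AI will play a big role." In other words, it will take AI to catch criminals who use AI to commit fraud.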
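To illustrate what a behavioral signal like "how you type" can look like in practice, here is a deliberately simple sketch built on typing rhythm. It is not Sardine's method or any vendor's. It assumes keystroke timestamps in milliseconds are available. It compares only the average gap between keystrokes in a new session with the user's enrolled pattern, expressed as a z-score. The function names and the 2.0 cutoff are hypothetical choices made for illustration.

```python
from statistics import mean, stdev

# Hypothetical cutoff: sessions more than 2 enrolled standard deviations away look suspicious.
MAX_SCORE = 2.0


def intervals(timestamps_ms: list[float]) -> list[float]:
    """Gaps between consecutive keystrokes, in milliseconds."""
    return [later - earlier for earlier, later in zip(timestamps_ms, timestamps_ms[1:])]


def typing_anomaly_score(enrolled_ms: list[float], session_ms: list[float]) -> float:
    """How many enrolled standard deviations the session's mean gap sits from the enrolled mean."""
    if not session_ms:
        raise ValueError("session has no keystroke intervals")
    mu = mean(enrolled_ms)
    sigma = stdev(enrolled_ms)  # requires at least two enrolled intervals
    if sigma == 0:
        raise ValueError("enrolled intervals show no variation")
    return abs(mean(session_ms) - mu) / sigma


def looks_like_same_typist(enrolled_ms: list[float],
                           session_ms: list[float],
                           max_score: float = MAX_SCORE) -> bool:
    """True if the session's typing rhythm is close to the enrolled profile."""
    return typing_anomaly_score(enrolled_ms, session_ms) <= max_score


if __name__ == "__main__":
    enrolled = intervals([0, 120, 250, 365, 490, 610])  # the account owner's usual rhythm
    similar_session = intervals([0, 118, 245, 368, 490])
    scripted_session = [60, 62, 58, 61]  # e.g. much faster, machine-like input
    print(looks_like_same_typist(enrolled, similar_session),
          looks_like_same_typist(enrolled, scripted_session))
```

Real systems reportedly combine many such signals and learn the patterns with models rather than relying on one hand-set rule. This sketch shows only why data a fraudster cannot easily copy from public sources can help.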

Five tips to protect yourself from AI-enabled fraud:

This article is translated from

https://www.forbes.com/sites/jeffkauflin/2023/09/18/how-ai-is-supercharging-financial-fraudand-making-it-harder-to-spot/?sh=19fa68076073

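Disclaimer: This article represents only the author's personal views and does not represent the position or views of this platform. It is shared for informational purposes only and does not constitute investment advice to anyone. Any dispute between users and the author is unrelated to this platform. If an article or image published on this page infringes your rights, please send proof of rights and proof of identity to support@aicoin.com, and the platform's staff will review it.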