Source: Li Zhiyong

Image source: Generated by Wujie AI

Large models hallucinate (this article calls these illusions), and it shows up in various ways, such as output that conflicts with facts. There are many causes, such as a lack of relevant knowledge or misalignment, and much remains unclear. For applications, however, the most crucial point is none of these: illusions appear to be something that cannot be resolved in the short term, even by OpenAI. Anyone deploying large models therefore has to treat illusions as a precondition and an invariant. Once they are treated that way, it turns out that, as with humans, illusions are not all bad.

Following the trend and against the trend

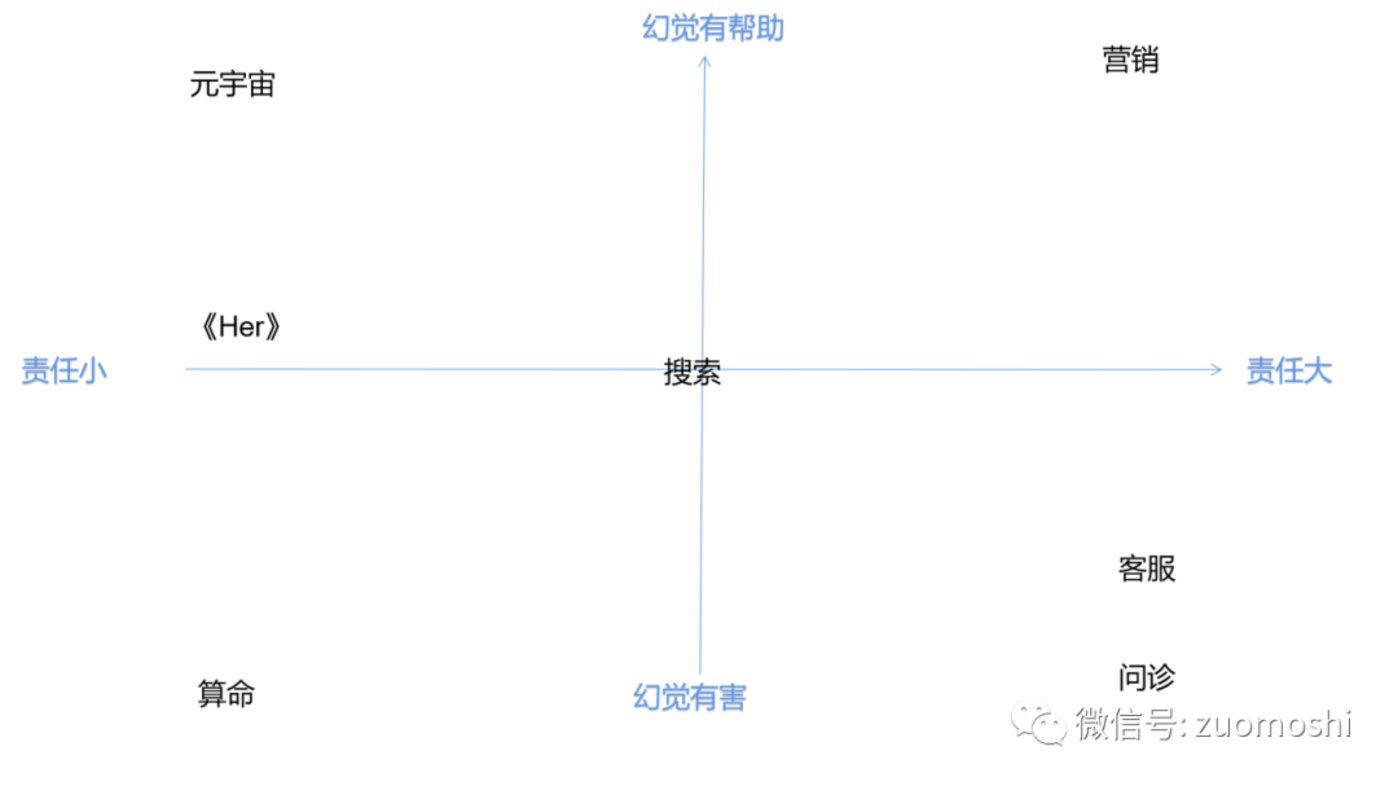

We can place various artificial intelligence entities on a simple coordinate system, with the helpfulness or harmfulness of illusions to products as the vertical axis, and the responsibility for problems as the horizontal axis. It might look something like this:

The exact positions of the different artificial intelligence entities may be disputed, but the extreme cases should be uncontroversial.

For the metaverse, which is essentially an advanced game, unconventional behavior can actually stimulate the diversity of game narratives and become part of the rich world content. However, for medical consultation, it is very troublesome. If it operates outside the existing medical knowledge framework and the patient's condition worsens, it is not only harmful, but also has serious consequences. There are many more real-world scenarios similar to medical consultation, including customer service and tax consulting.

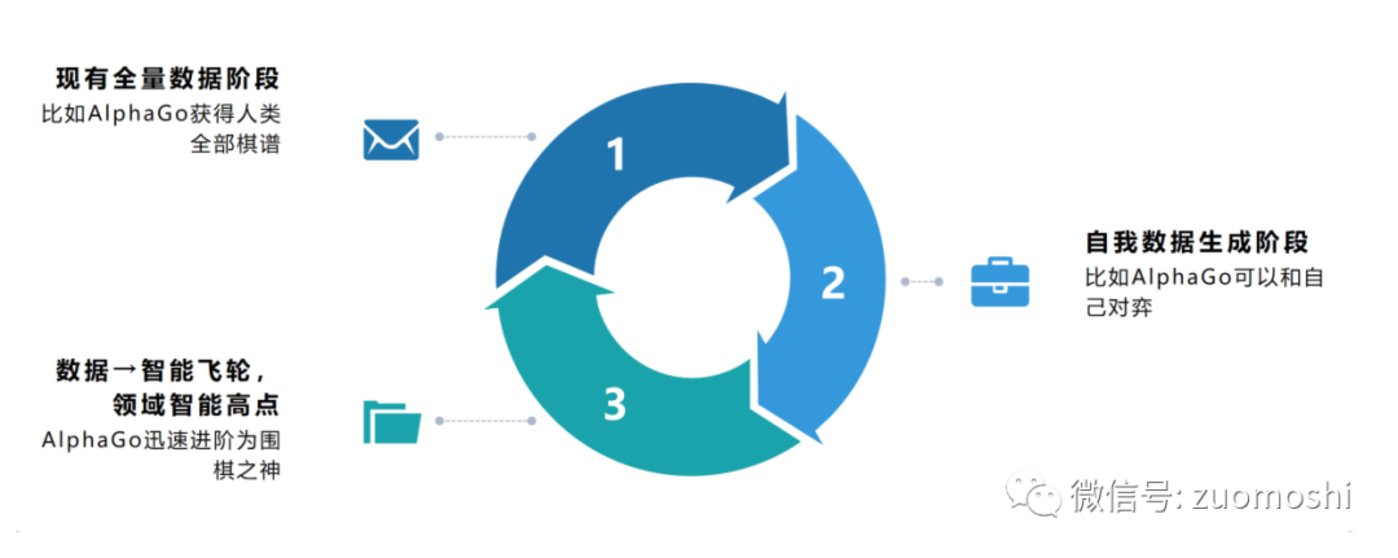

In the article "Seeking the Intelligent Flywheel: From Data Exhaustion to Multimodal and then to Self-Generation," we mentioned that apart from AlphaGo, no one has yet set the intelligent flywheel in motion. Once a flywheel starts turning, it will inevitably produce a "god" of its specific domain, so anyone who cares about artificial intelligence applications wants to know where the next intelligent flywheel might appear, as shown in the following figure:

This can also be found in the quadrant chart above: The more it leans towards the metaverse, the easier it is. Illusions can create new data at a low cost of responsibility, integrate new data into the scene, and then use it as fuel to drive the flywheel.

Positioning in the quadrant

The positioning in the quadrant is crucial for the implementation of AI products.

To a considerable extent, this position determines your operating costs and even the speed of operation. And the constraints it faces are very rigid and cannot be changed.

We usually think of a product as a set of features: what it can and cannot do, its functional and performance parameters, and, at a higher level, the brand, which derives from aesthetic preferences. But this picture is incomplete. At a deeper level, a product is also a convergence point for a system of rights, responsibilities, and benefits. This is less evident in consumer products than in business-to-business products.

This was rarely mentioned in the past because there was only one type of intelligent entity in the economic system: humans. The entire economic system was determined by the rights, responsibilities, and benefits of individuals and legal entities. But now, this system has encountered new challenges. The rise of artificial intelligent entities has caused cracks in this system of rights, responsibilities, and benefits, and it operates sluggishly.

The simplest example is the frequently mentioned autonomous driving. The emergence of autonomous driving has led to a new complex rebalancing of rights, responsibilities, and benefits between users, manufacturers, and autonomous driving service providers. (Previously, the responsibility for accidents was mainly on the user, who was responsible for driving. After using autonomous driving, this is no longer the case.)

This is very troublesome, because artificial intelligent entities are not themselves subjects of rights, responsibilities, and benefits, yet they seem to have to bear the corresponding responsibilities. This unspoken expectation has led every company to try to use technology to hedge against certain bad outcomes, so that it seems they might never happen. In reality, this is almost impossible.

The rebalancing of rights, responsibilities, and benefits with users and with the client (the first party to the contract) actually determines where the company's limited resources are allocated. (In effect, it forms a general social cost of occupying a specific position.)

In situations where the input-output is not good, and yet it still has to be done, isn't it embarrassing?

The core reason for this embarrassment is that technology cannot completely hedge against rights and responsibilities.

This is partly because technology always has a maturation process, but it is likely more crucial that with digitalization and intelligence, the issues of rights and responsibilities are magnified.

When results are laid out clearly in numbers, every customer hopes for unlimited problem-solving, an expectation that was previously held in check.

We can use customer service as an example:

When hundreds or even thousands of people are employed to do customer service, the service will inevitably have a certain error rate. But people can generally accept a certain level of mistakes. Everyone knows that people make mistakes, and because customer service personnel are not easy to find, there is a certain tolerance for this error rate. If a customer service representative provides poor service and loses an order, at most the representative will be dismissed or fined. It is difficult to demand compensation for the losses caused by customer service.

When customer service is replaced by artificial intelligent entities, the situation remains the same, but the relationship between rights and responsibilities quietly changes.

Even if the error rate of the artificial intelligent entity is lower than that of the previous human service, the client still has every motivation to hold the company providing the artificial intelligent entity service accountable. The technology company behind it then has to do everything possible to ensure that the artificial intelligent entity does not make mistakes.

This is the use of technology to hedge against rights and responsibilities.

The essence is to use technology to solve a nearly infinite set of domain problems. The further this goes, the messier it gets, because the problems cannot be resolved. The artificial intelligence industry has been repeating this story ever since smart speakers first took off.

In reality, even long-established search engines could not meet a zero-mistake standard. If, for example, a certain type of content had to be eliminated for good after appearing once, companies like Google and Baidu would have failed countless times.

However, the tolerance of this system of rights and responsibilities has many causes, including historical, cultural, and commercial realities, and it is very rigid and unlikely to change. If it cannot change, then it is not well suited to pure artificial intelligent entities. The compromise that comes to mind is to put people back in: even if the person does nothing and is there only for show, the responsibility of the job remains theirs, and the artificial intelligent entity serves them. Seen this way, choice and positioning become crucial, because the system of rights and responsibilities is itself a matter of cost, direction, and customer satisfaction (the saying "Thinking is the problem, doing is the answer," associated with Lei Jun, does not hold here; it is not a matter of just doing it). Given that illusions are part of intelligence, running into a domain with a very heavy system of rights and responsibilities is a waste of effort.

From this perspective, we can elevate the discussion and talk about the application model of new technologies.

Application model of new technologies

When discussing the application model, let's first return to human beings themselves.

The core subsystems of human civilization (politics, economy, and culture) all begin as something virtual before they become real, and the real then feeds back into the virtual. Going back 300 years, there was no separation of powers, no freedom and democracy, and no socialism, and of course there were no cars, high-speed trains, or bicycles.

Imagination supplies the idea, together with a certain logical rationality. Then another group of people finds the connection between that imagination, physical laws, and commercial competition, and imagination shines into reality. Trains, electricity, cars, mobile phones, and so on all came into the world this way.

Then, let's go back to February 12, 1912, the abdication of Puyi, and see the role of imagination.

Many of the products that have a profound impact on us today were actually invented before 1912. They include steam engines, automobiles, electricity, telephones, cameras, and batteries, and the people involved include Nobel, Edison, Tesla, and Ford. These products initially faced challenges: steam-powered cars frequently exploded, and Edison fiercely attacked alternating current as dangerous and liable to electrocute people. However, a relatively tolerant environment allowed these products to survive.

Had that environment been governed by figures like Empress Dowager Cixi, who believed that trains would disrupt the feng shui of their ancestors, such diversity would not have been possible. The Qing Dynasty's suppression of thought, such as the literary inquisition, was effective in restraining ideas and to some extent achieved the stability it desired. But stability came at the cost of very poor efficiency across the whole system, and the abdication of Puyi in 1912 can be seen as a result of that poor efficiency.

What does this teach us?

New technologies are best started in areas with lower general social costs. The looser the system of rights and responsibilities, the lower the cost of trial and error, and the easier it is for immature technology-driven products to take off.

The overall general social cost is very complex, and will not be discussed here. (It's about how to avoid believing that trains will disrupt feng shui when they appear.)

For individual innovators, it is obviously necessary to avoid complex systems of rights and responsibilities.

Because if the technology is immature and still needs to hedge against the risks of complex rights and responsibilities, it will definitely be difficult and unrewarding.

So, what will it look like specifically for artificial intelligence?

At this point in time, the leniency of the environment may not seem critical, and a complex system of rights and responsibilities may even be more likely to generate cash flow. But leniency may become more critical as we move forward, because the potential intelligent flywheel will come into play. This is really the difference between 1.01 to the power of 99 and 0.99 to the power of 99.
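The arithmetic behind this comparison can be checked with a short sketch. The function name `compound` and the reading of the figures as a 1% gain or loss per step are illustrative assumptions, not something the article specifies.

```python
def compound(rate_per_step: float, steps: int) -> float:
    """Return the overall growth factor after `steps` rounds,
    each multiplying the previous value by (1 + rate_per_step)."""
    return (1.0 + rate_per_step) ** steps


STEPS = 99  # the exponent used in the text

gain = compound(0.01, STEPS)   # 1.01 ** 99: slightly better each step
loss = compound(-0.01, STEPS)  # 0.99 ** 99: slightly worse each step

print(f"1.01^{STEPS} = {gain:.3f}")
print(f"0.99^{STEPS} = {loss:.3f}")
print(f"ratio      = {gain / loss:.2f}")
```

Under these assumptions the first factor comes out at roughly 2.68 and the second at roughly 0.37, so two paths that differ by only a couple of percentage points per step end up about seven times apart after 99 steps.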

Kevin Kelly expressed a viewpoint in "What Technology Wants": even if the benefits brought by technology are only slightly greater than the drawbacks, the cumulative effect will be very significant.

The same goes for artificial intelligent entities. This slight difference may be the difference in whether the intelligent flywheel can start turning later on.

In simple terms, the application model specific to artificial intelligence is this: find domains where illusions are beneficial and where the system of rights and responsibilities to hedge against is less complex, and then grow from the inside out rather than forcing growth.

Conclusion

From this perspective, illusions are indeed intelligence, at least the driving force behind the intelligent flywheel. Many times, it is precisely illusions that give us a sense of intelligence. Completely removing illusions while maintaining intelligence would truly require achieving a super singular entity (the ultimate wisdom of the Krell people), which is a great ideal. However, I believe that if we assume that artificial intelligence cannot build a complete world model, then removing these illusions is impossible. Therefore, instead of relying on technology to solve this problem, it is better to adjust the application model based on technological progress.
