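I've been looking at a lot of AI projects back to back lately, and I keep asking myself which #AI + #Web3 projects address a real problem and which address a fake one, and how to separate the two and pan out the gold. I recently read "The Modern AI Stack: Design Principles for the Future of Enterprise AI Architectures," and it gave me a lot to think about.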

In this insightful article, Menlo Ventures gives a comprehensive overview of the modern AI stack and how it has evolved.

I. Key insights:

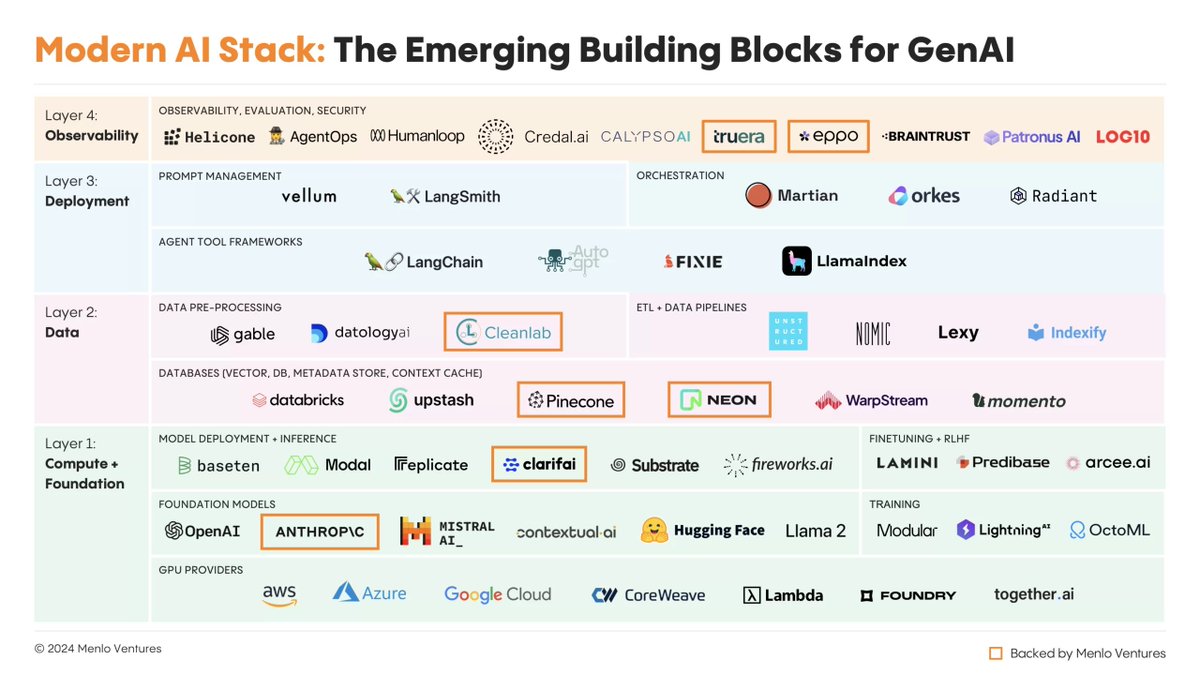

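1. Like the modular approach we know from Web3, AI is layered. The article defines the AI stack in four layers: compute and foundation models, data, deployment, and observability and evaluation. Each layer contains essential infrastructure components, similar to SDKs, that let enterprises build and deploy AI applications efficiently.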

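2. AI is maturing and gradually moving toward the application layer, so more and more specialized vertical AI applications will appear (for finance, law, investment research, and so on). From a Web3 founder's point of view, building products is a better bet than building base models or infrastructure. I've recently come across some Web3 projects building product applications, such as @AlvaApp and @ScopeProtocol, as well as stock-focused AI such as @finchat_io. All of them are doing quite well. If the token economics work, whoever wins user traffic wins the market, and valuations need not be lower than those of compute or data-ownership projects.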

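3. Modular AI building blocks are increasingly mature, and Web2 AI infrastructure is already well developed, so there is no need to reinvent the wheel and rebuild it all in Web3, especially at the compute and model layer. Teams without machine learning expertise can already build and deploy AI products well. This shift has produced new building-block solutions for every stage and every pain point of the AI maturity curve, and they serve as the basic infrastructure for production AI systems.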

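4. Most AI spending today goes to inference rather than training: nearly 95% of AI spend goes to runtime rather than pre-training. This trend underscores the importance of efficient, scalable inference infrastructure in the modern AI stack. When it comes to judgment, though, humans still far outperform AI (autonomous driving is one example), especially in catching and correcting errors in inference results. How to address that with Web3 tokenization is a promising direction.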

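5. Enterprises are increasingly adopting a multi-model approach, running several models together to get better performance. This removes the dependence on a single model, gives more control, and lowers cost. @opentensor is already doing this, and I believe more and more Web3+AI projects will emerge to tackle the high cost of AI models.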

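6. Retrieval-augmented generation (RAG) has become the main architectural approach for giving foundation models enterprise-specific "memory." In production today it outpaces other customization techniques such as fine-tuning, low-rank adaptation, and adapters. RAG is an excellent landing point for the many Web3+AI projects working on data, with good application scenarios.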

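7. The rise of the modern AI stack has democratized AI development: mainstream developers can now accomplish in days or weeks what used to take years of basic research and sophisticated machine learning expertise. The second half of the AI game will most likely be an era of fat applications, with AI apps springing up across many fields. As in the image below 👇, an AI dating coach built on ChatGPT earns $190,000 a month with 330,000 monthly downloads. It uses no new technology; it is simply a wrapper on top of ChatGPT.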

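8. As the modern AI stack keeps evolving, we can expect a next generation of AI applications that use more advanced RAG techniques, along with fine-tuning, a proliferation of task-specific models, and new tools for observability and model evaluation.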
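To make the RAG idea in point 6 concrete, here is a minimal sketch of the retrieve-then-prompt pattern. It is not taken from the Menlo Ventures article. As loudly stated assumptions: the function names, the sample documents, and the word-count cosine similarity are all made up for illustration, and a production system would typically swap in an embedding model and a vector database instead of word counts.

```python
import math
import re
from collections import Counter

def vectorize(text):
    """Toy stand-in for an embedding: lowercase word counts (an assumption)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(query, documents, k=2):
    """Return up to k documents most similar to the query, best first."""
    q = vectorize(query)
    scored = [(cosine(q, vectorize(d)), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no words with the query at all.
    return [d for score, d in scored[:k] if score > 0]

def build_prompt(query, documents, k=2):
    """Assemble the text that would be sent to a foundation model."""
    lines = ["Answer using only the context below.", "Context:"]
    lines += [f"- {d}" for d in retrieve(query, documents, k)]
    lines.append(f"Question: {query}")
    return "\n".join(lines)

# Hypothetical enterprise "memory" for the example.
DOCS = [
    "Refund requests must be filed within 30 days of purchase.",
    "The staking contract pays rewards every epoch.",
    "Support is available on weekdays from 9am to 5pm.",
]

if __name__ == "__main__":
    print(build_prompt("When are refund requests due?", DOCS, k=1))
```

The point of the pattern is that the model itself is untouched: the enterprise-specific knowledge lives in the document store and is injected into the prompt at request time, which is why the data layer matters so much in this architecture.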

II. Notable figures:

1. In 2023, enterprises spent more than $1.1 billion on the modern AI stack, making it the largest new market in generative AI and a huge opportunity for startups.

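2. There are 30 million developers and 300,000 ML engineers worldwide, but only 30,000 ML researchers. As for those innovating at the very frontier of ML, the authors estimate that perhaps only 50 researchers in the world know how to build a GPT-4 or Claude 2 level system.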

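3. Nearly 70% of AI adopters use human review of outputs as their primary evaluation technique, which highlights the need for new tools in observability and evaluation. This is an excellent fit for Web3 tokenization, both for data labeling early on and for AI evaluation later. Data-labeling outsourcers such as Infolks, iMerit, and Playment are turning India into the "data back office" of global AI companies, and this could be moved onto Web3 entirely.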

III. Some modest suggestions for AI+Web3 founders:

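1. Focus on the data layer: as AI development shifts to retrieval-augmented generation (RAG) as the main architectural approach, Web3+AI projects that provide robust data-layer infrastructure (such as vector databases, data pre-processing engines, and ETL pipelines) will have an ideal narrative and plenty of room for imagination.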

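2. Embrace the multi-model paradigm: build AI+Web3 infrastructure and platforms that let projects easily integrate and orchestrate multiple models behind a standardized API, so that prompts can be routed to the best-performing model for each use case, and use Web3 tokenization to solve the problem of democratizing access worldwide.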

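3. Innovate in observability and evaluation: as enterprises adopt AI more widely, demand is growing for tools that can monitor and evaluate the performance of their AI systems. Web3 projects that can offer novel solutions here will have a big opportunity.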

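4. Prepare for serverless: as the modern AI stack moves toward serverless architectures, Web3 projects that can provide serverless solutions for individual components of the stack (such as vector databases, caching, and inference) will be well placed to meet the needs of the current AI narrative. Web3 has a natural advantage here.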

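5. Explore advanced RAG techniques: AI projects that can develop and commercialize next-generation RAG techniques (such as chain-of-thought reasoning, tree-of-thought reasoning, reflexion, and rules-based retrieval) will be at the forefront of the next phase of the modern AI stack.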
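The multi-model suggestion in item 2 above, routing each prompt to the best-performing model for its use case, can be sketched roughly as follows. Everything here is an assumption for illustration: the model names, the per-use-case quality scores, and the cost figures are invented, and the tie-breaking rule (prefer the cheaper model when scores are equal) is just one possible design choice, not something the Menlo Ventures article prescribes.

```python
from dataclasses import dataclass, field

@dataclass
class ModelProfile:
    """Hypothetical description of one model behind a shared API."""
    name: str
    cost_per_1k_tokens: float  # invented numbers, for illustration only
    scores: dict = field(default_factory=dict)  # use case -> quality in [0, 1]

def route(use_case, models):
    """Pick the model with the highest score for this use case.

    Ties are broken in favor of the cheaper model. A use case that no
    model lists scores 0.0 everywhere, so the cheapest model is chosen.
    """
    if not models:
        raise ValueError("no models registered")
    return max(
        models,
        key=lambda m: (m.scores.get(use_case, 0.0), -m.cost_per_1k_tokens),
    )

# Invented registry: one large general model and two small specialists.
MODELS = [
    ModelProfile("large-general", 10.0, {"legal": 0.9, "finance": 0.8, "chat": 0.9}),
    ModelProfile("small-finance", 1.0, {"finance": 0.92, "chat": 0.6}),
    ModelProfile("small-chat", 0.5, {"chat": 0.9}),
]

if __name__ == "__main__":
    for case in ("finance", "legal", "chat"):
        print(case, "->", route(case, MODELS).name)
```

In this toy registry the finance prompt goes to the small specialist, the legal prompt goes to the large general model, and the chat prompt goes to the cheaper of the two equally scored models, which shows how routing can cut cost without giving up quality where it matters.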

Finally, thanks to Menlo Ventures for their excellent AI writing and research.

Original article: https://menlovc.com/perspective/the-modern-ai-stack-design-principles-for-the-future-of-enterprise-ai-architectures/
